Python Bytes: #160 Your JSON shall be streamed

Steve Dower: What makes Python a great language?

I know I’m far from the only person who has opined about this topic, but figured I’d take my turn.

A while ago I hinted on Twitter that I have Thoughts(tm) about the future of Python, and while this is not going to be that post, this is going to be important background for when I do share those thoughts.

If you came expecting a well researched article full of citations to peer-reviewed literature, you came to the wrong place. Similarly if you were hoping for unbiased and objective analysis. I’m not even going to link to external sources for definitions. This is literally just me on a soap box, and you can take it or leave it.

I’m also deliberately not talking about CPython the runtime, pip the package manager, venv the %PATH% manipulator, or PyPI the ecosystem. This post is about the Python language.

My hope is that you will get some ideas for thinking about why some programming languages feel better than others, even if you don’t agree that Python feels better than most.

Need To Know

What makes Python a great language? It gets the need to know balance right.

When I use the term “need to know”, I think of how the military uses the term. For many, “need to know” evokes thoughts of power imbalances, secrecy, and dominance-for-the-sake-of-dominance. But even in cases that may look like or actually be as bad as these, the intent is to achieve focus.

In a military organisation, every individual needs to make frequent life-or-death choices. The more time you spend making each choice, the more likely you are choosing death (specifically, your own). Having to factor in the full range of ethical factors into every decision is very inefficient.

Since no army wants to lose their own men, they delegate decision-making up through a series of ranks. By the time individuals are in the field, the biggest decisions are already made, and the soldier has a very narrow scope to make their own decisions. They can focus on exactly what they need to know, trusting that their superiors have taken into account anything else that they don’t need to know.

Software libraries and abstractions are fundamentally the same. Another developer has taken the broader context into account, and has provided you – the end-developer – with only what you need to know. You get to focus on your work, trusting that the rest has been taken care of.

Memory management is probably the easiest example. Languages that decide how memory management is going to work (such as through a garbage collector) have taken that decision for you. You don’t need to know. You get to use the time you would have been thinking about deallocation to focus on your actual task.

Does “need to know” ever fail? Of course it does. Sometimes you need more context in order to make a good decision. In a military organisation, there are conventions for requesting more information, ways to get promoted into positions with more context (and more complex decisions), and systems for refusing to follow orders (which mostly don’t turn out so well for the person refusing, but hey, there’s a system).

In software, “need to know” breaks down when you need some functionality that isn’t explicitly exposed or documented, when you need to debug library or runtime code, or just deal with something not behaving as it claims it should. When these situations arise, not being able to incrementally increase what you know becomes a serious blockage.

A good balance of “need to know” will actively help you focus on getting your job done, while also providing the escape hatches necessary to handle the times you need to know more. Python gets this balance right.

Python’s Need To Know levels

There are many levels of what you “need to know” to use Python.

At the lowest level, there’s the basic syntax and most trivial semantics of assignment, attributes and function calls. These concepts, along with your project-specific context, are totally sufficient to write highly effective code.

The example to the right (source) generates a histogram from a random distribution. By my count, there are two distinct words in that are not specific to the task at hand (“import” and “as”), and the places they are used are essentially boiler-plate – they were likely copied by the author, rather than created by the author. Everything else in the sample code relates to specifying the random distribution and creating the plot.

The most complex technical concept used is tuple unpacking, but all the user needs to know here is that they’re getting multiple return values. The fact that there’s really only a single return value and that the unpacking is performed by the assignment isn’t necessary or useful knowledge.

Find a friend who’s not a developer and try this experiment on them: show them

x, y = get_points()and explain how it works, without ever mentioning that it’s returning multiple values. Then point out thatget_points()actually just returns two values, andx, y =is how you give them names. Turns out, they won’t need to know how it works, just what it does.

As you add introduce new functionality, you will see the same pattern repeated. for x in y: can (and should) be explained without mentioning iterators. open() can (and should) be explained without mentioning the io module. Class instantiation can (and should) be explained without mentioning __call__. And so on.

Python very effectively hides unnecessary details from those who just want to use it.

Think about basically any other language you’ve used. How many concepts do you need to express the example above?

Basically every other language is going to distinguish between declaring a variable and assigning a variable. Many are going to require nominal typing, where you need to know about types before you can do assignment. I can’t think of many languages with fewer than the three concepts Python requires to generate a histogram from a random distribution with certain parameters (while also being readable from top to bottom – yes, I thought of LISP).

When Need To Know breaks down

But when need to know starts breaking down, Python has some of the best escape hatches in the entire software industry.

For starters, there are no truly private members. All the code you use in your Python program belongs to you. You can read everything, mutate everything, wrap everything, proxy everything, and nobody can stop you. Because it’s your program. Duck typing makes a heroic appearance here, enabling new ways to overcome limiting abstractions that would be fundamentally impossible in other languages.

Should you make a habit of doing this? Of course not. You’re using libraries for a reason – to help you focus on your own code by delegating “need to know” decisions to someone else. If you are going to regularly question and ignore their decisions, you completely spoil any advantage you may have received. But Python also allows you to rely on someone else’s code without becoming a hostage to their choices.

Today, the Python ecosystem is almost entirely publicly-visible code. You don’t need to know how it works, but you have the option to find out. And you can find out by following the same patterns that you’re familiar with, rather than having to learn completely new skills. Reading Python code, or interactively inspecting live object graphs, are exactly what you were doing with your own code.

Compare Python to languages that tend towards sharing compiled, minified, packaged or obfuscated code, and you’ll have a very different experience figuring out how things really (don’t) work.

Compare Python to languages that emphasize privacy, information hiding, encapsulation and nominal typing, and you’ll have a very different experience overcoming a broken or limiting abstraction.

Features you don’t Need To Know about

In the earlier plot example, you didn’t need to know about anything beyond assignment, attributes and function calls. How much more do you need to know to use Python? And who needs to know about these extra features?

As it turns out, there are millions of Python developers who don’t need much more than assignment, attributes and function calls. Those of us in the 1% of the Python community who use Twitter and mailing lists like to talk endlessly about incredibly advanced features, such as assignment expressions and position-only parameters, but the reality is that most Python users never need these and should never have to care.

When I teach introductory Python programming, my order of topics is roughly assignment, arithmetic, function calls (with imports thrown in to get to the interesting ones), built-in collection types, for loops, if statements, exception handling, and maybe some simple function definitions and decorators to wrap up. That should be enough for 90% of Python careers (syntactically – learning which functions to call and when is considerably more effort than learning the language).

The next level up is where things get interesting. Given the baseline knowledge above, the Python’s next level allows 10% of developers to provide the 90% with significantly more functionality without changing what they need to know about the language. Those awesome libraries are written by people with deeper technical knowledge, but (can/should) expose only the simplest syntactic elements.

When I adopt classes, operator overloading, generators, custom collection types, type checking, and more, Python does not force my users to adopt them as well. When I expand my focus to include more complexity, I get to make decisions that preserve my users’ need to know.

For example, my users know that calling something returns a value, and that returned values have attributes or methods. Whether the callable is a function or a class is irrelevant to them in Python. But compare with most other languages, where they would have to change their syntax if I changed a function into a class.

When I change a function to return a custom mapping type rather than a standard dictionary, it is irrelevant to them. In other languages, the return type is also specified explicitly in my user’s code, and so even a compatible change might force them outside of what they really need to know.

If I return a number-like object rather than a built-in integer, my users don’t need to know. Most languages don’t have any way to replace primitive types, but Python provides all the functionality I need to create a truly number-like object.

Clearly the complexity ramps up quickly, even at this level. But unlike most other languages, complexity does not travel down. Just because some complexity is used within your codebase doesn’t mean you will be forced into using it everywhere throughout the codebase.

The next level adds even more complexity, but its use also remains hidden behind normal syntax. Metaclasses, object factories, decorator implementations, slots, __getattribute__ and more allow a developer to fundamentally rewrite how the language works. There’s maybe 1% of Python developers who ever need to be aware of these features, and fewer still who should use them, but the enabling power is unique among languages that also have such an approachable lowest level.

Even with this ridiculous level of customisation, the same need to know principles apply, and in a way that only Python can do it. Enums and data classes in Python are based on these features, but the knowledge required to use them is not the same as the knowledge required to create them. Users get to focus on what they’re doing, assisted by trusting someone else to have made the right decision about what they need to know.

Summary and foreshadowing

People often cite Python’s ecosystem as the main reason for its popularity. Others claim the language’s simplicity or expressiveness is the primary reason.

I would argue that the Python language has an incredibly well-balanced sense of what developers need to know. Better than any other language I’ve used.

Most developers get to write incredibly functional and focused code with just a few syntax constructs. Some developers produce reusable functionality that is accessible through simple syntax. A few developers manage incredible complexity to provide powerful new semantics without leaving the language.

By actively helping library developers write complex code that is not complex to use, Python has been able to build an amazing ecosystem. And that amazing ecosystem is driving the popularity of the language.

But does our ecosystem have the longevity to maintain the language…? Does the Python language have the qualities to survive a changing ecosystem…? Will popular libraries continue to drive the popularity of the language, or does something need to change…?

(Contact me on Twitter for discussion.)

Kushal Das: Updates on Unoon in December 2019

This Saturday evening, I sat with Unoon project after a few weeks, I was continuously running it, but, did not resume the development effort. This time Bhavin also joined me. Together, we fixed a location of the whitelist files issue, and unoon now also has a database (using SQLite), which stores all the historical process and connection information. In the future, we will provide some way to query this information.

As usual, we learned many new things about different Linux processes while doing this development. One of the important ones is about running podman process, and how the user id maps to the real system. Bhavin added a patch that fixes a previously known issue of crashing due to missing user name. Now, unoon shows the real user ID when it can not find the username in the /etc/passwd file.

You can read about Unoon more in my previous blog post.

Kushal Das: Highest used usernames in break-in attempts to my servers 2019

A few days ago, I wrote about different IP addresses trying to break into my servers. Today, I looked into another server to find the frequently used user names used in the SSH attempts.

- admin 36228

- test 19249

- user 17164

- ubuntu 16233

- postgres 16217

- oracle 9738

- git 8118

- ftpuser 7028

- teamspea 6560

- mysql 5650

- nagios 5599

- pi 5239

- deploy 5167

- hadoop 5011

- guest 4798

- dev 4468

- ts3 4277

- minecraf 4145

- support 3940

- ubnt 3549

- debian 3515

- demo 3489

- tomcat 3435

- vagrant 3042

- zabbix 3033

- jenkins 3027

- develope 2941

- sinusbot 2914

- user1 2898

- administ 2747

- bot 2590

- testuser 2459

- ts 2403

- apache 2391

- www 2329

- default 2293

- odoo 2168

- test2 2161

- backup 2133

- steam 2129

- 1234 2026

- server 1890

- www-data 1853

- web 1850

- centos 1796

- vnc 1783

- csgoserv 1715

- prueba 1677

- test1 1648

- a 1581

- student 1568

- csgo 1524

- weblogic 1522

- ts3bot 1521

- mc 1434

- gpadmin 1427

- redhat 1378

- alex 1375

- system 1362

- manager 1359

I never knew that admin is such important user name for Linux servers, I thought I will see root there. Also, why alex? I can under the reason behind pi. If you want to find out the similar details, you can use the following command.

last -f /var/log/btmp

Programiz: Python Dictionary Comprehension

Steve Dower: What makes Python a great language?

I know I’m far from the only person who has opined about this topic, but figured I’d take my turn.

A while ago I hinted on Twitter that I have Thoughts(tm) about the future of Python, and while this is not going to be that post, this is going to be important background for when I do share those thoughts.

If you came expecting a well researched article full of citations to peer-reviewed literature, you came to the wrong place. Similarly if you were hoping for unbiased and objective analysis. I’m not even going to link to external sources for definitions. This is literally just me on a soap box, and you can take it or leave it.

I’m also deliberately not talking about CPython the runtime, pip the package manager, venv the %PATH% manipulator, or PyPI the ecosystem. This post is about the Python language.

My hope is that you will get some ideas for thinking about why some programming languages feel better than others, even if you don’t agree that Python feels better than most.

Need To Know

What makes Python a great language? It gets the need to know balance right.

When I use the term “need to know”, I think of how the military uses the term. For many, “need to know” evokes thoughts of power imbalances, secrecy, and dominance-for-the-sake-of-dominance. But even in cases that may look like or actually be as bad as these, the intent is to achieve focus.

In a military organisation, every individual needs to make frequent life-or-death choices. The more time you spend making each choice, the more likely you are choosing death (specifically, your own). Having to factor in the full range of ethical factors into every decision is very inefficient.

Since no army wants to lose their own men, they delegate decision-making up through a series of ranks. By the time individuals are in the field, the biggest decisions are already made, and the soldier has a very narrow scope to make their own decisions. They can focus on exactly what they need to know, trusting that their superiors have taken into account anything else that they don’t need to know.

Software libraries and abstractions are fundamentally the same. Another developer has taken the broader context into account, and has provided you – the end-developer – with only what you need to know. You get to focus on your work, trusting that the rest has been taken care of.

Memory management is probably the easiest example. Languages that decide how memory management is going to work (such as through a garbage collector) have taken that decision for you. You don’t need to know. You get to use the time you would have been thinking about deallocation to focus on your actual task.

Does “need to know” ever fail? Of course it does. Sometimes you need more context in order to make a good decision. In a military organisation, there are conventions for requesting more information, ways to get promoted into positions with more context (and more complex decisions), and systems for refusing to follow orders (which mostly don’t turn out so well for the person refusing, but hey, there’s a system).

In software, “need to know” breaks down when you need some functionality that isn’t explicitly exposed or documented, when you need to debug library or runtime code, or just deal with something not behaving as it claims it should. When these situations arise, not being able to incrementally increase what you know becomes a serious blockage.

A good balance of “need to know” will actively help you focus on getting your job done, while also providing the escape hatches necessary to handle the times you need to know more. Python gets this balance right.

Python’s Need To Know levels

There are many levels of what you “need to know” to use Python.

At the lowest level, there’s the basic syntax and most trivial semantics of assignment, attributes and function calls. These concepts, along with your project-specific context, are totally sufficient to write highly effective code.

The example to the right (source) generates a histogram from a random distribution. By my count, there are two distinct words in that are not specific to the task at hand (“import” and “as”), and the places they are used are essentially boiler-plate – they were likely copied by the author, rather than created by the author. Everything else in the sample code relates to specifying the random distribution and creating the plot.

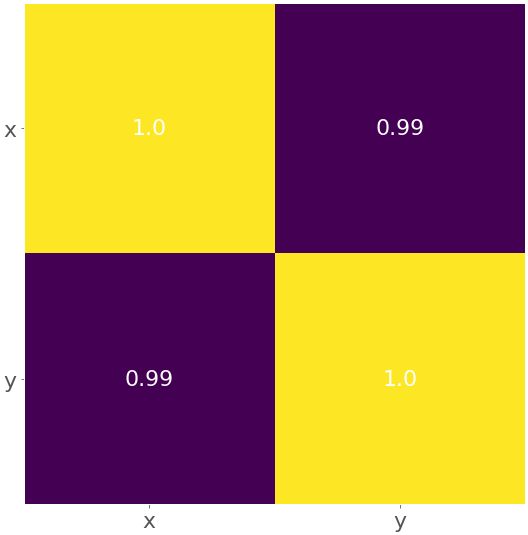

The most complex technical concept used is tuple unpacking, but all the user needs to know here is that they’re getting multiple return values. The fact that there’s really only a single return value and that the unpacking is performed by the assignment isn’t necessary or useful knowledge.

Find a friend who’s not a developer and try this experiment on them: show them

x, y = get_points()and explain how it works, without ever mentioning that it’s returning multiple values. Then point out thatget_points()actually just returns two values, andx, y =is how you give them names. Turns out, they won’t need to know how it works, just what it does.

As you add introduce new functionality, you will see the same pattern repeated. for x in y: can (and should) be explained without mentioning iterators. open() can (and should) be explained without mentioning the io module. Class instantiation can (and should) be explained without mentioning __call__. And so on.

Python very effectively hides unnecessary details from those who just want to use it.

Think about basically any other language you’ve used. How many concepts do you need to express the example above?

Basically every other language is going to distinguish between declaring a variable and assigning a variable. Many are going to require nominal typing, where you need to know about types before you can do assignment. I can’t think of many languages with fewer than the three concepts Python requires to generate a histogram from a random distribution with certain parameters (while also being readable from top to bottom – yes, I thought of LISP).

When Need To Know breaks down

But when need to know starts breaking down, Python has some of the best escape hatches in the entire software industry.

For starters, there are no truly private members. All the code you use in your Python program belongs to you. You can read everything, mutate everything, wrap everything, proxy everything, and nobody can stop you. Because it’s your program. Duck typing makes a heroic appearance here, enabling new ways to overcome limiting abstractions that would be fundamentally impossible in other languages.

Should you make a habit of doing this? Of course not. You’re using libraries for a reason – to help you focus on your own code by delegating “need to know” decisions to someone else. If you are going to regularly question and ignore their decisions, you completely spoil any advantage you may have received. But Python also allows you to rely on someone else’s code without becoming a hostage to their choices.

Today, the Python ecosystem is almost entirely publicly-visible code. You don’t need to know how it works, but you have the option to find out. And you can find out by following the same patterns that you’re familiar with, rather than having to learn completely new skills. Reading Python code, or interactively inspecting live object graphs, are exactly what you were doing with your own code.

Compare Python to languages that tend towards sharing compiled, minified, packaged or obfuscated code, and you’ll have a very different experience figuring out how things really (don’t) work.

Compare Python to languages that emphasize privacy, information hiding, encapsulation and nominal typing, and you’ll have a very different experience overcoming a broken or limiting abstraction.

Features you don’t Need To Know about

In the earlier plot example, you didn’t need to know about anything beyond assignment, attributes and function calls. How much more do you need to know to use Python? And who needs to know about these extra features?

As it turns out, there are millions of Python developers who don’t need much more than assignment, attributes and function calls. Those of us in the 1% of the Python community who use Twitter and mailing lists like to talk endlessly about incredibly advanced features, such as assignment expressions and position-only parameters, but the reality is that most Python users never need these and should never have to care.

When I teach introductory Python programming, my order of topics is roughly assignment, arithmetic, function calls (with imports thrown in to get to the interesting ones), built-in collection types, for loops, if statements, exception handling, and maybe some simple function definitions and decorators to wrap up. That should be enough for 90% of Python careers (syntactically – learning which functions to call and when is considerably more effort than learning the language).

The next level up is where things get interesting. Given the baseline knowledge above, the Python’s next level allows 10% of developers to provide the 90% with significantly more functionality without changing what they need to know about the language. Those awesome libraries are written by people with deeper technical knowledge, but (can/should) expose only the simplest syntactic elements.

When I adopt classes, operator overloading, generators, custom collection types, type checking, and more, Python does not force my users to adopt them as well. When I expand my focus to include more complexity, I get to make decisions that preserve my users’ need to know.

For example, my users know that calling something returns a value, and that returned values have attributes or methods. Whether the callable is a function or a class is irrelevant to them in Python. But compare with most other languages, where they would have to change their syntax if I changed a function into a class.

When I change a function to return a custom mapping type rather than a standard dictionary, it is irrelevant to them. In other languages, the return type is also specified explicitly in my user’s code, and so even a compatible change might force them outside of what they really need to know.

If I return a number-like object rather than a built-in integer, my users don’t need to know. Most languages don’t have any way to replace primitive types, but Python provides all the functionality I need to create a truly number-like object.

Clearly the complexity ramps up quickly, even at this level. But unlike most other languages, complexity does not travel down. Just because some complexity is used within your codebase doesn’t mean you will be forced into using it everywhere throughout the codebase.

The next level adds even more complexity, but its use also remains hidden behind normal syntax. Metaclasses, object factories, decorator implementations, slots, __getattribute__ and more allow a developer to fundamentally rewrite how the language works. There’s maybe 1% of Python developers who ever need to be aware of these features, and fewer still who should use them, but the enabling power is unique among languages that also have such an approachable lowest level.

Even with this ridiculous level of customisation, the same need to know principles apply, and in a way that only Python can do it. Enums and data classes in Python are based on these features, but the knowledge required to use them is not the same as the knowledge required to create them. Users get to focus on what they’re doing, assisted by trusting someone else to have made the right decision about what they need to know.

Summary and foreshadowing

People often cite Python’s ecosystem as the main reason for its popularity. Others claim the language’s simplicity or expressiveness is the primary reason.

I would argue that the Python language has an incredibly well-balanced sense of what developers need to know. Better than any other language I’ve used.

Most developers get to write incredibly functional and focused code with just a few syntax constructs. Some developers produce reusable functionality that is accessible through simple syntax. A few developers manage incredible complexity to provide powerful new semantics without leaving the language.

By actively helping library developers write complex code that is not complex to use, Python has been able to build an amazing ecosystem. And that amazing ecosystem is driving the popularity of the language.

But does our ecosystem have the longevity to maintain the language…? Does the Python language have the qualities to survive a changing ecosystem…? Will popular libraries continue to drive the popularity of the language, or does something need to change…?

(Contact me on Twitter for discussion.)

Peter Bengtsson: A Python and Preact app deployed on Heroku

Heroku is great but it's sometimes painful when your app isn't just in one single language. What I have is a project where the backend is Python (Django) and the frontend is JavaScript (Preact). The folder structure looks like this:

/

- README.md

- manage.py

- requirements.txt

- my_django_app/

- settings.py

- asgi.py

- api/

- urls.py

- views.py

- frontend/

- package.json

- yarn.lock

- preact.config.js

- build/

...

- src/

...A bunch of things omitted for brevity but people familiar with Django and preact-cli/create-create-app should be familiar.

The point is that the root is a Python app and the front-end is exclusively inside a sub folder.

When you do local development, you start two servers:

./manage.py runserver- startshttp://localhost:8000cd frontend && yarn start- startshttp://localhost:3000

The latter is what you open in your browser. That preact app will do things like:

constresponse=awaitfetch('/api/search');and, in preact.config.js I have this:

exportdefault(config,env,helpers)=>{if(config.devServer){config.devServer.proxy=[{path:"/api/**",target:"http://localhost:8000"}];}};...which is hopefully self-explanatory. So, calls like GET http://localhost:3000/api/search actually goes to http://localhost:8000/api/search.

That's when doing development. The interesting thing is going into production.

Before we get into Heroku, let's first "merge" the two systems into one and the trick used is Whitenoise. Basically, Django's web server will be responsibly not only for things like /api/search but also static assets such as / --> frontend/build/index.html and /bundle.17ae4.js --> frontend/build/bundle.17ae4.js.

This is basically all you need in settings.py to make that happen:

MIDDLEWARE=["django.middleware.security.SecurityMiddleware","whitenoise.middleware.WhiteNoiseMiddleware",...]WHITENOISE_INDEX_FILE=TrueSTATIC_URL="/"STATIC_ROOT=BASE_DIR/"frontend"/"build"However, this isn't quite enough because the preact app uses preact-router which uses pushState() and other code-splitting magic so you might have a URL, that users see, like this: https://myapp.example.com/that/thing/special and there's nothing about that in any of the Django urls.py files. Nor is there any file called frontend/build/that/thing/special/index.html or something like that.

So for URLs like that, we have to take a gamble on the Django side and basically hope that the preact-router config knows how to deal with it. So, to make that happen with Whitenoise we need to write a custom middleware that looks like this:

fromwhitenoise.middlewareimportWhiteNoiseMiddlewareclassCustomWhiteNoiseMiddleware(WhiteNoiseMiddleware):defprocess_request(self,request):ifself.autorefresh:static_file=self.find_file(request.path_info)else:static_file=self.files.get(request.path_info)# These two lines is the magic.# Basically, the URL didn't lead to a file (e.g. `/manifest.json`)# it's either a API path or it's a custom browser path that only# makes sense within preact-router. If that's the case, we just don't# know but we'll give the client-side preact-router code the benefit# of the doubt and let it through.ifnotstatic_fileandnotrequest.path_info.startswith("/api"):static_file=self.files.get("/")ifstatic_fileisnotNone:returnself.serve(static_file,request)And in settings.py this change:

MIDDLEWARE = [

"django.middleware.security.SecurityMiddleware",

- "whitenoise.middleware.WhiteNoiseMiddleware",+ "my_django_app.middleware.CustomWhiteNoiseMiddleware",

...

]

Now, all traffic goes through Django. Regular Django view functions, static assets, and everything else fall back to frontend/build/index.html.

Heroku

Heroku tries to make everything so simple for you. You basically, create the app (via the cli or the Heroku web app) and when you're ready you just do git push heroku master. However that won't be enough because there's more to this than Python.

Unfortunately, I didn't take notes of my hair-pulling excruciating journey of trying to add buildpacks and hacks and Procfiles and custom buildpacks. Nothing seemed to work. Perhaps the answer was somewhere in this issue: "Support running an app from a subdirectory" but I just couldn't figure it out. I still find buildpacks confusing when it's beyond Hello World. Also, I didn't want to run Node as a service, I just wanted it as part of the "build process".

Docker to the rescue

Finally I get a chance to try "Deploying with Docker" in Heroku which is a relatively new feature. And the only thing that scared me was that now I need to write a heroku.yml file which was confusing because all I had was a Dockerfile. We'll get back to that in a minute!

So here's how I made a Dockerfile that mixes Python and Node:

FROMnode:12asfrontendCOPY . /app

WORKDIR /appRUNcd frontend && yarn install && yarn build

FROMpython:3.8-slimWORKDIR /appRUN groupadd --gid 10001 app && useradd -g app --uid 10001 --shell /usr/sbin/nologin app

RUN chown app:app /tmp

RUN apt-get update &&\

apt-get upgrade -y &&\

apt-get install -y --no-install-recommends \

gcc apt-transport-https python-dev

# Gotta try moving this to poetry instead!COPY ./requirements.txt /app/requirements.txt

RUN pip install --upgrade --no-cache-dir -r requirements.txt

COPY . /app

COPY --from=frontend /app/frontend/build /app/frontend/build

USER appENVPORT=8000EXPOSE $PORTCMD uvicorn gitbusy.asgi:application --host 0.0.0.0 --port $PORTIf you're not familiar with it, the critical trick is on the first line where it builds some Node with as frontend. That gives me a thing I can then copy from into the Python image with COPY --from=frontend /app/frontend/build /app/frontend/build.

Now, at the very end, it starts a uvicorn server with all the static .js, index.html, and favicon.ico etc. available to uvicorn which ultimately runs whitenoise.

To run and build:

docker build . -t my_app docker run -t -i --rm --env-file .env -p 8000:8000 my_app

Now, opening http://localhost:8000/ is a production grade app that mixes Python (runtime) and JavaScript (static).

Heroku + Docker

Heroku says to create a heroku.yml file and that makes sense but what didn't make sense is why I would add cmd line in there when it's already in the Dockerfile. The solution is simple: omit it. Here's what my final heroku.yml file looks like:

build:docker:web:DockerfileCheck in the heroku.yml file and git push heroku master and voila, it works!

To see a complete demo of all of this check out https://github.com/peterbe/gitbusy and https://gitbusy.herokuapp.com/

Weekly Python StackOverflow Report: (ccvi) stackoverflow python report

Between brackets: [question score / answers count]

Build date: 2019-12-14 12:53:35 GMT

- Python __getitem__ and in operator result in strange behavior - [22/1]

- What is the reason for difference between integer division and float to int conversion in python? - [18/1]

- Match multiple(3+) occurrences of each character - [7/5]

- Pandas Dataframe: Multiplying Two Columns - [7/3]

- What does !r mean in Python? - [7/1]

- python how to find the number of days in each month from Dec 2019 and forward between two date columns - [6/4]

- Itertools zip_longest with first item of each sub-list as padding values in stead of None by default - [6/4]

- Writing more than 50 millions from Pyspark df to PostgresSQL, best efficient approach - [6/0]

- How to merge and groupby between seperate dataframes - [5/3]

- Setting the index after merging with pandas? - [5/3]

Catalin George Festila: Python 3.7.5 : Django admin shell by Grzegorz Tężycki.

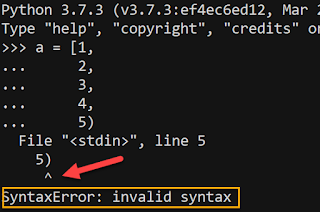

Andre Roberge: A Tiny Python Exception Oddity

- The inconsistent behaviour is so tiny, that I doubt most people would notice - including myself before working on Friendly-traceback.

- This is SyntaxError that is not picked up by flake8; however, pylint does pick it up.

- By Python, I mean CPython. After trying to figure out why this case was different, I downloaded Pypy and saw that Pypy did not show the odd behaviour.

- To understand the origin of this different behaviour, one needs to look at some obscure inner parts of the CPython interpreter.

- This would likely going to be found totally irrelevant by 99.999% of Python programmers. If you are not the type of person who is annoyed by tiny oddities, you probably do not want to read any further.

Normal behaviour

Odd case

Why does it matter to me?

Why is this case handled differently by CPython?

Conclusion

Anwesha Das: Rootconf Hyderbad, 2019

What is Rootconf?

Rootconf is the conference on sysadmins, DevOps, SRE, Network engineers. Rootconf started its journey in 2012 in Bangalore, 2019 was the 7th edition of Rootconf. In these years, through all the Rootconfs, there is a community that has developed around Rootconf. Now people do come to attend Rootconf not just to attend the conference but also to attend friends and peers to discuss projects and ideas.

Need for more Rootconf

Over all these years, we have witnessed changes in the network, infrastructure, and security threats. We have designed Rootconf (in all these years), keeping in mind the changing needs of the community. Lately, we have realized that the needs of the community based on their geographic locations/ cities. Like in Pune, there is a considerable demand for sessions that deals with small size infrastructure suited for startups and SMEs as there is a growing startup industry there. In Delhi, there is a demand for discussion around data centers, network designs, and so on. And in Hyderabad, there is a want for solutions around large scale infrastructure. The Bangalore event did not suffice to solve all these needs. So more the merrier, we decided to have more than one Rootconf a year.

Rootconf Pune was the first of this 'outstation Rootconf journey'. The next was Rootconf Hyderabad. It was the first event for which I was organizing the editorial, community, and all by myself.

I joined HasGeek as Community Manager and Editorial co-ordinator. After my Rootconf, Bangalore Zainab fixed a goal for me.

Z : 'Anwesha, I want to organize Rootconf Hyderabad all by yourself, you must be doing with no or minimum help from me.'

A: "Ummm hmmm ooops"

Z: 'Do not worry, I will be there to guide you. We will have our test run with you in Pune. So buck up, girl.'

Rootconf Hyderabad, the conference

The preparation for Rootconf Hyderabad started with them. After months of the editorial process - scouting for the proposals, reviewing them, having several rehearsals, and after passing the iron test in Pune, I reached Hyderabad to join my colleague Mak. Mak runs the sales at Hasgeek. With the camera, we had our excellent AV captain Amogh. So I was utterly secured and not worried about those two aspects.

A day before the conference Damini, our emcee, and I chalked out the plans for navigating the schedule and coordinating the conference. We met the volunteers at the venue after a humongous lunch with Hyderabadi deliciously (honest confession: food is the primary reason why I love to attend the conference in Hyderabad). We have several call volunteers in which our volunteer coordinator Jyotsna briefed them the duties. But it is always essential to make the volunteers introduced with the ground reality. We had a meet up at Thought Works.

The day of the conference starts early, much too early for the organizers and volunteers. Rootconf Hyderabad was no different. We opened the registration, and people started flocking in the auditorium. I opened the conference by addressing -

- What is Rootconf?

- The journey of Rootconf.

- Why we need several editions of Rootconf in different geographical locations all across India?

Then our emcee Damini took over. The first half of our schedule designed keeping the problems of large scale infrastructure in mind, like observability, maintainability, scalability, performance, taming the large systems, and networking issues. Piyush started our first speaker gave a talk on Observability and control theory. Next was Flipkart's journey of "Fast object distribution using P2P" by Ankur Jain. After a quick beverage break, Anubhav Mishra shared his take on "Taming infrastructure workflow at scale", the story of Hashicorp Followed by Tasdik Rahman and his story of "Achieving repeatable, extensible and self serve infrastructure" at Gojek."

The next half of the day planned to address the issues shared with infrastructure despite size or complexity. Like - Security, DevOpSec, scaling, and of course, microservices (an infrastructure conference seems incomplete without the discussion around monolith to microservices). Our very own security expert Lavakumar started it with "Deploying and managing CSP: the browser-side firewall", describing the security complexities post mege cart attack days. Jambunathan shared the tale of "Designing microservices around your data design” . For the last talk of the day, we had Gaurav Kamboj. He told us what happens with the system engineers at Hotstar when Virat Kohli is batting on his 90s, in "Scaling hotstar.com for 25 million concurrent viewers"

Birds of a Feather (BOF) session has always been a favorite at Rootconf. These non-recorded sessions give the participants a chance, to be frank. We have facilitators to progress the discussion and not presenters. While we had talks going on in the main audi, there are dedicated BOF area where we had sessions on

- AI Ops facilitated by Jayesh Bapu Ahire and Jambunathan Valady,

- Infrastructure as Code facilitated by Anubhav Mishra, Tasdik Rahman

- Observability by Gaurav.

This was the first time gauging the popularity of the BOFs we tried something new. We had a BOF session planned at the primary audi. It was on "Doing DevSecOps in your organization," aided by Lava and Hari. It was one session in which our emcee Damini had a difficult time to end. People had so many stories to share questions to ask, but there was no time. I also got some angry looks (which I do not mind at all) :).

In India, I have noticed that most of the conferences fail to have good/up to the mark flash talks. Invariably they have community information, conference, or meetup notifications (the writer is guilty of doing it). So I proposed that why can not we accept proposals for flash talks as well. Half of them are pre-selected and rest selected on the spot. Zainab agreed to it. Now we are following this rule since Rootconf Pune, and the quality of the flash talks has improved a lot. We had some fantastic flash talks. You can check it for yourself at https://www.youtube.com/watch?v=AlREWUAEMVk.

Thank you

Organizing a conference is not a person's job. In an extensive infrastructure, it is the small tools, microservices that keeps an extensive system working. Consider conference as a system, tasks as microservices. It requires each task to be perfect for the conference to be successful and flawless. And I am blessed to have an amazing team. Each amazing volunteers, the Null Hyderabad, Mozilla, AWS community, our emcee Damini, Hall manager Geetanjali, Speakers, Sponsors, attendees, and my team HasGeek. Last but not least, thank you, Zainab, for trusting me, being by my side, and not letting me fall.

The experience

Organizing a conference has been the journey of estrogen and adrenaline overflow for me. Be it getting into nightmares the excitement of each ticket sales, the long chats with the reviewers about talks, BOFs, discussion with the communities what they want from Rootconf, jitters before the conference starts or tweets, a blog post from the people that they enjoyed the conference was useful for them. It was an exciting, scary, happy, and satisfying journey for me. And guess what, my life continues to be so as Rootconf is ready with it's Delhi edition. I hope to meet you there.

Catalin George Festila: Python 3.7.5 : Simple intro in CSRF.

S. Lott: Functional programming design pattern: Nested Iterators == Flattening

Consider this data collection process.

for h in some_high_level_collection(arg1):

for l in h.some_low_level_collection(arg2):

if some_filter(l):

logger.info("Processing %s %s", h, l)

some_function(h, l)

Here's something that's a little easier to work with:

def h_l_iter(arg1, arg2):

for h in some_high_level_collection(arg1):

for l in h.some_low_level_collection(arg2):

if some_filter(l):

logger.info("Processing %s %s", h, l)

yield h, l

itertools.starmap(some_function, h_l_iter(arg1, arg2))

I think the pattern here is a kind of "Flattened Map" transformation. The initial design, with nested loops wrapping a process wasn't a good plan. A better plan is to think of the nested loops as a way to flatten the two tiers of the hierarchy into a single iterator. Then a mapping can be applied to process each item from that flat iterator.

Extracting the Filter

We can now tease apart the nested loops to expose the filter. In the version above, the body of the h_l_iter() function binds log-writing with the yield. If we take those two apart, we gain the flexibility of being able to change the filter (or the logging) without an awfully complex rewrite.T = TypeVar('T')

def logging_iter(source: Iterable[T]) -> Iterator[T]:

for item in source:

logger.info("Processing %s", item)

yield item

def h_l_iter(arg1, arg2):

for h in some_high_level_collection(arg1):

for l in h.some_low_level_collection(arg2):

yield h, l

raw_data = h_l_iter(arg1, arg2)

filtered_subset = logging_iter(filter(some_filter, raw_data))

itertools.starmap(some_function, filtered_subset)

Now, I can introduce various forms of multiprocessing to improve concurrency.

This transformed a hard-wired set of nest loops, if, and function evaluation into a "Flattener" that can be combined with off-the shelf filtering and mapping functions.

I've snuck in a kind of "tee" operation that writes an iterable sequence to a log. This can be injected at any point in the processing.

Logging the entire "item" value isn't really a great idea. Another mapping is required to create sensible log messages from each item. I've left that out to keep this exposition more focused.

Full Flattening

The h_l_iter() function is actually a generator expression. A function isn't needed.h_l_iter = (

(h, l)

for h in some_high_level_collection(arg1)

for l in h.some_low_level_collection(arg2)

)

This simplification doesn't add much value, but it seems to be general truth. In Python, it's a small change in syntax and therefore, an easy optimization to make.

What About The File System?

def recursive_walk(path: Path) -> Iterator[Path]:

for item in path.glob():

if item.is_file():

yield item

elif item.is_dir():

yield from recursive_walk(item)

tl;dr

The too-long version of this is:This pattern works for simple, flat items, nested structures, and even recursively-defined trees. It introduces flexibility with no real cost.

The other pattern in play is:

These felt like blinding revelations to me.

Codementor: Function-Based Views vs Class-Based Views in Django

Wesley Chun: Authorized Google API access from Python (part 2 of 2)

NOTE: You can also watch a video walkthrough of the common code covered in this blogpost here.

UPDATE (Apr 2019): In order to have a closer relationship between the GCP and G Suite worlds of Google Cloud, all G Suite Python code samples have been updated, replacing some of the older G Suite API client libraries with their equivalents from GCP. NOTE: using the newer libraries requires more initial code/effort from the developer thus will seem "less Pythonic." However, we will leave the code sample here with the original client libraries (deprecated but not shutdown yet) to be consistent with the video.

UPDATE (Aug 2016): The code has been modernized to use

oauth2client.tools.run_flow() instead of the deprecated oauth2client.tools.run_flow(). You can read more about that change here.UPDATE (Jun 2016): Updated to Python 2.7 & 3.3+ and Drive API v3.

Introduction

In this final installment of a (currently) two-part series introducing Python developers to building on Google APIs, we'll extend from the simple API example from the first post (part 1) just over a month ago. Those first snippets showed some skeleton code and a short real working sample that demonstrate accessing a public (Google) API with an API key (that queried public Google+ posts). An API key however, does not grant applications access to authorized data.Authorized data, including user information such as personal files on Google Drive and YouTube playlists, require additional security steps before access is granted. Sharing of and hardcoding credentials such as usernames and passwords is not only insecure, it's also a thing of the past. A more modern approach leverages token exchange, authenticated API calls, and standards such as OAuth2.

In this post, we'll demonstrate how to use Python to access authorized Google APIs using OAuth2, specifically listing the files (and folders) in your Google Drive. In order to better understand the example, we strongly recommend you check out the OAuth2 guides (general OAuth2 info, OAuth2 as it relates to Python and its client library) in the documentation to get started.

The docs describe the OAuth2 flow: making a request for authorized access, having the user grant access to your app, and obtaining a(n access) token with which to sign and make authorized API calls with. The steps you need to take to get started begin nearly the same way as for simple API access. The process diverges when you arrive on the Credentials page when following the steps below.

Google API access

In order to Google API authorized access, follow these instructions (the first three of which are roughly the same for simple API access):- Go to the Google Developers Console and login.

- Use your Gmail or Google credentials; create an account if needed

- Click "Create a Project" from pulldown under your username (at top)

- Enter a Project Name (mutable, human-friendly string only used in the console)

- Enter a Project ID (immutable, must be unique and not already taken)

- Once project has been created, enable APIs you wish to use

- You can toggle on any API(s) that support(s) simple or authorized API access.

- For the code example below, we use the Google Drive API.

- Other ideas: YouTube Data API, Google Sheets API, etc.

- Find more APIs (and version#s which you need) at the OAuth Playground.

- Select "Credentials" in left-nav

- Click "Create credentials" and select OAuth client ID

- In the new dialog, select your application type — we're building a command-line script which is an "Installed application"

- In the bottom part of that same dialog, specify the type of installed application; choose "Other" (cmd-line scripts are not web nor mobile)

- Click "Create Client ID" to generate your credentials

- Finally, click "Download JSON" to save the new credentials to your computer... perhaps choose a shorter name like "client_secret.json" or "client_id.json"

Accessing Google APIs from Python

In order to access authorized Google APIs from Python, you still need the Google APIs Client Library for Python, so in this case, do follow those installation instructions from part 1.We will again use the apiclient.discovery.build() function, which is what we need to create a service endpoint for interacting with an API, authorized or otherwise. However, for authorized data access, we need additional resources, namely the httplib2 and oauth2client packages. Here are the first five lines of the new boilerplate code for authorized access:

from __future__ import print_function

from googleapiclient import discoverySCOPES is a critical variable: it represents the set of scopes of authorization an app wants to obtain (then access) on behalf of user(s). What's does a scope look like?

from httplib2 import Http

from oauth2client import file, client, tools

SCOPES = # one or more scopes (strings)

Each scope is a single character string, specifically a URL. Here are some examples:

- 'https://www.googleapis.com/auth/plus.me'— access your personal Google+ settings

- 'https://www.googleapis.com/auth/drive.metadata.readonly'— read-only access your Google Drive file or folder metadata

- 'https://www.googleapis.com/auth/youtube'— access your YouTube playlists and other personal information

SCOPES = 'https://www.googleapis.com/auth/plus.me https://www.googleapis.com/auth/youtube'That is space-delimited and made tiny by me so it doesn't wrap in a regular-sized browser window; or it could be an easier-to-read, non-tiny, and non-wrapped tuple:

SCOPES = (

'https://www.googleapis.com/auth/plus.me',

'https://www.googleapis.com/auth/youtube',

)

Our example command-line script will just list the files on your Google Drive, so we only need the read-only Drive metadata scope, meaning our SCOPES variable will be just this:

SCOPES = 'https://www.googleapis.com/auth/drive.metadata.readonly'The next section of boilerplate represents the security code:

store = file.Storage('storage.json')

creds = store.get()

if not creds or creds.invalid:

flow = client.flow_from_clientsecrets('client_secret.json', SCOPES)

creds = tools.run_flow(flow, store)

Once the user has authorized access to their personal data by your app, a special "access token" is given to your app. This precious resource must be stored somewhere local for the app to use. In our case, we'll store it in a file called "storage.json". The lines setting the store and creds variables are attempting to get a valid access token with which to make an authorized API call.If the credentials are missing or invalid, such as being expired, the authorization flow (using the client secret you downloaded along with a set of requested scopes) must be created (by client.flow_from_clientsecrets()) and executed (by tools.run_flow()) to ensure possession of valid credentials. The client_secret.json file is the credentials file you saved when you clicked "Download JSON" from the DevConsole after you've created your OAuth2 client ID.

If you don't have credentials at all, the user much explicitly grant permission — I'm sure you've all seen the OAuth2 dialog describing the type of access an app is requesting (remember those scopes?). Once the user clicks "Accept" to grant permission, a valid access token is returned and saved into the storage file (because you passed a handle to it when you called tools.run_flow()).

Note: tools.run() deprecated by tools.run_flow()You may have seen usage of the older tools.run() function, but it has been deprecated by tools.run_flow(). We explain this in more detail in another blogpost specifically geared towards migration. |

Once the user grants access and valid credentials are saved, you can create one or more endpoints to the secure service(s) desired with apiclient.discovery.build(), just like with simple API access. Its call will look slightly different, mainly that you need to sign your HTTP requests with your credentials rather than passing an API key:

In our example, we're going to list your files and folders in your Google Drive, so for API, use the string 'drive'. The API is currently on version 3 so use 'v3' for VERSION:

DRIVE = discovery.build('drive', 'v3', http=creds.authorize(Http()))

Going back to our working example, once you have an established service endpoint, you can use the list() method of the files service to request the file data:

files = DRIVE.files().list().execute().get('files', [])

If there's any data to read, the response dict will contain an iterable of files that we can loop over (or default to an empty list so the loop doesn't fail), displaying file names and types:

for f in files:

print(f['name'], f['mimeType'])

Conclusion

To find out more about the input parameters as well as all the fields that are in the response, take a look at the docs for files().list(). For more information on what other operations you can execute with the Google Drive API, take a look at the reference docs and check out the companion video for this code sample. That's it!Below is the entire script for your convenience:

'''When you run it, you should see pretty much what you'd expect, a list of file or folder names followed by their MIMEtypes — I named my script drive_list.py:

drive_list.py -- Google Drive API authorized demo

updated Aug 2016 by +WesleyChun/@wescpy

'''

from __future__ import print_function

from apiclient import discovery

from httplib2 import Http

from oauth2client import file, client, tools

SCOPES = 'https://www.googleapis.com/auth/drive.readonly.metadata'

store = file.Storage('storage.json')

creds = store.get()

if not creds or creds.invalid:

flow = client.flow_from_clientsecrets('client_secret.json', SCOPES)

creds = tools.run_flow(flow, store)

DRIVE = discovery.build('drive', 'v3', http=creds.authorize(Http()))

files = DRIVE.files().list().execute().get('files', [])

for f in files:

print(f['name'], f['mimeType'])

$ python3 drive_list.py

Google Maps demo application/vnd.google-apps.spreadsheet

Overview of Google APIs - Sep 2014 application/vnd.google-apps.presentation

tiresResearch.xls application/vnd.google-apps.spreadsheet

6451_Core_Python_Schedule.doc application/vnd.google-apps.document

out1.txt application/vnd.google-apps.document

tiresResearch.xls application/vnd.ms-excel

6451_Core_Python_Schedule.doc application/msword

out1.txt text/plain

Maps and Sheets demo application/vnd.google-apps.spreadsheet

ProtoRPC Getting Started Guide application/vnd.google-apps.document

gtaskqueue-1.0.2_public.tar.gz application/x-gzip

Pull Queues application/vnd.google-apps.folder

gtaskqueue-1.0.1_public.tar.gz application/x-gzip

appengine-java-sdk.zip application/zip

taskqueue.py text/x-python-script

Google Apps Security Whitepaper 06/10/2010.pdf application/pdf

Obviously your output will be different, depending on what files are in your Google Drive. But that's it... hope this is useful. You can now customize this code for your own needs and/or to access other Google APIs. Thanks for reading!EXTRA CREDIT: To test your skills, add functionality to this code that also displays the last modified timestamp, the file (byte)size, and perhaps shave the MIMEtype a bit as it's slightly harder to read in its entirety... perhaps take just the final path element? One last challenge: in the output above, we have both Microsoft Office documents as well as their auto-converted versions for Google Apps... perhaps only show the filename once and have a double-entry for the filetypes!

Andre Roberge: Friendly Mu

Kushal Das: Indian police attacked university campuses on government order

Yesterday, Indian police attacked protesting students across different university campuses. They fired tear gas shells inside of libraries; they lit buses on fire and then told that the students did it. They broke into a Mosque and beat up students there.

The Internet has been shut down in Kashmir for over 130 days, and now few more states + different smaller parts of the country are having the same.

Search for #JamiaProtest or #SOSJamia on twitter to see what is going on in India. I asked to my around 5k followers, to reply if they can see our tweets (only around 5 replied via the original tweet).

Trigger warning (The following tweets shows police brutality)

I have curated a few tweets for you, please see these (if possible) and then share those.

- Police + goons (posing as police) beating up the girls https://twitter.com/amirul_alig/status/1206273340194746368

- Police attacked the library where students were studying https://twitter.com/i_kathayat/status/1206271022976008194

- Police attacking Jamia Milia University library https://twitter.com/imMAK02/status/1206213159981203458

- Police vandalizing university https://twitter.com/amirul_alig/status/1206273340194746368

- Police firing tear gases at AMU https://twitter.com/imMAK02/status/1206244102641049600

- Police firing their guns https://twitter.com/imMAK02/status/1206233908083187712

- Police vandalizing public property https://twitter.com/imMAK02/status/1206259162969038848

- Police breaking the gate of Aligarh Muslim University https://twitter.com/imMAK02/status/1206261850943311873

- Police putting fire on a bus https://twitter.com/DesiPoliticks/status/1206222796927438848

- Police attacking AMU https://twitter.com/tariq_shameem/status/1206259086796279810

Why am I writing this in my blog (maybe you are reading it on a technical planet)?

Most people are ignorant about the fascist regime in India, and the IT industry (including us) mostly tries to pretend that everything is perfect. I hope at least a few more around will read the tweets linked from this post and also watch the videos. I hope you will share those in your social circles. To stop fascists, we have to rise together.

Btw, you should at least read this story from New Yorker on how the fascist government is attacking the fellow citizens.

To know about the reason behind the current protest, read this story showing the similarities between Nazi Germany and current Indian government.

Top most drawing credit: I am yet to find the original artist, I will update when I find the name.

Mike Driscoll: PyDev of the Week: Ted Petrou

This week we welcome Ted Petrou (@TedPetrou) as our PyDev of the Week! Ted is the author of the Pandas Cookbook and also teaches Pandas in several courses on Udemy. Let’s take some time to get to know Ted better!

Can you tell us a little about yourself (hobbies, education, etc):

I graduated with a masters degree in statistics from Rice University in Houston, Texas in 2006. During my degree, I never heard the phrase “machine learning” uttered even once and it was several years before the field of data science became popular. I had entered the program pursuing a Ph.D with just six other students. Although statistics was a highly viable career at the time, it wasn’t nearly as popular as it is today.

After limping out of the program with a masters degree, I looked into the fields of actuarial science, became a professional poker play, taught high school math, built reports with SQL and Excel VBA as a financial analyst before becoming a data scientist at Schlumberger. During my stint as a data scientist, I started the meetup group Houston Data Science where I gave tutorials on various Python data science topics. Once I accumulated enough material, I started my company Dunder Data, teaching data science full time.

Why did you start using Python?

I began using Python when I took an introductory course offered by Rice University on coursera.org in 2013 when I was teaching high school math. I had done quite a bit of programming prior to that, but had never heard of Python before. It was a great course where we built a new game each week.

What other programming languages do you know and which is your favorite?

I began programming on a TI-82 calculator about 22 years ago. There was a minimal built-in language that my friends and I would use to build games. I remember making choose-your-own adventure games using the menu command. I took classes in C and Java in college and worked with R as a graduate student. A while later, I learned enough HTML and JavaScript to build basic websites. I also know SQL quite well and have done some work in Excel VBA.

My favorite language is Python, but I have no emotional attachment to it. I’d actually prefer to use a language that is statically typed, but I don’t have much of a choice as the demand for Python is increasing.

What projects are you working on now?

Outside of building material for my courses, I have two major projects, Dexplo and Dexplot, that are in current development. Dexplo is a data analysis library similar to pandas with the goal of having simpler syntax, better performance, one obvious way to perform common tasks, and more functionality. Dexplot is similar to seaborn with similar goals.

Which Python libraries are your favorite (core or 3rd party)?

I really enjoy using scikit-learn. The uniformity of the estimator object makes it easy to use. The recent introduction of the ColumnTransformer and the upgrade to the OneHotEncoder have really improved the library.

I see you are an author. How did that come about?

I contacted both O’Reilly and Packt Publishing with book ideas. O’Reilly offered me an online course and Packt needed an author for Pandas Cookbook. I really wanted to write a book and already had lots of material available from teaching my data science bootcamp so I went with Packt.

Do you have any advice for other aspiring authors?

It really helps to teach the material before you publish it. The feedback you get from the students is invaluable to making improvements. You can see firsthand what works and what needs to be changed.

What’s the origin story for your company, Dunder Data?

During my time as a data scientist at Schlumberger, I participated in several weeks of poorly taught corporate training. This experience motivated me to start creating tutorials. I started the Houston Data Science meetup group which helped lay the foundation for my Dunder Data. Many people ask if the “Dunder” is related to Dunder Mifflin, the paper company from the popular TV show, The Office. The connection is coincidental, as dunder refers to “magic” or “special” methods. The idea is that Dunder Data translates to “Magical Data”.

Thanks for doing the interview, Ted!

The post PyDev of the Week: Ted Petrou appeared first on The Mouse Vs. The Python.

Janusworx: #100DaysOfCode, Days 024, 025 & 026 – Watched Videos

Life has suddenly turned a little topsy turvy at work.

No time to work at stuff.

Keeping up my #100DaysOfCode streak by watching the course videos.

Learnt about the Itertools module, learnt decorators and error handling.

P.S.

Adding these posts back to the planet, because more than a couple of you, kind folk, have been missing me.

Real Python: Python Statistics Fundamentals: How to Describe Your Data

In the era of big data and artificial intelligence, data science and machine learning have become essential in many fields of science and technology. A necessary aspect of working with data is the ability to describe, summarize, and represent data visually. Python statistics libraries are comprehensive, popular, and widely used tools that will assist you in working with data.

In this tutorial, you’ll learn:

- What numerical quantities you can use to describe and summarize your datasets

- How to calculate descriptive statistics in pure Python

- How to get descriptive statistics with available Python libraries

- How to visualize your datasets

Free Bonus:Click here to download 5 Python + Matplotlib examples with full source code that you can use as a basis for making your own plots and graphics.

Understanding Descriptive Statistics

Descriptive statistics is about describing and summarizing data. It uses two main approaches:

- The quantitative approach describes and summarizes data numerically.

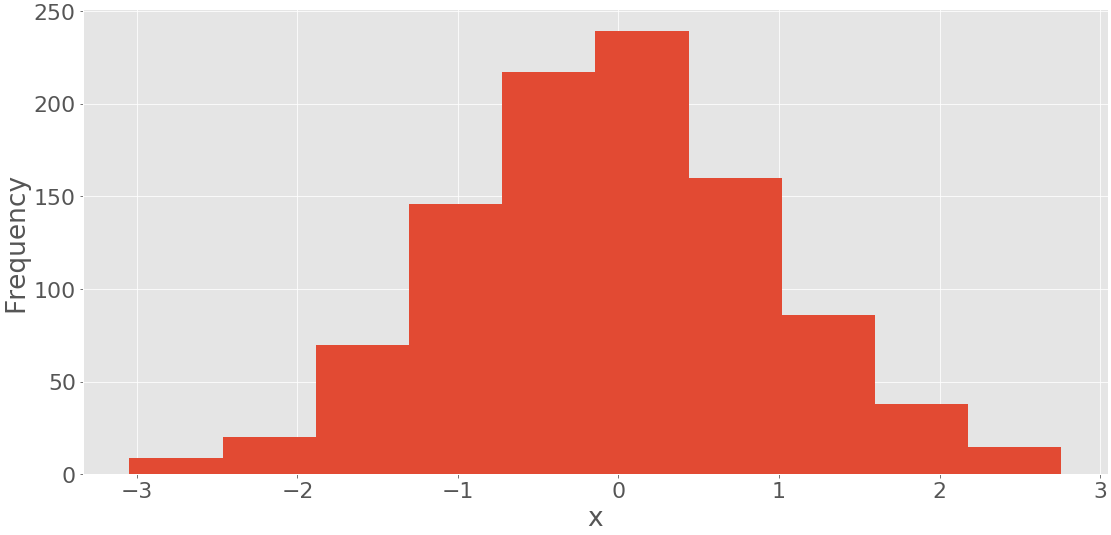

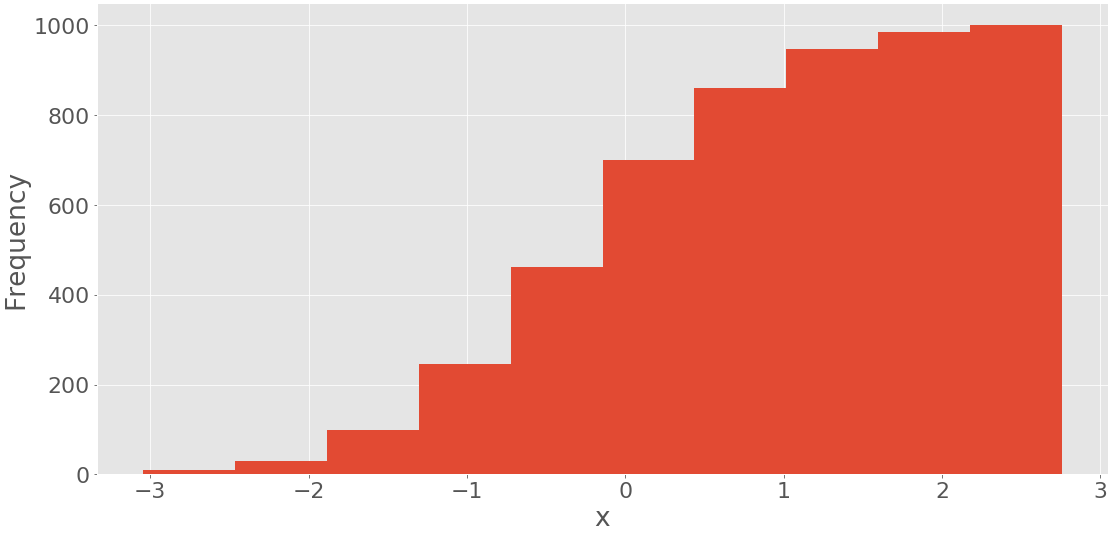

- The visual approach illustrates data with charts, plots, histograms, and other graphs.

You can apply descriptive statistics to one or many datasets or variables. When you describe and summarize a single variable, you’re performing univariate analysis. When you search for statistical relationships among a pair of variables, you’re doing a bivariate analysis. Similarly, a multivariate analysis is concerned with multiple variables at once.

Types of Measures

In this tutorial, you’ll learn about the following types of measures in descriptive statistics:

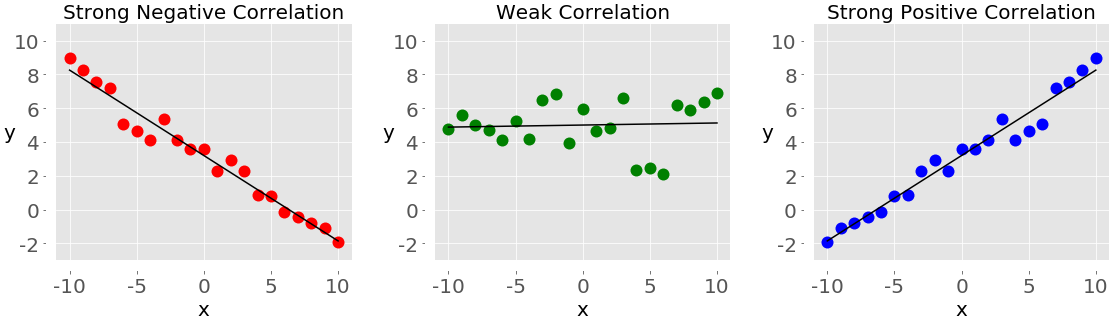

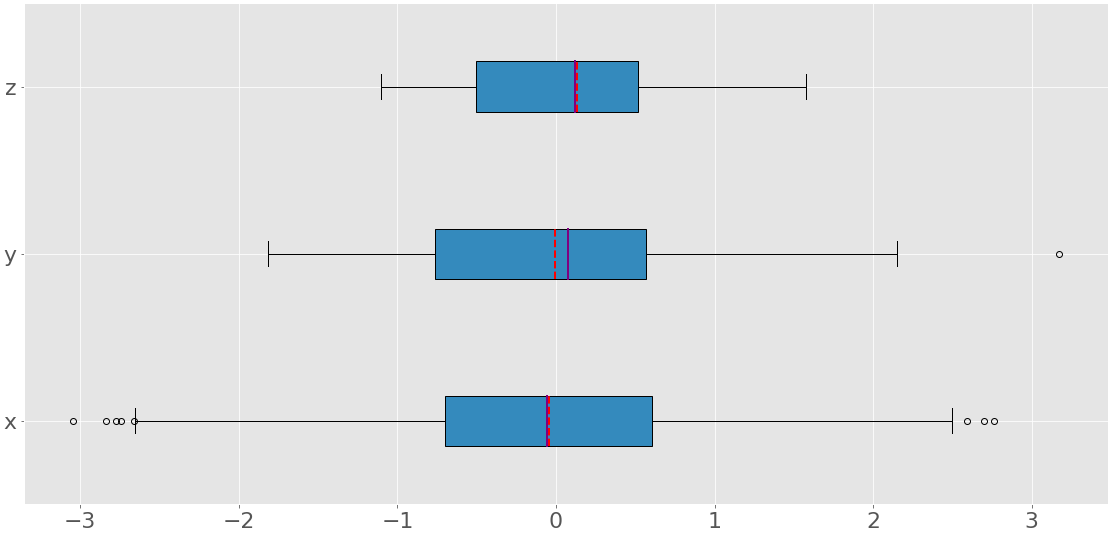

- Central tendency tells you about the centers of the data. Useful measures include the mean, median, and mode.

- Variability tells you about the spread of the data. Useful measures include variance and standard deviation.

- Correlation or joint variability tells you about the relation between a pair of variables in a dataset. Useful measures include covariance and the correlation coefficient.

You’ll learn how to understand and calculate these measures with Python.

Population and Samples

In statistics, the population is a set of all elements or items that you’re interested in. Populations are often vast, which makes them inappropriate for collecting and analyzing data. That’s why statisticians usually try to make some conclusions about a population by choosing and examining a representative subset of that population.

This subset of a population is called a sample. Ideally, the sample should preserve the essential statistical features of the population to a satisfactory extent. That way, you’ll be able to use the sample to glean conclusions about the population.

Outliers

An outlier is a data point that differs significantly from the majority of the data taken from a sample or population. There are many possible causes of outliers, but here are a few to start you off:

- Natural variation in data

- Change in the behavior of the observed system

- Errors in data collection

Data collection errors are a particularly prominent cause of outliers. For example, the limitations of measurement instruments or procedures can mean that the correct data is simply not obtainable. Other errors can be caused by miscalculations, data contamination, human error, and more.

There isn’t a precise mathematical definition of outliers. You have to rely on experience, knowledge about the subject of interest, and common sense to determine if a data point is an outlier and how to handle it.

Choosing Python Statistics Libraries

There are many Python statistics libraries out there for you to work with, but in this tutorial, you’ll be learning about some of the most popular and widely used ones:

Python’s

statisticsis a built-in Python library for descriptive statistics. You can use it if your datasets are not too large or if you can’t rely on importing other libraries.NumPy is a third-party library for numerical computing, optimized for working with single- and multi-dimensional arrays. Its primary type is the array type called

ndarray. This library contains many routines for statistical analysis.SciPy is a third-party library for scientific computing based on NumPy. It offers additional functionality compared to NumPy, including

scipy.statsfor statistical analysis.Pandas is a third-party library for numerical computing based on NumPy. It excels in handling labeled one-dimensional (1D) data with

Seriesobjects and two-dimensional (2D) data withDataFrameobjects.Matplotlib is a third-party library for data visualization. It works well in combination with NumPy, SciPy, and Pandas.

Note that, in many cases, Series and DataFrame objects can be used in place of NumPy arrays. Often, you might just pass them to a NumPy or SciPy statistical function. In addition, you can get the unlabeled data from a Series or DataFrame as a np.ndarray object by calling .values or .to_numpy().

Getting Started With Python Statistics Libraries

The built-in Python statistics library has a relatively small number of the most important statistics functions. The official documentation is a valuable resource to find the details. If you’re limited to pure Python, then the Python statistics library might be the right choice.

A good place to start learning about NumPy is the official User Guide, especially the quickstart and basics sections. The official reference can help you refresh your memory on specific NumPy concepts. While you read this tutorial, you might want to check out the statistics section and the official scipy.stats reference as well.

Note:

To learn more about NumPy, check out these resources:

If you want to learn Pandas, then the official Getting Started page is an excellent place to begin. The introduction to data structures can help you learn about the fundamental data types, Series and DataFrame. Likewise, the excellent official introductory tutorial aims to give you enough information to start effectively using Pandas in practice.

Note:

To learn more about Pandas, check out these resources:

matplotlib has a comprehensive official User’s Guide that you can use to dive into the details of using the library. Anatomy of Matplotlib is an excellent resource for beginners who want to start working with matplotlib and its related libraries.

Note:

To learn more about data visualization, check out these resources:

Let’s start using these Python statistics libraries!

Calculating Descriptive Statistics

Start by importing all the packages you’ll need:

>>> importmath>>> importstatistics>>> importnumpyasnp>>> importscipy.stats>>> importpandasaspdThese are all the packages you’ll need for Python statistics calculations. Usually, you won’t use Python’s built-in math package, but it’ll be useful in this tutorial. Later, you’ll import matplotlib.pyplot for data visualization.

Let’s create some data to work with. You’ll start with Python lists that contain some arbitrary numeric data:

>>> x=[8.0,1,2.5,4,28.0]>>> x_with_nan=[8.0,1,2.5,math.nan,4,28.0]>>> x[8.0, 1, 2.5, 4, 28.0]>>> x_with_nan[8.0, 1, 2.5, nan, 4, 28.0]Now you have the lists x and x_with_nan. They’re almost the same, with the difference that x_with_nan contains a nan value. It’s important to understand the behavior of the Python statistics routines when they come across a not-a-number value (nan). In data science, missing values are common, and you’ll often replace them with nan.

Note: How do you get a nan value?

In Python, you can use any of the following:

You can use all of these functions interchangeably:

>>> math.isnan(np.nan),np.isnan(math.nan)(True, True)>>> math.isnan(y_with_nan[3]),np.isnan(y_with_nan[3])(True, True)You can see that the functions are all equivalent. However, please keep in mind that comparing two nan values for equality returns False. In other words, math.nan == math.nan is False!

Now, create np.ndarray and pd.Series objects that correspond to x and x_with_nan:

>>> y,y_with_nan=np.array(x),np.array(x_with_nan)>>> z,z_with_nan=pd.Series(x),pd.Series(x_with_nan)>>> yarray([ 8. , 1. , 2.5, 4. , 28. ])>>> y_with_nanarray([ 8. , 1. , 2.5, nan, 4. , 28. ])>>> z0 8.01 1.02 2.53 4.04 28.0dtype: float64>>> z_with_nan0 8.01 1.02 2.53 NaN4 4.05 28.0dtype: float64You now have two NumPy arrays (y and y_with_nan) and two Pandas Series (z and z_with_nan). All of these are 1D sequences of values.

Note: Although you’ll use lists throughout this tutorial, please keep in mind that, in most cases, you can use tuples in the same way.

You can optionally specify a label for each value in z and z_with_nan.

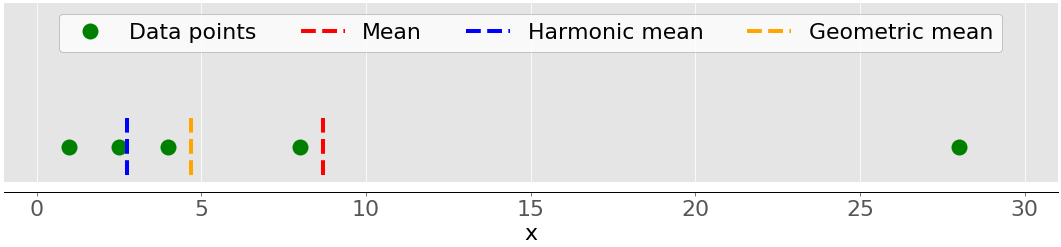

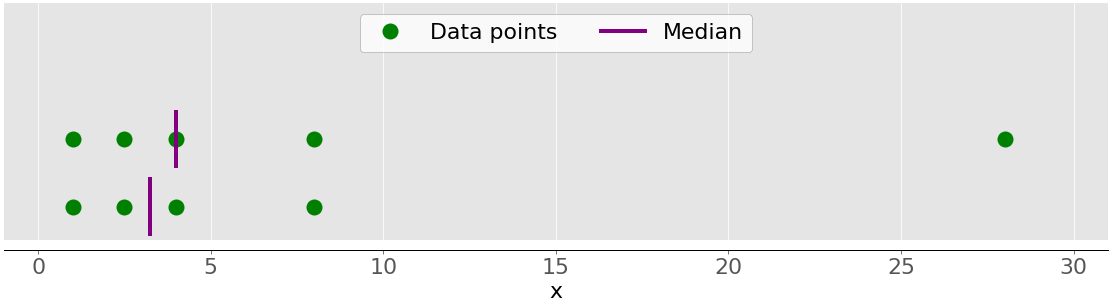

Measures of Central Tendency

The measures of central tendency show the central or middle values of datasets. There are several definitions of what’s considered to be the center of a dataset. In this tutorial, you’ll learn how to identify and calculate these measures of central tendency:

- Mean

- Weighted mean

- Geometric mean

- Harmonic mean

- Median

- Mode

Mean

The sample mean, also called the sample arithmetic mean or simply the average, is the arithmetic average of all the items in a dataset. The mean of a dataset 𝑥 is mathematically expressed as Σᵢ𝑥ᵢ/𝑛, where 𝑖 = 1, 2, …, 𝑛. In other words, it’s the sum of all the elements 𝑥ᵢ divided by the number of items in the dataset 𝑥.

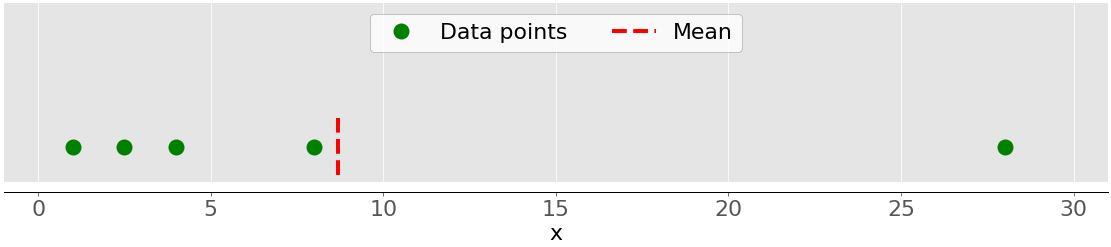

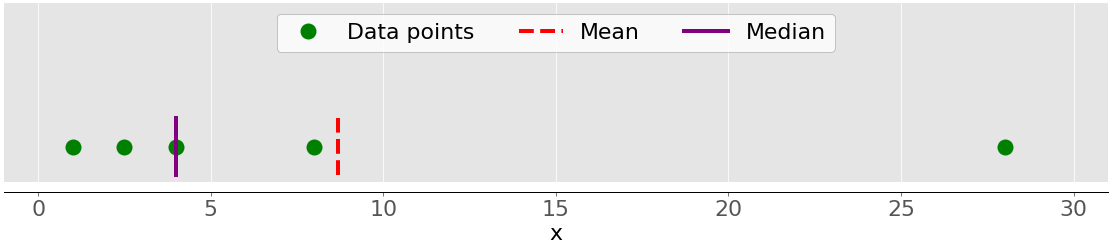

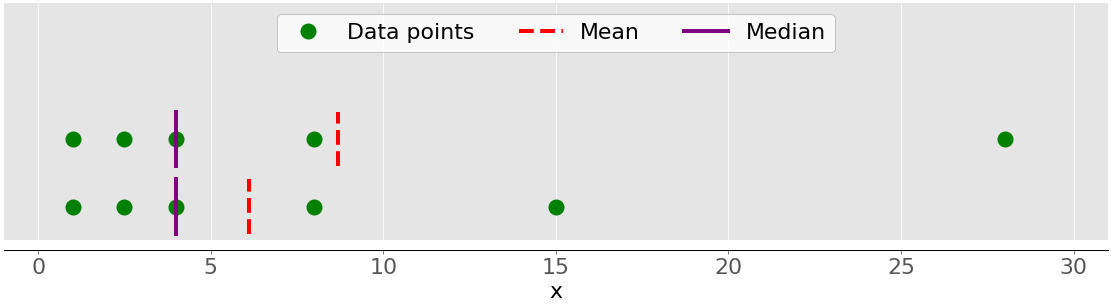

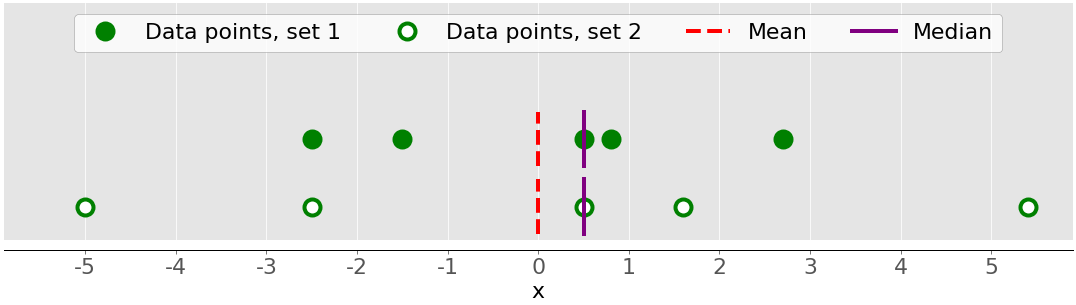

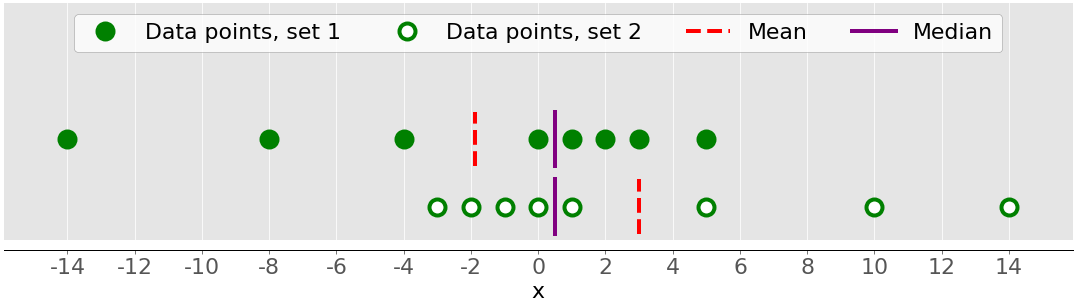

This figure illustrates the mean of a sample with five data points:

The green dots represent the data points 1, 2.5, 4, 8, and 28. The red dashed line is their mean, or (1 + 2.5 + 4 + 8 + 28) / 5 = 8.7.

You can calculate the mean with pure Python using sum() and len(), without importing libraries:

>>> mean_=sum(x)/len(x)>>> mean_8.7Although this is clean and elegant, you can also apply built-in Python statistics functions:

>>> mean_=statistics.mean(x)>>> mean_8.7>>> mean_=statistics.fmean(x)>>> mean_8.7You’ve called the functions mean() and fmean() from the built-in Python statistics library and got the same result as you did with pure Python. fmean() is introduced in Python 3.8 as a faster alternative to mean(). It always returns a floating-point number.

However, if there are nan values among your data, then statistics.mean() and statistics.fmean() will return nan as the output:

>>> mean_=statistics.mean(x_with_nan)>>> mean_nan>>> mean_=statistics.fmean(x_with_nan)>>> mean_nanThis result is consistent with the behavior of sum(), because sum(x_with_nan) also returns nan.

If you use NumPy, then you can get the mean with np.mean():

>>> mean_=np.mean(y)>>> mean_8.7In the example above, mean() is a function, but you can use the corresponding method .mean() as well:

>>> mean_=y.mean()>>> mean_8.7The function mean() and method .mean() from NumPy return the same result as statistics.mean(). This is also the case when there are nan values among your data:

>>> np.mean(y_with_nan)nan>>> y_with_nan.mean()nanYou often don’t need to get a nan value as a result. If you prefer to ignore nan values, then you can use np.nanmean():

>>> np.nanmean(y_with_nan)8.7nanmean() simply ignores all nan values. It returns the same value as mean() if you were to apply it to the dataset without the nan values.

pd.Series objects also have the method .mean():