Python Bytes: #159 Brian's PR is merged, the src will flow

Codementor: Developer Tools & Frameworks for a Python Developer - Reading Time: 3 Mins

Philip Semanchuk: Mailing lists for my Python IPC packages

My package sysv_ipc celebrates its 11th birthday tomorrow, so I thought I would give it a mailing list as a gift. I didn’t want its sibling posix_ipc to get jealous, so I created one for that too.

You can read/join the sysv_ipc group here: https://groups.io/g/python-sysv-ipc

You can read/join the posix_ipc group here: https://groups.io/g/python-posix-ipc

Janusworx: #100DaysOfCode, Day 014 – Classes, List Comprehensions and Generators

Did a video session again today, since I came back late from the doc.

Watched videos about building a small d&d game, using classes.

This was fun :)

Working on the challenge will be exciting.

And then some more on list comprehensions and generators.

I had one aha, about tools as I watched this.

The instructor used a regular expression to process a list and that little line, cut down his code by lots.

That made me realise that programming is simply picking up the right tool for the job, and that there are a plethora, to do the work you need to do. One is not necessarily better than the other, just that some are better suited to the job at hand, than others.

Revised how list comprehensions and generators work.

And like a dork, I just realised that the operative thing is comprehension. You write in a comprehensive way to build some sort of collection. A list comprehension to write lists, a dictionary comprehension to build a dictionary, a generator comprehension to … well, you get the idea :)
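To make that concrete, here is a tiny sketch of the three forms side by side. The word list is made up for illustration, and strictly speaking Python calls the last one a generator expression rather than a generator comprehension.

```python
words = ["spam", "eggs", "ham"]  # made-up sample data

# List comprehension: builds a whole list at once
lengths_list = [len(w) for w in words]

# Dictionary comprehension: builds a dictionary
lengths_by_word = {w: len(w) for w in words}

# Generator expression: produces values lazily, one at a time
lengths_lazy = (len(w) for w in words)

print(lengths_list)
print(lengths_by_word)
print(sum(lengths_lazy))  # consuming the generator exhausts it
```

Note that the generator can only be consumed once; after the sum() call it yields nothing more.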

This is all I got for today.

Will work more tomorrow.

Robin Wilson: Automatically downloading nursery photos from ParentZone using Selenium

My son goes to a nursery part-time, and the nursery uses a system called ParentZone from Connect Childcare to send information between us (his parents) and nursery. Primarily, this is used to send us updates on the boring details of the day (what he’s had to eat, nappy changes and so on), and to send ‘observations’ which include photographs of what he’s been doing at nursery. The interfaces include a web app (pictured below) and a mobile app:

I wanted to be able to download all of these photos easily to keep them in my (enormous) set of photos of my son, without manually downloading each one. So, I wrote a script to do this, with the help of Selenium.

If you want to jump straight to the script, then have a look at the ParentZonePhotoDownloader Github repository. The script is documented and has a nice command-line interface. For more details on how I created it, read on…

Selenium is a browser automation tool that allows you to control pretty-much everything a browser does through code, while also accessing the underlying HTML that the browser is displaying. This makes it the perfect choice for scraping websites that have a lot of Javascript – like the ParentZone website.

To get Selenium working you need to install a ‘webdriver’ that will connect to a particular web browser and do the actual controlling of the browser. I’ve chosen to use chromedriver to control Google Chrome. See the Getting Started guide to see how to install chromedriver – but it’s basically as simple as downloading a binary file and putting it in your PATH.

My script starts off fairly simply, by creating an instance of the Chrome webdriver, and navigating to the ParentZone homepage:

driver = webdriver.Chrome()

driver.get("https://www.parentzone.me/")

The next line: driver.implicitly_wait(10) tells Selenium to wait up to 10 seconds for elements to appear before giving up and giving an error. This is useful for sites that might be slightly slow to load (eg. those with large pictures).

We then fill in the email address and password in the login form:

email_field = driver.find_element_by_xpath('//*[@id="login"]/fieldset/div[1]/input')

email_field.clear()

email_field.send_keys(email)

Here we’re selecting the email address field using its XPath, which is a sort of query language for selecting nodes from an XML document (or, by extension, an HTML document, since the browser parses HTML into a similar tree of nodes). I have some basic knowledge of XPath, but usually I just copy the expressions I need from the Chrome Dev Tools window. To do this, select the right element in Dev Tools, then right click on the element’s HTML code and choose ‘Copy->Copy XPath’:

We then clear the field, and fake the typing of the email string that we took as a command-line argument.

We then repeat the same thing for the password field, and then just send the ‘Enter’ key to submit the field (easier than finding the right submit button and fake-clicking it).
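To get a feel for how such an XPath expression picks out a node, here is a minimal sketch that runs without a browser. The markup is a hypothetical stand-in for a login form (not the real ParentZone page), and it uses the standard library's xml.etree.ElementTree, which supports only a subset of XPath and needs the path to start with a dot.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified login form; the real page will differ
page = ET.fromstring("""
<html><body>
  <form id="login"><fieldset>
    <div><input name="email"/></div>
    <div><input name="password"/></div>
  </fieldset></form>
</body></html>
""")

# Any element with id="login", then fieldset, then the first div, then its input
email_input = page.find(".//*[@id='login']/fieldset/div[1]/input")
# The same path with div[2] selects the second div's input
password_input = page.find(".//*[@id='login']/fieldset/div[2]/input")

print(email_input.get("name"))
print(password_input.get("name"))
```

The positional index (div[1], div[2]) is what makes copied XPaths fragile: if the site adds another div above the field, the expression silently points somewhere else.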

Once we’ve logged in and gone to the correct page (the ‘timeline’ page) we want to narrow down the page to just show ‘Observations’ (as these are usually the only posts that have photographs). We do this by selecting a dropdown, and then choosing an option from the dropdown box:

dropdown = Select(driver.find_element_by_xpath('//*[@id="filter"]/div[2]/div[4]/div/div[1]/select'))

dropdown.select_by_value('7')

I found the right value (7) to set this to by reading the HTML code where the options were defined, which included this line: <option value="7">Observation</option>.

We then click the ‘Submit’ button:

submit_button = driver.find_element_by_id('submit-filter')

submit_button.click()

Now we get to the bit that had me stuck for a while… The page has ‘infinite scrolling’ – that is, as you scroll down, more posts ‘magically’ appear. We need to scroll right down to the bottom so that we have all of the observations before we try to download them.

I tried using various complicated Javascript functions, but none of them seemed to work – so I settled on a naive way to do it. I simply send the ‘End’ key (which scrolls to the end of the page), wait a few seconds, and then count the number of photos on the page (in this case, elements with the class img-responsive, which is used for photos from observations). When this number stops increasing, I know I’ve reached the point where there are no more pictures to load.

The code that does this is fairly easy to understand:

html = driver.find_element_by_tag_name('html')

old_n_photos = 0

while True:
    # Scroll
    html.send_keys(Keys.END)
    time.sleep(3)

    # Get all photos
    media_elements = driver.find_elements_by_class_name('img-responsive')
    n_photos = len(media_elements)

    if n_photos > old_n_photos:
        old_n_photos = n_photos
    else:
        break

We’ve now got a page with all the photos on it, so we just need to extract them. In fact, we’ve already got a list of all of these photo elements in media_elements, so we just iterate through this and grab some details for each image. Specifically, we get the image URL with element.get_attribute('src'), and then extract the unique image ID from that URL. We then choose the filename to save the file as based on the type of element that was used to display it on the web page (the element.tag_name). If it was a <img> tag then it’s an image, if it was a <video> tag then it was a video.

We then download the image/video file from the website using the requests library (that is, not through Selenium, but separately, just using the URL obtained through Selenium):

# For each image that we've found
for element in media_elements:
    image_url = element.get_attribute('src')
    image_id = image_url.split("&d=")[-1]

    # Deal with file extension based on tag used to display the media
    if element.tag_name == 'img':
        extension = 'jpg'
    elif element.tag_name == 'video':
        extension = 'mp4'
    else:
        # Skip anything that is neither an image nor a video
        continue

    image_output_path = os.path.join(output_folder,
                                     f'{image_id}.{extension}')

    # Only download and save the file if it doesn't already exist
    if not os.path.exists(image_output_path):
        r = requests.get(image_url, allow_redirects=True)
        with open(image_output_path, 'wb') as f:
            f.write(r.content)

Putting this all together into a command-line script was made much easier by the click library. Adding the following decorators to the top of the main function creates a whole command-line interface automatically – even including prompts to specify parameters that weren’t specified on the command-line:

@click.command()
@click.option('--email', help='Email address used to log in to ParentZone',
              prompt='Email address used to log in to ParentZone')
@click.option('--password', help='Password used to log in to ParentZone',
              prompt='Password used to log in to ParentZone')
@click.option('--output_folder', help='Output folder',
              default='./output')

So, that’s it. Less than 100 lines in total for a very useful script that saves me a lot of tedious downloading. The full script is available on Github.
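The "scroll until the count stops increasing" idea from earlier can also be pulled out of Selenium entirely, which makes it easy to try without a browser. The sketch below is only an illustration: scroll_until_stable, FakePage and the max_rounds safety limit are names and choices made up here, not part of the actual script.

```python
import time


def scroll_until_stable(scroll, count_items, pause=0.0, max_rounds=100):
    """Call scroll() repeatedly until count_items() stops increasing.

    Returns the last count seen. max_rounds is an added safety limit
    (an assumption, not in the original script) so a page that never
    stops loading cannot loop forever.
    """
    previous = 0
    for _ in range(max_rounds):
        scroll()
        time.sleep(pause)
        current = count_items()
        if current <= previous:
            break
        previous = current
    return previous


class FakePage:
    """Stand-in for an infinite-scrolling page: each scroll loads one batch."""

    def __init__(self, batches):
        self.batches = list(batches)
        self.loaded = 0

    def scroll(self):
        if self.batches:
            self.loaded += self.batches.pop(0)

    def count(self):
        return self.loaded


page = FakePage([10, 10, 5])
total = scroll_until_stable(page.scroll, page.count)
print(total)
```

With Selenium, scroll would send the End key and count_items would return the number of elements with the img-responsive class, with pause set to a few seconds.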

Zato Blog: Auto-generating API specifications as OpenAPI, WSDL and Sphinx

This article presents a workflow for auto-generation of API specifications for your Zato services - if you need to share your APIs with partners, external or internal, this is how it can be done.

Sample services

Let's consider the services below - they represent a subset of a hypothetical API of a telecommunication company. In this case, they are to do with pre-paid cards. Deploy them on your servers in a module called api.py.

Note that their implementation is omitted, we only deal with their I/O, as it is expressed using SimpleIO.

What we would like to have, and what we will achieve here, is a website with static HTML describing the services in terms of a formal API specification.

# -*- coding: utf-8 -*-

# Zato
from zato.server.service import Int, Service

# #####################################################################

class RechargeCard(Service):
    """ Recharges a pre-paid card. Amount must not be less than 1
    and it cannot be greater than 10000.
    """
    class SimpleIO:
        input_required = 'number', Int('amount')
        output_required = Int('status')

    def handle(self):
        pass

# #####################################################################

class GetCurrentBalance(Service):
    """ Returns current balance of a pre-paid card.
    """
    class SimpleIO:
        input_required = Int('number')
        output_required = Int('status')
        output_optional = 'balance'

    def handle(self):
        pass

# #####################################################################

Docstrings and SimpleIO

In the sample services, observe that:

Documentation is added as docstrings - this is something that services, being simply Python classes, will have anyway

One of the services has a multi-line docstring whereas the other one's is single-line, this will be of significance later on

SimpleIO definitions use both string types and integers

Command line usage

To generate API specifications, command zato apispec is used. This is part of the CLI that Zato ships with.

Typically, only well-chosen services should be documented publicly, and the main two options the command has are --include and --exclude.

Both accept a comma-separated list of shell-like glob patterns that indicate which services should or should not be documented.
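As a rough illustration of how shell-like glob patterns select names, the sketch below uses Python's standard fnmatch module. It is not Zato's implementation: the service names are hypothetical, and the rule that a name must match at least one include pattern and no exclude pattern is an assumption made for this example.

```python
from fnmatch import fnmatch


def is_documented(name, include, exclude=()):
    """Assumed rule: at least one include pattern matches and no exclude pattern does."""
    included = any(fnmatch(name, pattern) for pattern in include)
    excluded = any(fnmatch(name, pattern) for pattern in exclude)
    return included and not excluded


# Hypothetical service names, for illustration only
services = [
    "api.recharge-card",
    "api.get-current-balance",
    "internal.health-check",
]

selected = [
    name for name in services
    if is_documented(name, include=["api.*"], exclude=["*balance*"])
]
print(selected)
```

Here api.* keeps both API services and drops the internal one, and the exclude pattern then removes the balance service.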

For instance, if the code above is saved in api.py, the command to output their API specification is:

zato apispec /path/to/server \

--dir /path/to/output/directory \

--include api.*

Next, we can navigate to the directory just created and type the command below to build HTML.

cd /path/to/output/directory

make html

OpenAPI, WSDL and Sphinx

The result of the commands is as below - OpenAPI and WSDL files are in the menu column to the left.

Also, note that in the main index only the very first line of a docstring is used but upon opening a sub-page for each service its full docstring is used.
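The split between a one-line summary and the full text can be pictured with the standard inspect module. This is only a sketch of the idea, not how Zato or Sphinx extract docstrings; the plain class below stands in for the service so the example runs without Zato installed.

```python
import inspect


class RechargeCard:
    """Recharges a pre-paid card. Amount must not be less than 1
    and it cannot be greater than 10000.
    """


def summary_and_full(obj):
    """Return (first docstring line, whole cleaned docstring)."""
    full = inspect.getdoc(obj) or ""
    summary = full.splitlines()[0] if full else ""
    return summary, full


summary, full = summary_and_full(RechargeCard)
print(summary)  # what an index page would show
print(full)     # what a per-service page would show
```

This is why it pays to make the first docstring line a complete thought: it is the only part that appears in the index.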

Branding and customisation

While the result is self-contained and it can be already used as-is, there is still room for more.

Given that the output is generated using Sphinx, it is possible to customise it as needed, for instance, by applying custom CSS or other branding information, such as the logo of a company exposing a given API.

All of the files used for generation of HTML are stored in config directories of each server - if the path to a server is /path/to/server then the full path to Sphinx templates is in /path/to/server/config/repo/static/sphinxdoc/apispec.

Summary

That is everything - generating static documentation is a matter of just a single command. The output can be fully customised while the resulting OpenAPI and WSDL artifacts can be given to partners to let third-parties automatically generate API clients for your Zato services.

Stack Abuse: Tensorflow 2.0: Solving Classification and Regression Problems

After much hype, Google finally released TensorFlow 2.0 which is the latest version of Google's flagship deep learning platform. A lot of long-awaited features have been introduced in TensorFlow 2.0. This article very briefly covers how you can develop simple classification and regression models using TensorFlow 2.0.

Classification with Tensorflow 2.0

If you have ever worked with the Keras library, you are in for a treat. TensorFlow 2.0 now uses the Keras API as its default library for training classification and regression models. Before TensorFlow 2.0, one of the major criticisms of TensorFlow stemmed from the complexity of model creation. Previously you needed to stitch graphs, sessions and placeholders together in order to create even a simple logistic regression model. With TensorFlow 2.0, creating classification and regression models has become a piece of cake.

So without further ado, let's develop a classification model with TensorFlow.

The Dataset

The dataset for the classification example can be downloaded freely from this link. Download the file in CSV format. If you open the downloaded CSV file, you will see that the file doesn't contain any headers. The detail of the columns is available at UCI machine learning repository. I will recommend that you read the dataset information in detail from the download link. I will briefly summarize the dataset in this section.

The dataset basically consists of 7 columns:

- price (the buying price of the car)

- maint ( the maintenance cost)

- doors (number of doors)

- persons (the seating capacity)

- lug_capacity (the luggage capacity)

- safety (how safe is the car)

- output (the condition of the car)

Given the first 6 columns, the task is to predict the value for the 7th column i.e. the output. The output column can have one of four values i.e. "unacc" (unacceptable), "acc" (acceptable), "good", and "vgood" (very good).

Importing Libraries

Before we import the dataset into our application, we need to import the required libraries.

import pandas as pd

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

sns.set(style="darkgrid")

Before we proceed, I want you to make sure that you have the latest version of TensorFlow i.e. TensorFlow 2.0. You can check your TensorFlow version with the following command:

print(tf.__version__)

If you do not have TensorFlow 2.0 installed, you can upgrade to the latest version via the following command:

$ pip install --upgrade tensorflow

Importing the Dataset

The following script imports the dataset. Change the path to your CSV data file accordingly.

cols = ['price', 'maint', 'doors', 'persons', 'lug_capacity', 'safety','output']

cars = pd.read_csv(r'/content/drive/My Drive/datasets/car_dataset.csv', names=cols, header=None)

Since the CSV file doesn't contain column headers by default, we passed a list of column headers to the pd.read_csv() method.

Let's now see the first 5 rows of the dataset via the head() method.

cars.head()

Output:

You can see the 7 columns in the dataset.

Data Analysis and Preprocessing

Let's briefly analyze the dataset by plotting a pie chart that shows the distribution of the output. The following script increases the default plot size.

plot_size = plt.rcParams["figure.figsize"]

plot_size [0] = 8

plot_size [1] = 6

plt.rcParams["figure.figsize"] = plot_size

And the following script plots the pie chart showing the output distribution.

cars.output.value_counts().plot(kind='pie', autopct='%0.05f%%', colors=['lightblue', 'lightgreen', 'orange', 'pink'], explode=(0.05, 0.05, 0.05,0.05))

Output:

The output shows that the majority of cars (70%) are in unacceptable condition while 20% of cars are in acceptable condition. The proportion of cars in good and very good condition is very low.

All the columns in our dataset are categorical. Deep learning is based on statistical algorithms and statistical algorithms work with numbers. Therefore, we need to convert the categorical information into numeric columns. There are various approaches to do that but one of the most common approaches is one-hot encoding. In one-hot encoding, for each unique value in the categorical column, a new column is created. For the rows in the actual column where the unique value existed, a 1 is added to the corresponding row of the column created for that particular value, and a 0 is added everywhere else. This might sound complex but the following example will make it clear.

The following script converts categorical columns into numeric columns:

price = pd.get_dummies(cars.price, prefix='price')

maint = pd.get_dummies(cars.maint, prefix='maint')

doors = pd.get_dummies(cars.doors, prefix='doors')

persons = pd.get_dummies(cars.persons, prefix='persons')

lug_capacity = pd.get_dummies(cars.lug_capacity, prefix='lug_capacity')

safety = pd.get_dummies(cars.safety, prefix='safety')

labels = pd.get_dummies(cars.output, prefix='condition')

To create our feature set, we can merge the first six columns horizontally:

X = pd.concat([price, maint, doors, persons, lug_capacity, safety] , axis=1)

Let's see how our label column looks now:

labels.head()

Output:

The label column is basically a one-hot encoded version of the output column that we had in our dataset. The output column had four unique values: unacc, acc, good and very good. In the one-hot encoded label dataset, you can see four columns, one for each of the unique values in the output column. You can see 1 in the column for the unique value that originally existed in that row. For instance, in the first five rows of the output column, the column value was unacc. In the labels column, you can see 1 in the first five rows of the condition_unacc column.
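To see what one-hot encoding does without any library, here is a minimal pure-Python sketch. The one_hot helper and the five sample values are made up for illustration; it sorts the categories alphabetically to build the column names, which is an assumption of this sketch rather than a statement about pandas internals.

```python
def one_hot(values, prefix):
    """Return (column_names, rows) for a one-hot encoding of `values`."""
    categories = sorted(set(values))
    columns = [f"{prefix}_{category}" for category in categories]
    # One row per input value: 1 in the matching category column, 0 elsewhere
    rows = [[1 if value == category else 0 for category in categories]
            for value in values]
    return columns, rows


sample = ["unacc", "unacc", "acc", "vgood", "good"]  # made-up sample
columns, rows = one_hot(sample, prefix="condition")

print(columns)
for row in rows:
    print(row)
```

Every row contains exactly one 1, which is what lets the softmax output layer later be compared against it directly.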

Let's now convert our labels into a numpy array since deep learning models in TensorFlow accept numpy array as input.

y = labels.values

The final step before we can train our TensorFlow 2.0 classification model is to divide the dataset into training and test sets:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20, random_state=42)

Model Training

To train the model, let's import the TensorFlow 2.0 classes. Execute the following script:

from tensorflow.keras.layers import Input, Dense, Activation,Dropout

from tensorflow.keras.models import Model

As I said earlier, TensorFlow 2.0 uses the Keras API for training the model. In the script above we basically import Input, Dense, Activation, and Dropout classes from tensorflow.keras.layers module. Similarly, we also import the Model class from the tensorflow.keras.models module.

The next step is to create our classification model:

input_layer = Input(shape=(X.shape[1],))

dense_layer_1 = Dense(15, activation='relu')(input_layer)

dense_layer_2 = Dense(10, activation='relu')(dense_layer_1)

output = Dense(y.shape[1], activation='softmax')(dense_layer_2)

model = Model(inputs=input_layer, outputs=output)

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['acc'])

As can be seen from the script, the model contains three dense layers. The first two dense layers contain 15 and 10 nodes, respectively, with the relu activation function. The final dense layer contains 4 nodes (y.shape[1] == 4) and uses the softmax activation function since this is a multi-class classification task. The model is trained using the categorical_crossentropy loss function and the adam optimizer. The evaluation metric is accuracy.

The following script shows the model summary:

print(model.summary())

Output:

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 21)] 0

_________________________________________________________________

dense (Dense) (None, 15) 330

_________________________________________________________________

dense_1 (Dense) (None, 10) 160

_________________________________________________________________

dense_2 (Dense) (None, 4) 44

=================================================================

Total params: 534

Trainable params: 534

Non-trainable params: 0

_________________________________________________________________

None
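The parameter counts in the summary can be checked by hand: a dense layer has one weight per input-unit pair plus one bias per unit. The short sketch below, with a helper name chosen for this example, reproduces the numbers for the 21-input model above.

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units


# 21 one-hot input features, then layers of 15, 10 and 4 units
layer_sizes = [21, 15, 10, 4]
counts = [dense_params(n_in, n_out)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

print(counts)
print(sum(counts))
```

The three counts match the Param # column and their sum matches Total params.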

Finally, to train the model execute the following script:

history = model.fit(X_train, y_train, batch_size=8, epochs=50, verbose=1, validation_split=0.2)

The model will be trained for 50 epochs but here for the sake of space, the result of only last 5 epochs is displayed:

Epoch 45/50

1105/1105 [==============================] - 0s 219us/sample - loss: 0.0114 - acc: 1.0000 - val_loss: 0.0606 - val_acc: 0.9856

Epoch 46/50

1105/1105 [==============================] - 0s 212us/sample - loss: 0.0113 - acc: 1.0000 - val_loss: 0.0497 - val_acc: 0.9856

Epoch 47/50

1105/1105 [==============================] - 0s 219us/sample - loss: 0.0102 - acc: 1.0000 - val_loss: 0.0517 - val_acc: 0.9856

Epoch 48/50

1105/1105 [==============================] - 0s 218us/sample - loss: 0.0091 - acc: 1.0000 - val_loss: 0.0536 - val_acc: 0.9856

Epoch 49/50

1105/1105 [==============================] - 0s 213us/sample - loss: 0.0095 - acc: 1.0000 - val_loss: 0.0513 - val_acc: 0.9819

Epoch 50/50

1105/1105 [==============================] - 0s 209us/sample - loss: 0.0080 - acc: 1.0000 - val_loss: 0.0536 - val_acc: 0.9856

By the end of the 50th epoch, we have training accuracy of 100% while validation accuracy of 98.56%, which is impressive.

Let's finally evaluate the performance of our classification model on the test set:

score = model.evaluate(X_test, y_test, verbose=1)

print("Test Score:", score[0])

print("Test Accuracy:", score[1])

Here is the output:

WARNING:tensorflow:Falling back from v2 loop because of error: Failed to find data adapter that can handle input: <class 'pandas.core.frame.DataFrame'>, <class 'NoneType'>

346/346 [==============================] - 0s 55us/sample - loss: 0.0605 - acc: 0.9740

Test Score: 0.06045335989359314

Test Accuracy: 0.9739884

Our model achieves an accuracy of about 97.4% on the test set. Though it is slightly lower than the training accuracy of 100%, it is still very good given that we chose the number of layers and nodes arbitrarily. You can add more layers to the model with more nodes and see if you can get better results on the validation and test sets.

Regression with TensorFlow 2.0

In a regression problem, the goal is to predict a continuous value. In this section, you will see how to solve a regression problem with TensorFlow 2.0.

The Dataset

The dataset for this problem can be downloaded freely from this link. Download the CSV file.

The following script imports the dataset. Do not forget to change the path to your own CSV datafile.

petrol_cons = pd.read_csv(r'/content/drive/My Drive/datasets/petrol_consumption.csv')

Let's print the first five rows of the dataset via the head() function:

petrol_cons.head()

Output:

You can see that there are five columns in the dataset. The regression model will be trained on the first four columns, i.e. Petrol_tax, Average_income, Paved_Highways, and Population_Driver_License(%). The value for the last column i.e. Petrol_Consumption will be predicted. As you can see, the output column holds no discrete classes; the predicted value can be any continuous value.

Data Preprocessing

In the data preprocessing step we will simply split the data into features and labels, followed by dividing the data into test and training sets. Finally the data will be normalized. For regression problems in general, and for regression problems with deep learning, it is highly recommended that you normalize your dataset. Finally, since all the columns are numeric, here we do not need to perform one-hot encoding of the columns.

X = petrol_cons.iloc[:, 0:4].values

y = petrol_cons.iloc[:, 4].values

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

In the above script, the feature set X contains the first four columns of the dataset and the label set y contains only the 5th column. Next, the dataset is divided into training and test sets via the train_test_split function of the sklearn.model_selection module. The value of the test_size parameter is 0.2, which means that the test set will contain 20% of the original data and the training set will consist of the remaining 80%. Finally, the StandardScaler class from the sklearn.preprocessing module is used to scale the data: the scaler is fitted on the training set only and then applied unchanged to the test set.
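The scaling step boils down to subtracting the training mean and dividing by the training standard deviation. Here is a minimal pure-Python sketch of that idea for a single column; the helper names and the numbers are made up, and it uses the population standard deviation, which is what StandardScaler uses.

```python
from statistics import mean, pstdev


def fit_scaler(column):
    """Learn the mean and population standard deviation from training data."""
    return mean(column), pstdev(column)


def transform(column, mu, sigma):
    """Standardize values using previously learned statistics."""
    return [(x - mu) / sigma for x in column]


train = [7.0, 8.0, 9.0]  # made-up training values for one feature
test = [10.0]            # made-up test value

mu, sigma = fit_scaler(train)                # statistics come from training data only
train_scaled = transform(train, mu, sigma)
test_scaled = transform(test, mu, sigma)     # reuse them for the test data

print(train_scaled)
print(test_scaled)
```

Reusing the training statistics for the test set keeps information about the test data from leaking into preprocessing.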

Model Training

The next step is to train our model. This process is quite similar to training the classification model. The only changes are the loss function and the number of nodes in the output dense layer. Since we are now predicting a single continuous value, the output layer will have only 1 node.

input_layer = Input(shape=(X.shape[1],))

dense_layer_1 = Dense(100, activation='relu')(input_layer)

dense_layer_2 = Dense(50, activation='relu')(dense_layer_1)

dense_layer_3 = Dense(25, activation='relu')(dense_layer_2)

output = Dense(1)(dense_layer_3)

model = Model(inputs=input_layer, outputs=output)

model.compile(loss="mean_squared_error" , optimizer="adam", metrics=["mean_squared_error"])

Our model consists of four dense layers with 100, 50, 25, and 1 node(s), respectively. For regression problems, one of the most commonly used loss functions is mean_squared_error. Calling model.summary() prints the following:

Model: "model_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_4 (InputLayer) [(None, 4)] 0

_________________________________________________________________

dense_10 (Dense) (None, 100) 500

_________________________________________________________________

dense_11 (Dense) (None, 50) 5050

_________________________________________________________________

dense_12 (Dense) (None, 25) 1275

_________________________________________________________________

dense_13 (Dense) (None, 1) 26

=================================================================

Total params: 6,851

Trainable params: 6,851

Non-trainable params: 0

Finally, we can train the model with the following script:

history = model.fit(X_train, y_train, batch_size=2, epochs=100, verbose=1, validation_split=0.2)

Here is the result from the last 5 training epochs:

Epoch 96/100

30/30 [==============================] - 0s 2ms/sample - loss: 510.3316 - mean_squared_error: 510.3317 - val_loss: 10383.5234 - val_mean_squared_error: 10383.5234

Epoch 97/100

30/30 [==============================] - 0s 2ms/sample - loss: 523.3454 - mean_squared_error: 523.3453 - val_loss: 10488.3036 - val_mean_squared_error: 10488.3037

Epoch 98/100

30/30 [==============================] - 0s 2ms/sample - loss: 514.8281 - mean_squared_error: 514.8281 - val_loss: 10379.5087 - val_mean_squared_error: 10379.5088

Epoch 99/100

30/30 [==============================] - 0s 2ms/sample - loss: 504.0919 - mean_squared_error: 504.0919 - val_loss: 10301.3304 - val_mean_squared_error: 10301.3311

Epoch 100/100

30/30 [==============================] - 0s 2ms/sample - loss: 532.7809 - mean_squared_error: 532.7809 - val_loss: 10325.1699 - val_mean_squared_error: 10325.1709

To evaluate the performance of a regression model on the test set, one of the most commonly used metrics is root mean squared error. We can find the mean squared error between the predicted and actual values via the mean_squared_error function of the sklearn.metrics module. We can then take the square root of the resulting mean squared error. Look at the following script:

from sklearn.metrics import mean_squared_error

from math import sqrt

pred_train = model.predict(X_train)

print(np.sqrt(mean_squared_error(y_train,pred_train)))

pred = model.predict(X_test)

print(np.sqrt(mean_squared_error(y_test,pred)))

The output shows the root mean squared error for both the training and test sets. The model performs better on the training set, since its root mean squared error is lower there, so our model is overfitting. The reason is simple: we only had 48 records in the dataset. Try to train regression models with a larger dataset to get better results.

50.43599665058207

84.31961060849562
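For reference, root mean squared error is simple enough to compute by hand. The sketch below is a pure-Python version with a helper name and sample numbers chosen for illustration; it is not meant to replace the sklearn call above.

```python
from math import sqrt


def rmse(actual, predicted):
    """Square root of the average squared difference between two sequences."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return sqrt(sum(squared_errors) / len(squared_errors))


# Made-up values: only the last prediction is off, by 3
print(rmse([3.0, 5.0, 7.0], [3.0, 5.0, 10.0]))
```

Because the errors are squared before averaging, a single large miss dominates the score, and the result is in the same units as the target column.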

Conclusion

TensorFlow 2.0 is the latest version of Google's TensorFlow library for deep learning. This article briefly covers how to create classification and regression models with TensorFlow 2.0. To have a hands on experience, I would suggest that you practice the examples given in this article and try to create simple regression and classification models with TensorFlow 2.0 using some other datasets.

Real Python: Beautiful Soup: Build a Web Scraper With Python

The incredible amount of data on the Internet is a rich resource for any field of research or personal interest. To effectively harvest that data, you’ll need to become skilled at web scraping. The Python libraries requests and Beautiful Soup are powerful tools for the job. If you like to learn with hands-on examples and you have a basic understanding of Python and HTML, then this tutorial is for you.

In this tutorial, you’ll learn how to:

- Use requests and Beautiful Soup for scraping and parsing data from the Web

- Walk through a web scraping pipeline from start to finish

- Build a script that fetches job offers from the Web and displays relevant information in your console

This is a powerful project because you’ll be able to apply the same process and the same tools to any static website out there on the World Wide Web. You can download the source code for the project and all examples in this tutorial by clicking on the link below:

Get Sample Code: Click here to get the sample code you'll use for the project and examples in this tutorial.

Let’s get started!

What Is Web Scraping?

Web scraping is the process of gathering information from the Internet. Even copy-pasting the lyrics of your favorite song is a form of web scraping! However, the words “web scraping” usually refer to a process that involves automation. Some websites don’t like it when automatic scrapers gather their data, while others don’t mind.

If you’re scraping a page respectfully for educational purposes, then you’re unlikely to have any problems. Still, it’s a good idea to do some research on your own and make sure that you’re not violating any Terms of Service before you start a large-scale project. To learn more about the legal aspects of web scraping, check out Legal Perspectives on Scraping Data From The Modern Web.

Why Scrape the Web?

Say you’re a surfer (both online and in real life) and you’re looking for employment. However, you’re not looking for just any job. With a surfer’s mindset, you’re waiting for the perfect opportunity to roll your way!

There’s a job site that you like that offers exactly the kinds of jobs you’re looking for. Unfortunately, a new position only pops up once in a blue moon. You think about checking up on it every day, but that doesn’t sound like the most fun and productive way to spend your time.

Thankfully, the world offers other ways to apply that surfer’s mindset! Instead of looking at the job site every day, you can use Python to help automate the repetitive parts of your job search. Automated web scraping can be a solution to speed up the data collection process. You write your code once and it will get the information you want many times and from many pages.

In contrast, when you try to get the information you want manually, you might spend a lot of time clicking, scrolling, and searching. This is especially true if you need large amounts of data from websites that are regularly updated with new content. Manual web scraping can take a lot of time and repetition.

There’s so much information on the Web, and new information is constantly added. Something among all that data is likely of interest to you, and much of it is just out there for the taking. Whether you’re actually on the job hunt, gathering data to support your grassroots organization, or are finally looking to get all the lyrics from your favorite artist downloaded to your computer, automated web scraping can help you accomplish your goals.

Challenges of Web Scraping

The Web has grown organically out of many sources. It combines a ton of different technologies, styles, and personalities, and it continues to grow to this day. In other words, the Web is kind of a hot mess! This can lead to a few challenges you’ll see when you try web scraping.

One challenge is variety. Every website is different. While you’ll encounter general structures that tend to repeat themselves, each website is unique and will need its own personal treatment if you want to extract the information that’s relevant to you.

Another challenge is durability. Websites constantly change. Say you’ve built a shiny new web scraper that automatically cherry-picks precisely what you want from your resource of interest. The first time you run your script, it works flawlessly. But when you run the same script only a short while later, you run into a discouraging and lengthy stack of tracebacks!

This is a realistic scenario, as many websites are in active development. Once the site’s structure has changed, your scraper might not be able to navigate the sitemap correctly or find the relevant information. The good news is that many changes to websites are small and incremental, so you’ll likely be able to update your scraper with only minimal adjustments.

However, keep in mind that because the internet is dynamic, the scrapers you’ll build will probably require constant maintenance. You can set up continuous integration to run scraping tests periodically to ensure that your main script doesn’t break without your knowledge.
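One way to catch structural changes early is a small check that runs on a schedule and reports which of the markers your scraper relies on have disappeared. The sketch below is only an illustration: it uses the standard library’s html.parser instead of any particular testing or CI tool, and the id and class names are placeholders for whatever your own scraper depends on.

```python
from html.parser import HTMLParser


class MarkerCollector(HTMLParser):
    """Collect every id and class value that appears in an HTML document."""

    def __init__(self):
        super().__init__()
        self.ids = set()
        self.classes = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value is None:
                continue
            if name == "id":
                self.ids.add(value)
            elif name == "class":
                self.classes.update(value.split())


def missing_markers(html, required_ids=(), required_classes=()):
    """Return the required ids and classes that the page no longer contains."""
    collector = MarkerCollector()
    collector.feed(html)
    missing = [f"#{i}" for i in required_ids if i not in collector.ids]
    missing += [f".{c}" for c in required_classes if c not in collector.classes]
    return missing


# Stand-in for a freshly downloaded page (hypothetical markup).
sample_page = '<div id="ResultsContainer"><section class="card-content"></section></div>'

print(missing_markers(sample_page, ["ResultsContainer"], ["card-content", "title"]))
```

An empty list means that every marker is still present. Anything else names the parts of the page that changed and that your scraper needs to catch up with.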

APIs: An Alternative to Web Scraping

Some website providers offer Application Programming Interfaces (APIs) that allow you to access their data in a predefined manner. With APIs, you can avoid parsing HTML and instead access the data directly using formats like JSON and XML. HTML is primarily a way to visually present content to users.

When you use an API, the process is generally more stable than gathering the data through web scraping. That’s because APIs are made to be consumed by programs, rather than by human eyes. If the design of a website changes, then it doesn’t mean that the structure of the API has changed.
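To make the contrast concrete, here is a minimal sketch of what consuming an API response can look like. The JSON body and its field names are invented for illustration and don’t come from any real job API.

```python
import json

# Hypothetical API response body; real job APIs define their own fields.
response_body = '{"jobs": [{"title": "Python Developer", "location": "Woodlands, WA"}]}'

data = json.loads(response_body)
for job in data["jobs"]:
    print(job["title"], "-", job["location"])
```

The data arrives already structured, so there is no markup to pick apart before you can use it.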

However, APIs can change as well. Both the challenges of variety and durability apply to APIs just as they do to websites. Additionally, it’s much harder to inspect the structure of an API by yourself if the provided documentation is lacking in quality.

The approach and tools you need to gather information using APIs are outside the scope of this tutorial. To learn more about it, check out API Integration in Python.

Scraping the Monster Job Site

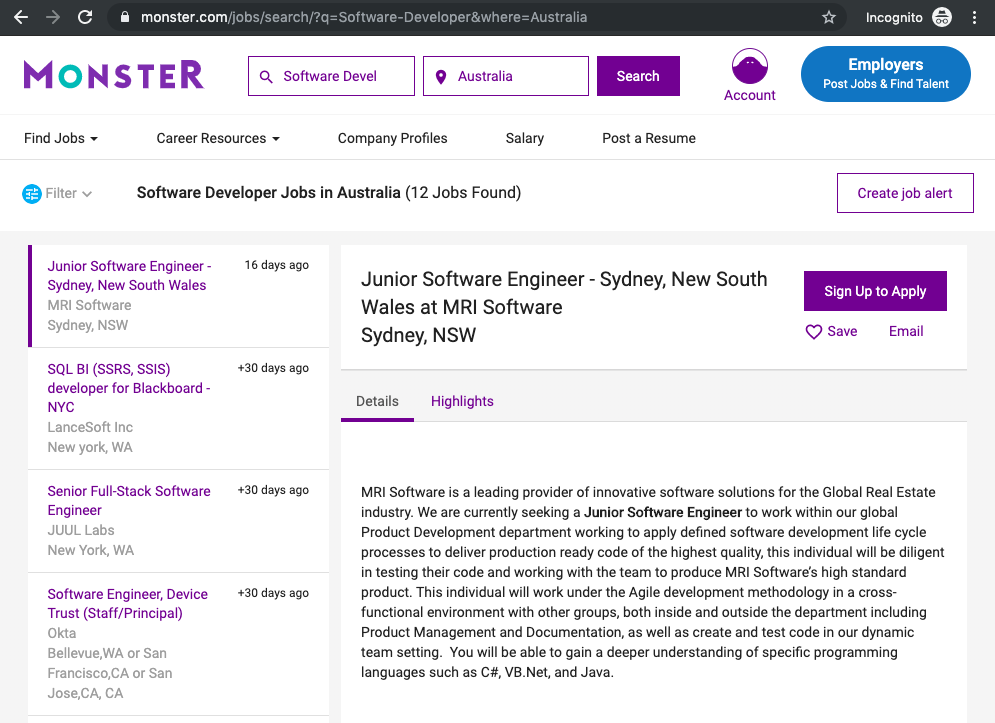

In this tutorial, you’ll build a web scraper that fetches Software Developer job listings from the Monster job aggregator site. Your web scraper will parse the HTML to pick out the relevant pieces of information and filter that content for specific words.

You can scrape any site on the Internet that you can look at, but the difficulty of doing so depends on the site. This tutorial offers you an introduction to web scraping to help you understand the overall process. Then, you can apply this same process for every website you’ll want to scrape.

Part 1: Inspect Your Data Source

The first step is to head over to the site you want to scrape using your favorite browser. You’ll need to understand the site structure to extract the information you’re interested in.

Explore the Website

Click through the site and interact with it just like any normal user would. For example, you could search for Software Developer jobs in Australia using the site’s native search interface:

You can see that there’s a list of jobs returned on the left side, and there are more detailed descriptions about the selected job on the right side. When you click on any of the jobs on the left, the content on the right changes. You can also see that when you interact with the website, the URL in your browser’s address bar also changes.

Decipher the Information in URLs

A lot of information can be encoded in a URL. Your web scraping journey will be much easier if you first become familiar with how URLs work and what they’re made of. Try to pick apart the URL of the site you’re currently on:

https://www.monster.com/jobs/search/?q=Software-Developer&where=Australia

You can deconstruct the above URL into two main parts:

- The base URL represents the path to the search functionality of the website. In the example above, the base URL is https://www.monster.com/jobs/search/.

- The query parameters represent additional values that can be declared on the page. In the example above, the query parameters are ?q=Software-Developer&where=Australia.

Any job you’ll search for on this website will use the same base URL. However, the query parameters will change depending on what you’re looking for. You can think of them as query strings that get sent to the database to retrieve specific records.

Query parameters generally consist of three things:

- Start: The beginning of the query parameters is denoted by a question mark (?).

- Information: The pieces of information constituting one query parameter are encoded in key-value pairs, where related keys and values are joined together by an equals sign (key=value).

- Separator: Every URL can have multiple query parameters, which are separated from each other by an ampersand (&).

Equipped with this information, you can pick apart the URL’s query parameters into two key-value pairs:

- q=Software-Developer selects the type of job you’re looking for.

- where=Australia selects the location you’re looking for.
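If you’d rather let Python do the deconstruction, the standard library’s urllib.parse module can split a URL along the same lines. This is a small sketch using the example URL from above:

```python
from urllib.parse import urlsplit, parse_qs

url = "https://www.monster.com/jobs/search/?q=Software-Developer&where=Australia"

parts = urlsplit(url)
base_url = f"{parts.scheme}://{parts.netloc}{parts.path}"
params = parse_qs(parts.query)  # maps each key to a list of values

print(base_url)
print(params)
```

parse_qs() stores a list for every key because a query string is allowed to repeat the same key more than once.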

Try to change the search parameters and observe how that affects your URL. Go ahead and enter new values in the search bar up top:

Change these values to observe the changes in the URL.


Next, try to change the values directly in your URL. See what happens when you paste the following URL into your browser’s address bar:

https://www.monster.com/jobs/search/?q=Programmer&where=New-York

You’ll notice that changes in the search box of the site are directly reflected in the URL’s query parameters and vice versa. If you change either of them, then you’ll see different results on the website. When you explore URLs, you can get information on how to retrieve data from the website’s server.
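The same idea works in the other direction: you can assemble a search URL from its parts. The helper below is a hypothetical convenience function, not part of the tutorial’s sample code, and it relies on urlencode() from the standard library to join and escape the key-value pairs.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.monster.com/jobs/search/"


def build_search_url(query, location):
    """Combine the base URL with URL-encoded query parameters."""
    return f"{BASE_URL}?{urlencode({'q': query, 'where': location})}"


print(build_search_url("Programmer", "New-York"))
```

Building URLs this way keeps the base URL in one place and makes it easy to try different searches from your script.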

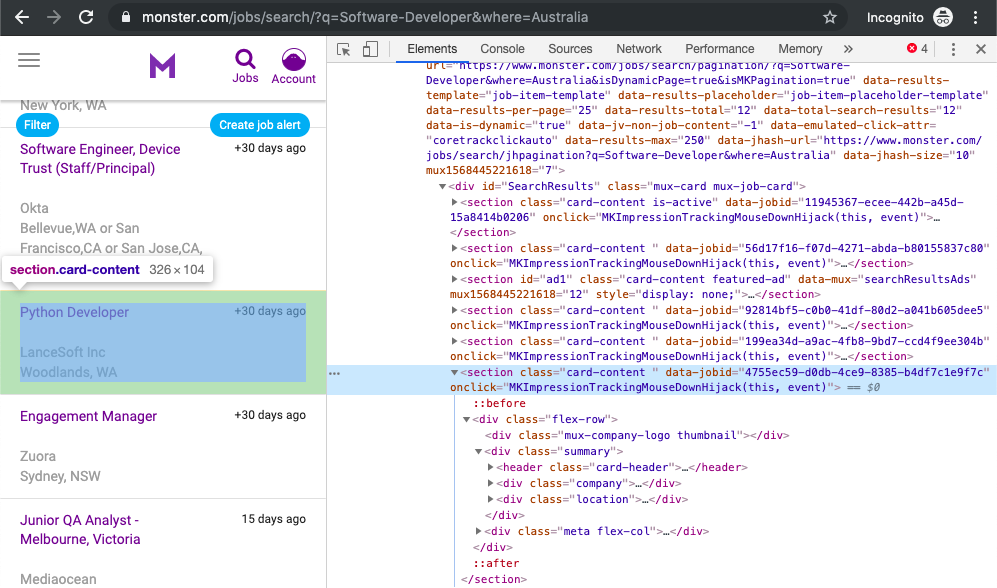

Inspect the Site Using Developer Tools

Next, you’ll want to learn more about how the data is structured for display. You’ll need to understand the page structure to pick what you want from the HTML response that you’ll collect in one of the upcoming steps.

Developer tools can help you understand the structure of a website. All modern browsers come with developer tools installed. In this tutorial, you’ll see how to work with the developer tools in Chrome. The process will be very similar to other modern browsers.

In Chrome, you can open up the developer tools through the menu View → Developer → Developer Tools. You can also access them by right-clicking on the page and selecting the Inspect option, or by using a keyboard shortcut.

Developer tools allow you to interactively explore the site’s DOM to better understand the source that you’re working with. To dig into your page’s DOM, select the Elements tab in developer tools. You’ll see a structure with clickable HTML elements. You can expand, collapse, and even edit elements right in your browser:

The HTML on the right represents the structure of the page you can see on the left.


You can think of the text displayed in your browser as the HTML structure of that page. If you’re interested, then you can read more about the difference between the DOM and HTML on CSS-TRICKS.

When you right-click elements on the page, you can select Inspect to zoom to their location in the DOM. You can also hover over the HTML text on your right and see the corresponding elements light up on the page.

Task: Find a single job posting. What HTML element is it wrapped in, and what other HTML elements does it contain?

Play around and explore! The more you get to know the page you’re working with, the easier it will be to scrape it. However, don’t get too overwhelmed with all that HTML text. You’ll use the power of programming to step through this maze and cherry-pick only the interesting parts with Beautiful Soup.

Part 2: Scrape HTML Content From a Page

Now that you have an idea of what you’re working with, it’s time to get started using Python. First, you’ll want to get the site’s HTML code into your Python script so that you can interact with it. For this task, you’ll use Python’s requests library. Type the following in your terminal to install it:

$ pip3 install requests

Then open up a new file in your favorite text editor. All you need to retrieve the HTML are a few lines of code:

import requests

URL = 'https://www.monster.com/jobs/search/?q=Software-Developer&where=Australia'
page = requests.get(URL)

This code performs an HTTP request to the given URL. It retrieves the HTML data that the server sends back and stores that data in a Python object.

If you take a look at the downloaded content, then you’ll notice that it looks very similar to the HTML you were inspecting earlier with developer tools. To improve the structure of how the HTML is displayed in your console output, you can print the object’s .content attribute with pprint().

Static Websites

The website you’re scraping in this tutorial serves static HTML content. In this scenario, the server that hosts the site sends back HTML documents that already contain all the data you’ll get to see as a user.

When you inspected the page with developer tools earlier on, you discovered that a job posting consists of the following long and messy-looking HTML:

<section class="card-content" data-jobid="4755ec59-d0db-4ce9-8385-b4df7c1e9f7c" onclick="MKImpressionTrackingMouseDownHijack(this, event)"><div class="flex-row"><div class="mux-company-logo thumbnail"></div><div class="summary"><header class="card-header"><h2 class="title"><a data-bypass="true" data-m_impr_a_placement_id="JSR2CW" data-m_impr_j_cid="4" data-m_impr_j_coc="" data-m_impr_j_jawsid="371676273" data-m_impr_j_jobid="0" data-m_impr_j_jpm="2" data-m_impr_j_jpt="3" data-m_impr_j_lat="30.1882" data-m_impr_j_lid="619" data-m_impr_j_long="-95.6732" data-m_impr_j_occid="11838" data-m_impr_j_p="3" data-m_impr_j_postingid="4755ec59-d0db-4ce9-8385-b4df7c1e9f7c" data-m_impr_j_pvc="4496dab8-a60c-4f02-a2d1-6213320e7213" data-m_impr_s_t="t" data-m_impr_uuid="0b620778-73c7-4550-9db5-df4efad23538" href="https://job-openings.monster.com/python-developer-woodlands-wa-us-lancesoft-inc/4755ec59-d0db-4ce9-8385-b4df7c1e9f7c" onclick="clickJobTitle('plid=619&pcid=4&poccid=11838','Software Developer',''); clickJobTitleSiteCat('{"events.event48":"true","eVar25":"Python Developer","eVar66":"Monster","eVar67":"JSR2CW","eVar26":"_LanceSoft Inc","eVar31":"Woodlands_WA_","prop24":"2019-07-02T12:00","eVar53":"1500127001001","eVar50":"Aggregated","eVar74":"regular"}')">Python Developer
</a></h2></header><div class="company"><span class="name">LanceSoft Inc</span><ul class="list-inline"></ul></div><div class="location"><span class="name">
Woodlands, WA
</span></div></div><div class="meta flex-col"><time datetime="2017-05-26T12:00">2 days ago</time><span class="mux-tooltip applied-only" data-mux="tooltip" title="Applied"><i aria-hidden="true" class="icon icon-applied"></i><span class="sr-only">Applied</span></span><span class="mux-tooltip saved-only" data-mux="tooltip" title="Saved"><i aria-hidden="true" class="icon icon-saved"></i><span class="sr-only">Saved</span></span></div></div></section>

It can be difficult to wrap your head around such a long block of HTML code. To make it easier to read, you can use an HTML formatter to automatically clean it up a little more. Good readability helps you better understand the structure of any code block. While it may or may not help to improve the formatting of the HTML, it’s always worth a try.

Note: Keep in mind that every website will look different. That’s why it’s necessary to inspect and understand the structure of the site you’re currently working with before moving forward.

The HTML above definitely has a few confusing parts in it. For example, you can scroll to the right to see the large number of attributes that the <a> element has. Luckily, the class names on the elements that you’re interested in are relatively straightforward:

- class="title": the title of the job posting

- class="company": the company that offers the position

- class="location": the location where you’d be working

In case you ever get lost in a large pile of HTML, remember that you can always go back to your browser and use developer tools to further explore the HTML structure interactively.

By now, you’ve successfully harnessed the power and user-friendly design of Python’s requests library. With only a few lines of code, you managed to scrape the static HTML content from the web and make it available for further processing.

However, there are a few more challenging situations you might encounter when you’re scraping websites. Before you begin using Beautiful Soup to pick the relevant information from the HTML that you just scraped, take a quick look at two of these situations.

Hidden Websites

Some pages contain information that’s hidden behind a login. That means you’ll need an account to be able to see (and scrape) anything from the page. The process to make an HTTP request from your Python script is different than how you access a page from your browser. That means that just because you can log in to the page through your browser, that doesn’t mean you’ll be able to scrape it with your Python script.

However, there are some advanced techniques that you can use with requests to access the content behind logins. These techniques will allow you to log in to websites while making the HTTP request from within your script.

Dynamic Websites

Static sites are easier to work with because the server sends you an HTML page that already contains all the information as a response. You can parse an HTML response with Beautiful Soup and begin to pick out the relevant data.

On the other hand, with a dynamic website the server might not send back any HTML at all. Instead, you’ll receive JavaScript code as a response. This will look completely different from what you saw when you inspected the page with your browser’s developer tools.

Note: To offload work from the server to the clients’ machines, many modern websites avoid crunching numbers on their servers whenever possible. Instead, they’ll send JavaScript code that your browser will execute locally to produce the desired HTML.

As mentioned before, what happens in the browser is not related to what happens in your script. Your browser will diligently execute the JavaScript code it receives back from a server and create the DOM and HTML for you locally. However, doing a request to a dynamic website in your Python script will not provide you with the HTML page content.

When you use requests, you’ll only receive what the server sends back. In the case of a dynamic website, you’ll end up with some JavaScript code, which you won’t be able to parse using Beautiful Soup. The only way to go from the JavaScript code to the content you’re interested in is to execute the code, just like your browser does. The requests library can’t do that for you, but there are other solutions that can.

For example, requests-html is a project created by the author of the requests library that allows you to easily render JavaScript using syntax that’s similar to the syntax in requests. It also includes capabilities for parsing the data by using Beautiful Soup under the hood.

Note: Another popular choice for scraping dynamic content is Selenium. You can think of Selenium as a slimmed-down browser that executes the JavaScript code for you before passing on the rendered HTML response to your script.

You won’t go deeper into scraping dynamically-generated content in this tutorial. For now, it’s enough for you to remember that you’ll need to look into the above-mentioned options if the page you’re interested in is generated in your browser dynamically.

Part 3: Parse HTML Code With Beautiful Soup

You’ve successfully scraped some HTML from the Internet, but when you look at it now, it just seems like a huge mess. There are tons of HTML elements here and there, thousands of attributes scattered around—and wasn’t there some JavaScript mixed in as well? It’s time to parse this lengthy code response with Beautiful Soup to make it more accessible and pick out the data that you’re interested in.

Beautiful Soup is a Python library for parsing structured data. It allows you to interact with HTML in a similar way to how you would interact with a web page using developer tools. Beautiful Soup exposes a couple of intuitive functions you can use to explore the HTML you received. To get started, use your terminal to install the Beautiful Soup library:

$ pip3 install beautifulsoup4

Then, import the library and create a Beautiful Soup object:

import requests
from bs4 import BeautifulSoup

URL = 'https://www.monster.com/jobs/search/?q=Software-Developer&where=Australia'
page = requests.get(URL)

soup = BeautifulSoup(page.content, 'html.parser')

When you add the two new lines of code (the bs4 import and the final line), you’re creating a Beautiful Soup object that takes the HTML content you scraped earlier as its input. When you instantiate the object, you also instruct Beautiful Soup to use the appropriate parser.

Find Elements by ID

In an HTML web page, every element can have an id attribute assigned. As the name already suggests, that id attribute makes the element uniquely identifiable on the page. You can begin to parse your page by selecting a specific element by its ID.

Switch back to developer tools and identify the HTML object that contains all of the job postings. Explore by hovering over parts of the page and using right-click to Inspect.

Note: Keep in mind that it’s helpful to periodically switch back to your browser and interactively explore the page using developer tools. This helps you learn how to find the exact elements you’re looking for.

At the time of this writing, the element you’re looking for is a <div> with an id attribute that has the value "ResultsContainer". It has a couple of other attributes as well, but below is the gist of what you’re looking for:

<div id="ResultsContainer">
    <!-- all the job listings -->
</div>

Beautiful Soup allows you to find that specific element easily by its ID:

results = soup.find(id='ResultsContainer')

For easier viewing, you can .prettify() any Beautiful Soup object when you print it out. If you call this method on the results variable that you just assigned above, then you should see all the HTML contained within the <div>:

print(results.prettify())

When you use the element’s ID, you’re able to pick one element out from among the rest of the HTML. This allows you to work with only this specific part of the page’s HTML. It looks like the soup just got a little thinner! However, it’s still quite dense.

Find Elements by HTML Class Name

You’ve seen that every job posting is wrapped in a <section> element with the class card-content. Now you can work with your new Beautiful Soup object called results and select only the job postings. These are, after all, the parts of the HTML that you’re interested in! You can do this in one line of code:

job_elems = results.find_all('section', class_='card-content')

Here, you call .find_all() on a Beautiful Soup object, which returns an iterable containing all the HTML for all the job listings displayed on that page.

Take a look at all of them:

for job_elem in job_elems:
    print(job_elem, end='\n'*2)

That’s already pretty neat, but there’s still a lot of HTML! You’ve seen earlier that your page has descriptive class names on some elements. Let’s pick out only those:

for job_elem in job_elems:
    # Each job_elem is a new BeautifulSoup object.
    # You can use the same methods on it as you did before.
    title_elem = job_elem.find('h2', class_='title')
    company_elem = job_elem.find('div', class_='company')
    location_elem = job_elem.find('div', class_='location')
    print(title_elem)
    print(company_elem)
    print(location_elem)
    print()

Great! You’re getting closer and closer to the data you’re actually interested in. Still, there’s a lot going on with all those HTML tags and attributes floating around:

<h2 class="title"><a data-bypass="true" data-m_impr_a_placement_id="JSR2CW" data-m_impr_j_cid="4" data-m_impr_j_coc="" data-m_impr_j_jawsid="371676273" data-m_impr_j_jobid="0" data-m_impr_j_jpm="2" data-m_impr_j_jpt="3" data-m_impr_j_lat="30.1882" data-m_impr_j_lid="619" data-m_impr_j_long="-95.6732" data-m_impr_j_occid="11838" data-m_impr_j_p="3" data-m_impr_j_postingid="4755ec59-d0db-4ce9-8385-b4df7c1e9f7c" data-m_impr_j_pvc="4496dab8-a60c-4f02-a2d1-6213320e7213" data-m_impr_s_t="t" data-m_impr_uuid="0b620778-73c7-4550-9db5-df4efad23538" href="https://job-openings.monster.com/python-developer-woodlands-wa-us-lancesoft-inc/4755ec59-d0db-4ce9-8385-b4df7c1e9f7c" onclick="clickJobTitle('plid=619&pcid=4&poccid=11838','Software Developer',''); clickJobTitleSiteCat('{"events.event48":"true","eVar25":"Python Developer","eVar66":"Monster","eVar67":"JSR2CW","eVar26":"_LanceSoft Inc","eVar31":"Woodlands_WA_","prop24":"2019-07-02T12:00","eVar53":"1500127001001","eVar50":"Aggregated","eVar74":"regular"}')">Python Developer
</a></h2>
<div class="company"><span class="name">LanceSoft Inc</span><ul class="list-inline"></ul></div>
<div class="location"><span class="name">
Woodlands, WA
</span></div>

You’ll see how to narrow down this output in the next section.

Extract Text From HTML Elements

For now, you only want to see the title, company, and location of each job posting. And behold! Beautiful Soup has got you covered. You can add .text to a Beautiful Soup object to return only the text content of the HTML elements that the object contains:

for job_elem in job_elems:
    title_elem = job_elem.find('h2', class_='title')
    company_elem = job_elem.find('div', class_='company')
    location_elem = job_elem.find('div', class_='location')
    print(title_elem.text)
    print(company_elem.text)
    print(location_elem.text)
    print()

Run the above code snippet and you’ll see the text content displayed. However, you’ll also get a lot of whitespace. Since you’re now working with Python strings, you can .strip() the superfluous whitespace. You can also apply any other familiar Python string methods to further clean up your text.
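As a quick illustration of that cleanup, here is a small sketch that works on a made-up string shaped like the text you might get back from .text. It compares .strip() with a split-and-join approach that also collapses whitespace inside the string.

```python
# Text as it might come back from .text, padded with newlines and spaces (made-up sample).
raw_location = "\n\n  Woodlands,   WA \n\n"

stripped = raw_location.strip()              # removes leading and trailing whitespace
normalized = " ".join(raw_location.split())  # also collapses inner runs of whitespace

print(repr(stripped))
print(repr(normalized))
```

Which of the two you want depends on how messy the text inside the element is. For the job titles in this tutorial, .strip() is enough.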

Note: The web is messy and you can’t rely on a page structure to be consistent throughout. Therefore, you’ll more often than not run into errors while parsing HTML.

When you run the above code, you might encounter an AttributeError:

AttributeError: 'NoneType' object has no attribute 'text'

If that’s the case, then take a step back and inspect your previous results. Were there any items with a value of None? You might have noticed that the structure of the page is not entirely uniform. There could be an advertisement in there that displays in a different way than the normal job postings, which may return different results. For this tutorial, you can safely disregard the problematic element and skip over it while parsing the HTML:

for job_elem in job_elems:
    title_elem = job_elem.find('h2', class_='title')
    company_elem = job_elem.find('div', class_='company')
    location_elem = job_elem.find('div', class_='location')
    if None in (title_elem, company_elem, location_elem):
        continue
    print(title_elem.text.strip())
    print(company_elem.text.strip())
    print(location_elem.text.strip())
    print()

Feel free to explore why one of the elements is returned as None. You can use the conditional statement you wrote above to print() out and inspect the relevant element in more detail. What do you think is going on there?
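If you want a starting point for that investigation, the following sketch shows one way to report which lookups came back empty before skipping an element. It runs on a small, invented HTML snippet so that you can try it without hitting the site. The ad-placeholder class and the example.com link are assumptions made up for the demonstration.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: one regular posting and one ad-like card
# that lacks the children the scraper expects.
html = """
<div id="ResultsContainer">
  <section class="card-content">
    <h2 class="title"><a href="https://example.com/job/1">Python Developer</a></h2>
    <div class="company"><span class="name">LanceSoft Inc</span></div>
    <div class="location"><span class="name">Woodlands, WA</span></div>
  </section>
  <section class="card-content ad-placeholder"></section>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
skipped = []
for job_elem in soup.find_all("section", class_="card-content"):
    found = {
        "title": job_elem.find("h2", class_="title"),
        "company": job_elem.find("div", class_="company"),
        "location": job_elem.find("div", class_="location"),
    }
    missing = [name for name, elem in found.items() if elem is None]
    if missing:
        # Record what was missing and show the raw element for inspection.
        skipped.append(missing)
        print(f"Skipping element, missing {missing}:")
        print(job_elem.prettify())
```

Printing the skipped element next to the names of the missing pieces usually makes it obvious whether you’re looking at an ad, a placeholder, or a real posting with a different layout.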

After you complete the above steps, try running your script again. The results finally look much better:

Python Developer

LanceSoft Inc

Woodlands, WA

Senior Engagement Manager

Zuora

Sydney, NSW

Find Elements by Class Name and Text Content

By now, you’ve cleaned up the list of jobs that you saw on the website. While that’s pretty neat already, you can make your script more useful. However, not all of the job listings seem to be developer jobs that you’d be interested in as a Python developer. So instead of printing out all of the jobs from the page, you’ll first filter them for some keywords.

You know that job titles in the page are kept within <h2> elements. To filter only for specific ones, you can use the string argument:

python_jobs = results.find_all('h2', string='Python Developer')

This code finds all <h2> elements where the contained string matches 'Python Developer' exactly. Note that you’re directly calling the method on your first results variable. If you go ahead and print() the output of the above code snippet to your console, then you might be disappointed because it will probably be empty:

[]

There was definitely a job with that title in the search results, so why is it not showing up? When you use string= like you did above, your program looks for exactly that string. Any differences in capitalization or whitespace will prevent the element from matching. In the next section, you’ll find a way to make the string more general.

Pass a Function to a Beautiful Soup Method

In addition to strings, you can often pass functions as arguments to Beautiful Soup methods. You can change the previous line of code to use a function instead:

python_jobs = results.find_all('h2', string=lambda text: 'python' in text.lower())

Now you’re passing an anonymous function to the string= argument. The lambda function looks at the text of each <h2> element, converts it to lowercase, and checks whether the substring 'python' is found anywhere in there. Now you’ve got a match:

>>> print(len(python_jobs))
1

Your program has found a match!

Note: In case you still don’t get a match, try adapting your search string. The job offers on this page are constantly changing and there might not be a job listed that includes the substring 'python' in its title at the time that you’re working through this tutorial.

The process of finding specific elements depending on their text content is a powerful way to filter your HTML response for the information that you’re looking for. Beautiful Soup allows you to use either exact strings or functions as arguments for filtering text in Beautiful Soup objects.
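The string= argument also accepts a compiled regular expression, which gives you a third option next to exact strings and functions. The sketch below compares all three on a small, invented snippet that mimics the trailing newline in the real job titles. It also guards the function against None, because depending on your Beautiful Soup version the function may be called with None for elements that contain more than one child.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical markup that mimics the whitespace around the job titles.
html = """
<h2 class="title"><a href="https://example.com/job/1">Python Developer
</a></h2>
<h2 class="title"><a href="https://example.com/job/2">Senior Engagement Manager
</a></h2>
<h2 class="title"><span>Sponsored</span> <a href="https://example.com/ad">Ad</a></h2>
"""
soup = BeautifulSoup(html, "html.parser")

# Exact match: the text in the markup ends with a newline, so it differs.
exact = soup.find_all("h2", string="Python Developer")

# A compiled regular expression matches anywhere in the text, ignoring case.
by_regex = soup.find_all("h2", string=re.compile("python", re.IGNORECASE))

# A function that tolerates elements whose .string is None.
by_function = soup.find_all(
    "h2", string=lambda text: text is not None and "python" in text.lower()
)

print(len(exact), len(by_regex), len(by_function))
```

The regular expression keeps the call short, while the function leaves room for any extra logic you might want to add later.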

Extract Attributes From HTML Elements

At this point, your Python script already scrapes the site and filters its HTML for relevant job postings. Well done! However, one thing that’s still missing is the link to apply for a job.

While you were inspecting the page, you found that the link is part of the element that has the title HTML class. The current code strips away the entire link when accessing the .text attribute of its parent element. As you’ve seen before, .text only contains the visible text content of an HTML element. Tags and attributes are not part of that. To get the actual URL, you want to extract one of those attributes instead of discarding it.

Look at the list of filtered results python_jobs that you created above. The URL is contained in the href attribute of the nested <a> tag. Start by fetching the <a> element. Then, extract the value of its href attribute using square-bracket notation:

python_jobs = results.find_all('h2', string=lambda text: "python" in text.lower())

for p_job in python_jobs:
    link = p_job.find('a')['href']
    print(p_job.text.strip())
    print(f"Apply here: {link}\n")

The filtered results will only show links to job opportunities that include python in their title. You can use the same square-bracket notation to extract other HTML attributes as well. A common use case is to fetch the URL of a link, as you did above.
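Square-bracket notation raises a KeyError when the attribute is missing, and .find() returns None when there’s no matching tag at all. If you can’t be sure that every title contains a link, then a more defensive variant is to check the element first and read the attribute with .get(). Here’s a sketch on invented markup, where the example.com URL and the second title are made up for the demonstration:

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the second title has no link inside it.
html = """
<h2 class="title"><a href="https://example.com/job/1">Python Developer</a></h2>
<h2 class="title">Python Intern</h2>
"""
soup = BeautifulSoup(html, "html.parser")

links = []
for title_elem in soup.find_all("h2", class_="title"):
    link_elem = title_elem.find("a")
    # .get() returns None for a missing attribute, where ['href'] would raise KeyError.
    href = link_elem.get("href") if link_elem is not None else None
    links.append(href)
    print(title_elem.text.strip(), "->", href or "no link found")
```

This way, a single unusual posting doesn’t stop the whole run.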

Building the Job Search Tool

If you’ve written the code alongside this tutorial, then you can already run your script as-is. To wrap up your journey into web scraping, you could give the code a final makeover and create a command line interface app that looks for Software Developer jobs in any location you define.

You can check out a command line app version of the code you built in this tutorial at the link below:

Get Sample Code: Click here to get the sample code you'll use for the project and examples in this tutorial.

If you’re interested in learning how to adapt your script as a command line interface, then check out How to Build Command Line Interfaces in Python With argparse.
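To give you a rough idea of the shape such a tool could take, here is a minimal argparse sketch. It isn’t the sample code linked above: the option names, the default query, and the job_search.py file name are assumptions chosen for illustration, and the script only builds the search URL instead of fetching anything.

```python
import argparse
from urllib.parse import urlencode

BASE_URL = "https://www.monster.com/jobs/search/"


def build_parser():
    """Create the command line parser (option names are illustrative)."""
    parser = argparse.ArgumentParser(description="Search for jobs by location.")
    parser.add_argument("--query", default="Software-Developer",
                        help="job title to search for")
    parser.add_argument("--location", required=True,
                        help="where to look, for example Australia")
    return parser


def search_url(args):
    """Build the search URL from parsed arguments."""
    return f"{BASE_URL}?{urlencode({'q': args.query, 'where': args.location})}"


def main(argv=None):
    """Parse the arguments and print the resulting search URL."""
    args = build_parser().parse_args(argv)
    url = search_url(args)
    print(url)
    # From here, the script would fetch and parse the page as shown earlier.
    return url


# Simulates running: python job_search.py --location Australia
main(["--location", "Australia"])
```

Passing an explicit list to main() keeps the example easy to try in an interactive session. In a real script, you’d call main() without arguments so that argparse reads from the command line.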

Additional Practice

Below is a list of other job boards. These linked pages also return their search results as static HTML responses. To keep practicing your new skills, you can revisit the web scraping process using any or all of the following sites:

Go through this tutorial again from the top using one of these other sites. You’ll see that the structure of each website is different and that you’ll need to re-build the code in a slightly different way to fetch the data you want. This is a great way to practice the concepts that you just learned. While it might make you sweat every so often, your coding skills will be stronger for it!

During your second attempt, you can also explore additional features of Beautiful Soup. Use the documentation as your guidebook and inspiration. Additional practice will help you become more proficient at web scraping using Python, requests, and Beautiful Soup.

Conclusion

Beautiful Soup is packed with useful functionality to parse HTML data. It’s a trusted and helpful companion for your web scraping adventures. Its documentation is comprehensive and relatively user-friendly to get started with. You’ll find that Beautiful Soup will cater to most of your parsing needs, from navigating to advanced searching through the results.

In this tutorial, you’ve learned how to scrape data from the Web using Python, requests, and Beautiful Soup. You built a script that fetches job postings from the Internet and went through the full web scraping process from start to finish.

You learned how to:

- Inspect the HTML structure of your target site with your browser’s developer tools

- Gain insight into how to decipher the data encoded in URLs

- Download the page’s HTML content using Python’s requests library

- Parse the downloaded HTML with Beautiful Soup to extract relevant information

With this general pipeline in mind and powerful libraries in your toolkit, you can go out and see what other websites you can scrape! Have fun, and remember to always be respectful and use your programming skills responsibly.

You can download the source code for the sample script that you built in this tutorial by clicking on the link below:

Get Sample Code: Click here to get the sample code you'll use for the project and examples in this tutorial.


Martijn Faassen: Framework Patterns

A software framework is code that calls your (application) code. That's how we distinguish a framework from a library. Libraries have aspects of frameworks so there is a gray area.

My friend Christian Theune puts it like this: a framework is a text where you fill in the blanks. The framework defines the grammar, you bring some of the words. The words are the code you bring into it.

If you as a developer use a framework, you need to tell it about your code. You need to tell the framework what to call, when. Let's call this configuring the framework.

There are many ways to configure a framework. Each approach has its own trade-offs. I will describe some of these framework configuration patterns here, with brief examples and mention of some of the trade-offs. Many frameworks use more than a single pattern. I don't claim this list is exhaustive -- there are more patterns.

The patterns I describe are generally language agnostic, though some depend on specific language features. Some of these patterns make more sense in object oriented languages. Some are easier to accomplish in one language compared to another. Some languages have rich run-time introspection abilities, and that makes certain patterns a lot easier to implement. A language with a powerful macro facility will make other patterns easier to implement.

Where I give example code, I will use Python. I give some abstract code examples, and try to supply a few real-world examples as well. The examples show the framework from the perspective of the application developer.

Pattern: Callback function

The framework lets you pass in a callback function to configure its behavior.

Fictional example

This is a Form class where you can pass in a function that implements what should happen when you save the form.

    from framework import Form

    def my_save(data):
        ... application code to save the data somewhere ...

    my_form = Form(save=my_save)
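To make the division of labor concrete, here is a minimal sketch of what the framework side of such a Form might look like. The Form class below and its submit method are assumptions made up for illustration; the fictional framework's real implementation is not shown in the text.

```python
class Form:
    """Hypothetical framework class, defined here so the sketch runs."""

    def __init__(self, save):
        # The framework stores the callback supplied by the application.
        self._save = save

    def submit(self, data):
        # Framework behavior (here: trivial whitespace cleanup) runs first...
        cleaned = {key: value.strip() for key, value in data.items()}
        # ...and then the framework calls back into application code.
        self._save(cleaned)


saved = []


def my_save(data):
    # Application code: store the data somewhere (here, a plain list).
    saved.append(data)


my_form = Form(save=my_save)
my_form.submit({"name": "  Alice "})
print(saved)  # [{'name': 'Alice'}]
```

The application never calls my_save itself; it only hands it over, and the framework decides when to call it.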

Real-world example: Python map

A real-world example: map is a (nano)framework that takes a (pure) function:

    >>> list(map(lambda x: x * x, [1, 2, 3]))
    [1, 4, 9]

You can go very far with this approach. Functional languages do. If you glance at React in a certain way, it's configured with a whole bunch of callback functions called React components, along with more callback functions called event handlers.

Trade-offs

I am a big fan of this approach as the trade-offs are favorable in many circumstances. In object-oriented languages this pattern is sometimes ignored because people feel they need something more complicated, like passing in some fancy object or using inheritance, but I think callback functions should in fact be your first consideration.

Functions are simple to understand and implement. The contract is about as simple as it can be for code. Anything you may need to implement your function is passed in as arguments by the framework, which limits how much knowledge you need to use the framework.

Configuration of a callback function can be very dynamic at run-time - you can dynamically assemble or create functions and pass them into the framework, based on some configuration stored in a database, for instance.
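A minimal sketch of assembling a callback at run-time. The make_save factory and the configuration dict are hypothetical; the dict stands in for settings that might be loaded from a database.

```python
def make_save(config):
    """Build a save callback from a configuration dict (hypothetical helper)."""
    store = config["store"]            # where to save, here a plain list
    prefix = config.get("prefix", "")  # optional prefix for every key

    def save(data):
        # The closure remembers `store` and `prefix` from the configuration.
        store.append({prefix + key: value for key, value in data.items()})

    return save


saved = []
my_save = make_save({"store": saved, "prefix": "form_"})
my_save({"name": "Alice"})
print(saved)  # [{'form_name': 'Alice'}]
```

The resulting my_save can be passed to a framework like any hand-written function; the framework does not need to know how it was built.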

Configuration with callback functions doesn't really stand out, which can be a disadvantage -- it's easier to see that someone subclasses a base class or implements an interface, and language-integrated methods of configuration can stand out even more.

Sometimes you want to configure multiple related functions at once, in which case an object that implements an interface can make more sense -- I describe that pattern below.

It helps if your language has support for function closures. And of course your language needs to actually support first class functions that you can pass around -- Java for a long time did not.

Pattern: Subclassing

The framework provides a base-class which you as the application developer can subclass. You implement one or more methods that the framework will call.

Fictional example

    from framework import FormBase

    class MyForm(FormBase):
        def save(self, data):
            ... application code to save the data somewhere ...

Real-world example: Django REST Framework

Many frameworks offer base classes - Django offers them, and Django REST Framework even more.

Here's an example from Django REST Framework:

    class AccountViewSet(viewsets.ModelViewSet):
        """
        A simple ViewSet for viewing and editing accounts.
        """
        queryset = Account.objects.all()
        serializer_class = AccountSerializer
        permission_classes = [IsAccountAdminOrReadOnly]

A ModelViewSet does a lot: it implements a lot of URLs and request methods to interact with them. It automatically glues this to Django's ORM so that you can create database objects.

Subclassing questions

When you subclass a class, this is what you might need to know:

- what base classes are there?

- what methods can you override?

- when you override a method, can you call other methods on self (this) or not? Is there a particular order in which you are allowed to call these methods?

- does the base class provide an implementation of this method, or is it really empty?

- if the base class provides an implementation already, you need to know whether it's intended to be supplemented, or overridden, or both.

- if it's intended to be supplemented, you need to make sure to call this method on the superclass in your implementation.

- if you can override a method entirely, you may need to know what methods to use to play a part in the framework -- perhaps other methods that can be overridden.

- does the base class inherit from other classes that also let you override methods? When you implement a method, can it interact with other methods on these other classes?
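The difference between overriding and supplementing a method can be sketched as follows. FormBase here is a hypothetical stand-in written for this example, with one empty hook (validate) and one method that has real behavior (save); it is not taken from any actual framework.

```python
class FormBase:
    """Hypothetical framework base class, defined here so the sketch runs."""

    def __init__(self):
        self.log = []

    def validate(self, data):
        # Empty hook: meant to be overridden entirely.
        return True

    def save(self, data):
        # Real behavior: meant to be supplemented, so subclasses call super().
        self.log.append(("saved", data))


class MyForm(FormBase):
    def validate(self, data):
        # Overrides the hook; there is no need to call the base class.
        return "name" in data

    def save(self, data):
        if not self.validate(data):
            raise ValueError("invalid data")
        # Supplements the base implementation instead of replacing it.
        super().save(data)
        self.log.append(("notified", data["name"]))


form = MyForm()
form.save({"name": "Alice"})
print(form.log)  # [('saved', {'name': 'Alice'}), ('notified', 'Alice')]
```

Nothing in the language tells you that save must call super() while validate need not; that knowledge has to come from documentation or from reading the base class.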

Trade-offs

Many object-oriented languages support inheritance as a language feature. You can make the subclasser implement multiple related methods. It seems obvious to use inheritance as a way to let applications use and configure the framework.

It's not surprising then that this design is very common for frameworks. But I try to avoid it in my own frameworks, and I often am frustrated when a framework forces me to subclass.

The reason for this is that you as the application developer have to start worrying about many of the questions above. If you're lucky they are answered by documentation, though it can still take a bit of effort to understand it. But all too often you have to guess or read the code yourself.

And then even with a well designed base class with plausible overridable methods, it can still be surprisingly hard for you to do what you actually need because the contract of the base class is just not right for your use case.

Languages like Java and TypeScript offer the framework implementer a way to give you guidance (private/protected/public, final). The framework designer can put hard limits on which methods you are allowed to override. This takes away some of these concerns, as with sufficient effort on the part of the framework designer, the language tooling can enforce the contract. Even so, such an API can be complex for you to understand and difficult for the framework designer to maintain.

Many languages, such as Python, Ruby and JavaScript, don't have the tools to offer such guidance. You can subclass any base class. You can override any method. The only guidance is documentation. You may feel a bit lost as a result.

A framework tends to evolve over time to let you override more methods in more classes, and thus grows in complexity. This complexity doesn't grow just linearly as methods get added, as you have to worry about their interaction too. A framework that has to deal with a variety of subclasses that override a wide range of methods can expect less from them. Too much flexibility can make it harder for the framework to offer useful features.

Base classes also don't lend themselves very well to run-time dynamism - some languages (like Python) do let you generate a subclass dynamically with custom methods, but that kind of code is difficult to understand.
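For illustration, this is roughly what generating a subclass dynamically looks like in Python, using the built-in three-argument form of type(). FormBase and make_form_class are hypothetical names defined only for this sketch.

```python
class FormBase:
    """Hypothetical framework base class, defined here so the sketch runs."""

    def save(self, data):
        raise NotImplementedError()


def make_form_class(name, target):
    """Generate a FormBase subclass whose save() appends to `target`."""

    def save(self, data):
        target.append(data)

    # type(name, bases, namespace) creates a new class at run-time.
    return type(name, (FormBase,), {"save": save})


saved = []
DynamicForm = make_form_class("DynamicForm", saved)
DynamicForm().save({"name": "Alice"})
print(DynamicForm.__name__, issubclass(DynamicForm, FormBase), saved)
```

Even in this small case the class definition is spread over a factory function, a nested function and a dictionary, which hints at why such code becomes hard to follow as it grows.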

I think the disadvantages of subclassing outweigh the advantages for a framework's external API. I still sometimes use base classes internally in a library or framework -- base classes are a lightweight way to do reuse there. In this context many of the disadvantages go away: you are in control of the base class contract yourself and you presumably understand it.

I also sometimes use an otherwise empty base class to define an interface, but that's really another pattern which I discuss next.

Pattern: interfaces

The framework provides an interface that you as the application developer can implement. You implement one or more methods that the framework calls.

Fictional example

    from framework import Form, IFormBackend

    class MyFormBackend(IFormBackend):
        def load(self):
            ... application code to load the data here ...

        def save(self, data):
            ... application code to save the data somewhere ...

    my_form = Form(MyFormBackend())

Real-world example: Python iterable/iterator

The iterable/iterator protocol in Python is an example of an interface. If you implement it, the framework (in this case the Python language) will be able to do all sorts of things with it -- loop over it, turn it into a list, unpack it, etc.

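    import random

    class RandomIterable:
        def __iter__(self):
            return self

        def __next__(self):
            # Python 3 spells the iterator method __next__ (Python 2 used next)
            if random.choice(["go", "stop"]) == "stop":
                raise StopIteration
            return 1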

Faking interfaces

Many typed languages offer native support for interfaces. But what if your language doesn't do that?

In a dynamically typed language you don't really need to do anything: any object can implement any interface. It's just that you don't really get a lot of guidance from the language. What if you want a bit more?

In Python you can use the standard library abc module, or zope.interface. You can also use the typing module with base classes and, from Python 3.8 on, PEP 544 protocols.
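As a rough sketch of two of these options, the example below declares an interface once with the abc module and once as a PEP 544 protocol. The names IFormBackend, SupportsSave, MemoryBackend and Incomplete are made up for illustration.

```python
from abc import ABC, abstractmethod
from typing import Protocol, runtime_checkable


class IFormBackend(ABC):
    """Interface declared with abc: subclasses must implement both methods."""

    @abstractmethod
    def load(self):
        """Return a dict with the data."""

    @abstractmethod
    def save(self, data):
        """Save the data dict."""


@runtime_checkable
class SupportsSave(Protocol):
    """PEP 544 protocol: any object with a save method matches structurally."""

    def save(self, data) -> None:
        ...


class MemoryBackend(IFormBackend):
    def __init__(self):
        self.data = {}

    def load(self):
        return self.data

    def save(self, data):
        self.data = dict(data)


class Incomplete(IFormBackend):
    def load(self):
        return {}


backend = MemoryBackend()
backend.save({"name": "Alice"})
print(backend.load())                     # {'name': 'Alice'}
print(isinstance(backend, SupportsSave))  # True

try:
    Incomplete()  # save() is missing, so abc refuses to instantiate
except TypeError:
    print("Incomplete cannot be instantiated")
```

With abc the guidance arrives when the class is instantiated; with a protocol, a static type checker can additionally flag a mismatch without the implementing class inheriting from anything.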

But let's say you don't have all of that or don't want to bother yet as you're just prototyping. You can use a simple Python base class to describe an interface:

    class IFormBackend:
        def load(self):
            "Load the data from the backend. Should return a dict with the data."
            raise NotImplementedError()

        def save(self, data):
            "Save the data dict to the backend."
            raise NotImplementedError()

It doesn't do anything, which is the point - it just describes the methods that the application developer should implement. You could supply one or two with a simple default implementation, but that's it. You may be tempted to implement framework behavior on it, but that brings you into base class land.

Trade-offs

The trade-offs are quite similar to those of callback functions. This is a useful pattern to use if you want to define related functionality in a single bundle.

I go for interfaces if my framework offers a more extensive contract that an application needs to implement, especially if the application needs to maintain its own internal state.

The use of interfaces can lead to clean composition-oriented designs, where you adapt one object into another.

As with functions, you can use run-time dynamism: you can dynamically assemble an object that implements an interface.

Many languages offer interfaces as a language feature, and any object-oriented language can fake them. Or they have too many ways to do it, like Python.

Pattern: imperative registration API

You register your code with the framework in a registry object.

When you have a framework that dispatches on a wide range of inputs, and you need to plug in application specific code that handles it, you are going to need some type of registry.

What gets registered can be a callback or an object that implements an interface -- it therefore builds on those patterns.

The application developer needs to call a registration method explicitly.

Frameworks can have specific ways to configure their registries that build on top of this basic pattern -- I will elaborate on that later.

Fictional example

    from framework import form_save_registry

    def save(data):
        ... application code to save the data somewhere ...

    # we configure what save function to use for the form named 'my_form'
    form_save_registry.register('my_form', save)
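On the framework side, a registry like this can be little more than a dictionary with a registration method. The sketch below is an assumption about how such a registry could work; the Registry class and its dispatch method are invented for illustration and are not part of the fictional framework as described.

```python
class Registry:
    """Hypothetical registry: maps a name to the registered callback."""

    def __init__(self):
        self._callbacks = {}

    def register(self, name, callback):
        # Refuse silent overwrites so conflicting registrations are noticed.
        if name in self._callbacks:
            raise ValueError(f"{name!r} is already registered")
        self._callbacks[name] = callback

    def dispatch(self, name, *args, **kwargs):
        # The framework looks up the application code and calls it.
        return self._callbacks[name](*args, **kwargs)


form_save_registry = Registry()
saved = []


def save(data):
    # Application code: store the data somewhere (here, a plain list).
    saved.append(data)


form_save_registry.register("my_form", save)
form_save_registry.dispatch("my_form", {"name": "Alice"})
print(saved)  # [{'name': 'Alice'}]
```

Raising on a duplicate name is one possible design choice; a registry could just as well let later registrations override earlier ones.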

Real-world example: Falcon web framework

A URL router such as in a web framework uses some type of registry. Here is an example from the Falcon web framework:

    class QuoteResource:
        def on_get(self, req, resp):
            ... user code ...

    api = falcon.API()
    api.add_route('/quote', QuoteResource())

In this example you can see two patterns go together: QuoteResource implements an (implicit) interface, and you register it with a particular route.

Application code can register handlers for a variety of routes, and the framework then uses the registry to match a request's URL with a route, and then can call into user code to generate a response.

Trade-offs