Programiz: Python CSV

Catalin George Festila: Python 3.7.5 : Script install and import python packages.

Trey Hunner: Black Friday Sale: Gift Python Morsels to a Friend

From today until the end of Monday December 2nd, I’m selling bundles of two 52-week Python Morsels redemption codes.

You can buy 12 months of Python Morsels for yourself and gift 12 months of Python Morsels to a friend for free!

Or, if you’re extra generous, you can buy two redemption codes (for the price of one) and gift them both to two friends.

What is Python Morsels?🐍🍪

Python Morsels is a weekly Python skill-building service for professional Python developers. Subscribers receive one Python exercise every week in the Python skill level of their choosing (novice, intermediate, advanced).

Each exercise is designed to help you think the way Python thinks, so you can write your code less like a C/Java/Perl developer would and more like a fluent Pythonista would. Each programming language has its own unique ways of looking at the world: Python Morsels will help you embrace Python’s.

One year’s worth of Python Morsels will help even experienced Python developers deepen their Python skills and find new insights about Python to incorporate into their day-to-day work.

How does this work? 🤔

Normally a 12-month Python Morsels subscription costs $200. For $200, I’m instead selling two redemption codes, each of which can be used for 12 months (52 weeks) of Python Morsels exercises.

With this sale, you’ll get two 12-month redemption codes for the price of one. So you’ll get 1 year of Python Morsels for 2 friends for just $200.

These codes can be used at any time, and users of these codes will always keep access to the 52 exercises received over the 12-month period. You can use one of these codes to extend your current subscription, but new users can also use a redemption code without signing up for an ongoing subscription.

Only one of these codes can be used per account (though you can purchase as many as you’d like to gift to others).

What will I (and my friends) get with Python Morsels? 🎁

With Python Morsels you’ll get:

- An email every Monday which includes a detailed problem to solve using Python

- Multiple bonuses for almost every problem (most have 3 bonuses, almost all have 2) so you can re-adjust your difficulty level on a weekly basis

- Hints for each problem which you can use when you get stuck

- An online progress tracking tool to keep track of which exercises you’ve solved and how many bonuses you solved for each exercise

- Automated tests (to ensure correctness) which you can run locally and which also run automatically when you submit your solutions

- An email every Wednesday with a detailed walkthrough of various solutions (usually 5-10) for each problem, including walkthroughs of each bonus and a discussion of why some solutions may be better than others

- A skill level selection tool (novice, intermediate, advanced) which you can adjust based on your Python experience

- A web interface you can come back to even after your 12 months are over

Okay, I’m interested. Now what? ✨

First of all, don’t wait. This buy-one-get-one-free sale ends Monday!

You can sign up and purchase 2 redemption codes by visiting http://trey.io/sale2019

Note that you need to create a Python Morsels account to purchase the redemption codes. You don’t need to have an ongoing subscription; you just need an account.

If you have any questions about this sale, please don’t hesitate to email me.

Stack Abuse: Insertion Sort in Python

Introduction

If you're majoring in Computer Science, Insertion Sort is most likely one of the first sorting algorithms you have heard of. It is intuitive and easy to implement, but it's very slow on large arrays and is almost never used to sort them.

Insertion sort is often illustrated by comparing it to sorting a hand of cards while playing rummy. For those of you unfamiliar with the game, most players want the cards in their hand sorted in ascending order so they can quickly see which combinations they have at their disposal.

The dealer deals out 14 cards to you, and you pick them up one by one and put each one in your hand in the right order. During this entire process your hand holds the sorted "subarray" of your cards, while the remaining face-down cards on the table are the unsorted part, from which you take cards one by one and put them in your hand.

Insertion Sort is very simple and intuitive to implement, which is one of the reasons it's generally taught at an early stage in programming. It's a stable, in-place algorithm that works really well for nearly sorted or small arrays.

Let's elaborate on these terms:

- in-place: Requires a small, constant amount of additional space (no matter the size of the input collection), but rewrites the original memory locations of the elements in the collection.

- stable: The algorithm maintains the relative order of equal objects from the initial array. In other words, say your company's employee database returns "Dave Watson" and "Dave Brown" as two employees, in that specific order. If you were to sort them by their (shared) first name, a stable algorithm would guarantee that this order remains unchanged.

Another thing to note: Insertion Sort doesn't need to know the entire array in advance before sorting. The algorithm can receive one element at a time, which is great if we want to add more elements to be sorted: the algorithm only inserts each new element in its proper place without "re-doing" the whole sort.
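To make this online property concrete, here is a minimal sketch of the insertion step on its own. The helper name `insert_sorted` is hypothetical (it is not from the article): it appends one new value to an already sorted list and shifts larger elements one slot to the right until the value reaches its place, which is what one iteration of Insertion Sort's outer loop does. Dealing the example cards one at a time shows that nothing is ever re-sorted.

```python
def insert_sorted(sorted_list, value):
    """Insert value into an ascending sorted_list in place, keeping it sorted.

    Hypothetical helper: one iteration of Insertion Sort's outer loop.
    """
    sorted_list.append(value)          # make room at the end
    position = len(sorted_list) - 1
    # Shift every element larger than value one slot to the right
    while position > 0 and sorted_list[position - 1] > value:
        sorted_list[position] = sorted_list[position - 1]
        position -= 1
    sorted_list[position] = value


hand = []
for card in [8, 5, 4, 10, 9]:  # cards arrive one at a time
    insert_sorted(hand, card)
    print(hand)
# [8]
# [5, 8]
# [4, 5, 8]
# [4, 5, 8, 10]
# [4, 5, 8, 9, 10]
```

Each new card costs at most one pass over the cards already in hand. The standard library's `bisect.insort` does the same job, but finds the insertion point with a binary search instead of comparing element by element.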

Insertion Sort is used rather often in practice because of how efficient it is for small (around 10 items) data sets. We will talk more about that later.

How Insertion Sort Works

An array is partitioned into a "sorted" subarray and an "unsorted" subarray. At the beginning, the sorted subarray contains only the first element of our original array.

The first element of the unsorted subarray is evaluated so that we can insert it into its proper place in the sorted subarray.

The insertion is done by moving all elements larger than the new element one position to the right.

Continue doing this until our entire array is sorted.

Keep in mind however that when we say an element is larger or smaller than another element - it doesn't necessarily mean larger or smaller integers.

We can define the words "larger" and "smaller" however we like when using custom objects. For example, point A can be "larger" than point B if it's farther away from the origin of the coordinate system.

We will mark the sorted subarray with square brackets, and use the following array to illustrate the algorithm:

8, 5, 4, 10, 9

The first step would be to "add" 8 to our sorted subarray.

[8], 5, 4, 10, 9

Now we take a look at the first unsorted element, 5. We keep that value in a separate variable, for example current, for safekeeping. 5 is less than 8, so we move 8 one place to the right, effectively overwriting the 5 that was previously stored there (hence the separate variable for safekeeping):

[8, 8], 4, 10, 9 (current = 5)

5 is less than all the elements in our sorted subarray, so we insert it into the first position:

[5, 8], 4, 10, 9

Next we look at number 4. We save that value in current. 4 is less than 8, so we move 8 to the right, and then do the same with 5.

[5, 5, 8], 10, 9 (current = 4)

Again we've encountered an element smaller than our entire sorted subarray, so we put it in the first position:

[4, 5, 8], 10, 9

10 is greater than 8, the rightmost element of the sorted subarray, and is therefore larger than every element of the sorted subarray. So we simply leave it where it is and move on to the next element:

[4, 5, 8, 10], 9

9 is less than 10, so we move 10 to the right:

[4, 5, 8, 10, 10] (current = 9)

However, 9 is greater than 8, so we simply insert 9 right after 8.

[4, 5, 8, 9, 10]
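To check the walkthrough above, the sketch below runs the same algorithm on 8, 5, 4, 10, 9 and records a copy of the array after each element is inserted. The name `insertion_sort_trace` is hypothetical; it is the article's algorithm with a snapshot added at the end of each outer iteration.

```python
def insertion_sort_trace(array):
    """Sort array in place and return a snapshot taken after each insertion."""
    snapshots = []
    for index in range(1, len(array)):
        current = array[index]          # keep the value safe before shifting
        position = index
        while position > 0 and array[position - 1] > current:
            array[position] = array[position - 1]   # shift larger element right
            position -= 1
        array[position] = current
        snapshots.append(list(array))   # copy, since array keeps changing
    return snapshots


for step in insertion_sort_trace([8, 5, 4, 10, 9]):
    print(step)
# [5, 8, 4, 10, 9]
# [4, 5, 8, 10, 9]
# [4, 5, 8, 10, 9]
# [4, 5, 8, 9, 10]
```

The third snapshot is unchanged because 10 needed no shifting, matching the step where we "simply move on".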

Implementation

As we have previously mentioned, Insertion Sort is fairly easy to implement. We'll implement it first on a simple array of integers and then on some custom objects.

In practice, it's much more likely that you'll be working with objects and sorting them based on certain criteria.

Sorting Arrays

def insertion_sort(array):
    # We start from 1 since the first element is trivially sorted
    for index in range(1, len(array)):
        currentValue = array[index]
        currentPosition = index

        # As long as we haven't reached the beginning and there is an element
        # in our sorted array larger than the one we're trying to insert, move
        # that element to the right
        while currentPosition > 0 and array[currentPosition - 1] > currentValue:
            array[currentPosition] = array[currentPosition - 1]
            currentPosition = currentPosition - 1

        # We have either reached the beginning of the array or we have found
        # an element of the sorted array at index currentPosition - 1 that is
        # smaller than or equal to the element we're trying to insert.
        # Either way, we insert the element at currentPosition
        array[currentPosition] = currentValue

Let's populate a simple array and sort it:

array = [4, 22, 41, 40, 27, 30, 36, 16, 42, 37, 14, 39, 3, 6, 34, 9, 21, 2, 29, 47]

insertion_sort(array)

print("sorted array: " + str(array))

Output:

sorted array: [2, 3, 4, 6, 9, 14, 16, 21, 22, 27, 29, 30, 34, 36, 37, 39, 40, 41, 42, 47]

Note: It would have been technically incorrect to reverse the order of the conditions in our while loop, that is, to check whether array[currentPosition-1] > currentValue before checking whether currentPosition > 0.

This would mean that once currentPosition reached 0, we would first evaluate array[-1] > currentValue. In Python this is "fine", although technically incorrect, since it wouldn't cause the loop to end prematurely or continue when it shouldn't.

This is because once we reach the zeroth element, the second condition, currentPosition > 0, fails regardless of the first condition and causes the loop to end. In Python, array[-1] > currentValue is equivalent to array[len(array) - 1] > currentValue (a comparison with the last element), and the interpreter wouldn't complain, but this is not a comparison we actually want to happen.

This reversed order of conditions is a bad idea. In a lot of cases it can lead to unexpected results that are hard to debug, because there is no syntax error or exception to point at. Many programming languages would "complain" about accessing the -1st element, but Python won't, and it's easy to miss such a mistake.

The takeaway advice from this is to always check whether an index is valid before using it to access elements.
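To see what the note is warning about, the sketch below uses a hypothetical `get` helper that records which indices are actually read, then evaluates both orders of the condition at the moment the loop reaches the beginning of the array. The values are made up for illustration.

```python
array = [3, 7, 5]
current_value = 1
current_position = 0          # the loop has reached the beginning

accessed = []                 # indices actually read, for illustration


def get(i):
    """Read array[i] and record the index (hypothetical helper)."""
    accessed.append(i)
    return array[i]


# Negative indices count from the end: array[-1] is array[len(array) - 1]
assert array[-1] == array[len(array) - 1] == 5

# Correct order: `and` short-circuits, so array[-1] is never read
correct = current_position > 0 and get(current_position - 1) > current_value
print(correct, accessed)         # False []

# Reversed order: same result, but array[-1] was silently read first
reversed_order = get(current_position - 1) > current_value and current_position > 0
print(reversed_order, accessed)  # False [-1]
```

Both orders give the same answer here, which is exactly why the mistake is easy to miss: the only trace of the unwanted comparison is the stray read of the last element.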

Sorting Custom Objects

We've mentioned before that we can define "greater than" and "less than" however we want, and that the definition doesn't need to rely solely on integers. There are several ways we can change our algorithm to work with custom objects.

We could redefine the actual comparison operators for our custom class and keep the same algorithm code as above. However, that would mean redefining those operators whenever we wanted to sort the objects of our class in a different way.
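For comparison, here is a sketch of the operator-overloading approach, using the "farther from the origin is larger" ordering mentioned earlier. Because the integer version of `insertion_sort` only ever uses `>`, defining `__gt__` is enough. The class name `DistancePoint` and the sample points are hypothetical, and the sort function is repeated (in snake_case) so the block runs on its own.

```python
import math


class DistancePoint:
    """A point ordered by its distance from the origin (hypothetical example)."""

    def __init__(self, x, y):
        self.x = x
        self.y = y

    def distance(self):
        return math.hypot(self.x, self.y)

    def __gt__(self, other):
        # "Larger" means farther from the origin
        return self.distance() > other.distance()

    def __repr__(self):
        return f"({self.x},{self.y})"


def insertion_sort(array):
    # Same algorithm as the integer version; it only uses the > operator
    for index in range(1, len(array)):
        current_value = array[index]
        current_position = index
        while current_position > 0 and array[current_position - 1] > current_value:
            array[current_position] = array[current_position - 1]
            current_position -= 1
        array[current_position] = current_value


points = [DistancePoint(3, 4), DistancePoint(1, 0), DistancePoint(0, -2)]
insertion_sort(points)
print(points)  # [(1,0), (0,-2), (3,4)]
```

The drawback shows up immediately: to sort the same points by x instead, you would have to change `__gt__` itself.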

Arguably the best way to use Insertion Sort for custom classes is to pass another argument to the insertion_sort function, specifically a comparison function. The most convenient way to do this is to pass a lambda function when calling the sorting function.

In this implementation, we'll sort points where a "smaller" point is the one with a lower x coordinate.

First we'll define our Point class:

class Point:
    def __init__(self, x, y):
        self.x = x
        self.y = y

    def __str__(self):
        return f"({self.x},{self.y})"

Then we make a slight change to our insertion_sort method to accommodate custom sorting:

def insertion_sort(array, compare_function):
    # compare_function(a, b) should return True when a must be placed after b
    for index in range(1, len(array)):
        currentValue = array[index]
        currentPosition = index

        while currentPosition > 0 and compare_function(array[currentPosition - 1], currentValue):
            array[currentPosition] = array[currentPosition - 1]
            currentPosition = currentPosition - 1

        array[currentPosition] = currentValue

Finally we test the program:

A = Point(1, 2)
B = Point(4, 4)
C = Point(3, 1)
D = Point(10, 0)

array = [A, B, C, D]

# We sort by the x coordinate, ascending
insertion_sort(array, lambda a, b: a.x > b.x)

for point in array:
    print(point)

We get the output:

(1,2)
(3,1)
(4,4)
(10,0)

This algorithm will now work for any type of array, as long as we provide an appropriate comparison function.
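Since the ordering now lives entirely in the lambda, switching orders needs no change to the class. The sketch below repeats the compare-function version (in snake_case) and sorts the same four points by descending x and then by ascending y. It also checks the stability property from earlier, using the "Dave Watson" and "Dave Brown" employees plus a made-up "Anna Smith" entry.

```python
class Point:
    def __init__(self, x, y):
        self.x = x
        self.y = y

    def __str__(self):
        return f"({self.x},{self.y})"


def insertion_sort(array, compare_function):
    # compare_function(a, b) returns True when a must be placed after b
    for index in range(1, len(array)):
        current_value = array[index]
        current_position = index
        while current_position > 0 and compare_function(array[current_position - 1], current_value):
            array[current_position] = array[current_position - 1]
            current_position -= 1
        array[current_position] = current_value


points = [Point(1, 2), Point(4, 4), Point(3, 1), Point(10, 0)]

# Descending by x: a goes after b when its x is smaller
insertion_sort(points, lambda a, b: a.x < b.x)
print([str(p) for p in points])  # ['(10,0)', '(4,4)', '(3,1)', '(1,2)']

# Ascending by y: same function, different lambda
insertion_sort(points, lambda a, b: a.y > b.y)
print([str(p) for p in points])  # ['(10,0)', '(3,1)', '(1,2)', '(4,4)']

# Stability: equal first names keep their original relative order
employees = [("Dave", "Watson"), ("Anna", "Smith"), ("Dave", "Brown")]
insertion_sort(employees, lambda a, b: a[0] > b[0])
print(employees)  # [('Anna', 'Smith'), ('Dave', 'Watson'), ('Dave', 'Brown')]
```

The strict `>` in the last lambda is what keeps the sort stable: with `>=`, the loop would also shift "Dave Watson" past "Dave Brown", swapping the two equal keys.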

Insertion Sort in Practice

Insertion Sort may seem like a slow algorithm, and indeed in most cases it is too slow for any practical use with its O(n²) time complexity. However, as we've mentioned, it's very efficient on small arrays and on nearly sorted arrays.
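A quick way to see both sides of this claim is to count how many element shifts the inner while loop performs. The sketch below (`count_shifts` is a hypothetical helper) sorts a copy of its input and returns the shift count. An already sorted list needs none, a reversed list needs n(n-1)/2, and a list with a single swapped pair needs only one.

```python
def count_shifts(values):
    """Insertion-sort a copy of values and return how many shifts were made."""
    array = list(values)
    shifts = 0
    for index in range(1, len(array)):
        current = array[index]
        position = index
        while position > 0 and array[position - 1] > current:
            array[position] = array[position - 1]
            position -= 1
            shifts += 1
        array[position] = current
    return shifts


n = 1000
already_sorted = list(range(n))
reversed_order = list(range(n, 0, -1))
nearly_sorted = list(range(n))
nearly_sorted[10], nearly_sorted[11] = nearly_sorted[11], nearly_sorted[10]  # one swapped pair

print(count_shifts(already_sorted))   # 0
print(count_shifts(reversed_order))   # 499500, i.e. n * (n - 1) // 2
print(count_shifts(nearly_sorted))    # 1
```

The shift count equals the number of inversions (pairs of elements that are out of order), which is why the running time ranges from linear on nearly sorted input to quadratic on reversed input.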

This makes Insertion Sort very relevant for use in combination with algorithms that work well on large data sets.

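For example, Java's Arrays.sort for primitive arrays uses a Dual-Pivot Quicksort as its primary sorting algorithm, but switches to Insertion Sort whenever the array (or a subarray created by Quicksort) is small. The exact cutoff has varied between JDK versions; the older Quicksort implementation used Insertion Sort for arrays of fewer than 7 elements.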

Another efficient combination is to simply leave all small subarrays created by Quick Sort unsorted, and then pass the final, nearly sorted array through Insertion Sort.
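Both hybrids can be sketched with one Quicksort. This is a plain single-pivot Quicksort with Lomuto partitioning, not Java's Dual-Pivot implementation. `CUTOFF = 7` borrows the figure mentioned above but is an assumption, and all function names are hypothetical. The `finish_small` flag chooses between sorting each small subarray as soon as it appears and leaving small subarrays for one final Insertion Sort pass.

```python
import random

CUTOFF = 7  # hypothetical threshold; real libraries tune this value


def insertion_sort_range(array, low, high):
    """Insertion-sort array[low..high] (inclusive) in place."""
    for index in range(low + 1, high + 1):
        current = array[index]
        position = index
        while position > low and array[position - 1] > current:
            array[position] = array[position - 1]
            position -= 1
        array[position] = current


def partition(array, low, high):
    """Lomuto partition around array[high]; returns the pivot's final index."""
    pivot = array[high]
    store = low
    for i in range(low, high):
        if array[i] < pivot:
            array[i], array[store] = array[store], array[i]
            store += 1
    array[store], array[high] = array[high], array[store]
    return store


def quicksort(array, low, high, finish_small):
    if high - low + 1 < CUTOFF:
        if finish_small:
            insertion_sort_range(array, low, high)  # sort the small subarray now
        return  # otherwise leave it for one final pass
    p = partition(array, low, high)
    quicksort(array, low, p - 1, finish_small)
    quicksort(array, p + 1, high, finish_small)


def hybrid_per_subarray(array):
    """Variant 1: Insertion Sort on every small subarray as it appears."""
    quicksort(array, 0, len(array) - 1, finish_small=True)


def hybrid_final_pass(array):
    """Variant 2: skip small subarrays, then one Insertion Sort over everything."""
    quicksort(array, 0, len(array) - 1, finish_small=False)
    # Every element now sits inside its correct small block, so this pass is cheap
    insertion_sort_range(array, 0, len(array) - 1)


data = [random.randint(0, 99) for _ in range(200)]
a, b = list(data), list(data)
hybrid_per_subarray(a)
hybrid_final_pass(b)
print(a == sorted(data), b == sorted(data))  # True True
```

The final pass in variant 2 works because partitioning has already placed every element within fewer than `CUTOFF` positions of its final spot, which is exactly the nearly sorted input Insertion Sort handles well.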

Another place where Insertion Sort left its mark is a very popular algorithm called Shell Sort. Shell Sort runs Insertion Sort on elements that are far apart from each other, then gradually reduces the gap between the elements being compared until it reaches 1, at which point the final pass is a plain Insertion Sort.

In effect, it makes many Insertion Sort passes, first over small subsequences and then over larger, nearly sorted arrays, harnessing both of Insertion Sort's strengths.
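The sketch below is one common formulation of Shell Sort, assuming Shell's original gap sequence (halve the gap each round). The function name is ours, and gap sequences with better performance exist. Each round is an Insertion Sort in which "one position to the left" becomes "gap positions to the left". It reuses the integer array from the implementation section.

```python
def shell_sort(array):
    """Sort array in place with Shell Sort, using the halving gap sequence."""
    gap = len(array) // 2
    while gap > 0:
        # Gapped Insertion Sort: each element is inserted among the elements
        # gap, 2*gap, ... positions before it
        for index in range(gap, len(array)):
            current = array[index]
            position = index
            while position >= gap and array[position - gap] > current:
                array[position] = array[position - gap]
                position -= gap
            array[position] = current
        gap //= 2  # the last round (gap == 1) is a plain Insertion Sort


data = [4, 22, 41, 40, 27, 30, 36, 16, 42, 37, 14, 39, 3, 6, 34, 9, 21, 2, 29, 47]
shell_sort(data)
print(data)
# [2, 3, 4, 6, 9, 14, 16, 21, 22, 27, 29, 30, 34, 36, 37, 39, 40, 41, 42, 47]
```

Unlike plain Insertion Sort, Shell Sort is not stable, since the long-distance moves in early rounds can carry an element past an equal one.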

Conclusion

Insertion Sort is a very simple, generally inefficient algorithm that nonetheless has several specific advantages that make it relevant even after many other, generally more efficient algorithms have been developed.

It remains a great algorithm for introducing future software developers to the world of sorting algorithms, and is still used in practice in specific scenarios where it shines.


Janusworx: #100DaysOfCode, Day 010 – Quick and Dirty Web Page Download

Decided to take a break from the course, and do something for me.

I want to check a site and download new content if any.

The day went sideways though.

Did not quite do what I wanted.

Watched a video on how to set up Visual Studio Code just the way I wanted.

So not quite all wasted.

Test and Code: 95: Data Science Pipeline Testing with Great Expectations - Abe Gong

Data science and machine learning are affecting more of our lives every day. Decisions based on data science and machine learning are heavily dependent on the quality of the data, and the quality of the data pipeline.

Some of the software in the pipeline can be tested to some extent with traditional testing tools, like pytest.

But what about the data? The data entering the pipeline, and at various stages along the pipeline, should be validated.

That's where pipeline tests come in.

Pipeline tests are applied to data. Pipeline tests help you guard against upstream data changes and monitor data quality.
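The idea of a pipeline test can be sketched without any library: a function checks a batch of records against explicit expectations before the batch moves to the next stage. The column names, rules and `check_batch` function below are made up for illustration, and this is not how Great Expectations itself is used.

```python
def check_batch(rows):
    """Return a list of failed expectations for one batch of records.

    Hypothetical, hand-written checks; this is not the Great Expectations API.
    """
    failures = []
    required_columns = {"user_id", "age", "country"}

    for i, row in enumerate(rows):
        # Expectation 1: every record has the columns downstream code relies on
        missing = required_columns - set(row)
        if missing:
            failures.append(f"row {i}: missing columns {sorted(missing)}")
            continue
        # Expectation 2: ages are numbers in a plausible range
        age = row["age"]
        if not isinstance(age, (int, float)) or not 0 <= age <= 130:
            failures.append(f"row {i}: age {age!r} out of range")

    # Expectation 3: user_id is unique across the batch
    ids = [row.get("user_id") for row in rows]
    if len(ids) != len(set(ids)):
        failures.append("user_id values are not unique")
    return failures


batch = [
    {"user_id": 1, "age": 34, "country": "FR"},
    {"user_id": 2, "age": -5, "country": "US"},
    {"user_id": 2, "age": 40},
]
for failure in check_batch(batch):
    print(failure)
```

Run on this batch, the checks report the negative age in row 1, the missing country column in row 2 and the duplicate user_id. None of these would make the code crash; they are the silent upstream data changes that pipeline tests are meant to catch.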

Abe Gong and Superconductive are building an open source project called Great Expectations. It's a tool to help you build pipeline tests.

This is quite an interesting idea, and I hope it gains traction and takes off.

Special Guest: Abe Gong.

Sponsored By:

- Raygun: Detect, diagnose, and destroy Python errors that are affecting your customers. With smart Python error monitoring software from Raygun.com, you can be alerted to issues affecting your users the second they happen.

Support Test & Code: Python Software Testing & Engineering

Links:

- Great Expectations: https://greatexpectations.io/

Weekly Python StackOverflow Report: (ccv) stackoverflow python report

These are the ten highest-rated questions on Stack Overflow last week.

Between brackets: [question score / answers count]

Build date: 2019-11-30 17:26:36 GMT

- Unstack and return value counts for each variable? - [11/5]

- Pandas: How to create a column that indicates when a value is present in another column a set number of rows in advance? - [7/2]

- How to generate all possible combinations with a given condition to make it more efficient? - [6/2]

- How do I print values only when they appear more than once in a list in python - [6/2]

- Reverse cumsum for countdown functionality in pandas? - [6/1]

- How to convert multiple columns to single column? - [5/3]

- Checking the type of relationship between columns in python/pandas? (one-to-one, one-to-many, or many-to-many) - [5/3]

- Mean Square Displacement as a Function of Time in Python - [5/2]

- Map dict lookup - [5/2]

- How to hide row of a multiple column based on hided data values - [5/1]

Mike C. Fletcher: PyOpenGL 3.1.4 is Out

So I just went ahead and pulled the trigger on getting PyOpenGL and PyOpenGL Accelerate 3.1.4 out the door. Really, there is little that has changed in PyOpenGL, save that I'm actually doing a final (non alpha/beta/rc) release. The last final release having been about 5.5 years ago if PyPI history is to be believed(!)

Big things of note:

- Development has moved to GitHub

- I'm in the process of moving the website to GitHub Pages (from SourceForge)

- Python 3.x seems to be working, and we've got AppVeyor .whl builds for Python 2.7, 3.6, 3.7 and 3.8, 32 and 64 bit

- AppVeyor is now running the test suite on Windows; this doesn't test much, as it's a very old OpenGL, but it does check that there's basic operation on the platform

- The end result of that should be that new releases can be done without me needing to boot a Windows environment, something that has made doing final/formal releases a PITA

Enjoy yourselves!

John Cook: Data Science and Star Science

I recently got a review copy of Statistics, Data Mining, and Machine Learning in Astronomy. I’m sure the book is especially useful to astronomers, but those of us who are not astronomers could use it as a survey of data analysis techniques, especially using Python tools, where all the examples happen to come from astronomy. It covers a lot of ground and is pleasant to read.

Gaël Varoquaux: Getting a big scientific prize for open-source software

Note

An important acknowledgement for a different view of doing science: open, collaborative, and more than a proof of concept.

A few days ago, Loïc Estève, Alexandre Gramfort, Olivier Grisel, Bertrand Thirion, and myself received the “Académie des Sciences Inria prize for transfer”, for our contributions to the scikit-learn project. To put things simply, it’s quite a big deal to me, because I feel that it illustrates a change of culture in academia.

It is a great honor, because the selection was made by the members of the Académie des Sciences, very accomplished scientists with impressive contributions to science. The “Académie” is the hallmark of fundamental academic science in France. To me, this prize is also symbolic because it recognizes an open view of academic research and transfer, a view that sometimes felt like it was not playing by the incentives. We started scikit-learn as a crazy endeavor, a bit of a hippy science thing. People didn’t really take us seriously. We were working on software, not publications. We were doing open source, while industrial transfer is done by creating startups or filing patents. We were doing Python, while academic machine learning was then done in Matlab, and industrial transfer in C++. We were not pursuing the latest publications, while these are thought to be research’s best assets. We were interested in reaching out to non-experts, while the partners considered interesting are those with qualified staff.

No. We did it differently. We reached out to an open community. We wrote BSD-licensed code. We worked to achieve quality at the cost of quantity. We cared about installation issues, onboarding biologists or medical doctors, and playing well with the wider scientific Python ecosystem. We gave decision power to people outside of Inria, sometimes people whom we had never met in real life. We made sure that Inria was never the sole actor, the sole stakeholder. We never pushed our own scientific publications in the project. We limited complexity, trading off performance for ease of use, ease of installation, ease of understanding.

As a consequence, we slowly but surely assembled a large community. In such a community, the whole is greater than the sum of its parts. The breadth of interlocutors and cultures slows movement down, but creates better results, because these results are understandable to many and usable on a diversity of problems. The consequence of this quality is that we were progressively used in more and more places: industrial data-science labs, startups, research in applied or fundamental statistical learning, teaching. Ironically, the institutional world did not notice. It got hard, next to impossible, to get funding [1]. A few years ago, I was told by a central governmental agency that we, open-source zealots, were destroying an incredible amount of value by giving away for free the production of research [2]. The French report on AI, led by a Fields medalist, cited TensorFlow and Theano (discontinued software), but ignored scikit-learn. Maybe because we were doing “boring science”?

But, scikit-learn’s amazing community continued plowing forward. We grew so much that we were heard from the top. The prize from the Académie shows that we managed to capture the attention of senior scientists with open-source software, because this software is really having a worldwide impact in many disciplines.

Presenting scikit-learn at the Academie Des Sciences

There were only five of us on stage, as the prize is for Inria permanent staff. But this is of course not a fair account of how the project has grown and what made it successful.

In 2011, at the first international sprint, I felt something was happening: incredible people whom I had never met before were sitting next to me, working very hard on solving problems with me. This experience of being united to solve difficult problems is something amazing. And I deeply thank every single person who has worked on this project, the 1500 contributors, many of whom I have never met, in particular the core team, who are committed to making sure that every detail of scikit-learn is solid and serves the users. The team that has assembled over the years is of incredible quality.

The world does not understand how much the promises of data science, for today and tomorrow, need open-source projects that are easy to install and use for everybody. These projects are like roads and bridges: they are needed for growth, though no one wants to pay for maintaining them. I hope that I can use the podium that the prize will give us to stress the importance of the battle that we are fighting.

[1] Getting funding from the government implied too much politics and risks. For these reasons, I turned to private donors, in a foundation.

[2] Inria always supported us, and often paid developers in my team out of its own pockets.

PS: As another illustration of the culture change toward openness in science, it was announced during the ceremony that the “Compte Rendu de l’Académie des Sciences” is becoming open access, without publication charges!

Tryton News: Newsletter December 2019

@ced wrote:

To end the year, here are some changes that focus on simplifying the usage for both users and developers.

During your holidays, you can help translate Tryton or make a donation via our new service provider.

Changes For The User

When the shipment tolerance is exceeded, in the error message we now show the quantities involved so that the user understands the reason for the error and can then adjust them as required.

The asset depreciation per year now uses a fixed year of 365 days. This prevents odd calculations when leap years are involved.

By default the web client loads 20 records. If the user wanted to see more records, they had to click on a button. Now these records are loaded automatically when the user scrolls to the bottom of the list. (For this to work, the browser needs to support IntersectionObserver.)

If you cancel a move that groups multiple lines, Tryton will warn you. Then ungroup the lines before canceling the move.

When importing OFX statements, if the payee cannot be found in the system, we add the payee content to the description. This then allows the user to perform a manual search for the correct party.

We reworked the record name of most of the lines. They also now contain the quantity in addition to the product, the order name, etc.

We improved the experience with product attributes. The selection view has been simplified, and when creating a new attribute, the name of the key is automatically deduced from the “string” (label).

Changes For The Developer

We have removed the implicit list of fields in ModelStorage.search_read. So if you were relying on this behavior, you must now update your calls to request the fields explicitly; otherwise you will only get the ids.

As a by-product of this, we now have a dedicated method that fetches data for actions and is cached by the client for 1 day.

We have dropped support for the skiptest attribute for XML <data/>. It was no longer used by any of the standard modules, and we think it is always better that the tests actually test the loading of all the data.

When adding instances to a One2Many field, the system automatically sets the reverse Many2One for each instance. This helps when writing code that needs to work with saved and unsaved records.

It is now possible to define wizard transitions that do not require a valid form to click on. For example, the “Skip” button on the reconcile wizard does not need to have a valid form.

We re-factored the code in the group line wizard. It can now be called from code without the need to instantiate the wizard. This is useful when automating workflows that require grouping lines.

We noticed that calling the getter methods on trytond.config has a non-negligible cost, especially in core methods that are called very often. So we changed some of these into global variables.

Every record/wizard/report has a __url__ attribute, which returns the tryton:// URL that the desktop client can open, but we were missing this for the web client. We now also have a __href__ attribute.

In addition to this change, we also added some helpers in trytond.url, like is_secure, host and http_host. They make it easier to compose a URL that points to Tryton’s routes.

We added a slugify tool as a helper to convert arbitrary strings to a “normalized” keyword.


Janusworx: #100DaysOfCode, Day 011 – Quick and Dirty Web Page Download

Watched another Corey Schafer video on how to scrape web pages.

Thought that would be handy in my image from a web page download project.

Corey’s an awesome teacher. The video was fun and it taught me lots.

Then started hacking away at my little project.

And then realised that the site has RSS feeds.

I could just process them instead of scraping a page.

Went looking for a quick way to do that.

Found the Universal Feed Parser.

Got the RSS feed and am now playing with it.

That is all I had time for today.

But I learnt lots.

Will probably get back to the project on Wednesday.

Office beckons for the next two days.

Hope to keep up the streak by doing something productive though.

Today was fun!

Today was also the day, I realised, I actually love coding.

I have no clue how 4 hours passed me by!

Spyder IDE: Variable Explorer improvements in Spyder 4

This blogpost was originally published on the Quansight Labs website.

Spyder 4 will be released very soon with lots of interesting new features that you'll want to check out, reflecting years of effort by the team to improve the user experience. In this post, we will be talking about the improvements made to the Variable Explorer.

These include the brand new Object Explorer for inspecting arbitrary Python variables, full support for MultiIndex dataframes with multiple dimensions, the ability to filter and search for variables by name and type, and much more.

It is important to mention that several of the above improvements were made possible through integrating the work of two other projects. Code from gtabview was used to implement the multi-dimensional Pandas indexes, while objbrowser was the foundation of the new Object Explorer.

New viewer for arbitrary Python objects

For Spyder 4 we added a long-requested feature: full support for inspecting any kind of Python object through the Variable Explorer. For many years, Spyder has been able to view and edit a small subset of Python variables: NumPy arrays, Pandas DataFrames and Series, and builtin collections (lists, dictionaries and tuples). Other objects were displayed as dictionaries of their attributes, inspecting any of which required showing a new table. This made it rather cumbersome to use this functionality, and was the reason arbitrary Python objects were hidden by default from the Variable Explorer view.

For the forthcoming Spyder release, we've integrated the excellent objbrowser project by Pepijn Kenter (@titusjan), which provides a tree-like view of Python objects, to offer a much simpler and more user-friendly way to inspect them.

As can be seen above, this viewer will also allow users to browse extra metadata about the inspected object, such as its documentation, source code and the file that holds it.

It is very important to note that this work was accomplished thanks to the generosity of Pepijn, who kindly changed the license of objbrowser to allow us to integrate it with Spyder.

To expose this new functionality, we decided to set the option to hide arbitrary Python objects in the Variable Explorer to disabled by default, and introduced a new one called Exclude callables and modules. With this enabled by default, Spyder will now display a much larger fraction of objects that can be inspected, while still excluding most "uninteresting" variables.

Finally, we added a context-menu action to open any object using the new Object Explorer even if it already has a builtin viewer (DataFrames, arrays, etc.), allowing for deeper inspection of the inner workings of these datatypes.

Multi-index support in the dataframe viewer

One of the first features we added to the Variable Explorer in Spyder 4 was MultiIndex support in its DataFrame inspector, including for multi-level and multi-dimensional indices. Spyder 3 had basic support for them, but it was very rudimentary, making inspecting such DataFrames a less than user-friendly experience.

For Spyder 4, we took advantage of the work done by Scott Hansen (@firecat53) and Yuri D'Elia (@wavexx) in their gtabview project, particularly its improved management of column and table headings, which allows the new version of Spyder to display the index shown above in a much nicer way.

Fuzzy filtering of variables

Spyder 4 also includes the ability to filter the variables shown down to only those of interest. This employs fuzzy matching between the text entered in the search field and the name and type of all available variables.
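The post doesn't spell out Spyder's matching algorithm, so the following is only a minimal sketch of one common form of fuzzy matching (case-insensitive subsequence matching), applied to both the name and the type name of each variable. The `fuzzy_match` and `filter_variables` helpers and the sample namespace are hypothetical illustrations, not Spyder's API.

```python
def fuzzy_match(query: str, text: str) -> bool:
    """Return True if every character of query appears in text, in order (case-insensitive)."""
    remaining = iter(text.lower())
    # `ch in remaining` consumes the iterator up to the first match,
    # so later characters must be found further along in the text.
    return all(ch in remaining for ch in query.lower())


def filter_variables(query: str, variables: dict) -> list:
    """Keep the names of variables whose name or type name fuzzily matches query."""
    return [
        name
        for name, value in variables.items()
        if fuzzy_match(query, name) or fuzzy_match(query, type(value).__name__)
    ]


# Hypothetical namespace, standing in for the variables of a console session.
namespace = {"df_sales": [1, 2], "dataframe_cfg": {}, "x": 3.5, "counts": {1: 2}}
print(filter_variables("dfs", namespace))   # ['df_sales']
print(filter_variables("dict", namespace))  # ['dataframe_cfg', 'counts'] (matched by type)
```

Because the characters only need to appear in order, a short query such as `dfs` narrows a namespace quickly; real implementations usually also score and rank the matches, which this sketch leaves out.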

To access this functionality, click the search icon in the Variable Explorer toolbar, or press Ctrl+F (Cmd-F on macOS) when the Variable Explorer has focus.

To remove the current filter, simply click the search icon again, or press Esc or Ctrl+F (Cmd-F) while the Variable Explorer is focused.

Refresh while code is running

We added back the ability to refresh the Variable Explorer while code is running in the console. This feature was dropped in Spyder 3.2, when we removed the old and unmaintained Python console. It will return in Spyder 4, thanks to the fantastic work done by Quentin Peter (@impact27) to completely re-architect the way Spyder talks to the Jupyter kernels that run the code in our IPython console, integrating support for Jupyter Comms.

To trigger a refresh, simply click the reload button on the Variable Explorer toolbar, or press the shortcut Ctrl+R (Cmd-R) when it has focus.

Full support for sets

In Spyder 3, the Variable Explorer could only show builtin Python sets as arbitrary objects, making it very difficult for users to browse and interact with them. In Spyder 4, you can now view sets just like lists, as well as perform various operations on them.

UI enhancements and more

Finally, beyond the headline features, we've added numerous smaller improvements to make the Variable Explorer easier and more efficient to use. These include support for custom index names, better and more efficient automatic resizing of column widths, support for displaying Pandas Indices, tooltips for truncated column headers, and more.

Spyder's Variable Explorer is what many of you consider to be one of its standout features, so we can't wait for you all to get your hands on the even better version in Spyder 4. Thanks again to Quansight, our generous community donors, and as always all of you! Spyder 4.0.0 final is planned to be released within approximately one more week, but if you'd like to test it out immediately, follow the instructions on our GitHub to install the pre-release version (which won't touch your existing Spyder install or settings). As always, happy Spydering!

BangPypers: Guidelines for BangPypers Dev Sprints

How do you get started with open source programming? How can you contribute to that framework you’ve been itching to add an extra feature to? How do you get guidance and help getting your changes merged upstream?

If you’ve wondered about any of the above even once, then you really need to attend one of our dev sprints.

What is a Dev Sprint?

A dev sprint is a day-long event where participants work on FOSS projects. The task can be fixing a bug, adding a new feature, improving test coverage or writing documentation. Participants should bring their own laptops.

Whether you’re just getting started with programming, have been pushing changes upstream for years or just want to brush up your skills, dev sprints are an excellent place to interact with the rest of the open source programming community and learn about the new and challenging problems they’re facing.

Here’s a beautiful piece on Making the most of the PyCon sprints.

As for BangPypers, we follow a strict set of guidelines to select the best projects for each sprint:

- Projects need to be in Python.

- Only projects with an existing issue/bug list will be considered. Project issues submitted for the dev sprint should be labelled BangPypers.

- Projects need to have at least 1 maintainer present for the entire duration of the dev sprint.

- Projects should have a contributing doc; here’s an example of a contributing document. If a project doesn’t have one, we (BangPypers) can help set it up.

- Projects must have a public repository under an appropriate open source license. Please do not bring in your enterprise software in private repositories; private repos will not be considered.

- Projects should be actively developed/maintained. We’ll check the last commits and the commit frequency on the repo.

- Also label your issues with difficulty classifiers such as good-first-issue/beginner, intermediate or advanced, to make it easier for participants to select issues to work on.

Submit your project here: >watch this space<

Guidelines for the participants:

- Your own laptop, with the software you need pre-configured so you can get started.

- Read the description or README for the project you’re interested in. Go through any and all prerequisites for the project, and try to set up the project on your system before coming.

- Knowledge of Git/svn/hg, depending on what the project uses.

- Read and abide by the Code of Conduct that your project might have. Here’s an example of Code of Conduct.

How can I pick a project?

Please go through the list of projects and their issues. If a project interests you, pick it. If you have any questions about the project, please leave a comment here. Then go through the issue tracker and pick an issue to work on. If an issue isn’t clear, ask questions.

Don’t worry if you feel nervous and all this seems a bit intimidating. We’ll have mentors to guide you every step of the way.

Here are some of our previous dev sprints:

Get ready for our next BangPypers dev sprint, in February 2020.

Mike Driscoll: PyDev of the Week: Bob Belderbos

This week we welcome Bob Belderbos (@bbelderbos) as our PyDev of the Week! Bob is a co-founder of PyBites. Bob has also contributed to Real Python and he’s a Talk Python trainer. You can learn more about Bob by checking out his website or visiting his GitHub profile. Let’s spend some quality time getting to know Bob better!

Can you tell us a little about yourself (hobbies, education, etc):

I am a software developer currently working at Oracle in the Global Construction Engineering group. But I am probably better known as co-founder of PyBites, a community that masters Python through code challenges.

I have a business economics background. After finishing my studies in 2004, though, I migrated from Holland to Spain and started working in the IT industry. I got fired up about programming. I taught myself web design and coding and started living my biggest passion: automating the boring stuff to make other people’s lives easier.

When not coding, I love spending time with my family (I’m a dad of 2), working out and reading books, and (if time allows one day) I would love to pick up painting and Italian again.

Why did you start using Python?

Back at Sun Microsystems I built a suite of support tools to diagnose server faults. I went from shell scripting to Perl but it quickly became a maintenance nightmare. Enter Python. After getting used to the required indenting, I fell in love with Python. I was amazed how much happier it made me as a developer (Eric Raymond’s Why Python? really resonated with me).

Since then I have never looked back. Even if I wanted to, with PyBites it’s now even harder to seriously invest in other languages (more on this later).

What other programming languages do you know and which is your favorite?

I started my software journey building websites using PHP and MySQL. I taught myself a good foundation of HTML and CSS which serves me to this day.

As a web developer, it’s important to know JavaScript; it powers most of the web! It definitely is not Python, but the more I use it the more I come to appreciate the language. Lastly, I learned some Java years ago but did not find a use case for it except writing an Android game. I am fortunate to be able to use Python for almost all my work these days and it’s by far my favorite programming language.

What projects are you working on now?

Almost all my free time goes towards PyBites and in particular our CodeChalleng.es platform. We rolled out a lot of exciting features lately: learning paths, Newbie Bites (our exercises are called Bites) and flake8/black.

The platform is built using Django and it uses AWS Lambda for code execution. You can listen to me talking about the stack here.

Apart from platform dev, there are the actual Bite exercises (currently 229). I enjoy adding new ones (myself or working with our Bite authors), and helping people with their code.

Altogether we now have a great ecosystem which allows us to teach (and learn) more Python every day. This is enormously satisfying!

What’s the origin story for PyBites?

Julian and myself, after years of friendship and brainstorming, literally said one day: “if we want to make an impact, we need to start a project of some kind, get ourselves out there!”.

The subject turned out to be Python: I wanted to improve my skills and Julian wanted to learn Python programming from scratch. This difference in (initial) skill level added a nice dynamic to it.

We started our pybit.es blog where we shared what we learned every single week. As we learned from The Compound Effect: “To up your chances of success, get a success buddy, someone who’ll keep you accountable as you cement your new habit while you return the favor.” – this really worked in our favor!

One thing stood out for us: “to learn to code you need to write a lot of code!”. Around the same time we heard about a fun experiment Noah Kagan was doing: the coffee challenge. This led to our blog code challenges which ultimately led to our #100DaysOfCode journey (and course) and our CodeChalleng.es platform.

What kinds of challenges did you face with the project?

We had to learn how to balance work and family life. Doing this alongside a full-time job meant a great personal time investment. You have to organize, think about scale and delegate things to not get overwhelmed / burned out.

Which leads to the second challenge we had: learning about the marketing/ business side. “Build it and they will come”… well not exactly! A great challenge is to build mechanisms to get feedback and iterate quickly (“kaizen”) to make a product people actually want/need.

Which Python libraries are your favorite (core or 3rd party)?

In the standard library there are a lot of gems which I get to use at work or on our platform. Some favorites: collections (namedtuple/ defaultdict/ Counter), itertools, re and datetime/ calendar.

External modules: with 200K packages on PyPI, I have only used the tip of the iceberg. However, there are some go-to libraries: requests, feedparser, BeautifulSoup, dateutil, pandas and Django of course.

When we learn something cool we try to wrap it in one or more Bites and/or post a tip to Twitter, check out our collection and add your own here.

Is there anything else you’d like to say?

Thanks for having me on. I enjoy reading these interviews, what moves other developers, it’s really inspiring. Keep up the good work!

And we would not be PyBites without a call to action: as Jake VanderPlas said: “Don’t set out to learn Python. Choose a problem you’re interested in and learn to solve it with Python.” – visit us at CodeChalleng.es and get coding!

Thanks for doing the interview, Bob!

The post PyDev of the Week: Bob Belderbos appeared first on The Mouse Vs. The Python.

Kushal Das: IP addresses which tried to break into this server in 2019

This Friday, I looked into the SSH failures on my servers, to see how different systems/bots/people tried to break into them. I have the logs from July this year (when I moved to newer servers).

Following the standard trends, most of the IP addresses are working as a staging area for attacks by other malware or people, so most of them are likely not the real people/places from which the attacks originate. There are 2.3k+ IP addresses in this list.
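The post doesn't say how the failures were extracted from the logs. As a minimal sketch, assuming the standard OpenSSH `sshd` messages found in files such as `/var/log/auth.log`, failed attempts can be tallied per IP address with a regular expression and `collections.Counter`. The regex, the `count_attempts` helper and the sample lines (which use reserved documentation IP ranges) are illustrative assumptions.

```python
import re
from collections import Counter

# Assumed OpenSSH log formats; adjust the pattern for your distribution.
FAILED_LOGIN = re.compile(
    r"sshd\[\d+\]: (?:Failed password for (?:invalid user )?\S+|Invalid user \S*) "
    r"from (?P<ip>\d{1,3}(?:\.\d{1,3}){3}) port \d+"
)


def count_attempts(lines):
    """Count failed-login log lines per source IPv4 address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match["ip"]] += 1
    return counts


sample = [
    "Nov 29 10:15:01 srv sshd[101]: Invalid user admin from 203.0.113.5 port 52314",
    "Nov 29 10:15:03 srv sshd[101]: Failed password for invalid user admin from 203.0.113.5 port 52314 ssh2",
    "Nov 29 10:16:40 srv sshd[102]: Failed password for root from 198.51.100.7 port 4242 ssh2",
    "Nov 29 10:17:00 srv sshd[103]: Accepted publickey for kushal from 192.0.2.1 port 5555 ssh2",
]
print(count_attempts(sample).most_common())
# [('203.0.113.5', 2), ('198.51.100.7', 1)]
```

This counts log lines, so a single connection that logs both `Invalid user` and `Failed password` is counted twice; match only one message type if that matters. In practice you would pass an open log file instead of the sample list.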

Origin IP locations

The above is a map of all of the IP addresses which tried to break into my system.

Attempts by country

There is a big red circle over Belgium because one particular IP address from there tried 3k+ times; China comes second, and the USA is in third place. I made the map a static image because that is lighter on page load.

Country  Attempts
BE       3032
CN       1577
US       978
FR       934
RU       807
SG       483
DE       405
NL       319
CA       279
KR       276
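Turning per-IP counts into per-country totals like the ones above is a straightforward aggregation. The sketch below assumes you already have some IP-to-country lookup (for example, a GeoIP database); here a plain dictionary stands in for it, and both the `attempts_by_country` helper and the sample numbers are made up for illustration.

```python
from collections import Counter


def attempts_by_country(ip_counts, country_of):
    """Sum per-IP attempt counts into per-country totals.

    `country_of` is any callable mapping an IP string to a country code;
    in this sketch it is a hypothetical stand-in for a real GeoIP lookup.
    """
    totals = Counter()
    for ip, n in ip_counts.items():
        totals[country_of(ip)] += n
    return totals


# Hypothetical data: documentation IP ranges with invented countries and counts.
ip_counts = Counter({"203.0.113.5": 5, "198.51.100.7": 2, "192.0.2.9": 1, "198.51.100.8": 1})
geo = {"203.0.113.5": "BE", "198.51.100.7": "CN", "192.0.2.9": "US", "198.51.100.8": "CN"}

for country, n in attempts_by_country(ip_counts, geo.get).most_common(10):
    print(country, n)
# BE 5
# CN 3
# US 1
```

`most_common(10)` gives a top-ten list in the same shape as the table above; with `geo.get`, any IP missing from the lookup is grouped under `None`.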

Known vs. unknown IP addresses

I also checked the IP addresses against the AlienVault database; AlienVault is an open threat intelligence community, and its API is very simple to use.

According to AlienVault, 1513 of the IP addresses are already known for similar kinds of attacks, and 864 are unknown. In the coming days, I will submit these IP addresses back to AlienVault.

Learning how to draw that heatmap on the world map took me the most time. I will write a separate blog post on that topic.

Trey Hunner: Cyber Monday Python Sales

I’m running a sale that ends in 24 hours, but I’m not the only one. This post is a compilation of the different Cyber Monday deals I’ve found related to Python and Python learning.

Python Morsels, weekly skill-building for professional Pythonistas

Python Morsels is my weekly Python skill-building service.

I’m offering something sort of like a “buy one get one free” sale this year.

You can pay $200 to get 2 redemption codes, each worth 12 months of Python Morsels.

You can use one code for yourself and give one to a friend. Or you could be extra generous and give them both away to two friends. Either way, 2 people are each getting one year’s worth of weekly Python training.

You can find more details on this sale here.

Data School’s Machine Learning course

Kevin Markham of Data School is selling his “Machine Learning with Text in Python” course for $195 (it’s usually $295). You can find more details on this sale on the Data School Black Friday post.

Talk Python Course Bundle

Michael Kennedy is selling a bundle that includes every Talk Python course for $250.

There are 20 courses included in this bundle. If you’re into Python and you don’t already own most of these courses, this bundle could be a really good deal for you.

Reuven Lerner’s Python courses

Reuven Lerner is offering 50% off his courses. Reuven has courses on Python, Git, and regular expressions.

This sale also includes Reuven’s Weekly Python Exercise, which is similar to Python Morsels, but has its own flavor. You could sign up for both if you want double the weekly learning.

Real Python courses

Real Python is also offering $40 off their annual memberships. Real Python has many tutorials and courses as well.

PyBites Code Challenges

Bob and Julian of PyBites are offering a 40% discount on their Newbie Bites on their PyBites Code Challenges platform.

If you’re new to Python and programming, check out their newbie bites.

Other Cyber Monday deals?

If you have questions about the Python Morsels sale, email me.

The Python Morsels sale and likely all the other sales above will end in the next 24 hours, probably sooner depending on when you’re reading this.

So go check them out!

Did I miss a deal that you know about? Link to it in the comments!

Django Weblog: Django security releases issued: 2.2.8 and 2.1.15

In accordance with our security release policy, the Django team is issuing Django 2.2.8 and Django 2.1.15. These releases address the security issue detailed below. We encourage all users of Django to upgrade as soon as possible.

CVE-2019-19118: Privilege escalation in the Django admin.

Since Django 2.1, a Django model admin displaying a parent model with related model inlines, where the user has view-only permissions to a parent model but edit permissions to the inline model, would display a read-only view of the parent model but editable forms for the inline.

Submitting these forms would not allow direct edits to the parent model, but would trigger the parent model's save() method, and cause pre and post-save signal handlers to be invoked. This is a privilege escalation as a user who lacks permission to edit a model should not be able to trigger its save-related signals.

To resolve this issue, the permission handling code of the Django admin interface has been changed. Now, if a user has only the "view" permission for a parent model, the entire displayed form will not be editable, even if the user has permission to edit models included in inlines.
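To make the permission change concrete, here is a toy model of the rule as described in this announcement. It is not Django's actual code (the real fix lives in the admin's change-form handling); the `Perms` class and both helper functions are hypothetical, and add/delete permissions are ignored for brevity.

```python
from dataclasses import dataclass


@dataclass
class Perms:
    """Hypothetical summary of a user's admin permissions on one model."""
    view: bool = False
    change: bool = False


def inline_editable_before_fix(parent: Perms, inline: Perms) -> bool:
    # Behaviour described for the affected versions: the inline's own change
    # permission decided editability, even when the parent was view-only.
    return inline.change


def inline_editable_after_fix(parent: Perms, inline: Perms) -> bool:
    # Behaviour described for 2.2.8 / 2.1.15: a view-only parent makes the
    # entire displayed form, inlines included, read-only.
    return parent.change and inline.change


view_only_parent = Perms(view=True)
editable_inline = Perms(view=True, change=True)
print(inline_editable_before_fix(view_only_parent, editable_inline))  # True
print(inline_editable_after_fix(view_only_parent, editable_inline))   # False
```

The first result is the escalation path: submitting the editable inline forms would trigger the parent's `save()` and its signal handlers, even though the user can only view the parent.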

This is a backwards-incompatible change, and the Django security team is aware that some users of Django were depending on the ability to allow editing of inlines in the admin form of an otherwise view-only parent model.

Given the complexity of the Django admin, and in particular the permissions-related checks, it is the view of the Django security team that this change was necessary: it is not currently feasible to maintain the existing behavior whilst escaping the potential privilege escalation in a way that would avoid a recurrence of similar issues in the future and be compatible with Django's safe-by-default philosophy.

For the time being, developers whose applications are affected by this change should replace the use of inlines in read-only parents with custom forms and views that explicitly implement the desired functionality. In the longer term, adding a documented, supported, and properly-tested mechanism for partially-editable multi-model forms to the admin interface may occur in Django itself.

Thank you to Shen Ying for reporting this issue.

Affected supported versions

- Django master branch

- Django 3.0 (which will be released in a separate blog post later today)

- Django 2.2

- Django 2.1

Resolution

Patches to resolve the issue have been applied to Django's master branch and the 3.0, 2.2, and 2.1 release branches. The patches may be obtained from the following changesets:

- On the master branch

- On the 3.0 release branch

- On the 2.2 release branch

- On the 2.1 release branch

The following releases have been issued:

- Django 2.2.8 (download Django 2.2.8 | 2.2.8 checksums)

- Django 2.1.15 (download Django 2.1.15 | 2.1.15 checksums)

The PGP key ID used for these releases is Carlton Gibson: E17DF5C82B4F9D00.

General notes regarding security reporting

As always, we ask that potential security issues be reported via private email to security@djangoproject.com, and not via Django's Trac instance or the django-developers list. Please see our security policies for further information.

Made With Mu: A Manga Book on CircuitPython and Mu

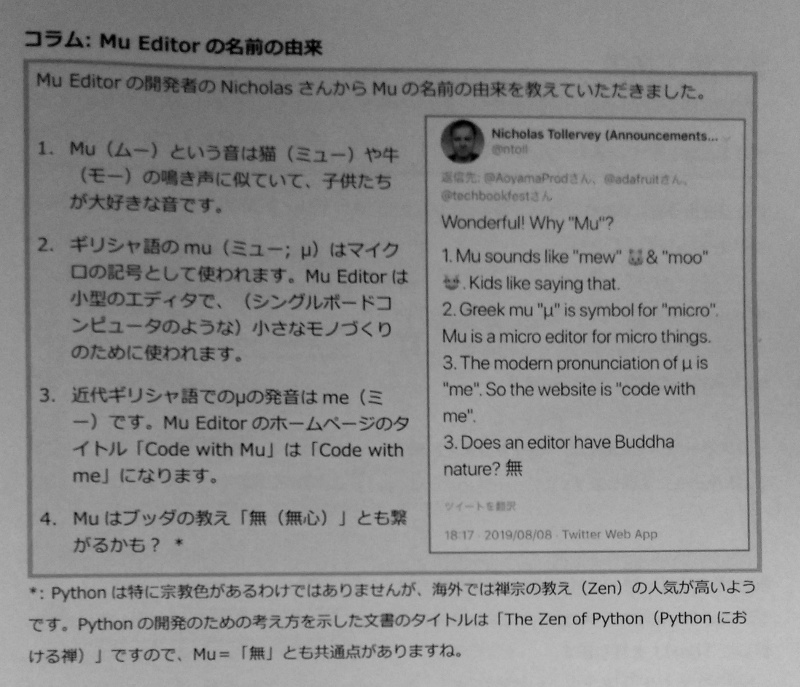

I was recently contacted by Mitsuharu Aoyama (青山光春), a Japanese maker and author. It turns out they’ve written a cool book about using CircuitPython on the Circuit Playground Express with Mu. Not only is it really great to see folks write about Mu, but the book has wonderful Manga artwork.

Many congratulations to everyone involved in publishing this book!

Mitsuharu is part of STEAM Tokyo whose activities you can follow on Twitter.

Our paths had crossed via Twitter while the book was being written, and I was rather pleased to see that the origin story for the name “Mu” got a mention, since I had shared it with Mitsuharu in a tweet. As you’ll read below (and in typical fashion for me), there are many layers to my reason for the choice of name.

Most of all, I’m pleased such a book exists because it’s validation that all the work done by the volunteers who contribute to Mu is paying off, especially so when it comes to translation and accessibility efforts. Folks all over the world see value in using Mu to help with their journey into programming, especially when used with wonderful projects such as Adafruit’s CircuitPython and their amazing line of boards.

If you’re a Japanese speaker, a teacher of Japanese students or a Japanophile, you can purchase the book from our friends over at Adafruit.