If you’re like most Python users, including me, then you probably started your Python journey by learning about print(). It helped you write your very own hello world one-liner. You can use it to display formatted messages onto the screen and perhaps find some bugs. But if you think that’s all there is to know about Python’s print() function, then you’re missing out on a lot!

Keep reading to take full advantage of this seemingly boring and unappreciated little function. This tutorial will get you up to speed with using Python print() effectively. However, prepare for a deep dive as you go through the sections. You may be surprised how much print() has to offer!

By the end of this tutorial, you’ll know how to:

- Avoid common mistakes with Python’s print()
- Deal with newlines, character encodings, and buffering
- Write text to files
- Mock print() in unit tests
- Build advanced user interfaces in the terminal

If you’re a complete beginner, then you’ll benefit most from reading the first part of this tutorial, which illustrates the essentials of printing in Python. Otherwise, feel free to skip that part and jump around as you see fit.

Note: print() was a major addition to Python 3, in which it replaced the old print statement available in Python 2.

There were a number of good reasons for that, as you’ll see shortly. Although this tutorial focuses on Python 3, it does show the old way of printing in Python for reference.

Printing in a Nutshell

Let’s jump in by looking at a few real-life examples of printing in Python. By the end of this section, you’ll know every possible way of calling print(). Or, in programmer lingo, you’d say you’ll be familiar with the function signature.

Calling Print

The simplest example of using Python print() requires just a few keystrokes:

    >>> print()

You don’t pass any arguments, but you still need to put empty parentheses at the end, which tell Python to actually execute the function rather than just refer to it by name.

This will produce an invisible newline character, which in turn will cause a blank line to appear on your screen. You can call print() multiple times like this to add vertical space. It’s just as if you were hitting Enter on your keyboard in a word processor.

A newline character is a special control character used to indicate the end of a line (EOL). It usually doesn’t have a visible representation on the screen, but some text editors can display such non-printable characters with little graphics.

The word “character” is somewhat of a misnomer in this case, because a newline is often more than one character long. For example, the Windows operating system, as well as the HTTP protocol, represent newlines with a pair of characters. Sometimes you need to take those differences into account to design truly portable programs.

To find out what constitutes a newline in your operating system, use Python’s built-in os module.

This will immediately tell you that Windows and DOS represent the newline as a sequence of \r followed by \n:

    >>> import os
    >>> os.linesep
    '\r\n'

On Unix, Linux, and recent versions of macOS, it’s a single \n character:

    >>> import os
    >>> os.linesep
    '\n'

The classic Mac OS (version 9 and earlier), however, sticks to its own “think different” philosophy by choosing yet another representation:

    >>> import os
    >>> os.linesep
    '\r'

Notice how these characters appear in string literals. They use special syntax with a preceding backslash (\) to denote the start of an escape character sequence. Such sequences allow for representing control characters, which would be otherwise invisible on screen.

Most programming languages come with a predefined set of escape sequences for special characters such as these:

- \\: backslash
- \b: backspace
- \t: tab
- \r: carriage return (CR)
- \n: newline, also known as line feed (LF)

The last two are reminiscent of mechanical typewriters, which required two separate commands to insert a newline. The first command would move the carriage back to the beginning of the current line, while the second one would advance the roll to the next line.

By comparing the corresponding ASCII character codes, you’ll see that putting a backslash in front of a character changes its meaning completely. However, not all characters allow for this–only the special ones.

To compare ASCII character codes, you may want to use the built-in ord() function:

    >>> ord('r')
    114
    >>> ord('\r')
    13

Keep in mind that, in order to form a correct escape sequence, there must be no space between the backslash character and a letter!

As you just saw, calling print() without arguments results in a blank line, which is a line comprised solely of the newline character. Don’t confuse this with an empty line, which doesn’t contain any characters at all, not even the newline!

You can use Python’s string literals to visualize these two:

    '\n'  # Blank line
    ''    # Empty line

The first one is one character long, whereas the second one has no content.

Note: To remove the newline character from a string in Python, use its .rstrip() method, like this:

    >>> 'A line of text.\n'.rstrip()
    'A line of text.'

This strips any trailing whitespace from the right edge of the string of characters.
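Because .rstrip() without arguments removes every kind of trailing whitespace, it can strip more than just the newline. Here is a minimal sketch, using a made-up sample string, that contrasts it with passing '\n' explicitly so that only newline characters are removed:

```python
# Made-up sample: the text ends with a space, a tab, and a newline.
line = 'A line of text. \t\n'

# No argument: all trailing whitespace goes away.
stripped_all = line.rstrip()

# Explicit '\n': only trailing newline characters are removed.
stripped_newline = line.rstrip('\n')

print(repr(stripped_all))      # 'A line of text.'
print(repr(stripped_newline))  # 'A line of text. \t'
```

Which variant you want depends on whether the trailing spaces and tabs carry any meaning in your data.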

In a more common scenario, you’d want to communicate some message to the end user. There are a few ways to achieve this.

First, you may pass a string literal directly to print():

    >>> print('Please wait while the program is loading...')

This will print the message verbatim onto the screen.

String literals in Python can be enclosed either in single quotes (') or double quotes ("). According to the official PEP 8 style guide, you should just pick one and keep using it consistently. There’s no difference, unless you need to nest one in another.

For example, you can’t use double quotes for the literal and also include double quotes inside of it, because that’s ambiguous for the Python interpreter:

    "My favorite book is "Python Tricks""  # Wrong!

What you want to do is enclose the text, which contains double quotes, within single quotes:

'My favorite book is "Python Tricks"'

The same trick would work the other way around:

"My favorite book is 'Python Tricks'"

Alternatively, you could use escape character sequences mentioned earlier, to make Python treat those internal double quotes literally as part of the string literal:

"My favorite book is \"Python Tricks\""

Escaping is fine and dandy, but it can sometimes get in the way. Specifically, when you need your string to contain relatively many backslash characters in literal form.

One classic example is a file path on Windows:

    'C:\Users\jdoe'  # Wrong!
    'C:\\Users\\jdoe'

Notice how each backslash character needs to be escaped with yet another backslash.

This is even more prominent with regular expressions, which quickly get convoluted due to the heavy use of special characters:

'^\\w:\\\\(?:(?:(?:[^\\\\]+)?|(?:[^\\\\]+)\\\\[^\\\\]+)*)$'

Fortunately, you can turn off character escaping entirely with the help of raw-string literals. Simply prepend an r or R before the opening quote, and now you end up with this:

    r'C:\Users\jdoe'
    r'^\w:\\(?:(?:(?:[^\\]+)?|(?:[^\\]+)\\[^\\]+)*)$'

That’s much better, isn’t it?

There are a few more prefixes that give special meaning to string literals in Python, but you won’t get into them here.

Lastly, you can define multi-line string literals by enclosing them between ''' or """, which are often used as docstrings.

Here’s an example:

    """
    This is an example
    of a multi-line string
    in Python.
    """

To prevent an initial newline, simply put the text right after the opening """:

    """This is an example
    of a multi-line string
    in Python.
    """

You can also use a backslash to get rid of the newline:

    """\
    This is an example
    of a multi-line string
    in Python.
    """

To remove indentation from a multi-line string, you might take advantage of the built-in textwrap module:

    >>> import textwrap
    >>> paragraph = '''
    ...     This is an example
    ...     of a multi-line string
    ...     in Python.
    ...     '''
    ...
    >>> print(paragraph)

        This is an example
        of a multi-line string
        in Python.

    >>> print(textwrap.dedent(paragraph).strip())
    This is an example
    of a multi-line string
    in Python.

This will take care of unindenting paragraphs for you. There are also a few other useful functions in textwrap for text alignment you’d find in a word processor.
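To give a taste of those other helpers, here is a small sketch using textwrap.fill(), textwrap.indent(), and textwrap.shorten() from the standard library. The sample sentence and the chosen widths are arbitrary picks for illustration only:

```python
import textwrap

text = 'This is an example of a multi-line string in Python.'

# Wrap the text so that no line is longer than 20 characters.
wrapped = textwrap.fill(text, width=20)

# Prefix every wrapped line with two spaces.
indented = textwrap.indent(wrapped, '  ')

# Collapse whitespace and truncate to at most 25 characters.
shortened = textwrap.shorten(text, width=25, placeholder='...')

print(wrapped)
print(indented)
print(shortened)
```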

Secondly, you could extract that message into its own variable with a meaningful name to enhance readability and promote code reuse:

    >>> message = 'Please wait while the program is loading...'
    >>> print(message)

Lastly, you could pass an expression, like string concatenation, to be evaluated before printing the result:

    >>> import os
    >>> print('Hello, ' + os.getlogin() + '! How are you?')
    Hello, jdoe! How are you?

In fact, there are a dozen ways to format messages in Python. I highly encourage you to take a look at f-strings, introduced in Python 3.6, because they offer the most concise syntax of them all:

    >>> import os
    >>> print(f'Hello, {os.getlogin()}! How are you?')

Moreover, f-strings will prevent you from making a common mistake, which is forgetting to type cast concatenated operands. Python is a strongly typed language, which means it won’t allow you to do this:

    >>> 'My age is ' + 42
    Traceback (most recent call last):
      File "<input>", line 1, in <module>
        'My age is ' + 42
    TypeError: can only concatenate str (not "int") to str

That’s wrong because adding numbers to strings doesn’t make sense. You need to explicitly convert the number to string first, in order to join them together:

    >>> 'My age is ' + str(42)
    'My age is 42'

Unless you handle such errors yourself, the Python interpreter will let you know about a problem by showing a traceback.

Note: str() is a global built-in function that converts an object into its string representation.

You can call it directly on any object, for example, a number:

    >>> str(3.14)
    '3.14'

Built-in data types have a predefined string representation out of the box, but later in this article, you’ll find out how to provide one for your custom classes.

As with any function, it doesn’t matter whether you pass a literal, a variable, or an expression. Unlike many other functions, however, print() will accept anything regardless of its type.

So far, you only looked at the string, but how about other data types? Let’s try literals of different built-in types and see what comes out:

    >>> print(42)                            # <class 'int'>
    42
    >>> print(3.14)                          # <class 'float'>
    3.14
    >>> print(1 + 2j)                        # <class 'complex'>
    (1+2j)
    >>> print(True)                          # <class 'bool'>
    True
    >>> print([1, 2, 3])                     # <class 'list'>
    [1, 2, 3]
    >>> print((1, 2, 3))                     # <class 'tuple'>
    (1, 2, 3)
    >>> print({'red', 'green', 'blue'})      # <class 'set'>
    {'red', 'green', 'blue'}
    >>> print({'name': 'Alice', 'age': 42})  # <class 'dict'>
    {'name': 'Alice', 'age': 42}
    >>> print('hello')                       # <class 'str'>
    hello

Watch out for the None constant, though. Despite being used to indicate an absence of a value, it will show up as 'None' rather than an empty string:

    >>> print(None)
    None

How does print() know how to work with all these different types? Well, the short answer is that it doesn’t. It implicitly calls str() behind the scenes to type cast any object into a string. Afterward, it treats strings in a uniform way.

Later in this tutorial, you’ll learn how to use this mechanism for printing custom data types such as your classes.
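The implicit str() call can be made visible with a rough stand-in. The sketch below assumes a hypothetical helper named print_by_hand(), which is not part of Python, and checks that converting with str() and writing the result yields the same text as print() for a few built-in values:

```python
import io


def print_by_hand(value, stream):
    """Hypothetical stand-in for print(value): convert with str(), then write."""
    stream.write(str(value))
    stream.write('\n')


for value in (42, 3.14, None, [1, 2, 3]):
    expected, actual = io.StringIO(), io.StringIO()
    print(value, file=expected)    # the real thing
    print_by_hand(value, actual)   # the manual equivalent
    assert expected.getvalue() == actual.getvalue()
```

The in-memory io.StringIO objects are only there to capture the text so that the two results can be compared.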

Okay, you’re now able to call print() with a single argument or without any arguments. You know how to print fixed or formatted messages onto the screen. The next subsection will expand on message formatting a little bit.

To achieve the same result in the previous language generation, you’d normally want to drop the parentheses enclosing the text:

    # Python 2
    print
    print 'Please wait...'
    print 'Hello, %s! How are you?' % os.getlogin()
    print 'Hello, %s. Your age is %d.' % (name, age)

That’s because print wasn’t a function back then, as you’ll see in the next section. Note, however, that in some cases parentheses in Python are redundant. It wouldn’t harm to include them as they’d just get ignored. Does that mean you should be using the print statement as if it were a function? Absolutely not!

For example, parentheses enclosing a single expression or a literal are optional. Both instructions produce the same result in Python 2:

    >>> # Python 2
    >>> print 'Please wait...'
    Please wait...
    >>> print('Please wait...')
    Please wait...

Round brackets are actually part of the expression rather than the print statement. If your expression happens to contain only one item, then it’s as if you didn’t include the brackets at all.

On the other hand, putting parentheses around multiple items forms a tuple:

    >>> # Python 2
    >>> print 'My name is', 'John'
    My name is John
    >>> print('My name is', 'John')
    ('My name is', 'John')

This is a known source of confusion. In fact, you’d also get a tuple by appending a trailing comma to the only item surrounded by parentheses:

    >>> # Python 2
    >>> print('Please wait...')
    Please wait...
    >>> print('Please wait...',)  # Notice the comma
    ('Please wait...',)

The bottom line is that you shouldn’t call print with brackets in Python 2. Although, to be completely accurate, you can work around this with the help of a __future__ import, which you’ll read more about in the relevant section.

Separating Multiple Arguments

You saw print() called without any arguments to produce a blank line and then called with a single argument to display either a fixed or a formatted message.

However, it turns out that this function can accept any number of positional arguments, including zero, one, or more arguments. That’s very handy in a common case of message formatting, where you’d want to join a few elements together.

Arguments can be passed to a function in one of several ways. One way is by explicitly naming the arguments when you’re calling the function, like this:

    >>> def div(a, b):
    ...     return a / b
    ...
    >>> div(a=3, b=4)
    0.75

Since arguments can be uniquely identified by name, their order doesn’t matter. Swapping them out will still give the same result:

    >>> div(b=4, a=3)
    0.75

Conversely, arguments passed without names are identified by their position. That’s why positional arguments need to follow strictly the order imposed by the function signature:

    >>> div(3, 4)
    0.75
    >>> div(4, 3)
    1.3333333333333333

print() allows an arbitrary number of positional arguments thanks to the *args parameter.

Let’s have a look at this example:

    >>> import os
    >>> print('My name is', os.getlogin(), 'and I am', 42)
    My name is jdoe and I am 42

print() concatenated all four arguments passed to it, and it inserted a single space between them so that you didn’t end up with a squashed message like 'My name isjdoeand I am42'.

Notice that it also took care of proper type casting by implicitly calling str() on each argument before joining them together. If you recall from the previous subsection, a naïve concatenation may easily result in an error due to incompatible types:

    >>> print('My age is: ' + 42)
    Traceback (most recent call last):
      File "<input>", line 1, in <module>
        print('My age is: ' + 42)
    TypeError: can only concatenate str (not "int") to str

Apart from accepting a variable number of positional arguments, print() defines four named or keyword arguments, which are optional since they all have default values. You can view their brief documentation by calling help(print) from the interactive interpreter.

Let’s focus on sep just for now. It stands for separator and is assigned a single space (' ') by default. It determines the value to join elements with.

It has to be either a string or None, but the latter has the same effect as the default space:

    >>> print('hello', 'world', sep=None)
    hello world
    >>> print('hello', 'world', sep=' ')
    hello world
    >>> print('hello', 'world')
    hello world

If you wanted to suppress the separator completely, you’d have to pass an empty string ('') instead:

    >>> print('hello', 'world', sep='')
    helloworld

You may want print() to join its arguments as separate lines. In that case, simply pass the escaped newline character described earlier:

    >>> print('hello', 'world', sep='\n')
    hello
    world

A more useful example of the sep parameter would be printing something like file paths:

    >>> print('home', 'user', 'documents', sep='/')
    home/user/documents

Remember that the separator comes between the elements, not around them, so you need to account for that in one way or another:

    >>> print('/home', 'user', 'documents', sep='/')
    /home/user/documents
    >>> print('', 'home', 'user', 'documents', sep='/')
    /home/user/documents

Specifically, you can insert a slash character (/) into the first positional argument, or use an empty string as the first argument to enforce the leading slash.

Note: Be careful about joining elements of a list or tuple.

Doing it manually will result in a well-known TypeError if at least one of the elements isn’t a string:

    >>> print(' '.join(['jdoe is', 42, 'years old']))
    Traceback (most recent call last):
      File "<input>", line 1, in <module>
        print(' '.join(['jdoe is', 42, 'years old']))
    TypeError: sequence item 1: expected str instance, int found

It’s safer to just unpack the sequence with the star operator (*) and let print() handle type casting:

    >>> print(*['jdoe is', 42, 'years old'])
    jdoe is 42 years old

Unpacking is effectively the same as calling print() with individual elements of the list.
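To see how *args, the implicit str() conversion, and sep fit together, consider the sketch below. The join_like_print() function is a hypothetical helper written for illustration, not how print() is actually implemented:

```python
def join_like_print(*args, sep=' '):
    """Hypothetical helper: mimic how print() joins its positional arguments."""
    if sep is None:
        sep = ' '  # None falls back to the default single space
    # Convert every argument to a string before joining, as print() does.
    return sep.join(str(arg) for arg in args)


elements = ['jdoe is', 42, 'years old']

print(join_like_print(*elements))                             # jdoe is 42 years old
print(join_like_print('home', 'user', 'documents', sep='/'))  # home/user/documents
```

Unlike a bare ' '.join(elements), this version doesn’t fail on the integer because each element goes through str() first.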

One more interesting example could be exporting data to a comma-separated values (CSV) format:

    >>> print(1, 'Python Tricks', 'Dan Bader', sep=',')
    1,Python Tricks,Dan Bader

This wouldn’t handle edge cases such as escaping commas correctly, but for simple use cases, it should do. The line above would show up in your terminal window. In order to save it to a file, you’d have to redirect the output. Later in this section, you’ll see how to use print() to write text to files straight from Python.
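When values may contain commas or quotes, the standard library’s csv module is one way to get the escaping right. The following sketch writes to an in-memory buffer; the second row is made up to trigger the edge cases:

```python
import csv
import io

rows = [
    [1, 'Python Tricks', 'Dan Bader'],
    [2, 'Hello, World', 'Jane "JD" Doe'],  # made-up row with a comma and quotes
]

buffer = io.StringIO()
writer = csv.writer(buffer, lineterminator='\n')
writer.writerows(rows)

csv_text = buffer.getvalue()
print(csv_text, end='')  # the text already ends with a newline
```

Fields that contain the delimiter or a quote character come out wrapped in double quotes, with embedded quotes doubled.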

Finally, the sep parameter isn’t constrained to a single character only. You can join elements with strings of any length:

    >>> print('node', 'child', 'child', sep=' -> ')
    node -> child -> child

In the upcoming subsections, you’ll explore the remaining keyword arguments of the print() function.

To print multiple elements in Python 2, you must drop the parentheses around them, just like before:

    >>> # Python 2
    >>> import os
    >>> print 'My name is', os.getlogin(), 'and I am', 42
    My name is jdoe and I am 42

If you kept them, on the other hand, you’d be passing a single tuple element to the print statement:

    >>> # Python 2
    >>> import os
    >>> print('My name is', os.getlogin(), 'and I am', 42)
    ('My name is', 'jdoe', 'and I am', 42)

Moreover, there’s no way of altering the default separator of joined elements in Python 2, so one workaround is to use string interpolation like so:

    >>> # Python 2
    >>> import os
    >>> print 'My name is %s and I am %d' % (os.getlogin(), 42)
    My name is jdoe and I am 42

That was the default way of formatting strings until the .format() method got backported from Python 3.

Preventing Line Breaks

Sometimes you don’t want to end your message with a trailing newline so that subsequent calls to print() will continue on the same line. Classic examples include updating the progress of a long-running operation or prompting the user for input. In the latter case, you want the user to type in the answer on the same line:

Are you sure you want to do this? [y/n] y

Many programming languages expose functions similar to print() through their standard libraries, but they let you decide whether to add a newline or not. For example, in Java and C#, you have two distinct functions, while other languages require you to explicitly append \n at the end of a string literal.

Here are a few examples of syntax in such languages:

| Language | Example |
|---|---|
| Perl | print "hello world\n" |
| C | printf("hello world\n"); |
| C++ | std::cout << "hello world" << std::endl; |

In contrast, Python’s print() function always adds \n without asking, because that’s what you want in most cases. To disable it, you can take advantage of yet another keyword argument, end, which dictates what to end the line with.

In terms of semantics, the end parameter is almost identical to the sep one that you saw earlier:

- It must be a string or None.
- It can be arbitrarily long.
- It has a default value of '\n'.
- If equal to None, it’ll have the same effect as the default value.
- If equal to an empty string (''), it’ll suppress the newline.

Now you understand what’s happening under the hood when you’re calling print() without arguments. Since you don’t provide any positional arguments to the function, there’s nothing to be joined, and so the default separator isn’t used at all. However, the default value of end still applies, and a blank line shows up.
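One way to check these rules is to capture what print() writes and inspect it with repr(). The capture() function below is a hypothetical helper introduced only for this sketch:

```python
import io


def capture(*args, **kwargs):
    """Hypothetical helper: return the exact text that print() would write."""
    buffer = io.StringIO()
    print(*args, file=buffer, **kwargs)
    return buffer.getvalue()


print(repr(capture()))                           # '\n'
print(repr(capture(end='')))                     # ''
print(repr(capture('a', 'b', end=None)))         # 'a b\n'
print(repr(capture('a', 'b', sep='', end='!')))  # 'ab!'
```

With no positional arguments, only the value of end reaches the stream, which is why a bare print() produces a single newline.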

Note: You may be wondering why the end parameter has a fixed default value rather than whatever makes sense on your operating system.

Well, you don’t have to worry about newline representation across different operating systems when printing, because print() will handle the conversion automatically. Just remember to always use the \n escape sequence in string literals.

This is currently the most portable way of printing a newline character in Python:

    >>> print('line1\nline2\nline3')
    line1
    line2
    line3

If you were to try to forcefully print a Windows-specific newline character on a Linux machine, for example, you’d end up with broken output:

    >>> print('line1\r\nline2\r\nline3')
    line3

On the flip side, when you open a file for reading with open(), you don’t need to care about newline representation either. The function will translate any system-specific newline it encounters into a universal '\n'. At the same time, you have control over how the newlines should be treated both on input and output if you really need that.
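That control comes from the newline parameter of open(). As a rough sketch, the snippet below writes Windows-style line endings to a temporary file, whose name and location are arbitrary, and then reads them back with and without translation:

```python
import os
import tempfile

# Throwaway file path used only for this demonstration.
path = os.path.join(tempfile.mkdtemp(), 'newlines.txt')

# newline='' on output disables translation, so '\r\n' is written as-is.
with open(path, mode='w', newline='') as file_object:
    file_object.write('line1\r\nline2\r\n')

# Default on input: universal newlines, so '\r\n' becomes '\n'.
with open(path) as file_object:
    translated = file_object.read()

# newline='' on input: line endings are returned untranslated.
with open(path, newline='') as file_object:
    untranslated = file_object.read()

print(repr(translated))    # 'line1\nline2\n'
print(repr(untranslated))  # 'line1\r\nline2\r\n'
```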

To disable the newline, you must specify an empty string through the end keyword argument:

    print('Checking file integrity...', end='')
    # (...)
    print('ok')

Even though these are two separate print() calls, which can execute a long time apart, you’ll eventually see only one line. First, it’ll look like this:

Checking file integrity...

However, after the second call to print(), the same line will appear on the screen as:

Checking file integrity...ok

As with sep, you can use end to join individual pieces into a big blob of text with a custom separator. Instead of joining multiple arguments, however, it’ll append text from each function call to the same line:

    print('The first sentence', end='. ')
    print('The second sentence', end='. ')
    print('The last sentence.')

These three instructions will output a single line of text:

The first sentence. The second sentence. The last sentence.

You can mix the two keyword arguments:

    print('Mercury', 'Venus', 'Earth', sep=', ', end=', ')
    print('Mars', 'Jupiter', 'Saturn', sep=', ', end=', ')
    print('Uranus', 'Neptune', 'Pluto', sep=', ')

Not only do you get a single line of text, but all items are separated with a comma:

Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, Neptune, Pluto

There’s nothing to stop you from using the newline character with some extra padding around it:

    print('Printing in a Nutshell', end='\n * ')
    print('Calling Print', end='\n * ')
    print('Separating Multiple Arguments', end='\n * ')
    print('Preventing Line Breaks')

It would print out the following piece of text:

    Printing in a Nutshell
     * Calling Print
     * Separating Multiple Arguments
     * Preventing Line Breaks

As you can see, the end keyword argument will accept arbitrary strings.

Note: Looping over lines in a text file preserves their own newline characters, which combined with the print() function’s default behavior will result in a redundant newline character:

    >>> with open('file.txt') as file_object:
    ...     for line in file_object:
    ...         print(line)
    ...
    Lorem ipsum dolor sit amet, consectetur adipisicing elit, sed do eiusmod

    tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam,

    quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo

There are two newlines after each line of text. You want to strip one of them, as shown earlier in this article, before printing the line:

    print(line.rstrip())

Alternatively, you can keep the newline in the content but suppress the one appended by print() automatically. You’d use the end keyword argument to do that:

    >>> with open('file.txt') as file_object:
    ...     for line in file_object:
    ...         print(line, end='')
    ...
    Lorem ipsum dolor sit amet, consectetur adipisicing elit, sed do eiusmod
    tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam,
    quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo

By ending a line with an empty string, you effectively disable one of the newlines.

You’re getting more acquainted with printing in Python, but there’s still a lot of useful information ahead. In the upcoming subsection, you’ll learn how to intercept and redirect the print() function’s output.

Preventing a line break in Python 2 requires that you append a trailing comma to the expression:

    print 'hello world',

However, that’s not ideal because it also adds an unwanted space, which would translate to end=' ' instead of end='' in Python 3. You can test this with the following code snippet:

    print 'BEFORE'
    print 'hello',
    print 'AFTER'

Notice there’s a space between the words hello and AFTER:

    BEFORE
    hello AFTER

In order to get the expected result, you’d need to use one of the tricks explained later, which is either importing the print() function from __future__ or falling back to the sys module:

    import sys

    print 'BEFORE'
    sys.stdout.write('hello')
    print 'AFTER'

This will print the correct output without extra space:

    BEFORE
    helloAFTER

While using the sys module gives you control over what gets printed to the standard output, the code becomes a little bit more cluttered.

Printing to a File

Believe it or not, print() doesn’t know how to turn messages into text on your screen, and frankly it doesn’t need to. That’s a job for lower-level layers of code, which understand bytes and know how to push them around.

print() is an abstraction over these layers, providing a convenient interface that merely delegates the actual printing to a stream or file-like object. A stream can be any file on your disk, a network socket, or perhaps an in-memory buffer.

In addition to this, there are three standard streams provided by the operating system:

- stdin: standard input
- stdout: standard output
- stderr: standard error

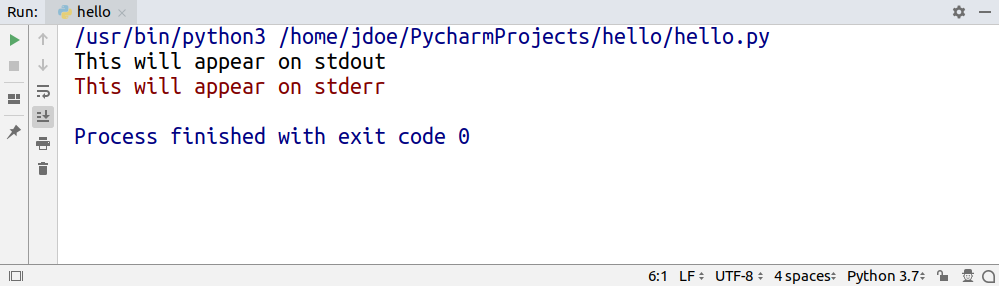

Standard output is what you see in the terminal when you run various command-line programs including your own Python scripts:

    $ cat hello.py
    print('This will appear on stdout')
    $ python hello.py
    This will appear on stdout

Unless otherwise instructed, print() will default to writing to standard output. However, you can tell your operating system to temporarily swap out stdout for a file stream, so that any output ends up in that file rather than the screen:

    $ python hello.py > file.txt
    $ cat file.txt
    This will appear on stdout

That’s called stream redirection.

The standard error is similar to stdout in that it also shows up on the screen. Nonetheless, it’s a separate stream, whose purpose is to log error messages for diagnostics. By redirecting one or both of them, you can keep things clean.
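From inside a Python script, one way to keep the two streams apart is to pass sys.stderr as the file argument of print(). In the sketch below, pick_stream() and report() are hypothetical helpers, and the messages are made up:

```python
import sys


def pick_stream(is_error):
    """Hypothetical helper: choose stderr for errors and stdout otherwise."""
    return sys.stderr if is_error else sys.stdout


def report(message, is_error=False):
    """Hypothetical helper: print the message to the appropriate stream."""
    print(message, file=pick_stream(is_error))


report('Processing finished')                 # goes to standard output
report('Could not open file', is_error=True)  # goes to standard error
```

If you save this as a script and run it with standard output redirected to a file, only the first message should end up in that file, while the second one still shows up in the terminal.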

Note: To redirect stderr, you need to know about file descriptors, also known as file handles.

They’re arbitrary, albeit constant, numbers associated with standard streams. Below, you’ll find a summary of the file descriptors for a family of POSIX-compliant operating systems:

| Stream | File Descriptor |
|---|---|
| stdin | 0 |
| stdout | 1 |
| stderr | 2 |

Knowing those descriptors allows you to redirect one or more streams at a time:

| Command | Description |
|---|---|
| ./program > out.txt | Redirect stdout |
| ./program 2> err.txt | Redirect stderr |
| ./program > out.txt 2> err.txt | Redirect stdout and stderr to separate files |
| ./program &> out_err.txt | Redirect stdout and stderr to the same file |

Note that > is the same as 1>.

Some programs use different coloring to distinguish between messages printed to stdout and stderr:

![The output of a program executed in PyCharm]()

Run Tool Window in PyCharm

While both stdout and stderr are write-only, stdin is read-only. You can think of standard input as your keyboard, but just like with the other two, you can swap out stdin for a file to read data from.

In Python, you can access all standard streams through the built-in sys module:

    >>> import sys
    >>> sys.stdin
    <_io.TextIOWrapper name='<stdin>' mode='r' encoding='UTF-8'>
    >>> sys.stdin.fileno()
    0
    >>> sys.stdout
    <_io.TextIOWrapper name='<stdout>' mode='w' encoding='UTF-8'>
    >>> sys.stdout.fileno()
    1
    >>> sys.stderr
    <_io.TextIOWrapper name='<stderr>' mode='w' encoding='UTF-8'>
    >>> sys.stderr.fileno()
    2

As you can see, these predefined values resemble file-like objects with mode and encoding attributes as well as .read() and .write() methods among many others.

By default, print() is bound to sys.stdout through its file argument, but you can change that. Use that keyword argument to indicate a file that was open in write or append mode, so that messages go straight to it:

    with open('file.txt', mode='w') as file_object:
        print('hello world', file=file_object)

This will make your code immune to stream redirection at the operating system level, which might or might not be desired.
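The file argument doesn’t have to be a real file. As far as print() is concerned, any object with a .write() method that accepts a string will do. The UppercaseWriter class below is a hypothetical example made up for this sketch:

```python
class UppercaseWriter:
    """Hypothetical file-like object: stores whatever print() writes, uppercased."""

    def __init__(self):
        self.chunks = []

    def write(self, text):
        # print() hands over its output as one or more string chunks.
        self.chunks.append(text.upper())
        return len(text)


writer = UppercaseWriter()
print('hello', 'world', file=writer)

captured = ''.join(writer.chunks)
print(repr(captured))  # 'HELLO WORLD\n'
```

Joining the chunks makes the result independent of how many separate .write() calls print() happens to make.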

For more information on working with files in Python, you can check out Reading and Writing Files in Python (Guide).

Note: Don’t try using print() for writing binary data as it’s only well suited for text.

Just call the binary file’s .write() directly:

    with open('file.dat', 'wb') as file_object:
        file_object.write(bytes(4))
        file_object.write(b'\xff')

If you wanted to write raw bytes on the standard output, then this will fail too because sys.stdout is a character stream:

    >>> import sys
    >>> sys.stdout.write(bytes(4))
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
    TypeError: write() argument must be str, not bytes

You must dig deeper to get a handle of the underlying byte stream instead:

    >>> import sys
    >>> num_bytes_written = sys.stdout.buffer.write(b'\x41\x0a')
    A

This prints an uppercase letter A and a newline character, which correspond to decimal values of 65 and 10 in ASCII. However, they’re encoded using hexadecimal notation in the bytes literal.

Note that print() has no control over character encoding. It’s the stream’s responsibility to encode received Unicode strings into bytes correctly. In most cases, you won’t set the encoding yourself, because the default UTF-8 is what you want. If you really need to, perhaps for legacy systems, you can use the encoding argument of open():

    with open('file.txt', mode='w', encoding='iso-8859-1') as file_object:
        print('über naïve café', file=file_object)

Instead of a real file existing somewhere in your file system, you can provide a fake one, which would reside in your computer’s memory. You’ll use this technique later for mocking print() in unit tests:

    >>> import io
    >>> fake_file = io.StringIO()
    >>> print('hello world', file=fake_file)
    >>> fake_file.getvalue()
    'hello world\n'

If you got to this point, then you’re left with only one keyword argument in print(), which you’ll see in the next subsection. It’s probably the least used of them all. Nevertheless, there are times when it’s absolutely necessary.
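Coming back to the point that encoding is the stream’s job rather than print()’s, an in-memory stream can make this visible. The sketch below wraps an io.BytesIO buffer in an io.TextIOWrapper with a chosen encoding; printed_bytes() is a hypothetical helper used only here:

```python
import io

text = 'über naïve café'


def printed_bytes(message, encoding):
    """Hypothetical helper: return the bytes a text stream emits for print(message)."""
    raw = io.BytesIO()  # in-memory byte stream
    stream = io.TextIOWrapper(raw, encoding=encoding, newline='\n')
    print(message, file=stream)
    stream.flush()      # push the buffered text down to the byte stream
    return raw.getvalue()


utf8_bytes = printed_bytes(text, 'utf-8')
latin1_bytes = printed_bytes(text, 'iso-8859-1')

print(utf8_bytes)    # each accented letter takes two bytes
print(latin1_bytes)  # each accented letter takes one byte
```

The same print() call produces different bytes depending solely on how the target stream was configured.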

There’s a special syntax in Python 2 for replacing the default sys.stdout with a custom file in the print statement:

withopen('file.txt',mode='w')asfile_object:print>>file_object,'hello world'Because strings and bytes are represented with the same str type in Python 2, the print statement can handle binary data just fine:

withopen('file.dat',mode='wb')asfile_object:print>>file_object,'\x41\x0a'Although, there’s a problem with character encoding. The open() function in Python 2 lacks the encoding parameter, which would often result in the dreadful UnicodeEncodeError:

>>>>>> withopen('file.txt',mode='w')asfile_object:... unicode_text=u'\xfcber na\xefve caf\xe9'... print>>file_object,unicode_text... Traceback (most recent call last):

File "<stdin>", line 3, in <module>UnicodeEncodeError: 'ascii' codec can't encode character u'\xfc'... Notice how non-Latin characters must be escaped in both Unicode and string literals to avoid a syntax error. Take a look at this example:

    unicode_literal = u'\xfcber na\xefve caf\xe9'
    string_literal = '\xc3\xbcber na\xc3\xafve caf\xc3\xa9'

Alternatively, you could specify source code encoding according to PEP 263 at the top of the file, but that wasn’t the best practice due to portability issues:

    #!/usr/bin/env python2
    # -*- coding: utf-8 -*-

    unescaped_unicode_literal = u'über naïve café'
    unescaped_string_literal = 'über naïve café'

Your best bet is to encode the Unicode string just before printing it. You can do this manually:

    with open('file.txt', mode='w') as file_object:
        unicode_text = u'\xfcber na\xefve caf\xe9'
        encoded_text = unicode_text.encode('utf-8')
        print >>file_object, encoded_text

However, a more convenient option is to use the built-in codecs module:

    import codecs

    with codecs.open('file.txt', 'w', encoding='utf-8') as file_object:
        unicode_text = u'\xfcber na\xefve caf\xe9'
        print >>file_object, unicode_text

It’ll take care of making appropriate conversions when you need to read or write files.

Buffering Print Calls

In the previous subsection, you learned that print() delegates printing to a file-like object such as sys.stdout. Some streams, however, buffer certain I/O operations to enhance performance, which can get in the way. Let’s take a look at an example.

Imagine you were writing a countdown timer, which should append the remaining time to the same line every second:

Your first attempt may look something like this:

    import time

    num_seconds = 3
    for countdown in reversed(range(num_seconds + 1)):
        if countdown > 0:
            print(countdown, end='...')
            time.sleep(1)
        else:
            print('Go!')

As long as the countdown variable is greater than zero, the code keeps appending text without a trailing newline and then goes to sleep for one second. Finally, when the countdown is finished, it prints Go! and terminates the line.

Unexpectedly, instead of counting down every second, the program idles wastefully for three seconds, and then suddenly prints the entire line at once:

![Terminal with buffered output]()

That’s because the operating system buffers subsequent writes to the standard output in this case. You need to know that there are three kinds of streams with respect to buffering:

- Unbuffered

- Line-buffered

- Block-buffered

Unbuffered is self-explanatory, that is, no buffering is taking place, and all writes have immediate effect. A line-buffered stream waits before firing any I/O calls until a line break appears somewhere in the buffer, whereas a block-buffered one simply allows the buffer to fill up to a certain size regardless of its content. Standard output is both line-buffered and block-buffered, depending on which event comes first.

Buffering helps to reduce the number of expensive I/O calls. Think about sending messages over a high-latency network, for example. When you connect to a remote server to execute commands over the SSH protocol, each of your keystrokes may actually produce an individual data packet, which is orders of magnitude bigger than its payload. What an overhead! It would make sense to wait until at least a few characters are typed and then send them together. That’s where buffering steps in.

On the other hand, buffering can sometimes have undesired effects as you just saw with the countdown example. To fix it, you can simply tell print() to forcefully flush the stream without waiting for a newline character in the buffer using its flush flag:

    print(countdown, end='...', flush=True)

That’s all. Your countdown should work as expected now, but don’t take my word for it. Go ahead and test it to see the difference.
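If you’d like to observe the effect of flush without watching a terminal, the following sketch may help. It assumes CPython’s io.TextIOWrapper, which holds short writes in an internal buffer, and it uses an in-memory io.BytesIO object as a stand-in for the device behind the stream. The variable names are made up for this illustration:

```python
import io

# raw collects the bytes that have actually left the text layer, while
# stream is a line-buffered text wrapper sitting on top of it.
raw = io.BytesIO()
stream = io.TextIOWrapper(
    raw, encoding='utf-8', newline='\n', line_buffering=True
)

print(3, end='...', file=stream)              # No newline and no flush
pending = raw.getvalue()                      # Snapshot before any flush

print(2, end='...', file=stream, flush=True)  # Explicit flush
flushed = raw.getvalue()

print('Go!', file=stream)                     # The newline triggers a flush
finished = raw.getvalue()

print(pending, flushed, finished)
```

Under these assumptions, the first snapshot should be empty, because nothing forced the buffered text out. The second one contains both countdown fragments thanks to flush=True, and the third one includes the final line, because a line-buffered stream flushes as soon as it sees a newline.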

Congratulations! At this point, you’ve seen examples of calling print() that cover all of its parameters. You know their purpose and when to use them. Understanding the signature is only the beginning, however. In the upcoming sections, you’ll see why.

There isn’t an easy way to flush the stream in Python 2, because the print statement doesn’t allow for it by itself. You need to get a handle of its lower-level layer, which is the standard output, and call it directly:

    import time
    import sys

    num_seconds = 3
    for countdown in reversed(range(num_seconds + 1)):
        if countdown > 0:
            sys.stdout.write('%s...' % countdown)
            sys.stdout.flush()
            time.sleep(1)
        else:
            print 'Go!'

Alternatively, you could disable buffering of the standard streams either by providing the -u flag to the Python interpreter or by setting up the PYTHONUNBUFFERED environment variable:

    $ python2 -u countdown.py
    $ PYTHONUNBUFFERED=1 python2 countdown.py

Note that print() was backported to Python 2 and made available through the __future__ module. Unfortunately, it doesn’t come with the flush parameter:

    >>> from __future__ import print_function
    >>> help(print)
    Help on built-in function print in module __builtin__:

    print(...)
        print(value, ..., sep=' ', end='\n', file=sys.stdout)

What you’re seeing here is a docstring of the print() function. You can display docstrings of various objects in Python using the built-in help() function.

Printing Custom Data Types

Up until now, you only dealt with built-in data types such as strings and numbers, but you’ll often want to print your own abstract data types. Let’s have a look at different ways of defining them.

For simple objects without any logic, whose purpose is to carry data, you’ll typically take advantage of namedtuple, which is available in the standard library. Named tuples have a neat textual representation out of the box:

    >>> from collections import namedtuple
    >>> Person = namedtuple('Person', 'name age')
    >>> jdoe = Person('John Doe', 42)
    >>> print(jdoe)
    Person(name='John Doe', age=42)

That’s great as long as holding data is enough, but in order to add behaviors to the Person type, you’ll eventually need to define a class. Take a look at this example:

    class Person:
        def __init__(self, name, age):
            self.name, self.age = name, age

If you now create an instance of the Person class and try to print it, you’ll get this bizarre output, which is quite different from the equivalent namedtuple:

    >>> jdoe = Person('John Doe', 42)
    >>> print(jdoe)
    <__main__.Person object at 0x7fcac3fed1d0>

It’s the default representation of objects, which comprises their address in memory, the corresponding class name and a module in which they were defined. You’ll fix that in a bit, but just for the record, as a quick workaround you could combine namedtuple and a custom class through inheritance:

    from collections import namedtuple

    class Person(namedtuple('Person', 'name age')):
        pass

Your Person class has just become a specialized kind of namedtuple with two attributes, which you can customize.

Note: In Python 3, the pass statement can be replaced with the ellipsis (...) literal to indicate a placeholder:

This prevents the interpreter from raising IndentationError due to missing indented block of code.
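As a minimal sketch of the placeholder described in this note, the class body below consists of nothing but the ellipsis literal. It reuses the Person example from above, and the comment only restates what the note says:

```python
from collections import namedtuple

class Person(namedtuple('Person', 'name age')):
    ...  # Placeholder body, which works like pass in this position

jdoe = Person('John Doe', 42)
print(jdoe)
```

The class still inherits its textual representation from the underlying named tuple, so printing an instance gives the same result as before.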

That’s better than a plain namedtuple, because not only do you get printing right for free, but you can also add custom methods and properties to the class. However, it solves one problem while introducing another. Remember that tuples, including named tuples, are immutable in Python, so they can’t change their values once created.

It’s true that designing immutable data types is desirable, but in many cases, you’ll want them to allow for change, so you’re back with regular classes again.

Note: Following other languages and frameworks, Python 3.7 introduced data classes, which you can think of as mutable tuples. This way, you get the best of both worlds:

    >>> from dataclasses import dataclass
    >>> @dataclass
    ... class Person:
    ...     name: str
    ...     age: int
    ...
    ...     def celebrate_birthday(self):
    ...         self.age += 1
    ...
    >>> jdoe = Person('John Doe', 42)
    >>> jdoe.celebrate_birthday()
    >>> print(jdoe)
    Person(name='John Doe', age=43)

The syntax for variable annotations, which is required to specify class fields with their corresponding types, was defined in Python 3.6.

From earlier subsections, you already know that print() implicitly calls the built-in str() function to convert its positional arguments into strings. Indeed, calling str() manually against an instance of the regular Person class yields the same result as printing it:

    >>> jdoe = Person('John Doe', 42)
    >>> str(jdoe)
    '<__main__.Person object at 0x7fcac3fed1d0>'

str(), in turn, looks for one of two magic methods within the class body, which you typically implement. If it doesn’t find one, then it falls back to the ugly default representation. Those magic methods are, in order of search:

- def __str__(self)

- def __repr__(self)

The first one is recommended to return a short, human-readable text, which includes information from the most relevant attributes. After all, you don’t want to expose sensitive data, such as user passwords, when printing objects.

However, the other one should provide complete information about an object, to allow for restoring its state from a string. Ideally, it should return valid Python code, so that you can pass it directly to eval():

    >>> repr(jdoe)
    "Person(name='John Doe', age=42)"
    >>> type(eval(repr(jdoe)))
    <class '__main__.Person'>

Notice the use of another built-in function, repr(), which always tries to call .__repr__() in an object, but falls back to the default representation if it doesn’t find that method.
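The search order can be checked with a short sketch. The Temperature and Opaque classes are hypothetical and exist only for this illustration. The first one defines .__repr__() but not .__str__(), while the second one defines neither:

```python
class Temperature:
    """Hypothetical class that implements only .__repr__()."""

    def __init__(self, degrees):
        self.degrees = degrees

    def __repr__(self):
        return f'Temperature({self.degrees!r})'

class Opaque:
    """Hypothetical class without any custom string representation."""

reading = Temperature(21.5)

# str() finds no .__str__() in the class, so it falls back to .__repr__().
print(str(reading))
print(repr(reading))

# With neither method defined, both functions use the default representation.
print(str(Opaque()))
```

Because of this fallback, implementing .__repr__() alone is often enough to make print() show something useful.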

Note: Even though print() itself uses str() for type casting, some compound data types delegate that call to repr() on their members. This happens to lists and tuples, for example.

Consider this class with both magic methods, which return alternative string representations of the same object:

    class User:
        def __init__(self, login, password):
            self.login = login
            self.password = password

        def __str__(self):
            return self.login

        def __repr__(self):
            return f"User('{self.login}', '{self.password}')"

If you print a single object of the User class, then you won’t see the password, because print(user) will call str(user), which eventually will invoke user.__str__():

    >>> user = User('jdoe', 's3cret')
    >>> print(user)
    jdoe

However, if you put the same user variable inside a list by wrapping it in square brackets, then the password will become clearly visible:

    >>> print([user])
    [User('jdoe', 's3cret')]

That’s because sequences, such as lists and tuples, implement their .__str__() method so that all of their elements are first converted with repr().

Python gives you a lot of freedom when it comes to defining your own data types if none of the built-in ones meet your needs. Some of them, such as named tuples and data classes, offer string representations that look good without requiring any work on your part. Still, for the most flexibility, you’ll have to define a class and override its magic methods described above.

The semantics of .__str__() and .__repr__() didn’t change since Python 2, but you must remember that strings were nothing more than glorified byte arrays back then. To convert your objects into proper Unicode, which was a separate data type, you’d have to provide yet another magic method: .__unicode__().

Here’s an example of the same User class in Python 2:

    class User(object):
        def __init__(self, login, password):
            self.login = login
            self.password = password

        def __unicode__(self):
            return self.login

        def __str__(self):
            return unicode(self).encode('utf-8')

        def __repr__(self):
            user = u"User('%s', '%s')" % (self.login, self.password)
            return user.encode('unicode_escape')

As you can see, this implementation delegates some work to avoid duplication by calling the built-in unicode() function on itself.

Both .__str__() and .__repr__() methods must return strings, so they encode Unicode characters into specific byte representations called character sets. UTF-8 is the most widespread and safest encoding, while unicode_escape is a special constant to express funky characters, such as é, as escape sequences in plain ASCII, such as \xe9.

The print statement is looking for the magic .__str__() method in the class, so the chosen charset must correspond to the one used by the terminal. For example, default encoding in DOS and Windows is CP 852 rather than UTF-8, so running this can result in a UnicodeEncodeError or even garbled output:

    >>> user = User(u'\u043d\u0438\u043a\u0438\u0442\u0430', u's3cret')
    >>> print user
    đŻđŞđ║đŞĐéđ░

However, if you ran the same code on a system with UTF-8 encoding, then you’d get the proper spelling of a popular Russian name:

    >>> user = User(u'\u043d\u0438\u043a\u0438\u0442\u0430', u's3cret')
    >>> print user
    никита

It’s recommended to convert strings to Unicode as early as possible, for example, when you’re reading data from a file, and use it consistently everywhere in your code. At the same time, you should encode Unicode back to the chosen character set right before presenting it to the user.

It seems as if you have more control over string representation of objects in Python 2 because there’s no magic .__unicode__() method in Python 3 anymore. You may be asking yourself if it’s possible to convert an object to its byte string representation rather than a Unicode string in Python 3. It’s possible, with a special .__bytes__() method that does just that:

    >>> class User(object):
    ...     def __init__(self, login, password):
    ...         self.login = login
    ...         self.password = password
    ...
    ...     def __bytes__(self):  # Python 3
    ...         return self.login.encode('utf-8')
    ...
    >>> user = User(u'\u043d\u0438\u043a\u0438\u0442\u0430', u's3cret')
    >>> bytes(user)
    b'\xd0\xbd\xd0\xb8\xd0\xba\xd0\xb8\xd1\x82\xd0\xb0'

Using the built-in bytes() function on an instance delegates the call to its __bytes__() method defined in the corresponding class.

Understanding Python Print

You know how to use print() quite well at this point, but knowing what it is will allow you to use it even more effectively and consciously. After reading this section, you’ll understand how printing in Python has improved over the years.

Print Is a Function in Python 3

You’ve seen that print() is a function in Python 3. More specifically, it’s a built-in function, which means that you don’t need to import it from anywhere:

    >>> print
    <built-in function print>

It’s always available in the global namespace so that you can call it directly, but you can also access it through a module from the standard library:

    >>> import builtins
    >>> builtins.print
    <built-in function print>

This way, you can avoid name collisions with custom functions. Let’s say you wanted to redefine print() so that it doesn’t append a trailing newline. At the same time, you wanted to rename the original function to something like println():

    >>> import builtins
    >>> println = builtins.print
    >>> def print(*args, **kwargs):
    ...     builtins.print(*args, **kwargs, end='')
    ...
    >>> println('hello')
    hello
    >>> print('hello\n')
    hello

Now you have two separate printing functions just like in the Java programming language. You’ll define custom print() functions in the mocking section later as well. Also, note that you wouldn’t be able to overwrite print() in the first place if it wasn’t a function.

On the other hand, print() isn’t a function in the mathematical sense, because it doesn’t return any meaningful value other than the implicit None:

    >>> value = print('hello world')
    hello world
    >>> print(value)
    None

Such functions are, in fact, procedures or subroutines that you call to achieve some kind of side-effect, which ultimately is a change of a global state. In the case of print(), that side-effect is showing a message on the standard output or writing to a file.

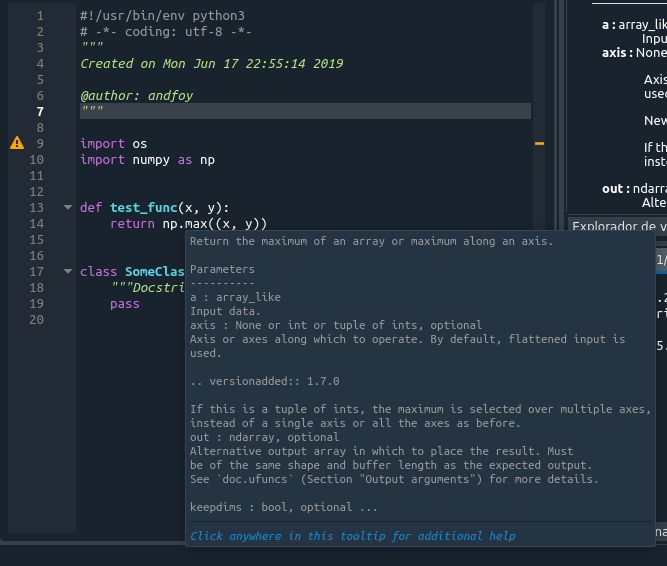

Because print() is a function, it has a well-defined signature with known attributes. You can quickly find its documentation using the editor of your choice, without having to remember some weird syntax for performing a certain task.

Besides, functions are easier to extend. Adding a new feature to a function is as easy as adding another keyword argument, whereas changing the language to support that new feature is much more cumbersome. Think of stream redirection or buffer flushing, for example.

Another benefit of print() being a function is composability. Functions are so-called first-class objects or first-class citizens in Python, which is a fancy way of saying they’re values just like strings or numbers. This way, you can assign a function to a variable, pass it to another function, or even return one from another. print() isn’t different in this regard. For instance, you can take advantage of it for dependency injection:

    def download(url, log=print):
        log(f'Downloading {url}')
        # ...

    def custom_print(*args):
        pass  # Do not print anything

    download('/js/app.js', log=custom_print)

Here, the log parameter lets you inject a callback function, which defaults to print() but can be any callable. In this example, printing is completely disabled by substituting print() with a dummy function that does nothing.
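The same hook can also record messages instead of discarding them, which is one way such a design could help in tests. In this sketch, the download() function is repeated so that the snippet runs on its own, and the messages list is a name made up for illustration:

```python
def download(url, log=print):
    log(f'Downloading {url}')
    # ...

# Any callable that accepts one argument will do, including a bound
# method such as list.append, which collects the messages for inspection.
messages = []
download('/js/app.js', log=messages.append)

print(messages)
```

Nothing appears on the screen while download() runs, yet the message remains available for later checks.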

Note: A dependency is any piece of code required by another bit of code.

Dependency injection is a technique used in code design to make it more testable, reusable, and open for extension. You can achieve it by referring to dependencies indirectly through abstract interfaces and by providing them in a push rather than pull fashion.

There’s a funny explanation of dependency injection circulating on the Internet:

Dependency injection for five-year-olds

When you go and get things out of the refrigerator for yourself, you can cause problems. You might leave the door open, you might get something Mommy or Daddy doesn’t want you to have. You might even be looking for something we don’t even have or which has expired.

What you should be doing is stating a need, “I need something to drink with lunch,” and then we will make sure you have something when you sit down to eat.

— John Munsch, 28 October 2009. (Source)

Composition allows you to combine a few functions into a new one of the same kind. Let’s see this in action by specifying a custom error() function that prints to the standard error stream and prefixes all messages with a given log level:

    >>> from functools import partial
    >>> import sys
    >>> redirect = lambda function, stream: partial(function, file=stream)
    >>> prefix = lambda function, prefix: partial(function, prefix)
    >>> error = prefix(redirect(print, sys.stderr), '[ERROR]')
    >>> error('Something went wrong')
    [ERROR] Something went wrong

This custom function uses partial functions to achieve the desired effect. It’s an advanced concept borrowed from the functional programming paradigm, so you don’t need to go too deep into that topic for now. However, if you’re interested in this topic, I recommend taking a look at the functools module.
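If the lambda-based helpers feel too dense, here’s a rough equivalent written as a regular function. The make_logger() name is hypothetical, and an in-memory io.StringIO buffer stands in for sys.stderr so that you can inspect the result:

```python
import io
from functools import partial

def make_logger(level, stream):
    """Hypothetical factory: a print-like callable with a prefix and a target."""
    # partial() pre-fills the first positional argument and the file keyword.
    return partial(print, f'[{level}]', file=stream)

buffer = io.StringIO()
warn = make_logger('WARNING', buffer)
warn('Disk space is low')

print(buffer.getvalue(), end='')
```

Calling warn() is the same as calling print() with the prefix as the first positional argument and the buffer passed as file.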

Unlike statements, functions are values. That means you can mix them with expressions, in particular, lambda expressions. Instead of defining a full-blown function to replace print() with, you can make an anonymous lambda expression that calls it:

    >>> download('/js/app.js', lambda msg: print('[INFO]', msg))
    [INFO] Downloading /js/app.js

However, because a lambda expression is defined in place, there’s no way of referring to it elsewhere in the code.

Note: In Python, you can’t put statements, such as assignments, conditional statements, loops, and so on, in an anonymous lambda function. It has to be a single expression!

Another kind of expression is a ternary conditional expression:

    >>> user = 'jdoe'
    >>> print('Hi!') if user is None else print(f'Hi, {user}.')
    Hi, jdoe.

Python has both conditional statements and conditional expressions. The latter is evaluated to a single value that can be assigned to a variable or passed to a function. In the example above, you’re interested in the side-effect rather than the value, which evaluates to None, so you simply ignore it.

As you can see, functions allow for an elegant and extensible solution, which is consistent with the rest of the language. In the next subsection, you’ll discover how not having print() as a function caused a lot of headaches.

Print Was a Statement in Python 2

A statement is an instruction that may evoke a side-effect when executed but never evaluates to a value. In other words, you wouldn’t be able to print a statement or assign it to a variable like this:

    result = print 'hello world'

That’s a syntax error in Python 2.

Here are a few more examples of statements in Python:

- assignment: =

- conditional: if

- loop: while

- assertion: assert

Note: Python 3.8 brings a controversial walrus operator (:=), which is an assignment expression. With it, you can evaluate an expression and assign the result to a variable at the same time, even within another expression!

Take a look at this example, which calls an expensive function once and then reuses the result for further computation:

    # Python 3.8+
    values = [y := f(x), y**2, y**3]

This is useful for simplifying the code without losing its efficiency. Typically, performant code tends to be more verbose:

    y = f(x)
    values = [y, y**2, y**3]

This new piece of syntax proved highly controversial. An abundance of negative comments and heated debates eventually led Guido van Rossum to step down from the Benevolent Dictator For Life or BDFL position.

Statements usually consist of reserved keywords such as if, for, or print that have fixed meaning in the language. You can’t use them to name your variables or other symbols. That’s why redefining or mocking the print statement isn’t possible in Python 2. You’re stuck with what you get.

Furthermore, you can’t print from anonymous functions, because statements aren’t accepted in lambda expressions:

    >>> lambda: print 'hello world'
      File "<stdin>", line 1
        lambda: print 'hello world'
                    ^
    SyntaxError: invalid syntax

The syntax of the print statement is ambiguous. Sometimes you can add parentheses around the message, and they’re completely optional:

    >>> print 'Please wait...'
    Please wait...
    >>> print('Please wait...')
    Please wait...

At other times they change how the message is printed:

    >>> print 'My name is', 'John'
    My name is John
    >>> print('My name is', 'John')
    ('My name is', 'John')

String concatenation can raise a TypeError due to incompatible types, which you have to handle manually, for example:

    >>> values = ['jdoe', 'is', 42, 'years old']
    >>> print ' '.join(map(str, values))
    jdoe is 42 years old

Compare this with similar code in Python 3, which leverages sequence unpacking:

    >>> values = ['jdoe', 'is', 42, 'years old']
    >>> print(*values)  # Python 3
    jdoe is 42 years old

There aren’t any keyword arguments for common tasks such as flushing the buffer or stream redirection. You need to remember the quirky syntax instead. Even the built-in help() function isn’t that helpful with regards to the print statement:

    >>> help(print)
      File "<stdin>", line 1
        help(print)
                 ^
    SyntaxError: invalid syntax

Trailing newline removal doesn’t work quite right, because it adds an unwanted space. You can’t compose multiple print statements together, and, on top of that, you have to be extra diligent about character encoding.

The list of problems goes on and on. If you’re curious, you can jump back to the previous section and look for more detailed explanations of the syntax in Python 2.

However, you can mitigate some of those problems with a much simpler approach. It turns out the print() function was backported to ease the migration to Python 3. You can import it from a special __future__ module, which exposes a selection of language features released in later Python versions.

Note: You may import future functions as well as baked-in language constructs such as the with statement.

To find out exactly what features are available to you, inspect the module:

    >>> import __future__
    >>> __future__.all_feature_names
    ['nested_scopes', 'generators', 'division', 'absolute_import', 'with_statement', 'print_function', 'unicode_literals']

You could also call dir(__future__), but that would show a lot of uninteresting internal details of the module.

To enable the print() function in Python 2, you need to add this import statement at the beginning of your source code:

    from __future__ import print_function

From now on, the print statement is no longer available, but you have the print() function at your disposal. Note that it isn’t the same function as the one in Python 3, because it’s missing the flush keyword argument, but the rest of the arguments are the same.

Other than that, it doesn’t spare you from managing character encodings properly.

Here’s an example of calling the print() function in Python 2:

    >>> from __future__ import print_function
    >>> import sys
    >>> print('I am a function in Python', sys.version_info.major)
    I am a function in Python 2

You now have an idea of how printing in Python evolved and, most importantly, understand why these backward-incompatible changes were necessary. Knowing this will surely help you become a better Python programmer.

Printing With Style

If you thought that printing was only about lighting pixels up on the screen, then technically you’d be right. However, there are ways to make it look cool. In this section, you’ll find out how to format complex data structures, add colors and other decorations, build interfaces, use animation, and even play sounds with text!

Pretty-Printing Nested Data Structures

Computer languages allow you to represent data as well as executable code in a structured way. Unlike Python, however, most languages give you a lot of freedom in using whitespace and formatting. This can be useful, for example in compression, but it sometimes leads to less readable code.

Pretty-printing is about making a piece of data or code look more appealing to the human eye so that it can be understood more easily. This is done by indenting certain lines, inserting newlines, reordering elements, and so forth.

Python comes with the pprint module in its standard library, which will help you in pretty-printing large data structures that don’t fit on a single line. Because it prints in a more human-friendly way, many popular REPL tools, including JupyterLab and IPython, use it by default in place of the regular print() function.

Note: To toggle pretty printing in IPython, issue the following command:

    In [1]: %pprint
    Pretty printing has been turned OFF

    In [2]: %pprint
    Pretty printing has been turned ON

This is an example of Magic in IPython. There are a lot of built-in commands that start with a percent sign (%), but you can find more on PyPI, or even create your own.

If you don’t care about not having access to the original print() function, then you can replace it with pprint() in your code using import renaming:

    >>> from pprint import pprint as print
    >>> print
    <function pprint at 0x7f7a775a3510>

Personally, I like to have both functions at my fingertips, so I’d rather use something like pp as a short alias:

    from pprint import pprint as pp

At first glance, there’s hardly any difference between the two functions, and in some cases there’s virtually none:

    >>> print(42)
    42
    >>> pp(42)
    42
    >>> print('hello')
    hello
    >>> pp('hello')
    'hello'  # Did you spot the difference?

That’s because pprint() calls repr() instead of the usual str() for type casting, so that you may evaluate its output as Python code if you want to. The differences become apparent as you start feeding it more complex data structures:

    >>> data = {'powers': [x**10 for x in range(10)]}
    >>> pp(data)
    {'powers': [0,
                1,
                1024,
                59049,
                1048576,
                9765625,
                60466176,
                282475249,
                1073741824,
                3486784401]}

The function applies reasonable formatting to improve readability, but you can customize it even further with a couple of parameters. For example, you may limit a deeply nested hierarchy by showing an ellipsis below a given level:

    >>> cities = {'USA': {'Texas': {'Dallas': ['Irving']}}}
    >>> pp(cities, depth=3)
    {'USA': {'Texas': {'Dallas': [...]}}}

The ordinary print() also uses ellipses but for displaying recursive data structures, which form a cycle, to avoid stack overflow error:

    >>> items = [1, 2, 3]
    >>> items.append(items)
    >>> print(items)
    [1, 2, 3, [...]]

However, pprint() is more explicit about it by including the unique identity of a self-referencing object:

    >>> pp(items)
    [1, 2, 3, <Recursion on list with id=140635757287688>]
    >>> id(items)
    140635757287688

The last element in the list is the same object as the entire list.

Note: Recursive or very large data sets can be dealt with using the reprlib module as well:

    >>> import reprlib
    >>> reprlib.repr([x**10 for x in range(10)])
    '[0, 1, 1024, 59049, 1048576, 9765625, ...]'

This module supports most of the built-in types and is used by the Python debugger.

pprint() automatically sorts dictionary keys for you before printing, which allows for consistent comparison. When you’re comparing strings, you often don’t care about a particular order of serialized attributes. Anyway, it’s always best to compare actual dictionaries before serialization.
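To illustrate the point about comparison, this sketch formats two equal dictionaries whose keys were inserted in a different order. It relies on pformat(), the string-returning sibling of pprint(), and on the sort_dicts parameter, which assumes Python 3.8 or later. The variable names are made up for this example:

```python
from pprint import pformat

first = {'username': 'jdoe', 'password': 's3cret'}
second = {'password': 's3cret', 'username': 'jdoe'}

# The dictionaries are equal, but their plain string forms differ.
print(first == second)
print(str(first) == str(second))

# Sorting the keys makes the formatted strings comparable.
print(pformat(first) == pformat(second))

# Python 3.8+ lets you keep the insertion order instead.
print(pformat(first, sort_dicts=False))
```

Comparing the dictionaries directly remains the most reliable option, while the sorted text form is mostly handy for logs and diffs.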

Dictionaries often represent JSON data, which is widely used on the Internet. To correctly serialize a dictionary into a valid JSON-formatted string, you can take advantage of the json module. It too has pretty-printing capabilities:

    >>> import json
    >>> data = {'username': 'jdoe', 'password': 's3cret'}
    >>> ugly = json.dumps(data)
    >>> pretty = json.dumps(data, indent=4, sort_keys=True)
    >>> print(ugly)
    {"username": "jdoe", "password": "s3cret"}
    >>> print(pretty)
    {
        "password": "s3cret",
        "username": "jdoe"
    }

Notice, however, that you need to handle printing yourself, because it’s not something you’d typically want to do. Similarly, the pprint module has an additional pformat() function that returns a string, in case you had to do something other than printing it.

Surprisingly, the signature of pprint() is nothing like the print() function’s one. You can’t even pass more than one positional argument, which shows how much it focuses on printing data structures.

Adding Colors With ANSI Escape Sequences

As personal computers got more sophisticated, they had better graphics and could display more colors. However, different vendors had their own idea about the API design for controlling it. That changed a few decades ago when people at the American National Standards Institute decided to unify it by defining ANSI escape codes.

Most of today’s terminal emulators support this standard to some degree. Until recently, the Windows operating system was a notable exception. Therefore, if you want the best portability, use the colorama library in Python. It translates ANSI codes to their appropriate counterparts in Windows while keeping them intact in other operating systems.

To check if your terminal understands a subset of the ANSI escape sequences, for example, related to colors, you can try using the following command:

My default terminal on Linux says it can display 256 distinct colors, while xterm gives me only 8. The command would return a negative number if colors were unsupported.

ANSI escape sequences are like a markup language for the terminal. In HTML you work with tags, such as <b> or <i>, to change how elements look in the document. These tags are mixed with your content, but they’re not visible themselves. Similarly, escape codes won’t show up in the terminal as long as it recognizes them. Otherwise, they’ll appear in the literal form as if you were viewing the source of a website.

As its name implies, a sequence must begin with the non-printable Esc character, whose ASCII value is 27, sometimes denoted as 0x1b in hexadecimal or 033 in octal. You may use Python number literals to quickly verify it’s indeed the same number:

    >>> 27 == 0x1b == 0o33
    True

Additionally, you can obtain it with the \e escape sequence in the shell:

The most common ANSI escape sequences take the following form:

| Element | Description | Example |
|---|---|---|
| Esc | non-printable escape character | \033 |
| [ | opening square bracket | [ |
| numeric code | one or more numbers separated with ; | 0 |
| character code | uppercase or lowercase letter | m |

The numeric code can be one or more numbers separated with a semicolon, while the character code is just one letter. Their specific meaning is defined by the ANSI standard. For example, to reset all formatting, you would type one of the following commands, which use the code zero and the letter m:

    $ echo -e "\e[0m"
    $ echo -e "\x1b[0m"
    $ echo -e "\033[0m"

At the other end of the spectrum, you have compound code values. To set foreground and background with RGB channels, given that your terminal supports 24-bit depth, you could provide multiple numbers:

    $ echo -e "\e[38;2;0;0;0m\e[48;2;255;255;255mBlack on white\e[0m"

It’s not just text color that you can set with the ANSI escape codes. You can, for example, clear and scroll the terminal window, change its background, move the cursor around, make the text blink or decorate it with an underline.

In Python, you’d probably write a helper function to allow for wrapping arbitrary codes into a sequence:

    >>> def esc(code):
    ...     return f'\033[{code}m'
    ...
    >>> print(esc('31;1;4') + 'really' + esc(0) + ' important')

This would make the word really appear in red, bold, and underlined font:

![Text formatted with ANSI escape codes]()
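The esc() helper always ends its sequence with the letter m, which covers text styling. Other actions mentioned earlier, such as clearing the screen or moving the cursor, use a different final letter. Here’s a minimal sketch of how that might look. The helper names (csi, clear_screen, move_cursor, underline) are made up for illustration, and how well each code works depends on your terminal emulator:

```python
ESC = '\033'  # The Esc character, ASCII 27


def csi(code, letter):
    """Build a sequence of the form Esc [ <numeric code> <letter>."""
    return f'{ESC}[{code}{letter}'


def move_cursor(row, column):
    # Rows and columns are 1-based in this sequence
    return csi(f'{row};{column}', 'H')


def clear_screen():
    # 2J erases the whole display, then the cursor goes to the top-left
    return csi(2, 'J') + move_cursor(1, 1)


def underline(text):
    # 4m turns underlining on, 0m resets all formatting
    return csi(4, 'm') + text + csi(0, 'm')
```

On a terminal that supports these codes, print(move_cursor(5, 10) + underline('hello')) should place underlined text at row 5, column 10.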

However, there are higher-level abstractions over ANSI escape codes, such as the mentioned colorama library, as well as tools for building user interfaces in the console.
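Because the common sequences follow the regular pattern from the table above (Esc, an opening bracket, numbers, then one letter), a short regular expression can recognize them. That can be handy when you want to write the same text to a log file or measure its visible length. The sketch below uses a hypothetical strip_ansi() helper and only covers that common form, not every escape sequence a terminal may understand:

```python
import re

# Esc, "[", optional numbers separated by ";", then a single letter
ANSI_SEQUENCE = re.compile(r'\x1b\[[0-9;]*[A-Za-z]')


def strip_ansi(text):
    """Return text with the common ANSI escape sequences removed."""
    return ANSI_SEQUENCE.sub('', text)


styled = '\033[31;1;4mreally\033[0m important'
plain = strip_ansi(styled)

print(repr(plain))              # 'really important'
print(len(styled), len(plain))  # The styled string is longer than it looks
```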

Building Console User Interfaces

While playing with ANSI escape codes is undeniably a ton of fun, in the real world you’d rather have more abstract building blocks to put together a user interface. There are a few libraries that provide such a high level of control over the terminal, but curses seems to be the most popular choice.

Note: To use the curses library in Windows, you need to install a third-party package:

C:\> pip install windows-curses

That’s because curses isn’t available in the standard library of the Python distribution for Windows.

Primarily, it allows you to think in terms of independent graphical widgets instead of a blob of text. Besides, you get a lot of freedom in expressing your inner artist, because it’s really like painting a blank canvas. The library hides the complexities of having to deal with different terminals. Other than that, it has great support for keyboard events, which might be useful for writing video games.

How about making a retro snake game? Let’s create a Python snake simulator:

![The retro snake game built with curses library]()

First, you need to import the curses module. Since it modifies the state of a running terminal, it’s important to handle errors and gracefully restore the previous state. You can do this manually, but the library comes with a convenient wrapper for your main function:

    import curses

    def main(screen):
        pass

    if __name__ == '__main__':
        curses.wrapper(main)

Note, the function must accept a reference to the screen object, also known as stdscr, that you’ll use later for additional setup.
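For comparison, the manual route mentioned above might look roughly like the sketch below. The run() function is a hypothetical name, and this is a simplified approximation of what the wrapper does for you rather than its actual implementation. The real wrapper takes care of a bit more, such as trying to initialize colors:

```python
import curses


def run(main):
    """Rough manual equivalent of curses.wrapper(main)."""
    screen = curses.initscr()  # Switch the terminal into curses mode
    try:
        curses.noecho()        # Don't echo pressed keys
        curses.cbreak()        # React to keys without waiting for Enter
        screen.keypad(True)    # Translate special keys into curses.KEY_* codes
        return main(screen)
    finally:
        # Undo the setup even if main() raised an exception
        screen.keypad(False)
        curses.nocbreak()
        curses.echo()
        curses.endwin()
```

The try and finally pair is the important part. Without it, an exception in your code could leave the terminal in a state where typed characters are no longer echoed.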

If you run this program now, you won’t see any effects, because it terminates immediately. However, you can add a small delay to have a sneak peek:

    import time, curses

    def main(screen):
        time.sleep(1)

    if __name__ == '__main__':
        curses.wrapper(main)

This time the screen went completely blank for a second, but the cursor was still blinking. To hide it, just call one of the configuration functions defined in the module:

    import time, curses

    def main(screen):
        curses.curs_set(0)  # Hide the cursor
        time.sleep(1)

    if __name__ == '__main__':
        curses.wrapper(main)

Let’s define the snake as a list of points in screen coordinates:

    snake = [(0, i) for i in reversed(range(20))]

The head of the snake is always the first element in the list, whereas the tail is the last one. The initial shape of the snake is horizontal, starting from the top-left corner of the screen and facing to the right. While its y-coordinate stays at zero, its x-coordinate decreases from head to tail.
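To convince yourself of that layout, you could inspect the list in a plain Python session, without curses. This is only a quick sanity check of the description above:

```python
snake = [(0, i) for i in reversed(range(20))]

head, tail = snake[0], snake[-1]

print(head)        # (0, 19): row 0, column 19
print(tail)        # (0, 0): the top-left corner
print(len(snake))  # 20 segments in total
```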

To draw the snake, you’ll start with the head and then follow with the remaining segments. Each segment carries (y, x) coordinates, so you can unpack them:

    # Draw the snake
    screen.addstr(*snake[0], '@')
    for segment in snake[1:]:
        screen.addstr(*segment, '*')

Again, if you run this code now, it won’t display anything, because you must explicitly refresh the screen afterward:

    import time, curses

    def main(screen):
        curses.curs_set(0)  # Hide the cursor

        snake = [(0, i) for i in reversed(range(20))]

        # Draw the snake
        screen.addstr(*snake[0], '@')
        for segment in snake[1:]:
            screen.addstr(*segment, '*')

        screen.refresh()
        time.sleep(1)

    if __name__ == '__main__':
        curses.wrapper(main)

You want to move the snake in one of four directions, which can be defined as vectors. Eventually, the direction will change in response to an arrow keystroke, so you may hook it up to the library’s key codes:

    directions = {
        curses.KEY_UP: (-1, 0),
        curses.KEY_DOWN: (1, 0),
        curses.KEY_LEFT: (0, -1),
        curses.KEY_RIGHT: (0, 1),
    }

    direction = directions[curses.KEY_RIGHT]

How does a snake move? It turns out that only its head really moves to a new location, while all other segments shift towards it. In each step, almost all segments remain the same, except for the head and the tail. Assuming the snake isn’t growing, you can remove the tail and insert a new head at the beginning of the list:

    # Move the snake
    snake.pop()
    snake.insert(0, tuple(map(sum, zip(snake[0], direction))))

To get the new coordinates of the head, you need to add the direction vector to it. However, adding tuples in Python results in a bigger tuple instead of the algebraic sum of the corresponding vector components. One way to fix this is by using the built-in zip(), sum(), and map() functions.
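The difference is easier to see side by side. In the sketch below, add_vectors() is a hypothetical helper name wrapping the same expression used in the snippet above:

```python
head = (0, 19)
direction = (0, 1)  # One step to the right, as (dy, dx)

# The + operator concatenates tuples instead of adding them
print(head + direction)  # (0, 19, 0, 1)


def add_vectors(a, b):
    """Add two equally sized tuples component by component."""
    return tuple(map(sum, zip(a, b)))


print(add_vectors(head, direction))  # (0, 20)

# An equivalent spelling with a generator expression
new_head = tuple(x + y for x, y in zip(head, direction))
```

Here zip() pairs up the corresponding coordinates, and sum() adds each pair, so the result has the same length as the inputs.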

The direction will change on a keystroke, so you need to call .getch() to obtain the pressed key code. However, if the pressed key doesn’t correspond to the arrow keys defined earlier as dictionary keys, the direction won’t change:

    # Change direction on arrow keystroke
    direction = directions.get(screen.getch(), direction)

By default, however, .getch() is a blocking call that would prevent the snake from moving unless there was a keystroke. Therefore, you need to make the call non-blocking by adding yet another configuration:

    def main(screen):
        curses.curs_set(0)  # Hide the cursor
        screen.nodelay(True)  # Don't block I/O calls
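In no-delay mode, .getch() returns -1 when no key is waiting, and that value isn’t a key in the directions dictionary, so the lookup falls back to the current direction. The sketch below isolates that logic in a hypothetical next_direction() function, which you can try without starting curses:

```python
import curses

directions = {
    curses.KEY_UP: (-1, 0),
    curses.KEY_DOWN: (1, 0),
    curses.KEY_LEFT: (0, -1),
    curses.KEY_RIGHT: (0, 1),
}

NO_KEY = -1  # What .getch() returns in no-delay mode when nothing was pressed


def next_direction(key, current):
    """Return the direction for a key code, or keep the current one."""
    return directions.get(key, current)


heading_right = directions[curses.KEY_RIGHT]

print(next_direction(NO_KEY, heading_right))         # (0, 1): unchanged
print(next_direction(ord('x'), heading_right))       # (0, 1): unchanged
print(next_direction(curses.KEY_UP, heading_right))  # (-1, 0): turn up
```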

You’re almost done, but there’s just one last thing left. If you now loop this code, the snake will appear to be growing instead of moving. That’s because you have to erase the screen explicitly before each iteration.

Finally, this is all you need to play the snake game in Python:

    import time, curses

    def main(screen):
        curses.curs_set(0)  # Hide the cursor
        screen.nodelay(True)  # Don't block I/O calls

        directions = {
            curses.KEY_UP: (-1, 0),
            curses.KEY_DOWN: (1, 0),
            curses.KEY_LEFT: (0, -1),
            curses.KEY_RIGHT: (0, 1),
        }

        direction = directions[curses.KEY_RIGHT]
        snake = [(0, i) for i in reversed(range(20))]

        while True:
            screen.erase()

            # Draw the snake
            screen.addstr(*snake[0], '@')
            for segment in snake[1:]:
                screen.addstr(*segment, '*')

            # Move the snake
            snake.pop()
            snake.insert(0, tuple(map(sum, zip(snake[0], direction))))

            # Change direction on arrow keystroke
            direction = directions.get(screen.getch(), direction)

            screen.refresh()
            time.sleep(0.1)

    if __name__ == '__main__':
        curses.wrapper(main)

This is merely scratching the surface of the possibilities that the curses module opens up. You may use it for game development like this or more business-oriented applications.
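One thing the game leaves open is what happens at the edge of the screen. Asking curses to draw outside the window typically raises curses.error, so the program would stop once the snake’s head leaves the visible area. A possible remedy, sketched here with a hypothetical wrap() helper, is to wrap the coordinates around so the snake reappears on the opposite side:

```python
def wrap(point, height, width):
    """Wrap a (y, x) point around the edges of a height-by-width screen."""
    y, x = point
    return (y % height, x % width)


# A hypothetical 24x80 terminal; in the game you'd ask screen.getmaxyx()
print(wrap((0, 80), 24, 80))  # (0, 0): past the right edge
print(wrap((-1, 5), 24, 80))  # (23, 5): above the top edge
print(wrap((3, 7), 24, 80))   # (3, 7): already inside, so unchanged
```

In the game loop, you could pass the new head through it, for example wrap(new_head, *screen.getmaxyx()). Writing a character into the very last cell of the window can also raise an error in curses, so you may prefer to treat the screen as one column narrower than reported.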

Living It Up With Cool Animations

Not only can animations make the user interface more appealing to the eye, but they also improve the overall user experience. When you provide early feedback to the user, for example, they’ll know if your program’s still working or if it’s time to kill it.

To animate text in the terminal, you have to be able to freely move the cursor around. You can do this with one of the tools mentioned previously, that is ANSI escape codes or the curses library. However, I’d like to show you an even simpler way.

If the animation can be constrained to a single line of text, then you might be interested in two special escape character sequences:

- Carriage return: \r
- Backspace: \b

The first one moves the cursor to the beginning of the line, whereas the second one moves it only one character to the left. They both work in a non-destructive way without overwriting text that’s already been written.

Let’s take a look at a few examples.

You’ll often want to display some kind of a spinning wheel to indicate a work in progress without knowing exactly how much time’s left to finish:

![Indefinite animation in the terminal]()

Many command line tools use this trick while downloading data over the network. You can make a really simple stop motion animation from a sequence of characters that will cycle in a round-robin fashion:

    from itertools import cycle
    from time import sleep

    for frame in cycle(r'-\|/-\|/'):
        print('\r', frame, sep='', end='', flush=True)
        sleep(0.2)

The loop gets the next character to print, then moves the cursor to the beginning of the line, and overwrites whatever there was before without adding a newline. You don’t want extra space between positional arguments, so the separator argument must be an empty string. Also, notice the use of Python’s raw strings due to backslash characters present in the literal.
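The same effect can be achieved with the backspace character. The sketch below is a variation under a few assumptions: the spin() function and its parameters are hypothetical, the animation stops after a fixed number of frames instead of running forever, and the last frame is wiped with a space so the line stays clean afterward:

```python
from itertools import cycle, islice
from time import sleep


def spin(frames=8, delay=0.2, file=None):
    """Show a short spinner, then erase it so the line stays clean."""
    for frame in islice(cycle(r'-\|/'), frames):
        # Draw the frame, then step back over it with a backspace
        print(frame, '\b', sep='', end='', flush=True, file=file)
        sleep(delay)
    # Overwrite the last frame with a space and step back again
    print(' \b', end='', flush=True, file=file)
```

Because \b only moves the cursor, the frame stays visible until the next character is written on top of it.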

When you know the remaining time or task completion percentage, then you’re able to show an animated progress bar:

![Progress bar animation in the terminal]()

First, you need to calculate how many hashtags to display and how many blank spaces to insert. Next, you erase the line and build the bar from scratch:

    from time import sleep

    def progress(percent=0, width=30):
        left = width * percent // 100
        right = width - left
        print('\r[', '#' * left, ' ' * right, ']',
              f' {percent:.0f}%',
              sep='', end='', flush=True)

    for i in range(101):
        progress(i)
        sleep(0.1)

As before, each request for update repaints the entire line.

Note: There’s a feature-rich progressbar2 library, along with a few other similar tools, that can show progress in a much more comprehensive way.
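If you stick with a hand-rolled bar, it may help to separate building the text from printing it, which makes the logic easy to check on its own. The sketch below uses a hypothetical render_bar() function. Clamping the percentage and converting the result to an integer are additions that aren’t part of the version above:

```python
def render_bar(percent, width=30):
    """Return the text of a progress bar without printing it."""
    percent = max(0, min(100, percent))   # Clamp to the 0-100 range
    left = int(width * percent // 100)    # int() keeps floats from breaking '#' * left
    right = width - left
    return '[' + '#' * left + ' ' * right + ']' + f' {percent:.0f}%'


print(render_bar(50, width=10))   # [#####     ] 50%
print(render_bar(150, width=10))  # [##########] 100%
```

To animate it, you could print the result with a leading carriage return, for example print('\r' + render_bar(i), end='', flush=True).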

Making Sounds With Print

If you’re old enough to remember computers with a PC speaker, then you must also remember their distinctive beep sound, often used to indicate hardware problems. They could barely make any more noises than that, yet video games seemed so much better with it.

Today you can still take advantage of this small loudspeaker, but chances are your laptop didn’t come with one. In such a case, you can enable terminal bell emulation in your shell, so that a system warning sound is played instead.

Go ahead and type this command to see if your terminal can play a sound:

    $ echo -e "\a"

This would normally print text, but the -e flag enables the interpretation of backslash escapes. As you can see, there’s a dedicated escape sequence \a, which stands for “alert”, that outputs a special bell character. Some terminals make a sound whenever they see it.

Similarly, you can print this character in Python. Perhaps in a loop to form some kind of melody. While it’s only a single note, you can still vary the length of pauses between consecutive instances. That seems like a perfect toy for Morse code playback!

The rules are the following:

- Letters are encoded with a sequence of dot (·) and dash (–) symbols.

- A dot is one unit of time.

- A dash is three units of time.

- Individual symbols in a letter are spaced one unit of time apart.

- Symbols of two adjacent letters are spaced three units of time apart.

- Symbols of two adjacent words are spaced seven units of time apart.

According to those rules, you could be “printing” an SOS signal indefinitely in the following way:

    while True:
        dot()
        symbol_space()
        dot()
        symbol_space()
        dot()
        letter_space()
        dash()
        symbol_space()
        dash()
        symbol_space()
        dash()
        letter_space()
        dot()
        symbol_space()
        dot()
        symbol_space()
        dot()
        word_space()

In Python, you can implement it in merely ten lines of code:

    from time import sleep

    speed = 0.1

    def signal(duration, symbol):
        sleep(duration)
        print(symbol, end='', flush=True)

    dot = lambda: signal(speed, '·\a')
    dash = lambda: signal(3*speed, '−\a')
    symbol_space = lambda: signal(speed, '')
    letter_space = lambda: signal(3*speed, '')
    word_space = lambda: signal(7*speed, ' ')

Maybe you could even take it one step further and make a command line tool for translating text into Morse code? Either way, I hope you’re having fun with this!
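As a possible starting point for such a tool, here’s a sketch of the translation step only. The to_morse() function is a hypothetical name, the table covers just the letters A to Z, and the output uses plain ASCII dots and hyphens with a slash between words, which is a common convention rather than anything defined in this tutorial:

```python
MORSE = {
    'A': '.-',   'B': '-...', 'C': '-.-.', 'D': '-..',  'E': '.',
    'F': '..-.', 'G': '--.',  'H': '....', 'I': '..',   'J': '.---',
    'K': '-.-',  'L': '.-..', 'M': '--',   'N': '-.',   'O': '---',
    'P': '.--.', 'Q': '--.-', 'R': '.-.',  'S': '...',  'T': '-',
    'U': '..-',  'V': '...-', 'W': '.--',  'X': '-..-', 'Y': '-.--',
    'Z': '--..',
}


def to_morse(text):
    """Translate letters to Morse code; other characters are skipped."""
    words = []
    for word in text.upper().split():
        letters = [MORSE[char] for char in word if char in MORSE]
        words.append(' '.join(letters))
    return ' / '.join(words)


print(to_morse('SOS'))  # ... --- ...
```

From there, you could walk through the resulting string and call dot(), dash(), and the spacing functions for each symbol to play it back.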

Mocking Python Print in Unit Tests

Nowadays, it’s expected that you ship code that meets high quality standards. If you aspire to become a professional, you must learn how to test your code.

Software testing is especially important in dynamically typed languages, such as Python, which don’t have a compiler to warn you about obvious mistakes. Defects can make their way to the production environment and remain dormant for a long time, until that one day when a branch of code finally gets executed.

Sure, you have linters, type checkers, and other tools for static code analysis to assist you. But they won’t tell you whether your program does what it’s supposed to do on the business level.

So, should you be testing print()? No. After all, it’s a built-in function that must have already gone through a comprehensive suite of tests. What you want to test, though, is whether your code is calling print() at the right time with the expected parameters. That’s known as a behavior.

You can test behaviors by mocking real objects or functions. In this case, you want to mock print() to record and verify its invocations.
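To give you a rough idea of what recording and verifying invocations means, here’s a small sketch that relies on unittest.mock from the standard library. The greet() function is a hypothetical piece of code under test, and this is only one of several ways to set up such a check:

```python
from unittest.mock import patch


def greet(name):
    # Hypothetical code under test
    print(f'Hello, {name}!')


# Temporarily replace the built-in print() with a mock object
with patch('builtins.print') as mock_print:
    greet('John')

# The mock recorded the call instead of writing to the screen
mock_print.assert_called_once_with('Hello, John!')
```

If greet() had called print() with a different message, or not at all, the final line would raise an AssertionError.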

Note: You might have heard the terms dummy, fake, stub, spy, and mock used interchangeably. Some people make a distinction between them, while others don’t.

Martin Fowler explains their differences in a short glossary and collectively calls them test doubles.

There are two ways to mock in Python. First, you can take the traditional path of statically typed languages by employing dependency injection. This may sometimes require you to change the code under test, which isn’t always possible if the code is defined in an external library: