When you’re first learning a new programming language, a lot of your time and effort go into understanding the syntax, code style, and built-in tooling. This is just as true for Python as it is for any other language. Once you gain enough familiarity to be comfortable with the ins and outs of Python, you can start to invest time into building a Python environment that will foster your productivity.

Your shell is more than a prebuilt program provided to you as-is. It’s a framework on which you can build an ecosystem. This ecosystem will come to fit your needs so that you can spend less time fiddling and more time thinking about the next big project you’re working on.

Although no two developers have the same setup, there are a number of choices everyone faces when cultivating their Python environment. It’s important to understand each of these decisions and the options available to you!

By the end of this article, you’ll be able to answer questions like:

- What shell should I use? What terminal should I use?

- What version(s) of Python can I use?

- How do I manage dependencies for different projects?

- How can I make my tools do some of the work for me?

Once you’ve answered these questions for yourself, you can embark on the journey of creating a Python environment to call your very own. Let’s get started!

Shells

When you use a command-line interface (CLI), you execute commands and see their output. A shell is a program that provides this (usually text-based) interface to you. Shells often provide their own programming language that you can use to manipulate files, install software, and so on.

There are more unique shells than could be reasonably listed here, so you’ll see a few prominent ones. Others differ in syntax or enhanced features, but they generally provide the same core functionality.

Unix Shells

Unix is a family of operating systems first developed in the early days of computing. Unix’s popularity has lasted through today, heavily inspiring Linux and macOS. The first shells were developed for use with Unix and Unix-like operating systems.

Bourne Shell (sh)

The Bourne shell—developed by Stephen Bourne for Bell Labs in 1979—was one of the first to incorporate the idea of environment variables, conditionals, and loops. It has provided a strong basis for many other shells in use today and is still available on most systems at /bin/sh.

Bourne-Again Shell (bash)

Built on the success of the original Bourne shell, bash introduced improved user-interaction features. With bash, you get Tab completion, history, and wildcard searching for commands and paths. The bash programming language provides more data types, like arrays.

Z Shell (zsh)

zsh combines many of the best features from other shells along with a few of its own tricks into one experience. zsh offers autocorrection of misspelled commands, shorthand for manipulating multiple files, and advanced options for customizing your command prompt.

zsh also provides a framework for deep customization. The Oh My Zsh project supplies a rich set of themes and plugins, and is often used hand in hand with zsh.

macOS will ship with zsh as its default shell starting with Catalina, speaking to the shell’s popularity. Consider acquainting yourself with zsh now so that you’ll be comfortable with it going forward.

Xonsh

If you’re feeling particularly adventurous, you can give Xonsh a try. Xonsh is a shell that combines some features of other Unix-like shells with the power of Python syntax. You can use the language you already know to accomplish tasks on your filesystem and so on.

Although Xonsh is powerful, it lacks the compatibility other shells tend to share. You might not be able to run many existing shell scripts in Xonsh as a result. If you find that you like Xonsh, but compatibility is a concern, then you can use Xonsh as a supplement to your activities in a more widely used shell.

Windows Shells

Similarly to Unix-like operating systems, Windows also offers a number of options when it comes to shells. The shells offered in Windows vary in features and syntax, so you may need to try several to find one you like best.

CMD (cmd.exe)

CMD (short for “command”) is the default CLI shell for Windows. It’s the successor to COMMAND.COM, the shell built for DOS (disk operating system).

Because DOS and Unix evolved independently, the commands and syntax in CMD are markedly different from shells built for Unix-like systems. However, CMD still provides the same core functionality for browsing and manipulating files, running commands, and viewing output.

PowerShell

PowerShell was released in 2006 and also ships with Windows. It provides Unix-like aliases for most commands, so if you’re coming to Windows from macOS or Linux or have to use both, then PowerShell might be great for you.

PowerShell is vastly more powerful than CMD. With PowerShell you can:

- Pipe the output of one command to the input of another

- Automate tasks through the exposed Windows management features

- Use a scripting language to accomplish complex tasks

Windows Subsystem for Linux

Microsoft has released a Windows subsystem for Linux (WSL) for running Linux directly on Windows. If you install WSL, then you can use zsh, bash, or any other Unix-like shell. If you want strong compatibility across your Windows and macOS or Linux environments, then be sure to give WSL a try. You may also consider dual-booting Linux and Windows as an alternative.

See this comparison of command shells for exhaustive coverage.

Terminal Emulators

Early developers used terminals to interact with a central mainframe computer. These were devices with a keyboard and a screen or printer that would display computed output.

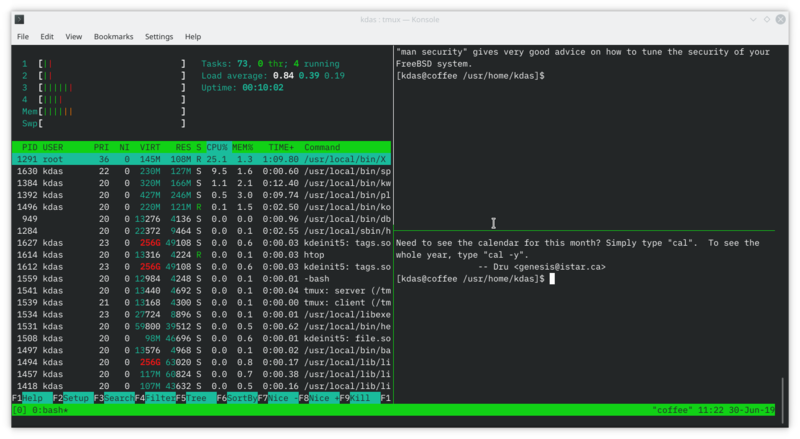

Today, computers are portable and don’t require separate devices to interact with them, but the terminology still remains. Whereas a shell provides the prompt and interpreter you use to interface with text-based CLI tools, a terminal emulator (often shortened to terminal) is the graphical application you run to access the shell.

Almost any terminal you encounter should support the same basic features:

- Text colors for syntax highlighting in your code or distinguishing meaningful text in command output

- Scrolling for viewing an earlier command or its output

- Copy/paste for transferring text in or out of the shell from other programs

- Tabs for running multiple programs at once or separating your work into different sessions

macOS Terminals

The terminal options available for macOS are all full-featured, differing mostly in aesthetics and specific integrations with other tools.

Terminal

If you’re using a Mac, then you may have used the built-in Terminal app before. Terminal supports all the usual functionality, and you can also customize the color scheme and a few hotkeys. It’s a nice enough tool if you don’t need many bells and whistles. You can find the Terminal app in Applications → Utilities → Terminal on macOS.

iTerm2

I’ve been a long-time user of iTerm2. It takes the developer experience on Mac a step further, offering a much wider palette of customization and productivity options that enable you to:

- Integrate with the shell to jump quickly to previously entered commands

- Create custom search term highlighting in the output from commands

- Open URLs and files displayed in the terminal with Cmd+click

A Python API ships with the latest versions of iTerm2, so you can even improve your Python chops by developing more intricate customizations!

iTerm2 is popular enough to enjoy first-class integration with several other tools, and has a healthy community building plugins and so on. It’s a good choice because of its more frequent release cycle compared to Terminal, which only updates as often as macOS does.

Hyper

A relative newcomer, Hyper is a terminal built on Electron, a framework for building desktop applications using web technologies. Electron apps are heavily customizable because they’re “just JavaScript” under the hood. You can create any functionality that you can write the JavaScript for.

On the other hand, JavaScript is a high-level, interpreted language and won’t always perform as well as compiled languages like Objective-C or Swift. Be mindful of the plugins you install or create!

Windows Terminals

As with the shell options, Windows terminal options vary widely in utility. Some are tightly bound to a particular shell as well.

Command Prompt

Command Prompt is the graphical application you can use to work with CMD in Windows. Like CMD, it’s a bare-bones tool for getting a few small things done. Although Command Prompt and CMD provide fewer features than other alternatives, you can be confident that they’ll be available on nearly every Windows installation and in a consistent place.

Cygwin

Cygwin is a third-party suite of tools for Windows that provides a Unix-like wrapper. This was my preferred setup when I was in Windows, but you may consider adopting the Windows Subsystem for Linux as it receives more traction and polish.

Windows Terminal

Microsoft recently released an open source terminal for Windows 10 called Windows Terminal. It lets you work in CMD, PowerShell, and even the Windows Subsystem for Linux. If you need to do a fair amount of shell work in Windows, then Windows Terminal is probably your best bet! Windows Terminal is still in late beta, so it doesn’t ship with Windows yet. Check the documentation for instructions on getting access.

Python Version Management

With your choice of terminal and shell made, you can focus your attention on your Python environment specifically.

Something you’ll eventually run into is the need to run multiple versions of Python. Projects you use may only run on certain versions, or you may be interested in creating a project that supports multiple Python versions. You can configure your Python environment to accommodate these needs.

macOS and most Unix operating systems come with a version of Python installed by default. This is often called the system Python. The system Python works just fine, but it’s usually out of date. As of this writing, macOS High Sierra still ships with Python 2.7.10 as the system Python.

Note: You’ll almost certainly want to install the latest version of Python at a minimum, so you’ll have at least two versions of Python already.

It’s important that you leave the system Python as the default, because many parts of the system rely on the default Python being a specific version. This is one of many great reasons to customize your Python environment!

How do you navigate this? Tooling is here to help.

pyenv

pyenv is a mature tool for installing and managing multiple Python versions. I recommend installing it with Homebrew. After you’ve got pyenv installed, you can install multiple versions of Python into your Python environment with a few short commands:

$ pyenv versions
* system

$ python --version
Python 2.7.10

$ pyenv install 3.7.3  # This may take some time

$ pyenv versions
* system
  3.7.3

You can manage which Python you’d like to use in your current session, globally, or on a per-project basis as well. pyenv will make the python command point to whichever Python you specify. Note that none of these overrides the default system Python for other applications, so you’re safe to use them however they work best for you within your Python environment:

$ pyenv global 3.7.3
$ pyenv versions
  system
* 3.7.3 (set by /Users/dhillard/.pyenv/version)

$ pyenv local 3.7.3
$ pyenv versions
  system
* 3.7.3 (set by /Users/dhillard/myproj/.python-version)

$ pyenv shell 3.7.3
$ pyenv versions
  system
* 3.7.3 (set by PYENV_VERSION environment variable)

$ python --version
Python 3.7.3
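If you want to confirm from inside Python which interpreter the python command resolved to, a small check like the following can help. This is a minimal sketch rather than anything pyenv provides; the function name describe_interpreter is made up for illustration.

```python
import platform
import sys


def describe_interpreter():
    """Return the version and path of the interpreter running this code."""
    version = platform.python_version()  # for example "3.7.3"
    # sys.executable is the path of the running python binary
    return f"Python {version} at {sys.executable}"


if __name__ == "__main__":
    print(describe_interpreter())
```

Running this script after pyenv global, pyenv local, or pyenv shell should report the version you selected, along with the path of the binary that actually ran.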

Because I use a specific version of Python for work, the latest version of Python for personal projects, and multiple versions for testing open source projects, pyenv has proven to be a fairly smooth way for me to manage all these different versions within my own Python environment. See Managing Multiple Python Versions with pyenv for a detailed overview of the tool.

conda

If you’re in the data science community, you might already be using Anaconda (or Miniconda). Anaconda is a sort of one-stop shop for data science software that supports more than just Python.

If you don’t need the data science packages or all the things that come pre-packaged with Anaconda, pyenv might be a better lightweight solution for you. Managing Python versions is pretty similar in each, though. You can install Python versions similarly to pyenv, using the conda command:

$ conda install python=3.7.3

You’ll see a verbose list of all the dependent software conda will install, and it will ask you to confirm.

conda doesn’t have a way to set the “default” Python version or even a good way to see which versions of Python you’ve installed. Rather, it hinges on the concept of “environments,” which you can read more about in the following sections.

Virtual Environments

Now you know how to manage multiple Python versions. Often, you’ll be working on multiple projects that need the same Python version.

Because each project has its own set of dependencies, it’s a good practice to avoid mixing them. If all the dependencies are installed together in a single Python environment, then it will be difficult to discern where each one came from. In the worst cases, two different projects may depend on two different versions of a package, but with Python you can only have one version of a package installed at one time. What a mess!

Enter virtual environments. You can think of a virtual environment as a carbon copy of a base version of Python. If you’ve installed Python 3.7.3, for example, then you can create many virtual environments based off of it. When you install a package in a virtual environment, you do it in isolation from other Python environments you may have. Each virtual environment has its own copy of the python executable.

Tip: Most virtual environment tooling provides a way to update your shell’s command prompt to show the current active virtual environment. Make sure to do this if you frequently switch between projects so you’re sure you’re working inside the correct virtual environment.
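You can also ask Python itself whether it’s running inside a virtual environment. The sketch below assumes an environment created by venv or a recent virtualenv release; conda environments work differently and aren’t detected this way. The function name in_virtual_environment is illustrative.

```python
import sys


def in_virtual_environment():
    """Return True when running inside a venv-style virtual environment."""
    # Inside a virtual environment, sys.prefix points at the environment,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtual_environment())
```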

venv

venv ships with Python versions 3.3+. You can create virtual environments just by passing it a path at which to store the environment’s python, installed packages, and so on:

$ python -m venv ~/.virtualenvs/my-env

You activate a virtual environment by sourcing its activate script:

$ source ~/.virtualenvs/my-env/bin/activate

You exit the virtual environment using the deactivate command, which is made available when you activate the virtual environment:

(my-env)$ deactivate

venv is built on the wonderful work and successes of the independent virtualenv project. virtualenv still provides a few interesting features of its own, but venv is nice because it provides the utility of virtual environments without requiring you to install additional software. You can probably get pretty far with it if you’re working mostly in a single Python version in your Python environment.
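Because venv is a standard library module, you can also drive it from Python code. The following is a minimal sketch that creates an environment in a temporary directory; the helper name create_environment is hypothetical, and with_pip=False is chosen only to keep the example quick and offline.

```python
import tempfile
import venv
from pathlib import Path


def create_environment(env_dir):
    """Create a virtual environment at env_dir and return its config file path."""
    # with_pip=False skips bootstrapping pip into the new environment
    venv.create(env_dir, with_pip=False)
    # venv records the base interpreter's details in pyvenv.cfg
    return Path(env_dir) / "pyvenv.cfg"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        config = create_environment(Path(tmp) / "my-env")
        print(config.read_text())
```

In everyday use you’d likely pass with_pip=True (the equivalent of what python -m venv does by default) so that you can install packages right away.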

If you’re already managing multiple Python versions (or plan to), then it could make sense to integrate with that tooling to simplify the process of making new virtual environments with specific versions of Python. The pyenv and conda ecosystems both provide ways to specify the Python version to use when you create new virtual environments, covered in the following sections.

pyenv-virtualenv

If you’re using pyenv, then pyenv-virtualenv enhances pyenv with a subcommand for managing virtual environments:

# Create virtual environment
$ pyenv virtualenv 3.7.3 my-env

# Activate virtual environment
$ pyenv activate my-env

# Exit virtual environment
(my-env)$ pyenv deactivate

I switch contexts between a large handful of projects on a day-to-day basis. As a result, I have at least a dozen distinct virtual environments to manage in my Python environment. What’s really nice about pyenv-virtualenv is that you can configure a virtual environment using the pyenv local command and have pyenv-virtualenv auto-activate the right environments as you switch to different directories:

$ pyenv virtualenv 3.7.3 proj1
$ pyenv virtualenv 3.7.3 proj2

$ cd /Users/dhillard/proj1
$ pyenv local proj1

(proj1)$ cd ../proj2
$ pyenv local proj2

(proj2)$ pyenv versions
  system
  3.7.3
  3.7.3/envs/proj1
  3.7.3/envs/proj2
  proj1
* proj2 (set by /Users/dhillard/proj2/.python-version)

pyenv and pyenv-virtualenv have provided a particularly fluid workflow in my Python environment.

conda

You saw earlier that conda treats environments, rather than Python versions, as the main method of working. conda has built-in support for managing virtual environments:

# Create virtual environment
$ conda create --name my-env python=3.7.3

# Activate virtual environment
$ conda activate my-env

# Exit virtual environment
(my-env)$ conda deactivate

conda will install the specified version of Python if it isn’t already installed, so you don’t have to run conda install python=3.7.3 first.

pipenv

pipenv is a relatively new tool that seeks to combine package management (more on this in a moment) with virtual environment management. It mostly abstracts the virtual environment management from you, which can be great as long as things go smoothly:

$ cd /Users/dhillard/myproj

# Create virtual environment
$ pipenv install
Creating a virtualenv for this project…
Pipfile: /Users/dhillard/myproj/Pipfile
Using /path/to/pipenv/python3.7 (3.7.3) to create virtualenv…
✔ Successfully created virtual environment!
Virtualenv location: /Users/dhillard/.local/share/virtualenvs/myproj-nAbMEAt0
Creating a Pipfile for this project…
Pipfile.lock not found, creating…
Locking [dev-packages] dependencies…
Locking [packages] dependencies…
Updated Pipfile.lock (a65489)!
Installing dependencies from Pipfile.lock (a65489)…
  🐍   ▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉ 0/0 — 00:00:00
To activate this project's virtualenv, run pipenv shell.
Alternatively, run a command inside the virtualenv with pipenv run.

# Activate virtual environment (uses a subshell)
$ pipenv shell
Launching subshell in virtual environment…
 . /Users/dhillard/.local/share/virtualenvs/test-nAbMEAt0/bin/activate

# Exit virtual environment (by exiting subshell)
(myproj-nAbMEAt0)$ exit

pipenv does all the heavy lifting of creating a virtual environment and activating it for you. If you look carefully, you can see that it also creates a file called Pipfile. After you first run pipenv install, this file contains just a few things:

[[source]]
name = "pypi"
url = "https://pypi.org/simple"
verify_ssl = true

[dev-packages]

[packages]

[requires]
python_version = "3.7"

In particular, note that it shows python_version = "3.7". By default, pipenv creates a virtual Python environment using the same Python version it was installed under. If you want to use a different Python version, then you can create the Pipfile yourself before running pipenv install and specify the version you want. If you have pyenv installed, then pipenv will use it to install the specified Python version if necessary.

Abstracting virtual environment management is a noble goal of pipenv, but it does get hung up with hard-to-read errors occasionally. Give it a try, but don’t worry if you feel confused or overwhelmed by it. The tool, documentation, and community will grow and improve around it as it matures.

To get an in-depth introduction to virtual environments, be sure to read Python Virtual Environments: A Primer.

Package Management

For many of the projects you work on, you’ll probably need some number of third-party packages. Those packages may have their own dependencies in turn. In the early days of Python, using packages involved manually downloading files and pointing Python at them. Today, we’re fortunate to have a variety of package management tools available to us.

Most package managers work in tandem with virtual environments, isolating the packages you install in one Python environment from another. Using the two together is where you really start to see the power of the tools available to you.

pip

pip (pip installs packages) has been the de facto standard for package management in Python for several years. It was heavily inspired by an earlier tool called easy_install. Python incorporated pip into the standard distribution starting in version 3.4. pip automates the process of downloading packages and making Python aware of them.

If you have multiple virtual environments, then you can see that they’re isolated by installing a few packages in one:

$ pyenv virtualenv 3.7.3 proj1
$ pyenv activate proj1

(proj1)$ pip list
Package    Version
---------- ---------
pip        19.1.1
setuptools 40.8.0

(proj1)$ python -m pip install requests
Collecting requests
  Downloading .../requests-2.22.0-py2.py3-none-any.whl (57kB)
    100% |████████████████████████████████| 61kB 2.2MB/s
Collecting chardet<3.1.0,>=3.0.2 (from requests)
  Downloading .../chardet-3.0.4-py2.py3-none-any.whl (133kB)
    100% |████████████████████████████████| 143kB 1.7MB/s
Collecting certifi>=2017.4.17 (from requests)
  Downloading .../certifi-2019.6.16-py2.py3-none-any.whl (157kB)
    100% |████████████████████████████████| 163kB 6.0MB/s
Collecting urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 (from requests)
  Downloading .../urllib3-1.25.3-py2.py3-none-any.whl (150kB)
    100% |████████████████████████████████| 153kB 1.7MB/s
Collecting idna<2.9,>=2.5 (from requests)
  Downloading .../idna-2.8-py2.py3-none-any.whl (58kB)
    100% |████████████████████████████████| 61kB 26.6MB/s
Installing collected packages: chardet, certifi, urllib3, idna, requests
Successfully installed packages

$ pip list
Package    Version
---------- ---------
certifi    2019.6.16
chardet    3.0.4
idna       2.8
pip        19.1.1
requests   2.22.0
setuptools 40.8.0
urllib3    1.25.3

pip installed requests, along with several packages it depends on. pip list shows you all the currently installed packages and their versions.

Warning: You can uninstall packages using pip uninstall requests, for example, but this will only uninstall requests—not any of its dependencies.
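Since pip uninstall leaves dependencies behind, it can help to see what a package declares before you remove it. The sketch below uses importlib.metadata from the standard library, which assumes Python 3.8 or later (newer than the 3.7.3 used in the examples here). The function name declared_requirements is illustrative.

```python
from importlib import metadata  # part of the standard library in Python 3.8+


def declared_requirements(package_name):
    """Return the requirement strings a package declares, or [] if unknown."""
    try:
        requirements = metadata.requires(package_name)
    except metadata.PackageNotFoundError:
        # The package isn't installed in the current environment
        return []
    # requires() returns None when a package declares no dependencies
    return list(requirements or [])


if __name__ == "__main__":
    for requirement in declared_requirements("requests"):
        print(requirement)
```

Keep in mind that another installed package may rely on the same dependency, so this list is a starting point for cleanup rather than a safe-to-delete list.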

A common way to specify project dependencies for pip is with a requirements.txt file. Each line in the file specifies a package name and, optionally, the version to install:

scipy==1.3.0
requests==2.22.0

You can then run python -m pip install -r requirements.txt to install all of the specified dependencies at once. For more on pip, see What is Pip? A Guide for New Pythonistas.
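To make the file format concrete, here is a rough sketch that reads simple name==version lines into a dictionary. It deliberately ignores most of what pip accepts (version ranges, extras, environment markers, and options such as -r), and the function name parse_pins is made up for this example.

```python
def parse_pins(text):
    """Map package names to pinned versions for simple name==version lines."""
    pins = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        name, separator, version = line.partition("==")
        # Lines without "==" are recorded with a version of None
        pins[name.strip()] = version.strip() if separator else None
    return pins


example = "scipy==1.3.0\nrequests==2.22.0\n"
print(parse_pins(example))  # {'scipy': '1.3.0', 'requests': '2.22.0'}
```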

pipenv

pipenv has most of the same basic operations as pip but thinks about packages a bit differently. Remember the Pipfile that pipenv creates? When you install a package, pipenv adds that package to Pipfile and also adds more detailed information to a new lock file called Pipfile.lock. Lock files act as a snapshot of the precise set of packages installed, including direct dependencies as well as their sub-dependencies.

You can see pipenv sorting out the package management when you install a package:

$ pipenv install requests
Installing requests…
Adding requests to Pipfile's [packages]…
✔ Installation Succeeded
Pipfile.lock (444a6d) out of date, updating to (a65489)…
Locking [dev-packages] dependencies…
Locking [packages] dependencies…
✔ Success!
Updated Pipfile.lock (444a6d)!
Installing dependencies from Pipfile.lock (444a6d)…
  🐍   ▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉ 5/5 — 00:00:00

pipenv will use this lock file, if present, to install the same set of packages. You can ensure that you always have the same set of working dependencies in any Python environment you create using this approach.

pipenv also distinguishes between development dependencies and production (regular) dependencies. You may need some tools during development, such as black or flake8, that you don’t need when you run your application in production. You can specify that a package is for development when you install it:

$ pipenv install --dev flake8
Installing flake8…
Adding flake8 to Pipfile's [dev-packages]…
✔ Installation Succeeded
...

pipenv install (without any arguments) will only install your production packages by default, but you can tell it to install development dependencies as well with pipenv install --dev.
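Pipfile.lock is a JSON file, so you can inspect the snapshot with nothing but the standard library. The sketch below assumes the usual layout, in which regular packages sit under a "default" key and development packages under "develop"; the sample data is a trimmed, hypothetical lock file, and locked_versions is an illustrative name.

```python
import json


def locked_versions(lock_text):
    """Return {section: {package: version}} from the text of a Pipfile.lock."""
    data = json.loads(lock_text)
    result = {}
    # "default" holds regular packages; "develop" holds development packages
    for section in ("default", "develop"):
        packages = data.get(section, {})
        result[section] = {
            name: details.get("version") for name, details in packages.items()
        }
    return result


# A trimmed, hypothetical lock file used only for demonstration
sample = json.dumps({
    "_meta": {"requires": {"python_version": "3.7"}},
    "default": {"requests": {"version": "==2.22.0"}},
    "develop": {},
})
print(locked_versions(sample))
```

To try it on a real project, you could pass in the contents of your own Pipfile.lock instead of the sample string.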

poetry

poetry addresses additional facets of package management, including creating and publishing your own packages. After installing poetry, you can use it to create a new project:

$ poetry new myproj
Created package myproj in myproj

$ ls myproj/
README.rst    myproj    pyproject.toml    tests

Similarly to how pipenv creates the Pipfile, poetry creates a pyproject.toml file. This recent standard contains metadata about the project as well as dependency versions:

[tool.poetry]
name = "myproj"
version = "0.1.0"
description = ""
authors = ["Dane Hillard <github@danehillard.com>"]

[tool.poetry.dependencies]
python = "^3.7"

[tool.poetry.dev-dependencies]
pytest = "^3.0"

[build-system]
requires = ["poetry>=0.12"]
build-backend = "poetry.masonry.api"

You can install packages with poetry add (or as development dependencies with poetry add --dev):

$ poetry add requests
Using version ^2.22 for requests

Updating dependencies
Resolving dependencies... (0.2s)

Writing lock file

Package operations: 5 installs, 0 updates, 0 removals

  - Installing certifi (2019.6.16)
  - Installing chardet (3.0.4)
  - Installing idna (2.8)
  - Installing urllib3 (1.25.3)
  - Installing requests (2.22.0)

poetry also maintains a lock file, and it has a benefit over pipenv because it keeps track of which packages are subdependencies. As a result, you can uninstall requests and its dependencies with poetry remove requests.

conda

With conda, you can use pip to install packages as usual, but you can also use conda install to install packages from different channels, which are collections of packages provided by Anaconda or other providers. To install requests from the conda-forge channel, you can run conda install -c conda-forge requests.

Learn more about package management in conda in Setting Up Python for Machine Learning on Windows.

Python Interpreters

If you’re interested in further customization of your Python environment, you can choose the command line experience you have when interacting with Python. The Python interpreter provides a read-eval-print loop (REPL), which is what comes up when you type python with no arguments in your shell:

Python 3.7.3 (default, Jun 17 2019, 14:09:05)
[Clang 10.0.1 (clang-1001.0.46.4)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
>>> 2 + 2
4
>>> exit()

The REPL reads what you type, evaluates it as Python code, and prints the result. Then it waits to do it all over again. This is about as much as the default Python REPL provides, which is sufficient for a good portion of typical work.
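The read-eval-print cycle can be sketched in a few lines of Python. This is a simplified illustration, not how the real interpreter is implemented: it handles single-line input only, and the function name read_eval_print is made up.

```python
def read_eval_print(source, namespace):
    """Evaluate one line as a REPL would and return the text it would echo."""
    try:
        # Expressions compile in "eval" mode and produce a value
        code = compile(source, "<repl>", "eval")
    except SyntaxError:
        # Statements such as assignments have no value to echo back
        exec(compile(source, "<repl>", "exec"), namespace)
        return ""
    result = eval(code, namespace)
    # Like the real REPL, echo nothing when the result is None
    return "" if result is None else repr(result)


namespace = {}
for line in ["x = 2", "x + 2"]:
    output = read_eval_print(line, namespace)
    if output:
        print(output)  # prints 4 for the second line
```

The shared namespace dictionary is what lets a name defined on one line be used on the next, just as in an interactive session.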

IPython

Like Anaconda, IPython is a suite of tools supporting more than just Python, but one of its main features is an alternative Python REPL. IPython’s REPL numbers each command and explicitly labels each command’s input and output. After installing IPython (python -m pip install ipython), you can run the ipython command in place of the python command to use the IPython REPL:

Python 3.7.3
Type 'copyright', 'credits' or 'license' for more information
IPython 6.0.0.dev -- An enhanced Interactive Python. Type '?' for help.

In [1]: 2 + 2
Out[1]: 4

In [2]: print("Hello!")
Hello!

IPython also supports Tab completion, more powerful help features, and strong integration with other tooling such as matplotlib for graphing. IPython provided the foundation for Jupyter, and both have been used extensively in the data science community because of their integration with other tools.

The IPython REPL is highly configurable too, so while it falls just shy of being a full development environment, it can still be a boon to your productivity. Its built-in and customizable magic commands are worth checking out.

bpython

bpython is another alternative REPL that provides inline syntax highlighting, tab completion, and even auto-suggestions as you type. It provides quite a few of the quick benefits of IPython without altering the interface much. Without the weight of the integrations and so on, bpython might be good to add to your repertoire for a while to see how it improves your use of the REPL.

Text Editors

You spend a third of your life sleeping, so it makes sense to invest in a great bed. As a developer, you spend a great deal of your time reading and writing code, so it follows that you should invest time in setting up your Python environment’s text editor just the way you like it.

Each editor offers a different set of key bindings and model for manipulating text. Some require a mouse to interact with them effectively, whereas others can be controlled with only the keyboard. Some people consider their choice of text editor and customizations some of the most personal decisions they make!

There are so many options to choose from in this arena, so I won’t attempt to cover it in detail here. Check out Python IDEs and Code Editors (Guide) for a broad overview. A good strategy is to find a simple, small text editor for quick changes and a full-featured IDE for more involved work. Vim and PyCharm, respectively, are my editors of choice.

Python Environment Tips and Tricks

Once you’ve made the big decisions about your Python environment, the rest of the road is paved with little tweaks to make your life a little easier. These tweaks each save minutes or seconds alone, but they collectively save you hours of time.

Making a certain activity easier reduces your cognitive load so you can focus on the task at hand instead of the logistics surrounding it. If you notice yourself performing an action over and over, then consider automating it. Use this wonderful chart from XKCD to determine if it’s worth automating a particular task.

Here are a few final tips.

Know your current virtual environment

As mentioned earlier, it’s a great idea to display the active Python version or virtual environment in your command prompt. Most tools will do this for you, but if not (or if you want to customize the prompt), the value is usually contained in the VIRTUAL_ENV environment variable.
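As a rough illustration of using that variable, the following sketch builds a prompt prefix from VIRTUAL_ENV. The function name prompt_prefix and the example path are hypothetical; in practice you’d do the equivalent in your shell’s prompt configuration.

```python
import os


def prompt_prefix(environ=None):
    """Return a prefix such as "(my-env) " for the active virtual environment."""
    environ = os.environ if environ is None else environ
    env_path = environ.get("VIRTUAL_ENV", "")
    if not env_path:
        return ""  # no virtual environment is active
    # The environment's directory name doubles as a short label
    name = os.path.basename(env_path.rstrip(os.sep))
    return f"({name}) "


# Hypothetical path used only for demonstration
print(repr(prompt_prefix({"VIRTUAL_ENV": "/home/user/.virtualenvs/my-env"})))
```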

Disable unnecessary, temporary files

Have you ever noticed *.pyc files all over your project directories? These files are pre-compiled Python bytecode—they help Python start your application faster. In production, these are a great idea because they’ll give you some performance gain. During local development, however, they’re rarely useful. Set PYTHONDONTWRITEBYTECODE=1 to disable this behavior. If you find use cases for them later, then you can easily remove this from your Python environment.
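To check whether the setting took effect, and to find bytecode files that were written before you changed it, a sketch along these lines may be useful. The helper name find_bytecode_files is illustrative, and the temporary directory only stands in for a real project.

```python
import sys
import tempfile
from pathlib import Path


def find_bytecode_files(root):
    """Return every *.pyc file below root, sorted for stable output."""
    return sorted(Path(root).rglob("*.pyc"))


# True when PYTHONDONTWRITEBYTECODE is set (or Python was started with -B)
print("Bytecode writing disabled:", sys.dont_write_bytecode)

with tempfile.TemporaryDirectory() as tmp:
    # Simulate a project directory containing one leftover bytecode file
    cache_dir = Path(tmp) / "__pycache__"
    cache_dir.mkdir()
    (cache_dir / "example.cpython-37.pyc").write_bytes(b"")
    print([path.name for path in find_bytecode_files(tmp)])
```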

Customize your Python interpreter

You can affect how the REPL behaves using a startup file. Python will read this startup file and execute the code it contains before entering the REPL. Set the PYTHONSTARTUP environment variable to the path of your startup file. (Mine’s at ~/.pystartup.) If you’d like to hit Up for command history and Tab for completion like your shell provides, then give this startup file a try.
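For a sense of what such a startup file can contain, here is a minimal sketch, not the author’s file. It assumes the readline module is available (it isn’t on every platform) and uses a hypothetical history location, ~/.python_history_custom. The Tab binding shown targets GNU readline; builds backed by libedit, which some macOS Pythons use, expect different binding syntax.

```python
import atexit
import os

try:
    import readline
    import rlcompleter  # noqa: F401  (importing it installs the default completer)
except ImportError:  # readline is not available on every platform
    readline = None


def configure_repl(history_path):
    """Enable Tab completion and persistent history; return False if unsupported."""
    if readline is None:
        return False
    # GNU readline syntax; libedit-based builds use a different binding string
    readline.parse_and_bind("tab: complete")
    try:
        readline.read_history_file(history_path)
    except OSError:
        pass  # no usable history file yet

    def save_history():
        try:
            readline.write_history_file(history_path)
        except OSError:
            pass  # history is a convenience, so failures are ignored

    atexit.register(save_history)
    return True


# Only configure the REPL when Python was started with PYTHONSTARTUP set
if os.environ.get("PYTHONSTARTUP"):
    configure_repl(os.path.expanduser("~/.python_history_custom"))
```

Recent Python versions already enable history and completion by default on many platforms, so you may only need a file like this to customize where history is stored.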

Conclusion

You learned about many facets of the typical Python environment. Armed with this knowledge, you can:

- Choose a terminal with the aesthetics and enhanced features you like

- Choose a shell with as many (or as few) customization options as you need

- Manage multiple versions of Python on your system

- Manage multiple projects that use a single version of Python, using virtual Python environments

- Install packages in your virtual environments

- Choose a REPL that suits your interactive coding needs

When you’ve got your Python environment just so, I hope you’ll share screenshots, screencasts, or blog posts about your perfect setup ✨


Raphael Pierzina
