Talk Python to Me: #171 1M Jupyter notebooks analyzed

Bhishan Bhandari: Python Tuples

This is an introductory post about tuples in Python. Through examples, we will see what tuples are, their immutability, their use cases, and various operations on them. Rather than a blog post, it is a set of examples on tuples in Python. Tuples: a tuple is a sequence of objects in Python. Unlike lists, tuples are immutable, which […]
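A minimal illustration of the immutability mentioned above (a sketch, not taken from the original post; the variable names are made up): item assignment works on a list but raises TypeError on a tuple, and because tuples are immutable they can also serve as dictionary keys.

```python
# The same two values stored in a list and in a tuple
point_list = [3, 4]
point_tuple = (3, 4)

# Lists are mutable: item assignment changes them in place
point_list[0] = 10

# Tuples are immutable: item assignment raises TypeError
tuple_error = None
try:
    point_tuple[0] = 10
except TypeError as exc:
    tuple_error = str(exc)

# Immutable (and hashable) tuples can be used as dictionary keys
labels = {point_tuple: "start"}

print(point_list)   # [10, 4]
print(point_tuple)  # (3, 4)
print(tuple_error)  # 'tuple' object does not support item assignment
```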

The post Python Tuples appeared first on The Tara Nights.

Sumana Harihareswara - Cogito, Ergo Sumana: "Python Grab Bag: A Set of Short Plays" Accepted for PyGotham 2018

(The format will be similar to the format I used in "Lessons, Myths, and Lenses: What I Wish I'd Known in 1998" (video, partial notes), but some plays will be more elaborate and theatrical -- much more like our inspiration "The Infinite Wrench".)

To quote the session description:

A frenetic combination of educational and entertaining segments, as chosen by the audience! In between segments, audience members will shout out numbers from a menu, and we'll perform the selected segment. It may be a short monologue, it may be a play, it may be a physical demo, or it may be a tiny traditional conference talk. Audience members should walk away with some additional understanding of the history of Python, knowledge of some tools and libraries available in the Python ecosystem, and some Python-related amusement.

So now Jason and I just have to find a director, write and memorize and rehearse and block probably 15-20 Python-related plays/songs?/dances?/presentations, acquire and set up some number of props, figure out lights and sound and visuals, possibly recruit volunteers to join us for a few bits, run some preview performances to see whether the lessons and jokes land, and perform our opening (also closing) performance. In 68 days.

(Simultaneously: I have three clients, and want to do my bit before the midterm elections, and work on a fairly major apartment-related project with Leonard, and and and and.)

Jason, thank you for the way your eyes lit up on the way back from PyCon when I mentioned this PyGotham session idea -- I think your enthusiasm will energize me when I'm feeling overwhelmed by the ambition of this project, and I predict I'll reciprocate the favor! PyGotham program committee & voters, thank you for your vote of confidence. Leonard, thanks in advance for your patience with me bouncing out of bed to write down a new idea, and probably running many painfully bad concepts past you. Future Sumana, it's gonna be ok. It will, possibly, be great. You're going to give that audience an experience they've never had before.

Kay Hayen: Nuitka Release 0.5.32

This is to inform you about the new stable release of Nuitka. It is the extremely compatible Python compiler. Please see the page "What is Nuitka?" for an overview.

This release contains substantial new optimization, bug fixes, and already full support for Python 3.7. Among the fixes, the enhanced coroutine work for compatibility with uncompiled coroutines is the most important.

Bug Fixes

- Fix, write-backs of attribute in-place assignments were being optimized incorrectly.

- Fix, the generator_stop __future__ feature was not properly supported. It is now the default for Python 3.7, which exposed some of the flaws.

- Python3.5: The __qualname__ of coroutines and asyncgen was wrong.

- Python3.5: Fix, for dictionary unpackings to calls, check the keys if they are string values, and raise an exception if not.

- Python3.6: Fix, assignment unpacking needs to check for too-short sequences; we were giving IndexError instead of ValueError for these. The error messages also need to consider whether they should say "at least" in their wording.

- Fix, outline nodes were cloned more than necessary, which would corrupt the code generation if they later got removed, leading to a crash.

- Python3.5: Compiled coroutines awaiting uncompiled coroutines did not properly finish the uncompiled ones. Also, the other way around, a RuntimeError was raised when trying to pass an exception to them after they had already finished. This should resolve issues with the asyncio module.

- Fix, side effects of a detected exception raise led to an infinite loop in optimization when they themselves had an exception detected inside of them. They are now optimized in-place, avoiding an extra step later on.
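Several of these fixes are about matching CPython's own behaviour. The sketch below, written for CPython 3.7 or later and not taken from the release notes, shows that reference behaviour for three of them: a StopIteration leaking out of a generator body (the generator_stop change), non-string keys in a dictionary unpacked into a call, and the "at least" wording for too-short starred unpacking. The helper names are illustrative only.

```python
# 1. generator_stop (PEP 479): a StopIteration escaping a generator body
#    is turned into a RuntimeError instead of silently ending iteration.
def first_items(iterables):
    for it in iterables:
        yield next(iter(it))  # raises StopIteration for an empty iterable

gen = first_items([[1], [], [3]])
results = [next(gen)]
stop_error = None
try:
    results.append(next(gen))
except RuntimeError as exc:
    stop_error = exc

# 2. Dictionary unpacking into a call: keyword names must be strings.
def accepts_anything(**kwargs):
    return kwargs

ok_kwargs = accepts_anything(**{"a": 1})
try:
    accepts_anything(**{1: "one"})
    non_string_rejected = False
except TypeError:
    non_string_rejected = True

# 3. Too-short unpacking raises ValueError (not IndexError); a starred
#    target switches the message to "expected at least".
def unpack_message(values, starred):
    try:
        if starred:
            a, b, *rest = values
        else:
            a, b, c = values
    except ValueError as exc:
        return str(exc)
    return None

plain_msg = unpack_message([1, 2], starred=False)
starred_msg = unpack_message([1], starred=True)

print(plain_msg)    # not enough values to unpack (expected 3, got 2)
print(starred_msg)  # not enough values to unpack (expected at least 2, got 1)
```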

New Features

- Support for Python 3.7 with only some corner cases not supported yet.

Optimization

- Delay creation of the StopIteration exception in generator code for as long as possible. This gives more compact code for generators, which now pass their return values via a compiled generator attribute for Python 3.3 or higher.

- Python3: More immediate re-formulation of classes with no bases. Avoids noise during optimization.

- Python2: For class dictionaries that are only assigned from values without side effects, they are not converted to temporary variable usages, allowing the normal SSA based optimization to work on them. This leads to constant values for class dictionaries of simple classes.

- Explicit cleanup of nodes, variables, and local scopes that become unused has been added, allowing cyclic dependencies that prevented memory release to be broken.
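For context on the StopIteration item above: the "return values" are the values of return statements inside generators. Since Python 3.3 (PEP 380), `return x` in a generator finishes it with a StopIteration whose `.value` is `x`, and `yield from` evaluates to that value. This sketch shows only the language-level behaviour; it says nothing about how Nuitka stores the value internally, and `countdown`/`delegate` are made-up names.

```python
def countdown(n):
    """Yield n, n-1, ..., 1, then return a final value."""
    while n > 0:
        yield n
        n -= 1
    return "liftoff"  # carried by StopIteration.value

# Driving the generator by hand exposes the return value on StopIteration
gen = countdown(1)
first = next(gen)
returned = None
try:
    next(gen)
except StopIteration as exc:
    returned = exc.value

def delegate():
    # yield from re-yields the inner items and evaluates to the return value
    result = yield from countdown(2)
    yield result

delegated = list(delegate())
print(first, returned, delegated)  # 1 liftoff [2, 1, 'liftoff']
```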

Tests

- Adapted 3.5 tests to work with 3.7 coroutine changes.

- Added CPython 3.7 test suite.

Cleanups

- Removed remaining code that was there for 3.2 support. All uses of version comparisons with 3.2 have been adapted. For us, Python3 now means 3.3, and we will not work with 3.2 at all. This removed a fair bit of complexity for some things, but not all that much.

- Have a dedicated file for import-related helpers, so they are easier to find if necessary. Also, the code for importing a name is no longer in the header file, as it is not performance relevant.

- Disable Python warnings when running scons. These are particularly given when using a Python debug binary, which happens when Nuitka is run with the --python-debug option and the inline copy of SCons is used.

- Have a factory function for all conditional statement nodes created. This solved a TODO and handles the creation of statement sequences for the branches as necessary.

- Split class reformulation into two files, one for Python2 and one for Python3 variant. They share no code really, and are too confusing in a single file, for the huge code bodies.

- Locals scopes now have a registry, where functions and classes register their locals type, and then it is created from that.

- Have a dedicated helper function for single argument calls in static code that does not require an array of objects as an argument.

Organizational

- There are now requirements-devel.txt and requirements.txt files aimed at usage with scons and by users, but they are not used in installation.

Summary

This release takes the important step of adding conversion of locals dictionary usages to temporary variables. It is not yet done everywhere it is possible, and the resulting temporary variables are not yet propagated in all the cases where that is clearly possible. Upcoming releases ought to make most Python2 classes use direct dictionary creation.

Adding support for Python 3.7 is of course also a huge step, and it happened fairly quickly after that version's release. The generic classes it adds were the only real major new feature. Its changes to the internals of exception handling were what held things back initially, but past that, it was really easy.

Expect more optimization to come in the next releases, aiming both at the ability to predict the results of Python3 metaclass __prepare__ methods and at applying more optimization to variables after they become temporary variables.

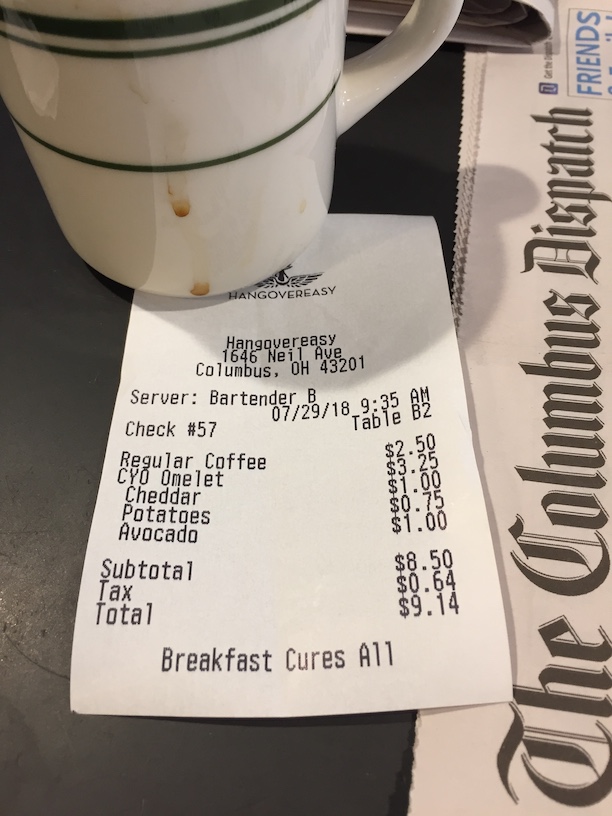

Philip Semanchuk: Thanks to PyOhio 2018!

Thanks to organizers, sponsors, volunteers, and attendees for another great PyOhio!

Here are the slides from my talk on Python 2 to 3 migration.

Mike Driscoll: PyDev of the Week: William F. Punch

This week we welcome William Punch as our PyDev of the Week. Mr. Punch is the co-author of the book The Practice of Computing Using Python, and he is an assistant professor of computer science at Michigan State University. Let’s take a few moments to get to know him better!

Can you tell us a little about yourself (hobbies, education, etc):

I’m a graduate of The Ohio State University. I did my undergraduate work in organic/bio chemistry and worked for a while in a medical research lab. I found I didn’t like the work as much as I did in college so I looked for something else. Fortunately the place I worked offered continuing education so I started going to school at night at the University of Cincinnati to study chemical engineering. I thought I could get through that quickly given my background. The first courses I took were computer science courses and I was completely taken with them. I changed to CS and eventually went back to OSU and got my Ph.D.

As a chemist I was always fascinated with glasswork, and so I do glass blowing in my town when I have the time.

Why did you start using Python?

My colleague, Rich Enbody, and I were starting to re-design our introductory programming course, which had been in C++. We knew that was a bad choice for an introductory language but were unsure what to use instead. Most fortunately, we had a new faculty member join us at MSU, Titus Brown (now at UC Davis), who was a Python fan. His enthusiasm was contagious, so we looked into Python and were immediately convinced that this was a great introductory programming language.

We redid the course in Python but couldn’t find a good book to support it, so we wrote our own (“The Practice of Computing Using Python”, now in its third edition).

What other programming languages do you know and which is your favorite?

I was pretty proficient in Lisp, especially in my early years as I did a Ph.D. in Artificial Intelligence. I still love Lisp, but other languages proved more “durable” so I mostly program in Python or C++ (the alpha and omega I would think of programming languages, at least in terms of difficulty).

What projects are you working on now?

I think my experience with Python gave me the incentive to rethink how C++ is taught (we teach C++ as the second language here at MSU). Given the changes with C++11 through 17 and with the development of the STL, you can write very Python-like code in C++ and be effective. I’m revamping the course and developing a book to accompany it.

Which Python libraries are your favorite (core or 3rd party)?

Far and away my favorite library is matplotlib. The focus for the Python book was data analysis and visualization and matplotlib is the visualization tool. So easy to use but so flexible as well. I love their saying: “Making easy things easy and hard things possible”. I use numpy (who doesn’t?) and pandas (given the data analysis focus), but also like more computational stuff like pycuda and pytorch. One of my Ph.D. students did a lot of his Python prototyping using pypy to speed things up, which helped us a lot in working out some of the details of his dissertation.

And finally, I use ipython as my console, which I think is a given these days as well. What Fernando Pérez did when he started the whole ipython/notebook/jupyter system is a remarkable story about how a small idea can take off and make a very big difference.

What did you learn from writing the book, “The Practice of Computing using Python”?

What I learned is probably not what most would think. I learned that writing a book is hard. Not just the hard work of grinding out the pages, but writing in a way that is useful to the students who might read it. Explaining ideas simply and clearly is not easily, nor quickly, done. One of my favorite quotes (probably rightfully attributed to Blaise Pascal) is translated to something like

“I have made this longer than usual because I have not had time to make it shorter.”

(see https://quoteinvestigator.com/2012/04/28/shorter-letter/ )

What would you do differently if you were to rewrite it?

I’m a huge fan of the whole notebook/jupyter ecosystem and I regret that our work came out before that was a well-developed system. I think the future of writing textbooks is going to be changed because of notebooks and students will be much better off because of that work.

Thanks for doing the interview!

Not Invented Here: Class Warfare in Python 2

In Python 2, there are two distinct types of classes, the so-called "old" (or "classic") and (increasingly inaccurately named) "new" style classes. This post will discuss the differences between the two as visible to the Python programmer, and explore a little bit of the implementation decisions behind those differences.

New Style Classes

New style classes were introduced in Python 2.2 back in December of 2001. [1] New style classes are most familiar to modern Python programmers and are the only kind of classes available in Python 3.

Let's look at some of the observable properties of new style classes.

```python
>>> class NewStyle(object):
...     pass
```

In Python 2, we create new style classes by (explicitly or implicitly) inheriting from object [2]. This means that the class NewStyle is an instance of type; that is, type is its metaclass (alternately, we can say that any instance of type is a new style class):

```python
>>> NewStyle
<class 'NewStyle'>
>>> isinstance(NewStyle, type)
True
>>> type(NewStyle)
<type 'type'>
```

Instances of this class are, well, instances of this class, and their type is this class:

```python
>>> new_style_instance = NewStyle()
>>> type(new_style_instance) is NewStyle
True
>>> isinstance(new_style_instance, NewStyle)
True
```

Unsurprisingly, instances of NewStyle classes are also instances of object; it's right there in the class definition and the class __bases__ and the method resolution order (MRO):

```python
>>> isinstance(new_style_instance, object)
True
>>> NewStyle.__bases__
(<type 'object'>,)
>>> NewStyle.mro()
[<class 'NewStyle'>, <type 'object'>]
```

The default repr of a new style instance includes its type's name:

```python
>>> print(repr(new_style_instance))
<NewStyle object at 0x...>
```

Old Style Classes

If that's a new style class, what's an old style class? Old style, or "classic" classes, were the only type of class available in Python 2.1 and earlier. For backwards compatibility when moving older code to Python 2.2 or later, they remained the default (we'll see shortly some of the practical behaviour differences between old and new style classes).

This means that we create an old style class by not specifying any ancestors. We also create old style classes when only specifying ancestors that themselves are old style classes.

```python
>>> class OldStyle:
...     pass
```

Let's look at the same observable properties as we did with new style classes and compare them. We've already seen that we didn't specify object in the inheritance list. Is this class a type?

```python
>>> OldStyle
<class __builtin__.OldStyle at 0x...>
>>> isinstance(OldStyle, type)
False
>>> type(OldStyle)
<type 'classobj'>
```

If you've been paying attention, you're not surprised to see that old style classes are not instances of type (after all, that's the definition we used for new style classes). They have a different repr, which is not too surprising, but they also have a different type.

```python
>>> type(type(OldStyle))
<type 'type'>
```

Hmm, and the type of that different type (i.e., its metaclass) is type. That makes me wonder, do all old-style classes share a type? Let's continue exploring.

Instances of the old style class are of course still instances of the old style class, but their type is not that class, unlike with new style classes:

```python
>>> old_style_instance = OldStyle()
>>> isinstance(old_style_instance, OldStyle)
True
>>> type(old_style_instance) is OldStyle
False
>>> type(old_style_instance)
<type 'instance'>
```

Instead, their type is something called instance. Now I'm wondering again, do all instances of old style classes share a type? If so, how does class-specific behaviour get implemented?

```python
>>> class OldStyle2:
...     pass
>>> old_style2_instance = OldStyle2()
>>> type(old_style2_instance)
<type 'instance'>
>>> type(old_style2_instance) is type(old_style_instance)
True
```

They do! Curiouser and curiouser. How is type-specific behaviour implemented then? Let's keep going.

We saw that new style classes are instances of object, and it exists in their __bases__ and MRO. What about old classes?

```python
>>> isinstance(old_style_instance, object)
True
>>> OldStyle.__bases__
()
>>> OldStyle.mro()
Traceback (most recent call last):
...
AttributeError: class OldStyle has no attribute 'mro'
```

Strangely, the old style instance is still an instance of object, even though it doesn't inherit from it, as seen by it not being in the __bases__ (and it doesn't even have the concept of an exposed MRO).

Finally, the repr of old style instances is also different:

```python
>>> print(repr(old_style_instance))
<__builtin__.OldStyle instance at 0x...>
```

Practically Speaking

OK, that's enough time spent down in the weeds dealing with subtle differences in inheritance bases and reprs. Before we talk about implementation, are there any practical differences a Python programmer should be concerned about, or at least know about?

Yes. I'll hit some of the highlights here; there are probably others. (TL;DR: Always write new-style classes.)

Operators

For new style classes, all the Python special "dunder" methods (like __repr__ and the arithmetic and comparison operators such as __add__ and __eq__) must be defined on the class itself. If we want to change the repr, we can't do it in the instance, we have to do so on the class:

```python
>>> new_style_instance.__repr__ = lambda: "Hi, I'm a new instance"
>>> print(repr(new_style_instance))
<NewStyle object at 0x...>
>>> NewStyle.__repr__ = lambda self: "Hi, I'm the new class"
>>> print(repr(new_style_instance))
Hi, I'm the new class
```

(We monkey-patched a class after its definition here, which does work thanks to some magic we'll discuss soon, but that's not usually the way it's done.)

In contrast, old style classes allowed dunders to be assigned to an instance, even overriding one set on the class, in exactly the same way that regular methods work on both new and old style classes.

```python
>>> OldStyle.__repr__ = lambda self: "Hi, I'm the old class"
>>> print(repr(old_style_instance))
Hi, I'm the old class
>>> old_style_instance.__repr__ = lambda: "Hi, I'm the old instance"
>>> print(repr(old_style_instance))
Hi, I'm the old instance
```

Old Style Instances are Bigger

On a 64-bit platform, old style instances are one pointer size larger:

```python
>>> import sys
>>> sys.getsizeof(new_style_instance)
64
>>> sys.getsizeof(old_style_instance)
72
```

Old Style Classes Ignore __slots__

The __slots__ declaration can be used not only to optimize memory usage for small, frequently used objects but also to offer tighter control over what attributes an instance can have, which may be useful in some scenarios. Unfortunately, old style classes accept but ignore this declaration.

```python
>>> class NewWithSlots(object):
...     __slots__ = ('attr',)
>>> new_with_slots = NewWithSlots()
>>> sys.getsizeof(new_with_slots)
56
>>> new_with_slots.not_a_slot = 42
Traceback (most recent call last):
...
AttributeError: 'NewWithSlots' object has no attribute 'not_a_slot'
>>> new_with_slots.attr = 42
>>> sys.getsizeof(new_with_slots)
56
>>> class OldWithSlots:
...     __slots__ = ('attr',)
>>> old_with_slots = OldWithSlots()
>>> sys.getsizeof(old_with_slots)
72
>>> old_with_slots.not_a_slot = 42
>>> sys.getsizeof(old_with_slots)
72
```

Old Style Classes Ignore __getattribute__

The __getattribute__ dunder method allows an instance to intercept all attribute access. It's what makes it possible to implement transparently persistent objects in pure-Python. Indeed, it's the same basic feature that allowed for descriptors to be implemented in Python 2.2. Old style classes only support __getattr__, which is only used when the attribute cannot be found.

```python
>>> class NewWithCustomAttrs(object):
...     def __getattribute__(self, name):
...         print("Looking up %s" % name)
...         if name == 'computed': return 'computed'
...         return object.__getattribute__(self, name)
...     def __getattr__(self, name):
...         print("Returning default for %s" % name)
...         return 1
>>> custom_new = NewWithCustomAttrs()
>>> custom_new.foo
Looking up foo
Returning default for foo
1
>>> custom_new.foo = 42
>>> custom_new.foo
Looking up foo
42
>>> custom_new.computed = 42
>>> custom_new.computed
Looking up computed
'computed'
>>> class OldWithCustomAttrs:
...     def __getattribute__(self, name):
...         print("Looking up %s" % name)
...         if name == 'computed': return 'computed'
...         return object.__getattribute__(self, name)
...     def __getattr__(self, name):
...         print("Returning default for %s" % name)
...         return 1
>>> custom_old = OldWithCustomAttrs()
>>> custom_old.foo
Returning default for foo
1
>>> custom_old.foo = 42
>>> custom_old.foo
42
>>> custom_old.computed = 42
>>> custom_old.computed
42
```

Contrary to some information that can be found on the Internet, old style classes can use most descriptors, including @property.

Old Style Classes Have a Simplistic MRO

When multiple inheritance is involved, the MRO of an old style class can be quite surprising. I'll let Guido provide the explanation.

Old Style Classes are Slow

This is especially true on PyPy, but even on CPython 2.7 an old style class is three times slower at basic arithmetic.

```python
>>> import timeit
>>> class NewAdd(object):
...     def __add__(self, other):
...         return 1
>>> timeit.new_add = NewAdd()  # Cheating, sneak this into the globals
>>> timeit.timeit('new_add + 1')
0.2...
>>> class OldAdd:
...     def __add__(self, other):
...         return 1
>>> timeit.old_add = OldAdd()
>>> timeit.timeit('old_add + 1')
0.6...
```

Implementing Old Style Classes

The shift to new style classes represents something of a shift away from Python's earlier free-wheeling days when it could almost be thought of as a prototype-based language into something more traditionally class based. Yet Python 2.2 up through Python 2.7 still support the old way of doing things. It would be silly to imagine there are two completely separate object and type hierarchy implementations inside the CPython interpreter, along with two separate ways of doing arithmetic and getting reprs, etc. [3], yet the old style classes continue to work as always. How is this done?

We've had some tantalizing clues to help point us in the right direction.

Regarding instances of old style classes, instances of even separate classes share the same type. The same is not true for new style classes.

Old style instances are still instances of object.

Regarding old style classes themselves, they are not instances of type, rather of classobj...which itself is an instance of type.

Regarding performance, the change to method lookup "provides significant scope for speed optimisations within the interpreter".

If you followed the link to see what was new in Python 2.2, you'd see that one of the other major features introduced by PEP 253 was the ability to subclass built-in types like list and override their operators. In Python 2.1, just as with old style classes today, all classes implemented in Python shared the same type, but classes implemented in C (like list) could be distinct types; importantly, types were not classes. Here's Python 2.1:

```python
>>> class OldStyle:
...     pass
>>> class OldStyle2:
...     pass
>>> type(OldStyle())
<type 'instance'>
>>> type(OldStyle2())
<type 'instance'>
>>> type([])
<type 'list'>
>>> class Foo(list):
...     pass
Traceback (most recent call last):
...
TypeError: base is not a class object
```

To further understand how CPython implements both old and new-style classes, you need to understand just a bit about how it represents types internally and how it invokes those special dunder methods.

CPython Types

In CPython, all types are defined by a C structure called PyTypeObject. It has a series of members (confusingly also called slots) that are C function pointers that implement the behaviour defined by the type. For example, tp_repr is the slot for the __repr__ function, and tp_getattro is the slot for the __getattribute__ function. When the interpreter needs to get the repr of an object, or get an attribute from it, it always goes through those function pointers. An operation like repr(obj) becomes the C equivalent of type(obj).__repr__(obj).
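In Python 3, where every class is new style, you can observe this from Python code: repr(obj) consults the type, so an instance attribute named __repr__ is ignored by repr() even though ordinary attribute access still finds it. A small sketch; the Widget class is purely illustrative.

```python
class Widget:
    def __repr__(self):
        return "Widget (from the class)"

w = Widget()
# An instance attribute with a dunder name does not affect repr()
w.__repr__ = lambda: "Widget (from the instance)"

via_builtin = repr(w)             # implicit lookup goes through type(w)
via_type = type(w).__repr__(w)    # the explicit equivalent
via_attribute = w.__repr__()      # plain attribute access finds the instance attribute

print(via_builtin)    # Widget (from the class)
print(via_attribute)  # Widget (from the instance)
```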

When we create a new style class, the standard type metaclass makes a C function pointer out of each special dunder method defined in the class's __dict__ and uses that to fill in the slots in the C structure. Special methods that aren't present are inherited. Similarly, when we later set such an attribute, the __setattr__ function of the type metaclass checks to see if it needs to update the C slots.
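The effect of that slot updating can be seen from Python 3: a dunder assigned to a class after creation takes effect immediately, even for instances of an existing subclass that only inherits it. A sketch with illustrative names (Base, Child); the comment about slot refreshing describes CPython specifically.

```python
class Base:
    pass

class Child(Base):
    pass

left, right = Child(), Child()

# Nothing defines __add__ yet, so + raises TypeError
had_no_add = False
try:
    left + right
except TypeError:
    had_no_add = True

# Assigning on the base class goes through type.__setattr__; in CPython this
# also refreshes the C-level slots of Base and of subclasses such as Child
Base.__add__ = lambda self, other: "added"

sum_after_patch = left + right
print(had_no_add, sum_after_patch)  # True added
```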

The C structure that defines the object type uses PyObject_GenericGetAttr for its tp_getattro (__getattribute__) slot. That function implements the "standard" way of accessing attributes of objects. It's the same thing the __getattribute__ methods above used when they called object.__getattribute__(self, name). The object type also leaves most other slots (like for __add__) empty, meaning that subclasses must define them in the class __dict__ (or assign to them) for them to be found.

Importantly, though, other types implemented in C don't have to do any of that. They could set their tp_getattro and tp_repr slots, along with any of the other slots, to do whatever they want.

You might now understand how PEP 253, which gave us new style classes, also gave us the ability to subclass C types.
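On Python 3 that capability is taken for granted. As a small sketch (CountingList is a hypothetical name, not from the post), a subclass of the C-implemented list type can override an operator and delegate back to the built-in implementation:

```python
class CountingList(list):
    """A list subclass that counts how often + is used (illustrative)."""

    additions = 0

    def __add__(self, other):
        CountingList.additions += 1
        # Delegate the real work to list.__add__, then keep the subclass type
        return CountingList(super().__add__(other))

combined = CountingList([1, 2]) + [3]
print(combined, type(combined).__name__, CountingList.additions)
# [1, 2, 3] CountingList 1
```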

instance types

That's exactly what <type 'instance'> does. That type is defined by PyInstance_Type. The metaclass classobj (recall that's the type of old style classes) sets its tp_call slot (aka __call__) to PyInstance_New, a function that returns a new object of <type 'instance'>, aka PyInstance_Type (explaining why all old instances have the same type). (Incidentally, the metaclass also returns instances of itself, not new sub-types as the type metaclass does, which explains why they all have the same type.)

PyInstance_Type, in turn, overrides almost all of the possible slot definitions to implement the old behaviour of first looking in the instance's __dict__ and only if it's not present then looking up the class hierarchy or invoking __getattr__. For example, tp_repr is instance_repr, which uses instance_getattr to dynamically find an appropriate __repr__ function from the instance or class. This, by the way, is the source of the performance difference between old and new style classes.

Implementing Old Style Classes in Python

Can we re-implement old style classes in pure-Python (for example, on Python 3)? I haven't tried, but we can probably get pretty close. It would be similar to implementing transparent object proxies in pure-Python, which is verbose and tedious.
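As a very small taste of what that might involve (this has not been tried in the post either), here is a hypothetical ClassicRepr mixin for Python 3 that reproduces just one old style behaviour from earlier: an instance-level __repr__ overriding the default, by checking the instance __dict__ first much as instance_repr does. It is a sketch under loud assumptions: it only covers __repr__, and a subclass defining its own __repr__ would bypass it. A full emulation would need similar forwarding for every dunder, which is where the verbosity comes from.

```python
class ClassicRepr:
    """Hypothetical mixin: look for __repr__ on the instance first."""

    def __repr__(self):
        # Instance __dict__ first, like old style instances did
        override = self.__dict__.get("__repr__")
        if override is not None:
            return override()
        # Otherwise fall back to the normal new style behaviour
        return object.__repr__(self)

class OldStyleish(ClassicRepr):
    pass

obj = OldStyleish()
default = repr(obj)  # e.g. <__main__.OldStyleish object at 0x...>
obj.__repr__ = lambda: "Hi, I'm the old instance"
patched = repr(obj)
print(patched)  # Hi, I'm the old instance
```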

Footnotes

| [1] | Thus demonstrating the perils of naming something "new." Here we are 17 years later and still calling them that. |

| [2] | Or by explicitly specifying a __metaclass__ of type. |

| [3] | Good programmers are lazy. |

Wingware News: Wing Python IDE 6.1: July 30, 2018

Real Python: Python Code Quality: Tools & Best Practices

In this article, we’ll identify high-quality Python code and show you how to improve the quality of your own code.

We’ll analyze and compare tools you can use to take your code to the next level. Whether you’ve been using Python for a while, or just beginning, you can benefit from the practices and tools talked about here.

What is Code Quality?

Of course you want quality code, who wouldn’t? But to improve code quality, we have to define what it is.

A quick Google search yields many results defining code quality. As it turns out, the term can mean many different things to people.

One way of trying to define code quality is to look at one end of the spectrum: high-quality code. Hopefully, you can agree on the following high-quality code identifiers:

- It does what it is supposed to do.

- It does not contain defects or problems.

- It is easy to read, maintain, and extend.

These three identifiers, while simplistic, seem to be generally agreed upon. In an effort to expand these ideas further, let’s delve into why each one matters in the realm of software.

Why Does Code Quality Matter?

To determine why high-quality code is important, let’s revisit those identifiers. We’ll see what happens when code doesn’t meet them.

It does not do what it is supposed to do

Meeting requirements is the basis of any product, software or otherwise. We make software to do something. If in the end, it doesn’t do it… well it’s definitely not high quality. If it doesn’t meet basic requirements, it’s hard to even call it low quality.

It does contain defects and problems

If something you’re using has issues or causes you problems, you probably wouldn’t call it high-quality. In fact, if it’s bad enough, you may stop using it altogether.

For the sake of not using software as an example, let’s say your vacuum works great on regular carpet. It cleans up all the dust and cat hair. One fateful night the cat knocks over a plant, spilling dirt everywhere. When you try to use the vacuum to clean the pile of dirt, it breaks, spewing the dirt everywhere.

While the vacuum worked under some circumstances, it didn’t efficiently handle the occasional extra load. Thus, you wouldn’t call it a high-quality vacuum cleaner.

That is a problem we want to avoid in our code. If things break on edge cases and defects cause unwanted behavior, we don’t have a high-quality product.

It is difficult to read, maintain, or extend

Imagine this: a customer requests a new feature. The person who wrote the original code is gone. The person who has replaced them now has to make sense of the code that’s already there. That person is you.

If the code is easy to comprehend, you’ll be able to analyze the problem and come up with a solution much quicker. If the code is complex and convoluted, you’ll probably take longer and possibly make some wrong assumptions.

It’s also nice if it’s easy to add the new feature without disrupting previous features. If the code is not easy to extend, your new feature could break other things.

No one wants to be in the position where they have to read, maintain, or extend low-quality code. It means more headaches and more work for everyone.

It’s bad enough that you have to deal with low-quality code, but don’t put someone else in the same situation. You can improve the quality of code that you write.

If you work with a team of developers, you can start putting into place methods to ensure better overall code quality. Assuming that you have their support, of course. You may have to win some people over (feel free to send them this article 😃).

How to Improve Python Code Quality

There are a few things to consider on our journey for high-quality code. First, this journey is not one of pure objectivity. There are some strong feelings of what high-quality code looks like.

While everyone can hopefully agree on the identifiers mentioned above, the way they get achieved is a subjective road. The most opinionated topics usually come up when you talk about achieving readability, maintenance, and extensibility.

So keep in mind that while this article will try to stay objective throughout, there is a very opinionated world out there when it comes to code.

So, let’s start with the most opinionated topic: code style.

Style Guides

Ah, yes. The age-old question: spaces or tabs?

Regardless of your personal view on how to represent whitespace, it’s safe to assume that you at least want consistency in code.

A style guide serves the purpose of defining a consistent way to write your code. Typically this is all cosmetic, meaning it doesn’t change the logical outcome of the code. That said, some stylistic choices do help avoid common logical mistakes.

Style guides serve to help facilitate the goal of making code easy to read, maintain, and extend.

As far as Python goes, there is a well-accepted standard. It was written, in part, by the author of the Python programming language itself.

PEP 8 provides coding conventions for Python code. It is fairly common for Python code to follow this style guide. It’s a great place to start since it’s already well-defined.

PEP 257, a sister Python Enhancement Proposal, describes conventions for Python’s docstrings, which are strings intended to document modules, classes, functions, and methods. As an added bonus, if docstrings are consistent, there are tools capable of generating documentation directly from the code.
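For example, a docstring laid out along PEP 257 lines (a one-line summary ending in a period, a blank line, then details) can be read back at runtime, which is what documentation tools build on. The `area` function below is a hypothetical example; `inspect.getdoc` comes from the standard library.

```python
import inspect


def area(width, height):
    """Return the area of a rectangle.

    Follows the PEP 257 layout: a one-line summary ending in a period,
    a blank line, then a longer description.
    """
    return width * height


# The docstring is stored on the function as __doc__; inspect.getdoc()
# also removes the common leading indentation.
summary = inspect.getdoc(area).splitlines()[0]
print(summary)  # Return the area of a rectangle.
```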

All these guides do is define a way to style code. But how do you enforce it? And what about defects and problems in the code, how can you detect those? That’s where linters come in.

Linters

What is a Linter?

First, let’s talk about lint. Those tiny, annoying little defects that somehow get all over your clothes. Clothes look and feel much better without all that lint. Your code is no different. Little mistakes, stylistic inconsistencies, and dangerous logic don’t make your code feel great.

But we all make mistakes. You can’t expect yourself to always catch them in time. Mistyped variable names, a forgotten closing bracket, incorrect indentation, a function called with the wrong number of arguments: the list goes on and on. Linters help to identify those problem areas.

Additionally, most editors and IDEs can run linters in the background as you type. This results in an environment capable of highlighting, underlining, or otherwise identifying problem areas in the code before you run it. It is like an advanced spell-check for code: it underlines issues with squiggly red lines, much like your favorite word processor does.

Linters analyze code to detect various categories of lint. Those categories can be broadly defined as the following:

- Logical Lint
    - Code errors
    - Code with potentially unintended results
    - Dangerous code patterns
- Stylistic Lint
    - Code not conforming to defined conventions
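To make “code with potentially unintended results” concrete, here is one classic case (our own example): comparing to `None` with `==` instead of `is`. The sample file later in this article contains `x != None`, which pycodestyle reports as E711. `AlwaysEqual` is a deliberately contrived, hypothetical class.

```python
class AlwaysEqual:
    """A hypothetical class whose __eq__ claims equality with everything."""

    def __eq__(self, other):
        return True


value = AlwaysEqual()

# Suspicious: == calls __eq__, which any class can override.
print(value == None)  # True, even though value is clearly not None
# What was meant: an identity check, which cannot be overridden.
print(value is None)  # False
```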

There are also code analysis tools that provide other insights into your code. While maybe not linters by definition, these tools are usually used side-by-side with linters. They too hope to improve the quality of the code.

Finally, there are tools that automatically format code to some specification. These automated tools ensure that our inferior human minds don’t mess up conventions.

What Are My Linter Options For Python?

Before delving into your options, it’s important to recognize that some “linters” are just multiple linters packaged nicely together. Some popular examples of those combo-linters are the following:

Flake8: Capable of detecting both logical and stylistic lint. It adds the style checks of pycodestyle and the complexity checks of McCabe to the logical lint detection of PyFlakes. It combines the following linters:

- PyFlakes

- pycodestyle (formerly pep8)

- Mccabe

Pylama: A code audit tool composed of a large number of linters and other tools for analyzing code. It combines the following:

- pycodestyle (formerly pep8)

- pydocstyle (formerly pep257)

- PyFlakes

- Mccabe

- Pylint

- Radon

- gjslint

Here are some stand-alone linters categorized with brief descriptions:

| Linter | Category | Description |
|---|---|---|
| Pylint | Logical & Stylistic | Checks for errors, tries to enforce a coding standard, looks for code smells |
| PyFlakes | Logical | Analyzes programs and detects various errors |
| pycodestyle | Stylistic | Checks against some of the style conventions in PEP 8 |
| pydocstyle | Stylistic | Checks compliance with Python docstring conventions |
| Bandit | Logical | Analyzes code to find common security issues |
| MyPy | Logical | Checks for optionally-enforced static types |

And here are some code analysis and formatting tools:

| Tool | Category | Description |
|---|---|---|
| Mccabe | Analytical | Checks McCabe complexity |
| Radon | Analytical | Analyzes code for various metrics (lines of code, complexity, and so on) |
| Black | Formatter | Formats Python code without compromise |
| Isort | Formatter | Formats imports by sorting alphabetically and separating into sections |

Comparing Python Linters

Let’s get a better idea of what different linters are capable of catching and what the output looks like. To do this, I ran the same code through a handful of different linters with the default settings.

The code I ran through the linters is below. It contains various logical and stylistic issues:

"""
code_with_lint.py
Example Code with lots of lint!
"""
import io
from math import *


from time import time

some_global_var = 'GLOBAL VAR NAMES SHOULD BE IN ALL_CAPS_WITH_UNDERSCOES'

def multiply(x, y):
    """
    This returns the result of a multiplation of the inputs
    """
    some_global_var = 'this is actually a local variable...'
    result = x* y
    return result
    if result == 777:
        print("jackpot!")

def is_sum_lucky(x, y):
    """This returns a string describing whether or not the sum of input is lucky
    This function first makes sure the inputs are valid and then calculates the
    sum. Then, it will determine a message to return based on whether or not
    that sum should be considered "lucky"
    """
    if x != None:
        if y is not None:
            result = x+y;
            if result == 7:
                return 'a lucky number!'
            else:
                return( 'an unlucky number!')

            return ('just a normal number')

class SomeClass:

    def __init__(self, some_arg,  some_other_arg, verbose = False):
        self.some_other_arg  =  some_other_arg
        self.some_arg        =  some_arg
        list_comprehension = [((100/value)*pi) for value in some_arg if value != 0]
        time = time()
        from datetime import datetime
        date_and_time = datetime.now()
        return

The comparison below shows the linters I used and their runtime for analyzing the above file. I should point out that these aren’t all entirely comparable as they serve different purposes. PyFlakes, for example, does not identify stylistic errors like Pylint does.

| Linter | Command | Time |
|---|---|---|
| Pylint | pylint code_with_lint.py | 1.16s |
| PyFlakes | pyflakes code_with_lint.py | 0.15s |
| pycodestyle | pycodestyle code_with_lint.py | 0.14s |
| pydocstyle | pydocstyle code_with_lint.py | 0.21s |

For the outputs of each, see the sections below.

Pylint

Pylint is one of the oldest linters (circa 2006) and is still well-maintained. Some might call this software battle-hardened. It’s been around long enough that contributors have fixed most major bugs and the core features are well-developed.

The common complaints against Pylint are that it is slow, too verbose by default, and takes a lot of configuration to get it working the way you want. Slowness aside, the other complaints are somewhat of a double-edged sword. Verbosity can be because of thoroughness. Lots of configuration can mean lots of adaptability to your preferences.

Without further ado, the output after running Pylint against the lint-filled code from above:

No config file found, using default configuration
************* Module code_with_lint
W: 23, 0: Unnecessary semicolon (unnecessary-semicolon)
C: 27, 0: Unnecessary parens after 'return' keyword (superfluous-parens)
C: 27, 0: No space allowed after bracket
return( 'an unlucky number!')
^ (bad-whitespace)
C: 29, 0: Unnecessary parens after 'return' keyword (superfluous-parens)
C: 33, 0: Exactly one space required after comma
def __init__(self, some_arg,  some_other_arg, verbose = False):
^ (bad-whitespace)
C: 33, 0: No space allowed around keyword argument assignment
def __init__(self, some_arg,  some_other_arg, verbose = False):
^ (bad-whitespace)
C: 34, 0: Exactly one space required around assignment
self.some_other_arg  =  some_other_arg
^ (bad-whitespace)
C: 35, 0: Exactly one space required around assignment
self.some_arg        =  some_arg
^ (bad-whitespace)
C: 40, 0: Final newline missing (missing-final-newline)
W: 6, 0: Redefining built-in 'pow' (redefined-builtin)
W: 6, 0: Wildcard import math (wildcard-import)
C: 11, 0: Constant name "some_global_var" doesn't conform to UPPER_CASE naming style (invalid-name)
C: 13, 0: Argument name "x" doesn't conform to snake_case naming style (invalid-name)
C: 13, 0: Argument name "y" doesn't conform to snake_case naming style (invalid-name)
C: 13, 0: Missing function docstring (missing-docstring)
W: 14, 4: Redefining name 'some_global_var' from outer scope (line 11) (redefined-outer-name)
W: 17, 4: Unreachable code (unreachable)
W: 14, 4: Unused variable 'some_global_var' (unused-variable)
...
R: 24,12: Unnecessary "else" after "return" (no-else-return)
R: 20, 0: Either all return statements in a function should return an expression, or none of them should. (inconsistent-return-statements)
C: 31, 0: Missing class docstring (missing-docstring)
W: 37, 8: Redefining name 'time' from outer scope (line 9) (redefined-outer-name)
E: 37,15: Using variable 'time' before assignment (used-before-assignment)
W: 33,50: Unused argument 'verbose' (unused-argument)
W: 36, 8: Unused variable 'list_comprehension' (unused-variable)
W: 39, 8: Unused variable 'date_and_time' (unused-variable)
R: 31, 0: Too few public methods (0/2) (too-few-public-methods)
W: 5, 0: Unused import io (unused-import)
W: 6, 0: Unused import acos from wildcard import (unused-wildcard-import)
...
W: 9, 0: Unused time imported from time (unused-import)

Note that I’ve condensed this with ellipses for similar lines. It’s quite a bit to take in, but there is a lot of lint in this code.

Pylint prefixes each problem area with an R, C, W, E, or F, meaning:

- [R]efactor for a “good practice” metric violation

- [C]onvention for coding standard violation

- [W]arning for stylistic problems, or minor programming issues

- [E]rror for important programming issues (i.e. most probably bug)

- [F]atal for errors which prevented further processing

The above list is directly from Pylint’s user guide.
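If you want a quick tally of a report like the one above by category, a small regular expression over the message prefixes is enough. This sketch assumes the older `C: 27, 0: message (symbol)` layout shown in this article; newer Pylint releases print messages in a different default format, so the pattern would need adjusting. `summarize`, `MESSAGE_RE`, and `CATEGORY_NAMES` are hypothetical names.

```python
import re
from collections import Counter

# Matches lines such as
# "W: 23, 0: Unnecessary semicolon (unnecessary-semicolon)"
MESSAGE_RE = re.compile(
    r"^(?P<category>[RCWEF]):\s*(?P<line>\d+),\s*(?P<col>\d+): "
    r"(?P<text>.*?)(?: \((?P<symbol>[a-z0-9-]+)\))?$"
)

# The five prefixes from Pylint's user guide, listed above.
CATEGORY_NAMES = {
    "R": "Refactor", "C": "Convention", "W": "Warning",
    "E": "Error", "F": "Fatal",
}


def summarize(report):
    """Count Pylint messages per category, skipping non-message lines."""
    counts = Counter()
    for raw in report.splitlines():
        match = MESSAGE_RE.match(raw.strip())
        if match:
            counts[CATEGORY_NAMES[match["category"]]] += 1
    return counts
```

Echoed source lines and `^ (bad-whitespace)` markers don't start with an uppercase category letter and a colon, so they are skipped.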

PyFlakes

Pyflakes “makes a simple promise: it will never complain about style, and it will try very, very hard to never emit false positives”. This means that Pyflakes won’t tell you about missing docstrings or argument names not conforming to a naming style. It focuses on logical code issues and potential errors.

The benefit here is speed. PyFlakes runs in a fraction of the time Pylint takes.

Output after running against lint-filled code from above:

code_with_lint.py:5: 'io' imported but unused
code_with_lint.py:6: 'from math import *' used; unable to detect undefined names
code_with_lint.py:14: local variable 'some_global_var' is assigned to but never used
code_with_lint.py:36: 'pi' may be undefined, or defined from star imports: math
code_with_lint.py:36: local variable 'list_comprehension' is assigned to but never used
code_with_lint.py:37: local variable 'time' (defined in enclosing scope on line 9) referenced before assignment
code_with_lint.py:37: local variable 'time' is assigned to but never used
code_with_lint.py:39: local variable 'date_and_time' is assigned to but never used

The downside here is that parsing this output may be a bit more difficult. The various issues and errors are not labeled or organized by type. Depending on how you use this, that may not be a problem at all.
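The `time` warning on line 37 (reported by both Pylint and PyFlakes) is a genuine bug rather than a nitpick: because `time` is assigned inside `__init__`, Python treats it as a local name for the whole method, so `time()` on the right-hand side fails before any assignment happens. A minimal reproduction, using a hypothetical `make_timestamp` function:

```python
from time import time


def make_timestamp():
    # Assigning to `time` anywhere in this function makes `time` a local
    # name for the whole body, so the call on the right-hand side runs
    # before the local exists instead of using the imported function.
    time = time()
    return time


try:
    make_timestamp()
except UnboundLocalError as exc:
    print("UnboundLocalError:", exc)


def make_timestamp_fixed():
    # A different local name keeps the imported function visible.
    current_time = time()
    return current_time


print(make_timestamp_fixed() > 0)  # True
```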

pycodestyle (formerly pep8)

Used to check some style conventions from PEP 8. Naming conventions are not checked and neither are docstrings. The errors and warnings it does catch are categorized in the error-code table of the pycodestyle documentation.

Output after running against lint-filled code from above:

code_with_lint.py:13:1: E302 expected 2 blank lines, found 1
code_with_lint.py:15:15: E225 missing whitespace around operator
code_with_lint.py:20:1: E302 expected 2 blank lines, found 1
code_with_lint.py:21:10: E711 comparison to None should be 'if cond is not None:'
code_with_lint.py:23:25: E703 statement ends with a semicolon
code_with_lint.py:27:24: E201 whitespace after '('
code_with_lint.py:31:1: E302 expected 2 blank lines, found 1
code_with_lint.py:33:58: E251 unexpected spaces around keyword / parameter equals
code_with_lint.py:33:60: E251 unexpected spaces around keyword / parameter equals
code_with_lint.py:34:28: E221 multiple spaces before operator
code_with_lint.py:34:31: E222 multiple spaces after operator
code_with_lint.py:35:22: E221 multiple spaces before operator
code_with_lint.py:35:31: E222 multiple spaces after operator
code_with_lint.py:36:80: E501 line too long (83 > 79 characters)
code_with_lint.py:40:15: W292 no newline at end of file

The nice thing about this output is that the lint is labeled by category. You can choose to ignore certain errors if you don’t care to adhere to a specific convention as well.
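The E501 check above is simple at heart: measure every physical line against a maximum (79 characters by default). The function below is a simplified sketch of that idea, not pycodestyle's code; the real check handles a few special cases that are skipped here. `long_lines` is a hypothetical name.

```python
def long_lines(source, max_length=79):
    """Return (line_number, length) for every line longer than max_length."""
    return [
        (number, len(line))
        for number, line in enumerate(source.splitlines(), start=1)
        if len(line) > max_length
    ]


# Line 36 of the sample file, with its eight spaces of indentation:
long_line = (" " * 8 + "list_comprehension = [((100/value)*pi) "
             "for value in some_arg if value != 0]")
print(long_lines("class SomeClass:\n" + long_line))  # [(2, 83)]
```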

pydocstyle (formerly pep257)

Very similar to pycodestyle, except instead of checking against PEP8 code style conventions, it checks docstrings against conventions from PEP257.

Output after running against lint-filled code from above:

code_with_lint.py:1 at module level:
        D200: One-line docstring should fit on one line with quotes (found 3)
code_with_lint.py:1 at module level:
        D400: First line should end with a period (not '!')
code_with_lint.py:13 in public function `multiply`:
        D103: Missing docstring in public function
code_with_lint.py:20 in public function `is_sum_lucky`:
        D103: Missing docstring in public function
code_with_lint.py:31 in public class `SomeClass`:
        D101: Missing docstring in public class
code_with_lint.py:33 in public method `__init__`:
        D107: Missing docstring in __init__

Again, like pycodestyle, pydocstyle labels and categorizes the various errors it finds. And the list doesn’t conflict with anything from pycodestyle, since all the errors are prefixed with a D for docstring. A list of those errors can be found in the pydocstyle documentation.

Code Without Lint

You can adjust the previously lint-filled code based on the linters’ output, and you’ll end up with something like the following:

"""Example Code with less lint."""

from math import pi
from time import time
from datetime import datetime

SOME_GLOBAL_VAR = 'GLOBAL VAR NAMES SHOULD BE IN ALL_CAPS_WITH_UNDERSCOES'


def multiply(first_value, second_value):
    """Return the result of a multiplation of the inputs."""
    result = first_value * second_value

    if result == 777:
        print("jackpot!")

    return result


def is_sum_lucky(first_value, second_value):
    """
    Return a string describing whether or not the sum of input is lucky.

    This function first makes sure the inputs are valid and then calculates the
    sum. Then, it will determine a message to return based on whether or not
    that sum should be considered "lucky".
    """
    if first_value is not None and second_value is not None:
        result = first_value + second_value
        if result == 7:
            message = 'a lucky number!'
        else:
            message = 'an unlucky number!'
    else:
        message = 'an unknown number! Could not calculate sum...'

    return message


class SomeClass:
    """Is a class docstring."""

    def __init__(self, some_arg, some_other_arg):
        """Initialize an instance of SomeClass."""
        self.some_other_arg = some_other_arg
        self.some_arg = some_arg
        list_comprehension = [
            ((100/value)*pi)
            for value in some_arg
            if value != 0
        ]
        current_time = time()
        date_and_time = datetime.now()
        print(f'created SomeClass instance at unix time: {current_time}')
        print(f'datetime: {date_and_time}')
        print(f'some calculated values: {list_comprehension}')

    def some_public_method(self):
        """Is a method docstring."""
        pass

    def some_other_public_method(self):
        """Is a method docstring."""
        pass

That code is lint-free according to the linters above. While the logic itself is mostly nonsensical, you can see that at a minimum, consistency is enforced.

In the above case, we ran linters after writing all the code. However, that’s not the only way to go about checking code quality.

When Can I Check My Code Quality?

You can check your code’s quality:

- As you write it

- When it’s checked in

- When you’re running your tests

It’s useful to have linters run against your code frequently. If automation and consistency aren’t there, it’s easy for a large team or project to lose sight of the goal and start creating lower-quality code. It happens slowly, of course: some poorly written logic here, some code with formatting that doesn’t match the neighboring code there. Over time, all that lint piles up. Eventually, you can get stuck with something that’s buggy, hard to read, hard to fix, and a pain to maintain.

To avoid that, check code quality often!

As You Write

You can use linters as you write code, but configuring your environment to do so may take some extra work. It’s generally a matter of finding the plugin for your IDE or editor of choice. In fact, most IDEs will already have linters built in.

Here’s some general info on Python linting for various editors:

Before You Check In Code

If you’re using Git, Git hooks can be set up to run your linters before committing. Other version control systems have similar methods to run scripts before or after some action in the system. You can use these methods to block any new code that doesn’t meet quality standards.
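Here is a minimal sketch of such a hook for Git, written in Python. It assumes flake8 is installed and on the PATH; `staged_python_files`, `main`, and `run_hook` are hypothetical names. Git runs `.git/hooks/pre-commit` before each commit and aborts the commit if the script exits with a non-zero status.

```python
#!/usr/bin/env python3
"""Sketch of a Git pre-commit hook that runs flake8 on staged Python files."""
import subprocess
import sys


def staged_python_files(names):
    """Keep only the .py paths from `git diff --cached --name-only` output."""
    return [name for name in names if name.endswith(".py")]


def main():
    """Return flake8's exit status for the staged .py files (0 if none)."""
    # --diff-filter=ACM: only Added, Copied, or Modified files (no deletions)
    diff = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    )
    files = staged_python_files(diff.stdout.splitlines())
    if not files:
        return 0
    # flake8 exits with a non-zero status when it reports any problem
    return subprocess.run(["flake8", *files]).returncode


def run_hook():
    """Exit with main()'s status; a non-zero exit makes Git abort the commit."""
    sys.exit(main())


# Saved as .git/hooks/pre-commit (and made executable), the file would end
# with a module-level call:
#     run_hook()
```

One limitation of this sketch: flake8 reads the files from the working tree, so edits you have not staged are linted too. Dedicated tools such as the pre-commit framework deal with that by setting unstaged changes aside first.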

While this may seem drastic, forcing every bit of code through a screening for lint is an important step towards ensuring continued quality. Automating that screening at the front gate to your code may be the best way to avoid lint-filled code.

When Running Tests

You can also place linters directly into whatever system you may use for continuous integration. The linters can be set up to fail the build if the code doesn’t meet quality standards.

Again, this may seem like a drastic step, especially if there are already lots of linter errors in the existing code. To combat this, some continuous integration systems will allow you the option of only failing the build if the new code increases the number of linter errors that were already present. That way you can start improving quality without doing a whole rewrite of your existing code base.
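The “don’t make it worse” rule can be sketched as comparing the current number of reported problems with a stored baseline. This assumes a linter that prints one problem per line, such as pyflakes or pycodestyle, and a baseline count kept somewhere in the repository or CI settings; `lint_gate` is a hypothetical name, not a feature of any particular CI system.

```python
def lint_gate(baseline_count, current_report):
    """Return (passed, current_count) for a "don't make it worse" check.

    current_report is linter output with one problem per line; blank lines
    are ignored. The check passes if the count did not grow.
    """
    problems = [line for line in current_report.splitlines() if line.strip()]
    current_count = len(problems)
    return current_count <= baseline_count, current_count


report = (
    "a.py:1:1: E302 expected 2 blank lines, found 1\n"
    "a.py:5:80: E501 line too long (83 > 79 characters)\n"
)
print(lint_gate(2, report))  # (True, 2): no new problems
print(lint_gate(1, report))  # (False, 2): one more than the baseline
```

Comparing totals is the simplest choice, but it lets a newly fixed warning “pay for” a newly introduced one; comparing the actual messages per file is stricter at the cost of more bookkeeping.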

Conclusion

High-quality code does what it’s supposed to do without breaking. It is easy to read, maintain, and extend. It functions without problems or defects and is written so that it’s easy for the next person to work with.

Hopefully it goes without saying that you should strive to have such high-quality code. Luckily, there are methods and tools to help improve code quality.

Style guides will bring consistency to your code. PEP8 is a great starting point for Python. Linters will help you identify problem areas and inconsistencies. You can use linters throughout the development process, even automating them to flag lint-filled code before it gets too far.

Having linters complain about style also avoids the need for style discussions during code reviews. Some people may find it easier to receive candid feedback from these tools instead of a team member. Additionally, some team members may not want to “nitpick” style during code reviews. Linters avoid the politics, save time, and complain about any inconsistency.

In addition, all the linters mentioned in this article have various command line options and configurations that let you tailor the tool to your liking. You can be as strict or as loose as you want, which is an important thing to realize.

Improving code quality is a process. You can take steps towards improving it without completely disallowing all nonconformant code. Awareness is a great first step. It just takes a person, like you, to first realize how important high-quality code is.


Made With Mu: Keynoting with Mu

EuroPython (one of the world’s largest, most friendly and culturally diverse Python programming conferences) happened last week in Scotland. Sadly, for personal reasons, I was unable to attend. However, it was great to hear via social media that Mu was used in the opening conference keynote by Pythonista-extraordinaire David Beazley. Not only is it wonderful to see Mu used by someone so well respected in the Python community at such an auspicious occasion, but David’s use of Mu is a wonderful example of Mu’s use as a pedagogical tool. Remember, Mu is not only for learners, but is also for those who support them (i.e. teachers).

What do I mean by pedagogical tool?

The stereotypical example is a blackboard upon which the teacher chalks explanations, examples and exercises for their students. Teaching involves a lot of performative explanation – showing, telling and describing things as you go along; traditionally with the aid of a blackboard. This is a very effective pedagogical technique since students can interrupt and ask questions as the lesson unfolds.

David used Mu as a sort of interactive coding blackboard in his keynote introducing the Thredo project. He even encouraged listeners in the auditorium to interrupt as he live-coded his Python examples.

David and I have never met in real life, although our paths have crossed online via Python (and, incidentally, via our shared love of buzzing down long brass tubes). So I emailed him to find out how he found using Mu. Here are some extracts from his reply (used with permission):

There are some who seem to think that one should start off using a fancy IDE such as PyCharm, VSCode, Jupyter Lab or something along those lines and start piling on a whole bunch of complex tooling. I frankly disagree with a lot of that. A simple editor is exactly what’s useful in teaching because it cuts out a lot of unnecessary distractions.

From a more practical point of view, I choose to use Mu for my talk precisely because it was extremely simple. I do a lot of live-coding in talks and for that, I usually try to keep the environment minimal. […] Part of the appeal of Mu is that I could code and use the REPL without cluttering the screen with any other unnecessary cruft. For example, I could use it without having to switch between terminal windows and I could show code and its output at the same time in a fairly easy way.

This sort of feedback makes me incredibly happy. David obviously “gets it” and I hope his example points the way for others to exploit the potential of Mu as a pedagogical tool. David also gave some helpful suggestions which I hope we’ll address in the next release.

David’s keynote is a fascinating exposition of what you may want from a Pythonic threading library, and it also has a wonderful twist at the end which made me smile. I’d like to encourage you to watch the whole presentation, either via EuroPython’s live video stream of the event or David’s own screencast of the keynote.

Finally, the exposure of Mu via EuroPython had a fun knock-on effect: someone submitted the Mu website to Hacker News (one of the world’s most popular news aggregation websites for programmers). By mid-afternoon Mu was voted the top story and I had lots of fun answering people’s questions and reading their comments relating to Mu.

My favourite comment, which I’ll paraphrase below, will become Mu’s tag-line:

“Python is a language that helps make code readable, Mu is an editor that helps make code writeable.”

Bhishan Bhandari: Python Lists

The intention of this article is to host a set of example operations that can be performed on lists, a crucial data structure in Python. Lists: In Python, a list is an object that contains a sequence of other arbitrary objects. Lists, unlike tuples, are mutable objects. Defining a list: Lists are defined by enclosing a […]
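A quick illustration of that difference between lists and tuples (our own example, not from the post):

```python
colors = ["red", "green"]
colors.append("blue")   # a list can grow in place...
colors[0] = "crimson"   # ...and its items can be replaced
print(colors)           # ['crimson', 'green', 'blue']

point = (3, 4)
try:
    point[0] = 10       # tuples do not support item assignment
except TypeError as exc:
    print("TypeError:", exc)
print(point)            # (3, 4)
```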

The post Python Lists appeared first on The Tara Nights.

Sandipan Dey: Some Image Processing Problems

Nikolaos Diamantis: Lucy's Secret Number Puzzle

Bill Ward / AdminTome: Aggregated Audit Logging with Google Cloud and Python

In this post, we will be aggregating all of our logs into Google BigQuery Audit Logs. Using big data techniques, we can simplify our audit log aggregation in the cloud.

Essentially, we are going to take some Apache2 server access logs from a production web server (this one in fact), convert the log file line-by-line to JSON data, publish that JSON data to a Google PubSub topic, transform the data using Google DataFlow, and store the resulting log file in Google BigQuery long term storage. It sounds a lot harder than it actually is.

Why go through this?

This gives us the ability to do some big things with our data using big data principles. For example, with the infrastructure as outlined, we have a log analyzer akin to Splunk, but we can use standard SQL to analyze the logs. We could pump the data into Graphite/Grafana to visually identify trends. And the best part is that it only takes a few lines of Python code!

Just so you know, looking at my actual production logs in this screenshot, I just realized I have my HTTP reverse proxy misconfigured. The IP address in the log should be the IP address of my web site visitor. There you go, the benefits of Big Data in action!

Assumptions

I am going to assume that you have a Google GCP account. If not, you can sign up for the Google Cloud Platform Free Tier.

Please note: The Google solutions I outline in this post can cost you some money. Check the Google Cloud Platform Pricing Calculator to see what you can expect. You will need to associate a billing account to your project in order to follow along with this article.

I also assume you know how to create a service account in IAM. Refer to the Creating and Managing Service Accounts document for help completing this.

Finally, I assume that you know how to enable the API for Google PubSub. If you don’t, don’t worry: there will be a popup when you enable Google PubSub that tells you what to do.

Configuring Google PubSub

Let’s get down to the nitty-gritty. The first thing we need to do is enable Google PubSub for our Project. This article assumes you already have a project created in GCP. If you don’t, you can follow the Creating and Managing Projects document on Google Cloud.

Next, go to Google PubSub from the main GCP navigation menu.

If this is your first time here, it will tell you to create a new topic.

Topic names must look like this: projects/{project}/topics/{topic}. My topic was projects/admintome-bigdata-test/topics/www_logs.

Click on Create and your topic is created. Pretty simple stuff so far.

Later, when we start developing our application in Python, we will push JSON messages to this topic. Next, we need to configure Google DataFlow to transform our JSON data into something we can insert into our Google BigQuery table.

Configuring Google DataFlow

Google was nice to us and includes a ton of templates that allow us to connect many of the GCP products to each other to provide robust cloud solutions.

Before we actually configure Google DataFlow, we need to have a Google Storage Bucket configured. If you don’t know how to do this, follow the Creating Storage Buckets document on GCP. Once you have a Google Storage Bucket ready, make sure you remember the Storage Bucket name because we will need it next.

First, go to DataFlow from the GCP menu.

Again, if this is your first time here, GCP will ask you to create a job from a template.

Here you can see that I gave it a Job name of www-logs. Under Cloud DataFlow template, select PubSub to BigQuery.

Next we need to enter the Cloud Pub/Sub input topic. Given my example topic from above, I set this to projects/admintome-bigdata-test/topics/www_logs.

Now we need to tell it the Google BigQuery output table to use. In this example, I use admintome-bigdata-test:www_logs.access_logs.

Now it is time to enter that Google Storage bucket information I talked about earlier. The bucket I created was named admintomebucket.

In the Temporary Location field, enter ‘gs://{bucketname}/tmp’.

After you click on create you will see a pretty diagram of the DataFlow:

What do we see here? The first task in the flow is a ReadPubsubMessages task that consumes messages from the topic we gave earlier. The next task is the ConvertMessageToTable task, which takes our JSON data and converts it to a table row. It then tries to append that row to the table we specified in the parameters of the DataFlow job. If it succeeds, you will have a new table in BigQuery. If there are any problems, it writes the record to a table called access_logs_error_records instead; if that table doesn’t exist, it creates it first. Actually, this is true for our original table too. We will verify this later after we write and deploy our Python application.
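Because ConvertMessageToTable turns each JSON message into a row, the keys published by the producer need to line up with the column names of the output table; messages that don’t fit end up in the error table. The post doesn’t show the schema of access_logs, so the column set below is an assumption that mirrors the keys the producer script builds, and `schema_mismatch` is a hypothetical local sanity check, not part of DataFlow.

```python
import json

# Assumed column names for www_logs.access_logs, mirroring the keys of the
# entry dict built by the producer script (the post doesn't show the schema).
ACCESS_LOG_COLUMNS = {
    "ipaddress", "time", "request", "status_code", "size", "client",
}


def schema_mismatch(payload, columns=ACCESS_LOG_COLUMNS):
    """Compare a Pub/Sub payload (UTF-8 JSON bytes) with expected columns.

    Returns (missing, unexpected) as sorted lists; two empty lists mean
    the keys line up with the table.
    """
    keys = set(json.loads(payload.decode("utf-8")))
    return sorted(columns - keys), sorted(keys - columns)
```

Running a check like this against a few real payloads before deploying can save a trip to the error table.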

Python BigQuery Audit Logs

Now for the fun part of creating our BigQuery Audit Logs solution. Let’s get to coding.

First, prepare a Python environment: use virtualenv or pyenv to create a Python virtual environment for our application.

Next we need to install some Python modules to work with Google Cloud.

$ pip install google-cloud-pubsub

Here is the code to our application:

producer.py

import datetime
import json
import time

from google.cloud import pubsub_v1


def parse_log_line(line):
    """Crudely parse one Apache2 access.log line into a dict."""
    try:
        print("raw: {}".format(line))
        strptime = datetime.datetime.strptime
        temp_log = line.split(' ')
        entry = {}
        entry['ipaddress'] = temp_log[0]
        # temp_log[3] looks like "[10/Oct/2018:13:55:36"; drop the "["
        timestamp = temp_log[3][1:]
        entry['time'] = strptime(
            timestamp, "%d/%b/%Y:%H:%M:%S").strftime("%Y-%m-%d %H:%M")
        # Method, path and protocol joined back together with spaces
        request = " ".join((temp_log[5], temp_log[6], temp_log[7]))
        entry['request'] = request
        entry['status_code'] = int(temp_log[8])
        entry['size'] = int(temp_log[9])
        entry['client'] = temp_log[11]
        return entry
    except (ValueError, IndexError):
        # Bad timestamp or number (e.g. a "-" size), or too few fields
        print("Got back a log line I don't understand: {}".format(line))
        return None


def show_entry(entry):
    print("ip: {} time: {} request: {} status_code: {} size: {} client: {}".format(
        entry['ipaddress'],
        entry['time'],
        entry['request'],
        entry['status_code'],
        entry['size'],
        entry['client']))


def follow(syslog_file):
    publisher = pubsub_v1.PublisherClient()
    topic_path = publisher.topic_path(
        'admintome-bigdata-test', 'www_logs')
    # Start at the end of the file so only new lines are processed
    syslog_file.seek(0, 2)
    while True:
        line = syslog_file.readline()
        if not line:
            time.sleep(0.1)
            continue
        entry = parse_log_line(line)
        if not entry:
            continue
        show_entry(entry)
        payload = json.dumps(entry).encode()
        # publish() returns a future; result() waits for the message ID
        result = publisher.publish(topic_path, payload)
        print("payload: {}".format(payload))
        print("Result: {}".format(result.result()))


if __name__ == "__main__":
    with open("/var/log/apache2/access.log", "rt") as f:
        follow(f)

The first function we use is follow, which comes from a Stack Overflow answer on tailing a log file. It reads each new line from the log file (/var/log/apache2/access.log) as it arrives and sends it to the parse_log_line function.
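The tailing technique behind follow is: jump to the end of the file once, then keep calling readline() and sleep briefly whenever it returns an empty string. Below is a sketch of the same technique as a reusable function that returns an iterator; `tail_lines` is a hypothetical name, `max_idle_polls` is a hypothetical parameter added so the loop can stop (for example, in tests), and this is not the Stack Overflow code itself.

```python
import time


def tail_lines(file, poll_interval=0.1, max_idle_polls=None):
    """Return an iterator over lines appended to `file` from now on.

    Works like `tail -f`. max_idle_polls stops the iterator after that many
    empty polls in a row; None means poll forever.
    """
    # Seek now, at call time, not lazily on the first next() call.
    file.seek(0, 2)  # whence=2 (os.SEEK_END): skip everything written so far

    def new_lines():
        idle_polls = 0
        while True:
            line = file.readline()
            if line:
                idle_polls = 0
                yield line
            elif max_idle_polls is not None and idle_polls >= max_idle_polls:
                return
            else:
                idle_polls += 1
                time.sleep(poll_interval)  # nothing new yet; wait and retry

    return new_lines()
```

Used as `for line in tail_lines(open(path)):`, it yields each new line as it arrives. Doing the seek outside the inner generator means lines written between the call and the first iteration are not skipped. Like the original loop, it can hand back a partial line if the writer is in the middle of writing one.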

The parse_log_line function is a crude function that parses the standard Apache2 access.log lines and creates a dict of the values called entry. This function could use a lot of work, I know. It then returns the entry dict back to our follow function.

The follow function then converts the entry dict into JSON and sends it to our Google PubSub Topic with these lines:

publisher = pubsub_v1.PublisherClient()
topic_path = publisher.topic_path('admintome-bigdata-test', 'www_logs')
result = publisher.publish(topic_path, json.dumps(entry).encode())

That’s all there is to it. Deploy the application to your production web server, run it, and you should see your log entries sent to your Google PubSub Topic.
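Because parse_log_line splits on single spaces, a user-agent such as “Mozilla/5.0 (X11; Linux x86_64)” is cut at its first space, and a “-” size makes the line unparseable. A regex-based alternative is sketched below, assuming the Apache “combined” log format; `COMBINED_RE` and `parse_combined_line` are hypothetical names, this is not the post’s code, and it does not handle escaped quotes inside fields.

```python
import re
from datetime import datetime

# Apache "combined" format:
# host ident user [time] "request" status size "referer" "user-agent"
COMBINED_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_combined_line(line):
    """Parse one line into the same keys parse_log_line produces.

    Returns None for lines that don't match. A "-" size (no body sent)
    becomes 0, and the full quoted user-agent is kept as 'client'.
    """
    match = COMBINED_RE.match(line)
    if match is None:
        return None
    timestamp = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
    size = match["size"]
    return {
        "ipaddress": match["ip"],
        "time": timestamp.strftime("%Y-%m-%d %H:%M"),
        "request": match["request"],
        "status_code": int(match["status"]),
        "size": 0 if size == "-" else int(size),
        "client": match["agent"],
    }
```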

Viewing BigQuery Audit Logs

Now that we have data being published to our Google PubSub topic, and the Google DataFlow job is pushing it into our BigQuery table, we can examine our results.

Go to BigQuery in the GCP menu.

You will see our BigQuery resource admintome-bigdata-test.

You can also see our tables under www_logs: access_logs. If DataFlow had any issues adding rows to your table (like mine did at first) then you will see the associated error_records table.

Click on access_logs and you will see the Query editor. Enter a simple SQL query to look at your data.

SELECT * FROM www_logs.access_logs

Click on the Run query button and you should see your logs!

Using simple SQL queries we can combine this table with other tables and get some meaningful insights into our website’s performance using BigQuery Audit Logs!

I hope you enjoyed this post. If you did then please share it on Facebook, Twitter and Google+. Also be sure to comment below, I would love to hear from you.


The post Aggregated Audit Logging with Google Cloud and Python appeared first on AdminTome Blog.

Mike Driscoll: Jupyter Notebook 101: Table of Contents

I am about halfway through the Kickstarter campaign for my new book, Jupyter Notebook 101 and thought it would be fun to share my current tentative table of contents:

- Intro

- Chapter 1: Creating Notebooks

- Chapter 2: Rich Text (Markdown, images, etc.)

- Chapter 3: Configuring Your Notebooks

- Chapter 4: Distributing Notebooks

- Chapter 5: Notebook Extensions

- Chapter 6: Notebook Widgets

- Chapter 7: Converting Notebooks into Other Formats

- Chapter 8: Creating Presentations with Notebooks

- Appendix A: Magic Commands

The table of contents is liable to change in content or order, but I will try to cover all of these topics in one form or another. I am also looking into a couple of other topics that I will try to include in the book if there is time, such as unit testing a Notebook. Some of my backers have also asked for sections on managing Jupyter across Python versions, using Conda, and whether you can use Notebooks as programs. I will look into these too, to determine whether they are within scope for the book and whether I have the time to add them.

EuroPython: EuroPython 2018: Please send in your feedback

EuroPython 2018 is over now and so it’s time to ask around for what we can improve next year. If you attended EuroPython 2018, please take a few moments and fill in our feedback form:

EuroPython 2018 Feedback Form

We will leave the feedback form online for a few weeks and then use the information as a basis for the work on EuroPython 2019. We also intend to post a summary of the multiple choice questions (not the comments, to protect your privacy) on our website.

Many thanks in advance.

Enjoy,

–

EuroPython 2018 Team

https://ep2018.europython.eu/

https://www.europython-society.org/

Sandipan Dey: Solving Some Image Processing Problems with Python libraries

Tryton News: Newsletter August 2018

@ced wrote:

This month, progress has been made on the user interface and on increasing the performance.

Changes for the user

Limit size of titles

The titles of tabs, wizards, and forms can be constructed from multiple sources, but the result may be too long to fit correctly on the screen. We now ellipsize titles longer than 80 characters and display the full title as a tooltip.
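The rule is simple enough to state in a few lines of Python. This is an illustrative sketch of the behavior described (ellipsize past 80 characters, keep the full text for the tooltip), not Tryton’s client code; the ellipsize function name is hypothetical.

```python
from typing import Optional, Tuple

MAX_TITLE_LENGTH = 80  # threshold taken from the newsletter


def ellipsize(title: str, limit: int = MAX_TITLE_LENGTH) -> Tuple[str, Optional[str]]:
    """Return (text to display, tooltip text or None)."""
    if len(title) <= limit:
        # Short titles are shown as-is and need no tooltip.
        return title, None
    # Keep limit - 1 characters plus one ellipsis character, so the displayed
    # text is exactly `limit` characters; the tooltip carries the full title.
    return title[:limit - 1] + "\u2026", title
```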

Show number and reference

The record names of invoices, sales, and purchases now show the document number as well as the reference number (between square brackets). This eases the work of accountants when reviewing accounting moves and their origins.

New module

product_price_list_parent

The product_price_list_parent module adds a parent to the price list and the keyword parent_unit_price for the formula, which contains the unit price computed by the parent price list.
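One way to picture how parent_unit_price enables cascading is the hypothetical sketch below, where each price list’s formula is a Python callable receiving the base unit price and the price computed by its parent. Tryton’s real price lists are configured differently, so the PriceList class here is only a model of the idea.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, Optional

# Hypothetical formula signature: (unit_price, parent_unit_price) -> price.
Formula = Callable[[Decimal, Optional[Decimal]], Decimal]


@dataclass
class PriceList:
    name: str
    formula: Formula
    parent: Optional["PriceList"] = None

    def compute(self, unit_price: Decimal) -> Decimal:
        # Ask the parent first (recursively), so each list can build on the
        # price its parent produced; root lists get None for parent_unit_price.
        parent_unit_price = (
            self.parent.compute(unit_price) if self.parent is not None else None
        )
        return self.formula(unit_price, parent_unit_price)
```

For example, a "VIP" list whose formula is parent_unit_price minus 5, with a "Wholesale" parent at 80% of the unit price, yields 75 for a product priced at 100.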

With this module, it is possible to create cascading price lists.

New header bar

The desktop client uses a header bar to provide global features like the configuration menu, the action entry and the favorites. This gives more vertical space to the application.

Grace period after confirming a purchase or sale

We added a configurable grace period to the confirmation of purchases and sales. During this period, a confirmed order can be reset to draft before it is processed automatically. This feature works only if Tryton is configured with a worker queue.
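As a rough illustration, the grace period amounts to a time comparison. The sketch assumes processing becomes due exactly when the grace period expires; the function names are hypothetical and this is not Tryton’s implementation.

```python
from datetime import datetime, timedelta


def processing_due_at(confirmed_at: datetime, grace_period: timedelta) -> datetime:
    """When automatic processing of the confirmed order may start."""
    return confirmed_at + grace_period


def can_reset_to_draft(confirmed_at: datetime, now: datetime,
                       grace_period: timedelta) -> bool:
    """An order may go back to draft only while the grace period is running."""
    return now < processing_due_at(confirmed_at, grace_period)
```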

Counting wizard for inventory

We added a wizard on the inventory to ease the counting of products. The wizard asks for the product and then for the quantity to add.

If the rounding of the unit of measure is 1, then the default value for the added quantity is also 1. This allows fast encoding when counting units.

New module for production outsourcing

The production outsourcing module allows outsourcing production orders per routing. When such an outsourced production is set to waiting, a purchase order is created and its cost is added to the production.

New module for unit on stock lot

The stock_lot_unit module allows defining a unit and a quantity on a stock lot.

Lots with unit have the following properties:

- no shipment may contain a summed quantity for a lot greater than the quantity of the lot.

- no move related to a lot with a unit may concern a quantity greater than the quantity of the lot.
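The two rules above can be sketched as plain validation functions. This is a hypothetical model, not the stock_lot_unit code: it assumes every move quantity is already expressed in the lot’s unit (real code would need unit conversion) and treats a lot with no unit as unconstrained.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class Lot:
    number: str
    unit: Optional[str] = None        # None: the lot carries no unit/quantity
    quantity: Optional[float] = None


@dataclass
class Move:
    lot: Lot
    quantity: float  # assumed to be in the lot's unit


def check_move(move: Move) -> None:
    """Rule 2: a single move may not exceed the quantity of its lot."""
    lot = move.lot
    if lot.unit is not None and move.quantity > lot.quantity:
        raise ValueError(f"move of {move.quantity} exceeds lot {lot.number}")


def check_shipment(moves: Iterable[Move]) -> None:
    """Rule 1: the summed quantity per lot in a shipment may not exceed the lot."""
    totals: Dict[str, float] = {}
    lots: Dict[str, Lot] = {}
    for move in moves:
        check_move(move)
        lot = move.lot
        if lot.unit is None:
            continue
        lots[lot.number] = lot
        totals[lot.number] = totals.get(lot.number, 0.0) + move.quantity
    for number, total in totals.items():
        if total > lots[number].quantity:
            raise ValueError(f"shipment uses {total} of lot {number}")
```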

Changes for the developer

Fill main language in configuration

At database initialization, the main language defined by the configuration files is stored in the ir.configuration singleton.

Validation of Dictionary field values

We added a domain field to the dictionary schema. It is validated against the dictionary value on the client side only.

PYSON is more flexible with Boolean

The PYSON syntax is strongly typed to detect type errors earlier. But for developers, it can be annoying to cast expressions into Boolean for the If, And and Or operators. We changed their internals to automatically convert the expression into a Boolean when needed.

Reduce new transactions started by Cache

For performance reasons, we improved the Cache so that it does not start a new transaction when it is not needed. We also delayed the synchronization of the cache between hosts to 5 minutes.

Stop idle database connection pool

For the PostgreSQL backend, we use a connection pool which keeps 1 connection open by default. But on setups with many databases, keeping this connection open can be too expensive, especially if it is almost never used. Now, after 30 minutes without use, the pool is closed, which frees its connections.
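The idle-close behavior can be modeled with a toy pool that remembers when it was last used. Everything here (the class, its acquire/release/close_if_idle methods, and the injectable clock) is hypothetical and only illustrates the 30-minute rule; it is not Tryton’s PostgreSQL backend.

```python
import time
from typing import Any, Callable, List

IDLE_TIMEOUT = 30 * 60  # seconds: the 30-minute delay from the newsletter


class IdleClosingPool:
    """Toy pool that closes its kept connections after a period of no use."""

    def __init__(self, connect: Callable[[], Any],
                 idle_timeout: float = IDLE_TIMEOUT,
                 clock: Callable[[], float] = time.monotonic):
        self._connect = connect          # factory for new connections
        self._idle_timeout = idle_timeout
        self._clock = clock              # injectable for testing
        self._connections: List[Any] = []
        self._last_used = clock()

    def acquire(self) -> Any:
        self._last_used = self._clock()
        if self._connections:
            return self._connections.pop()
        return self._connect()

    def release(self, conn: Any) -> None:
        # Keep the connection open for reuse instead of closing it.
        self._last_used = self._clock()
        self._connections.append(conn)

    def close_if_idle(self) -> bool:
        """Close all kept connections if unused for idle_timeout; report whether it did."""
        if self._clock() - self._last_used >= self._idle_timeout:
            for conn in self._connections:
                conn.close()
            self._connections.clear()
            return True
        return False

    @property
    def open_connections(self) -> int:
        return len(self._connections)
```

Something (a timer or the next request) still has to call close_if_idle periodically; how Tryton schedules that check is not covered here.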

The pool for template1 does not keep any connection at all, in order to avoid locks when a database is created.

Using subrepository

We started an experiment to replace hgnested with subrepositories. The main repository is available at tryton-env. If the result is positive, hgnested will be deactivated on hg.tryton.org.

Use of GtkApplication

The desktop client now uses GtkApplication to manage the initialization, the application uniqueness, the menus and the opening of URLs.

Now the client asks for the connection parameters before starting; they can no longer be changed without closing the application or starting a new one. The custom IPC has been replaced by GtkApplication’s command-line signal management.

Improve index creation

It is now possible to create indexes on SQL expressions (instead of only on columns) and with a WHERE clause. This allows creating indexes tailored to specific queries.
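To show what such an index looks like at the SQL level, here is a sketch using Python’s built-in sqlite3 module rather than Tryton’s API. The table and index names are made up. The index is built on the expression lower(name), restricted with a WHERE clause, and uses a literal rather than a bound parameter, in line with the SQLite limitation the newsletter mentions. Expression indexes need SQLite 3.9 or later.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create a sample table plus an expression index limited by a WHERE clause."""
    conn.execute(
        "CREATE TABLE party (id INTEGER PRIMARY KEY, name TEXT, active INTEGER)"
    )
    # Index the expression lower(name) rather than a bare column, and only
    # for active rows (a partial index). The condition is a literal, not "?".
    conn.execute(
        "CREATE INDEX party_active_lower_name "
        "ON party (lower(name)) WHERE active = 1"
    )
```

A query can use this index only when it repeats the same expression and implies the same condition, for example WHERE lower(name) = 'acme' AND active = 1.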

There is one limitation with the SQLite backend: it cannot run the creation query if the query has parameters. In that case, no index is created and a warning is displayed.

Add accessor for TableHandler

In the same way as we have the __table__ method to retrieve the SQL table of the ModelSQL, we added a __table_handler__ method to retrieve an instance of TableHandler for the class. This simplifies the code needed to modify the table schema, such as creating an index or performing a migration.

Transactional queue

The first result of B2CK’s crowdfunding campaign has landed in Tryton.

Tryton can be configured to use workers to execute tasks asynchronously. Any Model method can be queued by calling it through the Model.__queue__ attribute. The method must be an instance method or a class method that takes a list of records as its first argument. The other arguments must be JSON-serializable.

Task posting can be configured using the context variables queue_name, queue_scheduled_at and queue_expected_at. The queue dispatches tasks evenly to the available workers, and each worker uses a configurable pool of processes (by default, the number of CPUs) to execute them. The workers can run on different machines as long as they have access to the database.

If Tryton has no worker configured, the tasks are run directly at the end of the transaction. The processing of sales and purchases has already been adapted to use the queue.
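The key property is that queued tasks only take effect once the transaction commits. The toy model below illustrates that under stated assumptions: a hypothetical Transaction class (not Tryton’s API, which goes through Model.__queue__) collects tasks, checks that their arguments are JSON-serializable, and at commit either hands them to a worker queue or, when no worker is configured, runs them directly.

```python
import json
from typing import Any, Callable, List, Optional, Tuple

Task = Tuple[Callable[..., Any], tuple]


class Transaction:
    """Hypothetical toy transaction that defers queued tasks until commit."""

    def __init__(self, worker_queue: Optional[List[Task]] = None):
        # worker_queue is None when no worker is configured.
        self.worker_queue = worker_queue
        self._pending: List[Task] = []

    def enqueue(self, func: Callable[..., Any], *args: Any) -> None:
        # Arguments must survive a round trip to the database, so reject
        # anything json cannot encode (json.dumps raises TypeError).
        json.dumps(args)
        self._pending.append((func, args))

    def commit(self) -> None:
        pending, self._pending = self._pending, []
        for func, args in pending:
            if self.worker_queue is not None:
                self.worker_queue.append((func, args))  # workers pick it up later
            else:
                func(*args)  # no worker: run at the end of the transaction

    def rollback(self) -> None:
        # Tasks from a rolled-back transaction never run.
        self._pending.clear()
```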

Support of Python 3.7

Python 3.7 was released about a month ago. The development version of Tryton has been updated to support it, so this version of Python will be supported in the next release.


PyCharm: PyCharm 2018.2 and pytest Fixtures

Python has long had a culture of testing and pytest has emerged as the clear favorite for testing frameworks. PyCharm has long had very good “visual testing” features, including good support for pytest. One place we were weak: pytest “fixtures”, a wonderful feature that streamlines test setup. PyCharm 2018.2 put a lot of work and emphasis towards making pytest fixtures a pleasure to work with, as shown in the What’s New video.

This tutorial walks you through the pytest fixture support added to PyCharm 2018.2. Except for “visual coverage”, PyCharm Community and Professional Editions share all the same pytest features. We’ll use Community Edition for this tutorial and demonstrate:

- Autocomplete fixtures from various sources

- Quick documentation and navigation to fixtures

- Renaming a fixture from either the definition or a usage

- Support for pytest’s parametrize

Want the finished code? It’s available in a GitHub repo.

What are pytest Fixtures?

In pytest you write your tests as functions (or methods). When writing a lot of tests, you frequently repeat the same boilerplate over and over as you set up data. Fixtures let you move that out of your test and into a callable which returns what you need.

Sounds simple enough, but pytest adds a bunch of facilities tailored to the kinds of things you run into when writing a big pile of tests:

- Simply put the name of the fixture in your test function’s arguments and pytest will find it and pass it in

- Fixtures can be found in various places: the local file, a conftest.py in the current (or any parent) directory, any imported code that has a @pytest.fixture decorator, and pytest built-in fixtures

- Fixtures can do a return or a yield, the latter leading to useful teardown-like patterns

- You can speed up your tests by flagging how often a fixture should be computed

- Interesting ways to parameterize fixtures for reuse
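The yield-based teardown pattern is easier to see with a stripped-down model of what pytest does: call the fixture, take the yielded value, run the test, then resume the generator so the code after yield runs. The run_test_with_fixture helper below is a hypothetical simplification for illustration, not pytest’s implementation; with real pytest you would decorate the fixture with @pytest.fixture and simply name it in the test’s arguments.

```python
from typing import Callable, Generator, List, TypeVar

T = TypeVar("T")


def run_test_with_fixture(fixture: Callable[[], Generator[T, None, None]],
                          test: Callable[[T], None]) -> None:
    """Simplified model of how pytest drives a yield fixture."""
    gen = fixture()
    value = next(gen)      # setup: run the fixture up to its yield
    try:
        test(value)        # the test receives the yielded value
    finally:
        # Teardown: resume the generator so the code after yield runs,
        # even if the test raised.
        next(gen, None)


events: List[str] = []


def roster_fixture():
    events.append("setup")
    yield {"players": []}
    events.append("teardown")


def check_empty_roster(roster):
    assert roster["players"] == []
    events.append("test")


if __name__ == "__main__":
    run_test_with_fixture(roster_fixture, check_empty_roster)
    print(events)
```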

Read the documentation on fixtures and be dazzled by how many itches they’ve scratched over the decade of development, plus the vast ecosystem of pytest plugins.

Tutorial Scenario

This tutorial needs a sample application, which you can browse from the sample repo. It’s a tiny application for managing a girls’ lacrosse league: Players, Teams, Games. Tiny means tiny: it only has enough to illustrate pytest fixtures. No database, no UI. But it does include enough real business rules to make testing worthwhile.

Specifically: add a Player to a Team, create a Game between a Home Team and a Visitor Team, record the score, and show who won (or whether it was a tie). Surprisingly, it’s enough to exercise several pytest features (and it was actually written using test-driven development).
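For a feel of the business rules involved, here is a hypothetical sketch of Team and Game in the same dataclass style as the Player class. The names and fields are assumptions for illustration only; the actual laxleague code in the repo may be structured differently.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Team:
    name: str
    players: List[str] = field(default_factory=list)  # hypothetical: player ids

    def add_player(self, player_id: str) -> None:
        self.players.append(player_id)


@dataclass
class Game:
    home_team: Team
    visitor_team: Team
    home_score: int = 0
    visitor_score: int = 0

    def record_score(self, home: int, visitor: int) -> None:
        self.home_score = home
        self.visitor_score = visitor

    def winner(self) -> Optional[Team]:
        """Return the winning team, or None for a tie."""
        if self.home_score > self.visitor_score:
            return self.home_team
        if self.visitor_score > self.home_score:
            return self.visitor_team
        return None
```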

Setup

To follow along at home, make sure you have Python 3.7 (it uses dataclasses) and Pipenv installed. Clone the repo at https://github.com/pauleveritt/laxleague and make sure Pipenv has created an interpreter for you. (You can do both of those steps from within PyCharm.)

Then, open the directory in PyCharm and make sure you have set the Python Integrated Tools -> Default Test Runner to pytest.

Now you’re ready to follow the material below. Right-click on the tests directory and choose Run ‘pytest in tests’. If all the tests pass, you’re set up.

Using Fixtures

Before getting to PyCharm 2018.2’s (frankly, awesome) fixture support, let’s get you situated with the code and existing tests.

We want to test a player, which is implemented as a Python 3.7 dataclass:

from dataclasses import dataclass

@dataclass
class Player:
    first_name: str
    last_name: str
    jersey: int

    def __post_init__(self):
        ln = self.last_name.lower()
        fn = self.first_name.lower()
        self.id = f'{ln}-{fn}-{self.jersey}'

It’s simple enough to write a test to see if the id was constructed correctly:

def test_constructor():
    p = Player(first_name='Jane', last_name='Jones', jersey=11)
    assert 'Jane' == p.first_name
    assert 'Jones' == p.last_name
    assert 'jones-jane-11' == p.id

But we might write lots of tests with a sample player. Let’s make a pytest fixture with a sample player:

@pytest.fixture
def player_one() -> Player:
    """ Return a sample player Mary Smith #10 """
    yield Player(first_name='Mary', last_name='Smith', jersey=10)

Now it’s a lot easier to test construction, along with anything else on the Player:

def test_player(player_one):
    assert 'Mary' == player_one.first_name

We can get more re-use by moving this fixture to a conftest.py file in that test’s directory, or a parent directory. And along the way, we could give that player’s fixture a friendlier name:

@pytest.fixture(name='mary')
def player_one() -> Player:
    """ Return a sample player Mary Smith #10 """
    yield Player(first_name='Mary', last_name='Smith', jersey=10)

The test would then ask for mary instead of player_one: