How to seamlessly support typing.Protocol on Python versions older and newer than 3.8. At the same time.

Hynek Schlawack: typing.Protocol Across Python Versions
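One common way to do this is a version-gated import that falls back to a backport on older interpreters. The sketch below is not necessarily the approach the linked article takes: it assumes the typing_extensions package is installed on Python versions older than 3.8, and SupportsClose, Resource and shutdown are hypothetical names used only for illustration.

```python
import sys

if sys.version_info >= (3, 8):
    from typing import Protocol
else:
    # Assumption: the typing_extensions backport is installed on older Pythons.
    from typing_extensions import Protocol


class SupportsClose(Protocol):
    """Hypothetical protocol: anything that has a close() method."""

    def close(self) -> None: ...


class Resource:
    """Hypothetical class that satisfies SupportsClose without inheriting from it."""

    def __init__(self) -> None:
        self.closed = False

    def close(self) -> None:
        self.closed = True


def shutdown(thing: SupportsClose) -> None:
    # Structural typing: any object with a matching close() is accepted.
    thing.close()


resource = Resource()
shutdown(resource)
print(resource.closed)
```

Type checkers understand the sys.version_info comparison, so the same source file should type-check on either side of the 3.8 boundary.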

ItsMyCode: [Solved] ImportError: No module named matplotlib.pyplot

The ImportError: No module named matplotlib.pyplot occurs if you have not installed the Matplotlib library and try to run a script that contains Matplotlib-related code. Another possible cause is that you are not importing matplotlib.pyplot properly in your Python code.

In this tutorial, let’s look at how to install the matplotlib module correctly on different operating systems and resolve the No module named matplotlib.pyplot error.

ImportError: No module named matplotlib.pyplot

Matplotlib is a comprehensive library for creating static, animated, and interactive visualizations in Python.

Matplotlib is not a built-in module (it doesn’t come with the default Python installation), so you need to install it explicitly using the pip installer before you can use it.

If you are looking for how to install pip, or if you are getting an error installing pip, check out pip: command not found to resolve the issue.

Matplotlib releases are available as wheel packages for macOS, Windows and Linux on PyPI. Install it using pip:

Install Matplotlib in OSX/Linux

The recommended way to install the matplotlib module is with pip (or pip3 for Python 3), provided you have already installed pip.

Using Python 2

$ sudo pip install matplotlib

Using Python 3

$ sudo pip3 install matplotlib

Alternatively, if you have easy_install on your system, you can install matplotlib using the command below.

Using easy install

$ sudo easy_install -U matplotlib

For CentOS

$ yum install python-matplotlib

For Ubuntu

To install the matplotlib module on Debian/Ubuntu:

$ sudo apt-get install python3-matplotlib

Install Matplotlib in Windows

On Windows, you can use pip or pip3, depending on your Python version, to install the matplotlib module.

$ pip3 install matplotlib

If you have not added pip to the PATH environment variable, you can run the command below with Python 3 to install the matplotlib module.

$ py -m pip install matplotlib

Install Matplotlib in Anaconda

Matplotlib is available via the Anaconda main channel and can be installed using the following command.

$ conda install matplotlib

You can also install it via the conda-forge community channel by running the below command.

$ conda install -c conda-forge matplotlib

If you have installed it properly but still get the error, check the import statement in your code.

To plot charts, import matplotlib.pyplot as shown below.
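Before looking at the import statement, it can help to confirm that the interpreter running your script is the same one pip installed into. The following is a minimal sketch using only the standard library; module_available is a hypothetical helper name, not part of Matplotlib.

```python
import importlib.util
import sys


def module_available(name):
    """Return True if the current interpreter can locate the named module."""
    try:
        return importlib.util.find_spec(name) is not None
    except ModuleNotFoundError:
        # Raised for dotted names such as "matplotlib.pyplot"
        # when the parent package itself is missing.
        return False


# Shows which Python executable is actually running this script.
print("Interpreter:", sys.executable)
print("matplotlib.pyplot found:", module_available("matplotlib.pyplot"))
```

If the interpreter path printed here differs from the Python that pip belongs to, installing with that interpreter's own pip (for example via its -m pip option) is usually the fix.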

# importing the matplotlib
import matplotlib.pyplot as plt
import seaborn as sns

# car sales data
total_sales = [3000, 2245, 1235, 5330, 4200]
location = ['Bangalore', 'Delhi', 'Chennai', 'Mumbai', 'Kolkatta']

# Seaborn color palette to plot pie chart
colors = sns.color_palette('pastel')

# create pie chart using matplotlib
plt.pie(total_sales, labels=location, colors=colors)
plt.show()

Malthe Borch: PowerShell Remoting on Windows using Airflow

Apache Airflow is an open-source platform that allows you to programmatically author, schedule and monitor workflows. It comes with out-of-the-box integration to lots of systems, but the adage that the devil's in the details holds true with integration in general and remote execution is no exception – in particular PowerShell Remoting which comes with Windows as part of WinRM (Windows Remote Management).

In this post, I'll share some insights from a recent project on how to use Airflow to orchestrate the execution of Windows jobs without giving up on security.

Traditionally, job scheduling was done using agent software. An agent running locally as a system service would wake up and execute jobs at the scheduled time, reporting results back to a central system.

The configuration of the job schedule is either done by logging into the system itself or using a control channel. For example, the agent might connect to a central system to pull down work orders.

Meanwhile, Airflow has no such agents! Conveniently, WinRM works in push mode. It's a service running on Windows that you connect to using HTTP (or HTTPS). It's basically like connecting to a database and running a stored procedure.

From a security perspective, push mode is fundamentally different because traffic is initiated externally. While we might want to implement a thin agent to overcome this difference, such code is a liability on its own. Luckily, PowerShell Remoting comes with a framework that allows us to substantially limit the attack surface.

The aptly named Just-Enough-Administration (JEA) framework is basically sudo on steroids. It allows us to use PowerShell as an API, constraining the remote management interface to a configurable set of commands and executing as a specific user.

We can avoid running arbitrary code entirely by encapsulating the implementation details in predefined commands. In addition, we also separate the remote user that connects to the WinRM service from the user context that executes commands.

You can use PowerShell Remoting without JEA and/or constrained endpoints. But the intersection of Airflow and Windows is typically a bigger company or organization where security concerns mean that you want both of these.

As an aside, I mentioned stored procedures earlier on. Using JEA to change context to a different user is the equivalent of Definer's Rights vs Invoker's Rights. Arguably, in a system-to-system integration, using Definer's Rights is helpful in reducing the attack surface because you can define and encapsulate the required functionality.

The steps required to register a JEA configuration are relatively straight-forward. I won't describe them in detail here but the following bullets should give an overview:

In summary, registering a JEA configuration can be as simple as defining a single role capabilities file and running a command to register the configuration.

Now, enter Airflow!

To get started, you'll need to add the PowerShell Remoting Protocol Provider to your Airflow installation.

Add a connection by providing the hostname of your Windows machine, username and password. If you're using HTTP (rather than HTTPS) then you should set up the connection to require Kerberos authentication such that credentials are not sent in clear text (in addition, WinRM will encrypt the protocol traffic using the Kerberos session key).

To require Kerberos authentication, provide {"auth": "kerberos"} in the connection extras. Most of the extra configuration options from the underlying Python library pypsrp are available as connection extras. For example, a JEA configuration (if using) can be specified using the "configuration_name" key.
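As a rough illustration, the connection extras are just a JSON object. The sketch below builds one in Python; only the "auth" and "configuration_name" keys come from the text above, and the configuration name "AirflowJobs" is a made-up placeholder.

```python
import json

# Only the two keys are taken from the post; the values are illustrative.
extras = {
    "auth": "kerberos",
    "configuration_name": "AirflowJobs",  # hypothetical JEA configuration name
}

# This string is what would be pasted into the connection's extras field.
extras_json = json.dumps(extras)
print(extras_json)
```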

You will need to install additional Python packages to use Kerberos. Here's a requirements file with the necessary dependencies:

apache-airflow-providers-microsoft-psrp
gssapi
krb5
pypsrp[kerberos]

Finally, a note on transport security. When WinRM is used with an HTTP listener, Kerberos authentication (acting as trusted 3rd party) supplants the use of SSL/TLS through the transparent encryption scheme employed by the protocol. You can configure WinRM to support only Kerberos (by default, "Negotiate" is also enabled) to ensure that all connections are secured in this way. Note that your IT department might still insist on using HTTPS.

Historically, Windows machines feel worse over time for no particular reason. It's common to restart them once in a while. We can use Airflow to do that!

from airflow import DAG
from airflow.providers.microsoft.psrp.operators.psrp import PSRPOperator

default_args = {
    "psrp_conn_id": <connection id>
}

with DAG(..., default_args=default_args) as dag:
    # "task_id" defaults to the value of "cmdlet" so can omit it here.
    restart_computer = PSRPOperator(cmdlet="Restart-Computer", parameters={"Force": None})

This will restart the computer forcefully (which is not a good idea, but it illustrates the use of parameters). In the example, "Force" is a switch, so we pass a value of None, but values can be numbers, strings, lists and even dictionaries.

In the first example, we saw how task_id defaults to the value of cmdlet. That is sometimes useful, but it's not the only way we can cut verbosity.

PowerShell cmdlets (and functions which for our purposes are the same thing) follow the naming convention verb-noun. When we define our own commands, we can for example use the verb "Invoke", e.g. "Invoke-Job1". But invoking stuff is something we do all the time in Airflow and we don't want our task ids to have this meaningless prefix all over the place.

Here's an example of fixing that, making good use of Airflow's templating syntax:

from airflow import DAG
from airflow.providers.microsoft.psrp.operators.psrp import PSRPOperator

default_args = {
    "psrp_conn_id": <connection id>,
    "cmdlet": "Invoke-{{ task.task_id }}",
}

with DAG(..., default_args=default_args) as dag:
    # "cmdlet" here will be provided automatically as "Invoke-Job1".
    job1 = PSRPOperator(task_id="Job1")

Windows can have its verb-noun naming convention and we get to have short task ids.

By default, Airflow serializes operator output using XComs – a simple means of passing state between tasks.

Since XComs must be JSON-serializable, the PSRPOperator automatically converts PowerShell output values to JSON using ConvertTo-Json and then deserializes them in Python. Airflow then reserializes the result when saving the XCom to the database, so there's room for optimization there! The point is that most of the time, you don't have to worry about it.

You can for example list a directory using Get-ChildItem and the resulting table will be returned as a list of dicts. Note that PowerShell has some flattening magic which generally does the right thing in terms of return values:

That is, functions don't really return a single value. Instead, there is a stream of output values stemming from each command being executed.

With do_xcom_push set to false, no XComs are saved and the conversion to JSON also does not happen.
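The round trip described above can be pictured in plain Python. This is only a sketch of the data flow, not the operator's actual code: the JSON text is a hypothetical, heavily trimmed stand-in for what ConvertTo-Json might emit for two directory entries.

```python
import json

# Hypothetical sample; real Get-ChildItem output carries many more properties.
powershell_json = (
    '[{"Name": "report.csv", "Length": 1024},'
    ' {"Name": "logs", "Length": null}]'
)

# Step 1: the JSON text from PowerShell is deserialized in Python,
# giving a list of dicts.
rows = json.loads(powershell_json)
names = [row["Name"] for row in rows]

# Step 2: the value is serialized again when it is stored as an XCom.
xcom_payload = json.dumps(rows)

print(names)
```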

PowerShell has a number of other streams besides the output stream. These are logged to Airflow's task log by default. Unlike the default logging setup, the debug stream is also included unless explicitly turned off using logging_level. One justification for this is given in the next section.

In traditional automation, command echoing has been a simple way to figure out what a script is doing. PowerShell is a different beast altogether, but it is possible to expose the commands being executed using Set-PSDebug.

from pypsrp.powershell import Command, CommandParameter

PS_DEBUG = Command(
    cmd="Set-PSDebug",
    args=(CommandParameter(name="Trace", value=1), ),
    is_script=False,
)

default_args = {
    "psrp_conn_id": <connection id>,
    "psrp_session_init": PS_DEBUG,
}

This requires that Set-PSDebug is listed under "VisibleCmdlets" in the role capabilities (like ConvertTo-Json if using XComs).

A tracing line will be sent for each line passed over during execution at logging level debug, but as mentioned above, this will nonetheless get included in the task log by default. Don't enable this and have a loop that iterates hundreds of times. You will quickly fill up the task log with useless messages.

Happy remoting!

IslandT: Move chess piece on the chessboard with python

Hello, it is me again, and this is the second article about the chess game project which I created earlier with Python. In this article, I have updated the previous Python program so that it can now relocate the chess piece to a new location after I click on any square on the chessboard. This is the first step towards moving the chess piece, because after this I will make the piece move slowly by sliding it along the path to its destination, as well as making sure the piece is allowed to move to that square. For example, a pawn can only move in the up or down direction and can only move sideways if it captures another piece. All of this will take another level of planning, but for now let us just concentrate on the relocation of the piece.

The pawn will originally be situated on one of the squares, just like what you have seen in the previous article, but after I click on a new square it will relocate to that square, regardless of whether it can move there or not!

The plan is to get the dictionary key of the clicked square and then look that key up in the chess_dict dictionary to get the coordinates needed to draw the sprite at its new position.

# print the square name which you have clicked on
for key, value in chess_dict.items():
    if (x * width, y * width) == (value[0],value[1]):
        print(key)
        previous_square_list.append(key) #insert the next square
        if len(previous_square_list) > 1:
            previous_square_list.remove(previous_square_list[0])

The above snippet will save the new key whenever a user clicks on one of the squares on the chessboard.

This will draw the chess piece on the new location…

#draw the position of the pawn sprite
if len(previous_square_list) == 0:
    screen.blit(pawn0, (0, 64)) # just testing...
else:
    screen.blit(pawn0,(chess_dict[previous_square_list[0]])) # this will draw the pawn on new position

At first, when there has been no click, the piece appears in its original location. After someone has clicked once, the chess piece is drawn at the new location.
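The lookup can also be sketched without pygame. The snippet below is an alternative illustration rather than the article's code: it rebuilds the same square-name-to-coordinates mapping and computes the clicked square's name by arithmetic instead of scanning the dictionary. SQUARE, FILES and square_at are hypothetical names, and the click is assumed to land inside the 512x512 board.

```python
SQUARE = 64          # pixel width of one square, as in the article
FILES = "abcdefgh"   # column letters, left to right

# Same shape as chess_dict: square name -> top-left pixel of that square.
# Row 0 is the top of the window, which corresponds to rank 8.
chess_dict = {
    f"{FILES[col]}{8 - row}": (col * SQUARE, row * SQUARE)
    for row in range(8)
    for col in range(8)
}


def square_at(pos):
    """Return the square name under a pixel position (assumed on the board)."""
    col = pos[0] // SQUARE
    row = pos[1] // SQUARE
    return f"{FILES[col]}{8 - row}"


clicked = square_at((70, 130))
# The coordinates are where the pawn sprite would be drawn.
print(clicked, chess_dict[clicked])
```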

Here is the entire code…

import sys, pygame
import math

pygame.init()

size = width, height = 512, 512
white = 255, 178, 102
black = 126, 126, 126
hightlight = 192, 192, 192
title = "IslandT Chess"

width = 64 # width of the square
original_color = ''

#empty chess dictionary
chess_dict = {}

#chess square list
chess_square_list = [
    "a8", "b8", "c8", "d8", "e8", "f8", "g8", "h8",
    "a7", "b7", "c7", "d7", "e7", "f7", "g7", "h7",
    "a6", "b6", "c6", "d6", "e6", "f6", "g6", "h6",
    "a5", "b5", "c5", "d5", "e5", "f5", "g5", "h5",
    "a4", "b4", "c4", "d4", "e4", "f4", "g4", "h4",
    "a3", "b3", "c3", "d3", "e3", "f3", "g3", "h3",
    "a2", "b2", "c2", "d2", "e2", "f2", "g2", "h2",
    "a1", "b1", "c1", "d1", "e1", "f1", "g1", "h1"
]

# chess square position
chess_square_position = []

#pawn image
pawn0 = pygame.image.load("pawn.png")

# create a list to map name of column and row
for i in range(0, 8) : # control row
    for j in range(0, 8): # control column
        chess_square_position.append((j * width, i * width))

# create a dictionary to map name of column and row
for n in range(0, len(chess_square_position)):
    chess_dict[chess_square_list[n]] = chess_square_position[n]

screen = pygame.display.set_mode(size)
pygame.display.set_caption(title)

rect_list = list() # this is the list of brown rectangle

#the previously touched square
previous_square_list = []

# used this loop to create a list of brown rectangles
for i in range(0, 8): # control the row
    for j in range(0, 8): # control the column
        if i % 2 == 0: # which means it is an even row
            if j % 2 != 0: # which means it is an odd column
                rect_list.append(pygame.Rect(j * width, i * width, width, width))
        else:
            if j % 2 == 0: # which means it is an even column
                rect_list.append(pygame.Rect(j * width, i * width, width, width))

# create main surface and fill the base color with light brown color
chess_board_surface = pygame.Surface(size)
chess_board_surface.fill(white)

# next draws the dark brown rectangles on the chess board surface
for chess_rect in rect_list:
    pygame.draw.rect(chess_board_surface, black, chess_rect)

while True:
    # displayed the chess surface
    screen.blit(chess_board_surface, (0, 0))

    #draw the position of the pawn sprite
    if len(previous_square_list) == 0:
        screen.blit(pawn0, (0, 64)) # just testing...
    else:
        screen.blit(pawn0,(chess_dict[previous_square_list[0]])) # this will draw the pawn on new position

    for event in pygame.event.get():
        if event.type == pygame.QUIT: sys.exit()
        elif event.type == pygame.MOUSEBUTTONDOWN:
            pos = event.pos
            x = math.floor(pos[0] / width)
            y = math.floor(pos[1] / width)
            # print the square name which you have clicked on
            for key, value in chess_dict.items():
                if (x * width, y * width) == (value[0],value[1]):
                    print(key)
                    previous_square_list.append(key) #insert the next square
                    if len(previous_square_list) > 1:
                        previous_square_list.remove(previous_square_list[0])
            original_color = chess_board_surface.get_at((x * width, y * width ))
            pygame.draw.rect(chess_board_surface, hightlight, pygame.Rect((x) * width, (y) * width, 64, 64))
        elif event.type == pygame.MOUSEBUTTONUP:
            pos = event.pos
            x = math.floor(pos[0] / width)
            y = math.floor(pos[1] / width)
            pygame.draw.rect(chess_board_surface, original_color, pygame.Rect((x) * width, (y) * width, 64, 64))

    pygame.display.update()

Here is the outcome…

I hope you like it. The next step is to make the piece slide along the board and to allow it to move only in the directions it is supposed to move in!

PyPy: Natural Language Processing for Icelandic with PyPy: A Case Study

Icelandic is one of the smallest languages of the world, with about 370,000 speakers. It is a language in the Germanic family, most similar to Norwegian, Danish and Swedish, but closer to the original Old Norse spoken throughout Scandinavia until about the 14th century CE.

As with other small languages, there are worries that the language may not survive in a digital world, where all kinds of fancy applications are developed first - and perhaps only - for the major languages. Voice assistants, chatbots, spelling and grammar checking utilities, machine translation, etc., are increasingly becoming staples of our personal and professional lives, but if they don’t exist for Icelandic, Icelanders will gravitate towards English or other languages where such tools are readily available.

Iceland is a technology-savvy country, with world-leading adoption rates of the Internet, PCs and smart devices, and a thriving software industry. So the government figured that it would be worthwhile to fund a 5-year plan to build natural language processing (NLP) resources and other infrastructure for the Icelandic language. The project focuses on collecting data and developing open source software for a range of core applications, such as tokenization, vocabulary lookup, n-gram statistics, part-of-speech tagging, named entity recognition, spelling and grammar checking, neural language models and speech processing.

My name is Vilhjálmur Þorsteinsson, and I’m the founder and CEO of a software startup Miðeind in Reykjavík, Iceland, that employs 10 software engineers and linguists and focuses on NLP and AI for the Icelandic language. The company participates in the government’s language technology program, and has contributed significantly to the program’s core tools (e.g., a tokenizer and a parser), spelling and grammar checking modules, and a neural machine translation stack.

When it came to a choice of programming languages and development tools for the government program, the requirements were for a major, well supported, vendor-and-OS-agnostic FOSS platform with a large and diverse community, including in the NLP space. The decision to select Python as a foundational language for the project was a relatively easy one. That said, there was a bit of trepidation around the well known fact that CPython can be slow for inner-core tasks, such as tokenization and parsing, that can see heavy workloads in production.

I first became aware of PyPy in early 2016 when I was developing a crossword game Netskrafl in Python 2.7 for Google App Engine. I had a utility program that compressed a dictionary into a Directed Acyclic Word Graph and was taking 160 seconds to run on CPython 2.7, so I tried PyPy and to my amazement saw a 4x speedup (down to 38 seconds), with literally no effort besides downloading the PyPy runtime.

This led me to select PyPy as the default Python interpreter for my company’s Python development efforts as well as for our production websites and API servers, a role in which it remains to this day. We have followed PyPy’s upgrades along the way, being just about to migrate our minimally required language version from 3.6 to 3.7.

In NLP, speed and memory requirements can be quite important for software usability. On the other hand, NLP logic and algorithms are often complex and challenging to program, so programmer productivity and code clarity are also critical success factors. A pragmatic approach balances these factors, avoids premature optimization and seeks a careful compromise between maximal run-time efficiency and minimal programming and maintenance effort.

Turning to our use cases, our Icelandic text tokenizer "Tokenizer" is fairly light, runs tight loops and performs a large number of small, repetitive operations. It runs very well on PyPy’s JIT and has not required further optimization.

Our Icelandic parser Greynir (known on PyPI as reynir) is, if I may say so myself, a piece of work. It parses natural language text according to a hand-written context-free grammar, using an Earley-type algorithm as enhanced by Scott and Johnstone. The CFG contains almost 7,000 nonterminals and 6,000 terminals, and the parser handles ambiguity as well as left, right and middle recursion. It returns a packed parse forest for each input sentence, which is then pruned by a scoring heuristic down to a single best result tree.

This parser was originally coded in pure Python and turned out to be unusably slow when run on CPython - but usable on PyPy, where it was 3-4x faster. However, when we started applying it to heavier production workloads, it became apparent that it needed to be faster still. We then proceeded to convert the innermost Earley parsing loop from Python to tight C++ and to call it from PyPy via CFFI, with callbacks for token-terminal matching functions (“business logic”) that remained on the Python side. This made the parser much faster (on the order of 100x faster than the original on CPython) and quick enough for our production use cases. Even after moving much of the heavy processing to C++ and using CFFI, PyPy still gives a significant speed boost over CPython.

Connecting C++ code with PyPy proved to be quite painless using CFFI, although we had to figure out a few magic incantations in our build module to make it compile smoothly during setup from source on Windows and MacOS in addition to Linux. Of course, we build binary PyPy and CPython wheels for the most common targets so most users don’t have to worry about setup requirements.

With the positive experience from the parser project, we proceeded to take a similar approach for two other core NLP packages: our compressed vocabulary package BinPackage (known on PyPI as islenska) and our trigrams database package Icegrams. These packages both take large text input (3.1 million word forms with inflection data in the vocabulary case; 100 million tokens in the trigrams case) and compress it into packed binary structures. These structures are then memory-mapped at run-time using mmap and queried via Python functions with a lookup time in the microseconds range. The low-level data structure navigation is done in C++, called from Python via CFFI. The ex-ante preparation, packing, bit-fiddling and data structure generation is fast enough with PyPy, so we haven’t seen a need to optimize that part further.
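The general technique of packing data into a binary file and querying it through a memory map can be sketched with the standard library alone. The example below is only an illustration under simplifying assumptions: it stores sorted 32-bit integers rather than the actual BinPackage or Icegrams formats, does the navigation in pure Python rather than C++ via CFFI, and write_packed and contains are hypothetical helper names.

```python
import mmap
import os
import struct
import tempfile

# One little-endian unsigned 32-bit integer per record (illustrative format).
RECORD = struct.Struct("<I")


def write_packed(path, values):
    """Pack the values, sorted, into a flat binary file."""
    with open(path, "wb") as f:
        for value in sorted(values):
            f.write(RECORD.pack(value))


def contains(mm, target):
    """Binary search over the memory-mapped records without loading them all."""
    lo, hi = 0, len(mm) // RECORD.size
    while lo < hi:
        mid = (lo + hi) // 2
        (value,) = RECORD.unpack_from(mm, mid * RECORD.size)
        if value == target:
            return True
        if value < target:
            lo = mid + 1
        else:
            hi = mid
    return False


# Build the packed file once, ahead of time.
with tempfile.NamedTemporaryFile(suffix=".bin", delete=False) as tmp:
    path = tmp.name
write_packed(path, [42, 7, 1000, 99])

# At run time, map the file read-only and query it in place.
with open(path, "rb") as f:
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        found = contains(mm, 99)
        missing = contains(mm, 100)

os.remove(path)
print(found, missing)
```

Because the file is mapped rather than read, only the pages touched by a lookup need to be brought into memory, which is what keeps startup cheap for large structures.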

To showcase our tools, we host public (and open source) websites such as greynir.is for our parsing, named entity recognition and query stack and yfirlestur.is for our spell and grammar checking stack. The server code on these sites is all Python running on PyPy using Flask, wrapped in gunicorn and hosted on nginx. The underlying database is PostgreSQL accessed via SQLAlchemy and psycopg2cffi. This setup has served us well for 6 years and counting, being fast, reliable and having helpful and supporting communities.

As can be inferred from the above, we are avid fans of PyPy and commensurately thankful for the great work by the PyPy team over the years. PyPy has enabled us to use Python for a larger part of our toolset than CPython alone would have supported, and its smooth integration with C/C++ through CFFI has helped us attain a better tradeoff between performance and programmer productivity in our projects. We wish for PyPy a great and bright future and also look forward to exciting related developments on the horizon, such as HPy.

Podcast.__init__: Achieve Repeatable Builds Of Your Software On Any Machine With Earthly

Summary

It doesn’t matter how amazing your application is if you are unable to deliver it to your users. Frustrated with the rampant complexity involved in building and deploying software Vlad A. Ionescu created the Earthly tool to reduce the toil involved in creating repeatable software builds. In this episode he explains the complexities that are inherent to building software projects and how he designed the syntax and structure of Earthly to make it easy to adopt for developers across all language environments. By adopting Earthly you can use the same techniques for building on your laptop and in your CI/CD pipelines.

Announcements

- Hello and welcome to Podcast.__init__, the podcast about Python’s role in data and science.

- When you’re ready to launch your next app or want to try a project you hear about on the show, you’ll need somewhere to deploy it, so take a look at our friends over at Linode. With the launch of their managed Kubernetes platform it’s easy to get started with the next generation of deployment and scaling, powered by the battle tested Linode platform, including simple pricing, node balancers, 40Gbit networking, dedicated CPU and GPU instances, and worldwide data centers. Go to pythonpodcast.com/linode and get a $100 credit to try out a Kubernetes cluster of your own. And don’t forget to thank them for their continued support of this show!

- Your host as usual is Tobias Macey and today I’m interviewing Vlad A. Ionescu about Earthly, a syntax and runtime for software builds to reduce friction between development and delivery

Interview

- Introductions

- How did you get introduced to Python?

- Can you describe what Earthly is and the story behind it?

- What are the core principles that engineers should consider when designing their build and delivery process?

- What are some of the common problems that engineers run into when they are designing their build process?

- What are some of the challenges that are unique to the Python ecosystem?

- What is the role of Earthly in the overall software lifecycle?

- What are the other tools/systems that a team is likely to use alongside Earthly?

- What are the components that Earthly might replace?

- How is Earthly implemented?

- What were the core design requirements when you first began working on it?

- How have the design and goals of Earthly changed or evolved as you have explored the problem further?

- What is the workflow for a Python developer to get started with Earthly?

- How can Earthly help with the challenge of managing Javascript and CSS assets for web application projects?

- What are some of the challenges (technical, conceptual, or organizational) that an engineer or team might encounter when adopting Earthly?

- What are some of the features or capabilities of Earthly that are overlooked or misunderstood that you think are worth exploring?

- What are the most interesting, innovative, or unexpected ways that you have seen Earthly used?

- What are the most interesting, unexpected, or challenging lessons that you have learned while working on Earthly?

- When is Earthly the wrong choice?

- What do you have planned for the future of Earthly?

Keep In Touch

- @VladAIonescu on Twitter

- Website

Picks

- Tobias

- Shape Up book

- Vlad

- High Output Management by Andy Grove

Closing Announcements

- Thank you for listening! Don’t forget to check out our other show, the Data Engineering Podcast for the latest on modern data management.

- Visit the site to subscribe to the show, sign up for the mailing list, and read the show notes.

- If you’ve learned something or tried out a project from the show then tell us about it! Email hosts@podcastinit.com with your story.

- To help other people find the show please leave a review on iTunes and tell your friends and co-workers

Links

- Earthly

- Bazel

- Pants

- ARM

- AWS Graviton

- Apple M1 CPU

- Qemu

- Phoenix web framework for Elixir language

The intro and outro music is from Requiem for a Fish by The Freak Fandango Orchestra / CC BY-SA

Python GUIs: Packaging PyQt5 applications into a macOS app with PyInstaller (updated for 2022)

There is not much fun in creating your own desktop applications if you can't share them with other people, whether that means publishing them commercially, sharing them online or just giving them to someone you know. Sharing your apps allows other people to benefit from your hard work!

The good news is there are tools available to help you do just that with your Python applications which work well with apps built using PyQt5. In this tutorial we'll look at the most popular tool for packaging Python applications: PyInstaller.

This tutorial is broken down into a series of steps, using PyInstaller to build first simple, and then more complex PyQt5 applications into distributable macOS app bundles. You can choose to follow it through completely, or skip to the parts that are most relevant to your own project.

We finish off by building a macOS Disk Image, the usual method for distributing applications on macOS.

You always need to compile your app on your target system. So, if you want to create a Mac .app you need to do this on a Mac, for an EXE you need to use Windows.
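A build script can make this constraint explicit by checking the platform it is running on before it starts. The following is a minimal sketch; packaging_target is a hypothetical helper and is not part of PyInstaller.

```python
import sys


def packaging_target(platform=sys.platform):
    """Describe what a build on the given platform can produce."""
    if platform == "darwin":
        return "macOS .app bundle"
    if platform.startswith("win"):
        return "Windows EXE"
    return "Linux executable"


# On a Mac this reports the .app bundle; elsewhere it reports that platform's format.
print("This machine can build a:", packaging_target())
```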

Example Disk Image Installer for macOS

If you're impatient, you can download the Example Disk Image for macOS first.

Requirements

PyInstaller works out of the box with PyQt5 and as of writing, current versions of PyInstaller are compatible with Python 3.6+. Whatever project you're working on, you should be able to package your apps.

You can install PyInstaller using pip.

pip3 install PyInstaller

If you experience problems packaging your apps, your first step should always be to update your PyInstaller and hooks package to the latest versions using

pip3 install --upgrade PyInstaller pyinstaller-hooks-contrib

The hooks package contains package-specific packaging instructions for PyInstaller and is updated regularly.

Install in virtual environment (optional)

You can also opt to install PyQt5 and PyInstaller in a virtual environment (or your application's virtual environment) to keep your environment clean.

python3 -m venv packenv

Once created, activate the virtual environment by running the following from the command line:

source packenv/bin/activate

Finally, install the required libraries. For PyQt5 you would use —

pip3 install PyQt5 PyInstaller

Getting Started

It's a good idea to start packaging your application from the very beginning so you can confirm that packaging is still working as you develop it. This is particularly important if you add additional dependencies. If you only think about packaging at the end, it can be difficult to debug exactly where the problems are.

For this example we're going to start with a simple skeleton app, which doesn't do anything interesting. Once we've got the basic packaging process working, we'll extend the application to include icons and data files. We'll confirm the build as we go along.

To start with, create a new folder for your application and then add the following skeleton app in a file named app.py. You can also download the source code and associated files.

from PyQt5 import QtWidgets

import sys

class MainWindow(QtWidgets.QMainWindow):

    def __init__(self):
        super().__init__()

        self.setWindowTitle("Hello World")
        l = QtWidgets.QLabel("My simple app.")
        l.setMargin(10)
        self.setCentralWidget(l)
        self.show()

if __name__ == '__main__':
    app = QtWidgets.QApplication(sys.argv)
    w = MainWindow()
    app.exec()

This is a basic bare-bones application which creates a custom QMainWindow and adds a simple widget QLabel to it. You can run this app as follows.

python app.py

This should produce the following window (on macOS).

Simple skeleton app in PyQt5

Building a basic app

Now we have our simple application skeleton in place, we can run our first build test to make sure everything is working.

Open your terminal (command prompt) and navigate to the folder containing your project. You can now run the following command to run the PyInstaller build.

pyinstaller --windowed app.py

The --windowed flag is necessary to tell PyInstaller to build a macOS .app bundle.
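If you would rather start the build from a Python script than from the shell, PyInstaller can also be invoked through PyInstaller.__main__.run, which takes the same arguments as the command line. The sketch below is one way to wrap that; the helper names build_args and build are assumptions made for this example.

```python
def build_args(script, windowed=True, name=None):
    """Build the argument list matching the pyinstaller command line."""
    args = []
    if windowed:
        args.append("--windowed")
    if name:
        args.extend(["--name", name])
    args.append(script)
    return args


def build(script, **options):
    """Run a PyInstaller build for the given script."""
    # Imported here so build_args() can be used without PyInstaller installed.
    import PyInstaller.__main__

    PyInstaller.__main__.run(build_args(script, **options))
```

With this in place, build("app.py") should be equivalent to running pyinstaller --windowed app.py.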

You'll see a number of messages output, giving debug information about what PyInstaller is doing. These are useful for debugging issues in your build, but can otherwise be ignored. The output that I get for running the command on my system is shown below.

martin@MacBook-Pro pyqt5 % pyinstaller --windowed app.py

74 INFO: PyInstaller: 4.8

74 INFO: Python: 3.9.9

83 INFO: Platform: macOS-10.15.7-x86_64-i386-64bit

84 INFO: wrote /Users/martin/app/pyqt5/app.spec

87 INFO: UPX is not available.

88 INFO: Extending PYTHONPATH with paths

['/Users/martin/app/pyqt5']

447 INFO: checking Analysis

451 INFO: Building because inputs changed

452 INFO: Initializing module dependency graph...

455 INFO: Caching module graph hooks...

463 INFO: Analyzing base_library.zip ...

3914 INFO: Processing pre-find module path hook distutils from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks/pre_find_module_path/hook-distutils.py'.

3917 INFO: distutils: retargeting to non-venv dir '/usr/local/Cellar/python@3.9/3.9.9/Frameworks/Python.framework/Versions/3.9/lib/python3.9'

6928 INFO: Caching module dependency graph...

7083 INFO: running Analysis Analysis-00.toc

7091 INFO: Analyzing /Users/martin/app/pyqt5/app.py

7138 INFO: Processing module hooks...

7139 INFO: Loading module hook 'hook-PyQt6.QtWidgets.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7336 INFO: Loading module hook 'hook-xml.etree.cElementTree.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7337 INFO: Loading module hook 'hook-lib2to3.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7360 INFO: Loading module hook 'hook-PyQt6.QtGui.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7397 INFO: Loading module hook 'hook-PyQt6.QtCore.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7422 INFO: Loading module hook 'hook-encodings.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7510 INFO: Loading module hook 'hook-distutils.util.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7513 INFO: Loading module hook 'hook-pickle.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7515 INFO: Loading module hook 'hook-heapq.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7517 INFO: Loading module hook 'hook-difflib.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7519 INFO: Loading module hook 'hook-PyQt6.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7564 INFO: Loading module hook 'hook-multiprocessing.util.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7565 INFO: Loading module hook 'hook-sysconfig.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7574 INFO: Loading module hook 'hook-xml.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7677 INFO: Loading module hook 'hook-distutils.py' from '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks'...

7694 INFO: Looking for ctypes DLLs

7712 INFO: Analyzing run-time hooks ...

7715 INFO: Including run-time hook '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks/rthooks/pyi_rth_subprocess.py'

7719 INFO: Including run-time hook '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks/rthooks/pyi_rth_pkgutil.py'

7722 INFO: Including run-time hook '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks/rthooks/pyi_rth_multiprocessing.py'

7726 INFO: Including run-time hook '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks/rthooks/pyi_rth_inspect.py'

7727 INFO: Including run-time hook '/usr/local/lib/python3.9/site-packages/PyInstaller/hooks/rthooks/pyi_rth_pyqt6.py'

7736 INFO: Looking for dynamic libraries

7977 INFO: Looking for eggs

7977 INFO: Using Python library /usr/local/Cellar/python@3.9/3.9.9/Frameworks/Python.framework/Versions/3.9/Python

7987 INFO: Warnings written to /Users/martin/app/pyqt5/build/app/warn-app.txt

8019 INFO: Graph cross-reference written to /Users/martin/app/pyqt5/build/app/xref-app.html

8032 INFO: checking PYZ

8035 INFO: Building because toc changed

8035 INFO: Building PYZ (ZlibArchive) /Users/martin/app/pyqt5/build/app/PYZ-00.pyz

8390 INFO: Building PYZ (ZlibArchive) /Users/martin/app/pyqt5/build/app/PYZ-00.pyz completed successfully.

8397 INFO: EXE target arch: x86_64

8397 INFO: Code signing identity: None

8398 INFO: checking PKG

8398 INFO: Building because /Users/martin/app/pyqt5/build/app/PYZ-00.pyz changed

8398 INFO: Building PKG (CArchive) app.pkg

8415 INFO: Building PKG (CArchive) app.pkg completed successfully.

8417 INFO: Bootloader /usr/local/lib/python3.9/site-packages/PyInstaller/bootloader/Darwin-64bit/runw

8417 INFO: checking EXE

8418 INFO: Building because console changed

8418 INFO: Building EXE from EXE-00.toc

8418 INFO: Copying bootloader EXE to /Users/martin/app/pyqt5/build/app/app

8421 INFO: Converting EXE to target arch (x86_64)

8449 INFO: Removing signature(s) from EXE

8484 INFO: Appending PKG archive to EXE

8486 INFO: Fixing EXE headers for code signing

8496 INFO: Rewriting the executable's macOS SDK version (11.1.0) to match the SDK version of the Python library (10.15.6) in order to avoid inconsistent behavior and potential UI issues in the frozen application.

8499 INFO: Re-signing the EXE

8547 INFO: Building EXE from EXE-00.toc completed successfully.

8549 INFO: checking COLLECT

WARNING: The output directory "/Users/martin/app/pyqt5/dist/app" and ALL ITS CONTENTS will be REMOVED! Continue? (y/N)y

On your own risk, you can use the option `--noconfirm` to get rid of this question.

10820 INFO: Removing dir /Users/martin/app/pyqt5/dist/app

10847 INFO: Building COLLECT COLLECT-00.toc

12460 INFO: Building COLLECT COLLECT-00.toc completed successfully.

12469 INFO: checking BUNDLE

12469 INFO: Building BUNDLE because BUNDLE-00.toc is non existent

12469 INFO: Building BUNDLE BUNDLE-00.toc

13848 INFO: Moving BUNDLE data files to Resource directory

13901 INFO: Signing the BUNDLE...

16049 INFO: Building BUNDLE BUNDLE-00.toc completed successfully.
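With this much output it is easy to miss the one line that matters. As a rough sketch, assuming you have saved the build output to a text file and that it follows the "number LEVEL: message" layout shown above, the following helper keeps only the lines that are not plain INFO messages. The names LOG_LINE and notable_lines are made up for this example.

```python
import re

# Matches lines such as "74 INFO: PyInstaller: 4.8". The leading number is
# optional because some messages in the output above have none.
LOG_LINE = re.compile(r"^(?:(\d+)\s+)?([A-Z]+):\s(.*)$")


def notable_lines(log_text, ignore=("INFO", "DEBUG")):
    """Return (level, message) pairs for log lines whose level is not ignored."""
    found = []
    for line in log_text.splitlines():
        match = LOG_LINE.match(line.strip())
        if match and match.group(2) not in ignore:
            found.append((match.group(2), match.group(3)))
    return found
```

For the output above this would leave just the WARNING about the output directory being removed.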

If you look in your folder you'll notice you now have two new folders dist and build.

build & dist folders created by PyInstaller

Below is a truncated listing of the folder content, showing the build and dist folders.

.
├── app.py
├── app.spec
├── build
│   └── app
│       ├── Analysis-00.toc
│       ├── COLLECT-00.toc
│       ├── EXE-00.toc
│       ├── PKG-00.pkg
│       ├── PKG-00.toc
│       ├── PYZ-00.pyz
│       ├── PYZ-00.toc
│       ├── app
│       ├── app.pkg
│       ├── base_library.zip
│       ├── warn-app.txt
│       └── xref-app.html
└── dist
    ├── app
    │   ├── libcrypto.1.1.dylib
    │   ├── PyQt5
    │   ...
    │   ├── app
    │   └── Qt5Core
    └── app.app

The build folder is used by PyInstaller to collect and prepare the files for bundling. It contains the results of analysis and some additional logs. For the most part, you can ignore the contents of this folder, unless you're trying to debug issues.

The dist (for "distribution") folder contains the files to be distributed. This includes your application, bundled as an executable file, together with any associated libraries (for example PyQt5) and binary .so files.

Since we provided the --windowed flag above, PyInstaller has actually created two builds for us. The folder app is a simple folder containing everything you need to be able to run your app. PyInstaller also creates an app bundle app.app which is what you will usually distribute to users.

The app folder is a useful debugging tool, since you can easily see the libraries and other packaged data files.

You can try running your app yourself now, either by double-clicking on the app bundle, or by running the executable file, named app, from the dist/app folder. In either case, after a short delay you'll see the familiar window of your application pop up as shown below.

Simple app, running after being packaged

In the same folder as your Python file, alongside the build and dist folders PyInstaller will have also created a .spec file. In the next section we'll take a look at this file, what it is and what it does.

The Spec file

The .spec file contains the build configuration and instructions that PyInstaller uses to package up your application. Every PyInstaller project has a .spec file, which is generated based on the command line options you pass when running pyinstaller.

When we ran pyinstaller with our script, we didn't pass in anything other than the name of our Python application file and the --windowed flag. This means our spec file currently contains only the default configuration. If you open it, you'll see something similar to what we have below.

# -*- mode: python ; coding: utf-8 -*-

block_cipher = None

a = Analysis(['app.py'],
             pathex=[],
             binaries=[],
             datas=[],
             hiddenimports=[],
             hookspath=[],
             hooksconfig={},
             runtime_hooks=[],
             excludes=[],
             win_no_prefer_redirects=False,
             win_private_assemblies=False,
             cipher=block_cipher,
             noarchive=False)

pyz = PYZ(a.pure, a.zipped_data,
          cipher=block_cipher)

exe = EXE(pyz,
          a.scripts,
          [],
          exclude_binaries=True,
          name='app',
          debug=False,
          bootloader_ignore_signals=False,
          strip=False,
          upx=True,
          console=False,
          disable_windowed_traceback=False,
          target_arch=None,
          codesign_identity=None,
          entitlements_file=None )

coll = COLLECT(exe,
               a.binaries,
               a.zipfiles,
               a.datas,
               strip=False,
               upx=True,
               upx_exclude=[],
               name='app')

app = BUNDLE(coll,
             name='app.app',
             icon=None,
             bundle_identifier=None)

The first thing to notice is that this is a Python file, meaning you can edit it and use Python code to calculate values for the settings. This is mostly useful for complex builds, for example when you are targeting different platforms and want to conditionally define additional libraries or dependencies to bundle.
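As a small illustration of what "using Python code to calculate values" can look like, here is a sketch of a helper that picks extra hidden imports depending on the platform. The helper name and the module names myapp.macos_support and myapp.windows_support are hypothetical and only stand in for whatever your own project needs.

```python
import sys


def platform_hiddenimports(platform=sys.platform):
    """Return extra module names to bundle for the given platform."""
    hiddenimports = []
    if platform == "darwin":
        hiddenimports.append("myapp.macos_support")    # hypothetical module
    elif platform.startswith("win"):
        hiddenimports.append("myapp.windows_support")  # hypothetical module
    return hiddenimports


# In the .spec file the result could then be passed to Analysis, for example:
#   a = Analysis(['app.py'], hiddenimports=platform_hiddenimports(), ...)
```

Because the spec file is executed as ordinary Python, a function like this can sit at the top of the file and be called from inside the Analysis block.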

Because we used the --windowed command line flag, the EXE(console=) attribute is set to False. If this is True a console window will be shown when your app is launched -- not what you usually want for a GUI application.

Once a .spec file has been generated, you can pass this to pyinstaller instead of your script to repeat the previous build process. Run this now to rebuild your executable.

pyinstaller app.spec

The resulting build will be identical to the build used to generate the .spec file (assuming you have made no changes). For many PyInstaller configuration changes you have the option of passing command-line arguments, or modifying your existing .spec file. Which you choose is up to you.

Tweaking the build

So far we've created a simple first build of a very basic application. Now we'll look at a few of the most useful options that PyInstaller provides to tweak our build. Then we'll go on to look at building more complex applications.

Naming your app

One of the simplest changes you can make is to provide a proper "name" for your application. By default the app takes the name of your source file (minus the extension), for example main or app. This isn't usually what you want.

You can provide a nicer name for PyInstaller to use for the app (and dist folder) by editing the .spec file to add a name= under the EXE, COLLECT and BUNDLE blocks.

exe = EXE(pyz,
          a.scripts,
          [],
          exclude_binaries=True,
          name='Hello World',
          debug=False,
          bootloader_ignore_signals=False,
          strip=False,
          upx=True,
          console=False
         )

coll = COLLECT(exe,
               a.binaries,
               a.zipfiles,
               a.datas,
               strip=False,
               upx=True,
               upx_exclude=[],
               name='Hello World')

app = BUNDLE(coll,
             name='Hello World.app',
             icon=None,
             bundle_identifier=None)

The name under EXE is the name of the executable file, the name under BUNDLE is the name of the app bundle.

Alternatively, you can re-run the pyinstaller command and pass the -n or --name configuration flag along with your app.py script.

pyinstaller -n "Hello World" --windowed app.py

# or

pyinstaller --name "Hello World" --windowed app.py

The resulting app file will be given the name Hello World.app and the unpacked build placed in the folder dist/Hello World/.

Application with custom name "Hello World"

The name of the .spec file is taken from the name passed in on the command line, so this will also create a new spec file for you, called Hello World.spec in your root folder.

Make sure you delete the old app.spec file to avoid getting confused editing the wrong one.

Application icon

By default PyInstaller app bundles come with the following icon in place.

Default PyInstaller application icon, on app bundle

You will probably want to customize this to make your application more recognisable. This can be done easily by passing the --icon command line argument, or editing the icon= parameter of the BUNDLE section of your .spec file. For macOS app bundles you need to provide an .icns file.

app = BUNDLE(coll,
             name='Hello World.app',
             icon='Hello World.icns',
             bundle_identifier=None)

To create macOS icons from images you can use the image2icon tool.

If you now re-run the build (by using the command line arguments, or running with your modified .spec file) you'll see the specified icon file is now set on your application bundle.

Custom application icon on the app bundle

On macOS application icons are taken from the application bundle. If you repackage your app and run the bundle you will see your app icon on the dock!

Custom application icon on the dock

Data files and Resources

So far our application consists of just a single Python file, with no dependencies. Most real-world applications are a bit more complex, and typically ship with associated data files such as icons or UI design files. In this section we'll look at how we can accomplish this with PyInstaller, starting with a single file and then bundling complete folders of resources.

First let's update our app with some more buttons and add icons to each.

from PyQt5.QtWidgets import QMainWindow, QApplication, QLabel, QVBoxLayout, QPushButton, QWidget
from PyQt5.QtGui import QIcon

import sys


class MainWindow(QMainWindow):
    def __init__(self):
        super().__init__()

        self.setWindowTitle("Hello World")
        layout = QVBoxLayout()
        label = QLabel("My simple app.")
        label.setMargin(10)
        layout.addWidget(label)

        button1 = QPushButton("Hide")
        button1.setIcon(QIcon("icons/hand.png"))
        button1.pressed.connect(self.lower)
        layout.addWidget(button1)

        button2 = QPushButton("Close")
        button2.setIcon(QIcon("icons/lightning.png"))
        button2.pressed.connect(self.close)
        layout.addWidget(button2)

        container = QWidget()
        container.setLayout(layout)

        self.setCentralWidget(container)

        self.show()


if __name__ == '__main__':
    app = QApplication(sys.argv)
    w = MainWindow()
    app.exec_()

In the folder with this script, add a folder icons which contains two icons in PNG format, hand.png and lightning.png. You can create these yourself, or get them from the source code download for this tutorial.

Run the script now and you will see a window showing two buttons with icons.

Window with two buttons with icons.

Even if you don't see the icons, keep reading!

Dealing with relative paths

There is a gotcha here, which might not be immediately apparent. To demonstrate it, open up a shell and change to the folder where our script is located. Run it with

python3 app.py

If the icons are in the correct location, you should see them. Now change to the parent folder, and try and run your script again (change <folder> to the name of the folder your script is in).

cd ..

python3 <folder>/app.py

Window with two buttons with icons missing.

The icons don't appear. What's happening?

We're using relative paths to refer to our data files. These paths are relative to the current working directory -- not the folder your script is in. So if you run the script from elsewhere it won't be able to find the files.
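You can reproduce this behaviour without Qt at all. The sketch below builds a throwaway project folder containing icons/hand.png and checks whether the relative path icons/hand.png resolves from two different working directories. The function names are invented for this example.

```python
import os
import tempfile


def icon_visible_from(cwd, relative_path):
    """Report whether relative_path points at an existing file when run from cwd."""
    previous = os.getcwd()
    os.chdir(cwd)
    try:
        return os.path.exists(relative_path)
    finally:
        # Always restore the original working directory.
        os.chdir(previous)


def demo():
    """Return (found from project folder, found from parent folder)."""
    with tempfile.TemporaryDirectory() as parent:
        project = os.path.join(parent, "project")
        os.makedirs(os.path.join(project, "icons"))
        open(os.path.join(project, "icons", "hand.png"), "wb").close()
        rel = os.path.join("icons", "hand.png")
        return icon_visible_from(project, rel), icon_visible_from(parent, rel)
```

The same relative path is found when the working directory is the project folder and missed when it is the parent folder, which is exactly what happens to the icons.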

One common reason for icons not to show up, is running examples in an IDE which uses the project root as the current working directory.

This is a minor issue before the app is packaged, but once it's installed it will be started with its current working directory as the root / folder -- your app won't be able to find anything. We need to fix this before we go any further, which we can do by making our paths relative to our application folder.

In the updated code below, we define a new variable basedir, using os.path.dirname to get the containing folder of __file__ which holds the full path of the current Python file. We then use this to build the relative paths for icons using os.path.join().

Since our app.py file is in the root of our folder, all other paths are relative to that.

from PyQt5.QtWidgets import QMainWindow, QApplication, QLabel, QVBoxLayout, QPushButton, QWidget
from PyQt5.QtGui import QIcon

import sys, os

basedir = os.path.dirname(__file__)


class MainWindow(QMainWindow):
    def __init__(self):
        super().__init__()

        self.setWindowTitle("Hello World")
        layout = QVBoxLayout()
        label = QLabel("My simple app.")
        label.setMargin(10)
        layout.addWidget(label)

        button1 = QPushButton("Hide")
        button1.setIcon(QIcon(os.path.join(basedir, "icons", "hand.png")))
        button1.pressed.connect(self.lower)
        layout.addWidget(button1)

        button2 = QPushButton("Close")
        button2.setIcon(QIcon(os.path.join(basedir, "icons", "lightning.png")))
        button2.pressed.connect(self.close)
        layout.addWidget(button2)

        container = QWidget()
        container.setLayout(layout)

        self.setCentralWidget(container)

        self.show()


if __name__ == '__main__':
    app = QApplication(sys.argv)
    w = MainWindow()
    app.exec_()

Try and run your app again from the parent folder -- you'll find that the icons now appear as expected on the buttons, no matter where you launch the app from.
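If you end up with many resource paths, repeating os.path.join(basedir, ...) everywhere gets noisy. One possible tidy-up, shown here as a sketch with pathlib, is a small helper that is bound to the script's folder once. The names make_resource_path and resource_path are assumptions for this example, not part of PyQt5 or PyInstaller.

```python
from pathlib import Path


def make_resource_path(script_file):
    """Return a function that resolves paths relative to script_file's folder."""
    basedir = Path(script_file).resolve().parent

    def resource_path(*parts):
        # Return a plain string, which is what QIcon() accepts.
        return str(basedir.joinpath(*parts))

    return resource_path


# In app.py this would be set up once near the top of the file:
#   resource_path = make_resource_path(__file__)
# and then used as:
#   QIcon(resource_path("icons", "hand.png"))
```

This does the same job as the basedir variable above, just wrapped in a function.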

Packaging the icons

So now we have our application showing icons, and they work wherever the application is launched from. Package the application again with pyinstaller "Hello World.spec" and then try and run it again from the dist folder as before. You'll notice the icons are missing again.

Window with two buttons with icons missing.

The problem now is that the icons haven't been copied to the dist/Hello World folder -- take a look in it. Our script expects the icons to be in a specific location relative to it, and if they are not, then nothing will be shown.

This same principle applies to any other data files you package with your application, including Qt Designer UI files, settings files or source data. You need to ensure that relative path structures are replicated after packaging.

Bundling data files with PyInstaller

For the application to continue working after packaging, the files it depends on need to be in the same relative locations.

To get data files into the dist folder we can instruct PyInstaller to copy them over.

PyInstaller accepts a list of individual paths to copy, together with a folder path relative to the dist/<app name> folder where it should copy them. As with other options, this can be specified by command line arguments or in the .spec file.

Files specified on the command line are added using --add-data, passing the source file and destination folder separated by a colon :.

The path separator is platform-specific: Linux or Mac use :, on Windows use ;

pyinstaller --windowed --name="Hello World" --icon="Hello World.icns" --add-data="icons/hand.png:icons" --add-data="icons/lightning.png:icons" app.py

Here we've specified the destination location as icons. The path is relative to the root of our application's folder in dist -- so dist/Hello World with our current app. The path icons means a folder named icons under this location, so dist/Hello World/icons. Putting our icons right where our application expects to find them!
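If you generate the command from a script, you can avoid hard-coding the separator. Python's os.pathsep is : on Linux and macOS and ; on Windows, which matches the separators described above. The following sketch formats the --add-data options; the helper names add_data_arg and icon_args are illustrative only.

```python
import os


def add_data_arg(source, destination, separator=os.pathsep):
    """Format one --add-data option as SOURCE<separator>DESTINATION."""
    return f"--add-data={source}{separator}{destination}"


def icon_args(filenames, folder="icons"):
    """Build one --add-data option per file inside the given folder."""
    return [add_data_arg(f"{folder}/{name}", folder) for name in filenames]
```

For example, icon_args(["hand.png", "lightning.png"]) produces the two --add-data options used in the command above when run on macOS.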

You can also specify data files via the datas list in the Analysis section of the spec file, shown below.

a = Analysis(['app.py'],
             pathex=[],
             binaries=[],
             datas=[('icons/hand.png', 'icons'), ('icons/lightning.png', 'icons')],
             hiddenimports=[],
             hookspath=[],
             runtime_hooks=[],
             excludes=[],
             win_no_prefer_redirects=False,
             win_private_assemblies=False,
             cipher=block_cipher,
             noarchive=False)

Then rebuild from the .spec file with

pyinstaller "Hello World.spec"

In both cases we are telling PyInstaller to copy the specified files to the location ./icons/ in the output folder, meaning dist/Hello World/icons. If you run the build, you should see your .png files are now in the dist output folder, under a folder named icons.

The icon file copied to the dist folder

If you run your app from dist you should now see the icons in your window as expected!

Window with two buttons with icons, finally!
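Rather than opening the dist folder by hand after every build, you could check for the expected files with a few lines of Python. This is only a sketch, and the function name missing_files is made up for the example.

```python
from pathlib import Path


def missing_files(root, expected):
    """Return the expected relative paths that do not exist as files under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_file()]
```

Running missing_files("dist/Hello World", ["icons/hand.png", "icons/lightning.png"]) after a build should return an empty list if both icons were copied across.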

Bundling data folders

Usually you will have more than one data file you want to include with your packaged file. The latest PyInstaller versions let you bundle folders just like you would files, keeping the sub-folder structure.

Let's update our configuration to bundle our icons folder in one go, so it will continue to work even if we add more icons in future.

To copy the icons folder across to our built application, we just need to add the folder to our .spec file Analysis block. As for the single file, we add it as a tuple with the source path (from our project folder) and the destination folder under the resulting folder in dist.

# ...

a = Analysis(['app.py'],
             pathex=[],
             binaries=[],
             datas=[('icons', 'icons')],  # tuple is (source_folder, destination_folder)
             hiddenimports=[],
             hookspath=[],
             hooksconfig={},
             runtime_hooks=[],
             excludes=[],
             win_no_prefer_redirects=False,
             win_private_assemblies=False,
             cipher=block_cipher,
             noarchive=False)

# ...

If you run the build using this spec file you'll see the icons folder copied across to the dist/Hello World folder. If you run the application from the folder, the icons will display as expected -- the relative paths remain correct in the new location.
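Bundling the whole folder copies everything in it. If you only want some of the files, for example just the .png images, then because the spec file is Python you could build the datas list yourself. The sketch below shows one way to do that; collect_datas is a hypothetical helper and it only looks at the top level of the folder, not sub-folders.

```python
from pathlib import Path


def collect_datas(folder, pattern="*.png", dest=None):
    """Return (source, destination) tuples for files in folder matching pattern."""
    folder = Path(folder)
    # By default files are placed in a folder with the same name as the source folder.
    dest = dest or folder.name
    return [
        (path.as_posix(), dest)
        for path in sorted(folder.glob(pattern))
        if path.is_file()
    ]
```

In the spec file this could be used as datas=collect_datas('icons'), which for our two icons gives the same list of tuples we wrote out by hand earlier.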

Alternatively, you can bundle your data files using Qt's QResource architecture. See our tutorial for more information.

Building the App bundle into a Disk Image

So far we've used PyInstaller to bundle the application into a macOS app, along with the associated data files. The output of this bundling process is a folder and a macOS app bundle, named Hello World.app.

If you try and distribute this app bundle, you'll notice a problem: the app bundle is actually just a special folder. While macOS displays it as an application, if you try and share it, you'll actually be sharing hundreds of individual files. To distribute the app properly, we need some way to package it into a single file.

The easiest way to do this is to use a .zip file. You can zip the folder and give this to someone else to unzip on their own computer, giving them a complete app bundle they can copy to their Applications folder.

However, if you've installed macOS applications before you'll know this isn't the usual way to do it. Usually you get a Disk Image (.dmg) file, which when opened shows the application bundle, and a link to your Applications folder. To install the app, you just drag it across to the target.

To make our app look as professional as possible, we should copy this expected behaviour. Next we'll look at how to take our app bundle and package it into a macOS Disk Image.

Making sure the build is ready.

If you've followed the tutorial so far, you'll already have your app ready in the /dist folder. If not, or yours isn't working you can also download the source code files for this tutorial which includes a sample .spec file. As above, you can run the same build using the provided Hello World.spec file.

pyinstaller "Hello World.spec"

This packages everything up as an app bundle in the dist/ folder, with a custom icon. Run the app bundle to ensure everything is bundled correctly, and you should see the same window as before with the icons visible.

Window with two icons, and a button.

Creating a Disk Image

Now we've successfully bundled our application, we'll next look at how we can take our app bundle and use it to create a macOS Disk Image for distribution.

To create our Disk Image we'll be using the create-dmg tool. This is a command-line tool which provides a simple way to build disk images automatically. If you are using Homebrew, you can install create-dmg with the following command.

brew install create-dmg

...otherwise, see the GitHub repository for instructions.

The create-dmg tool takes a lot of options, but below are the most useful.

create-dmg --help

create-dmg 1.0.9
Creates a fancy DMG file.

Usage: create-dmg [options] <output_name.dmg> <source_folder>

All contents of <source_folder> will be copied into the disk image.

Options:
  --volname <name>
      set volume name (displayed in the Finder sidebar and window title)
  --volicon <icon.icns>
      set volume icon
  --background <pic.png>
      set folder background image (provide png, gif, or jpg)
  --window-pos <x> <y>
      set position the folder window
  --window-size <width> <height>
      set size of the folder window
  --text-size <text_size>
      set window text size (10-16)
  --icon-size <icon_size>
      set window icons size (up to 128)
  --icon file_name <x> <y>
      set position of the file's icon
  --hide-extension <file_name>
      hide the extension of file
  --app-drop-link <x> <y>
      make a drop link to Applications, at location x,y
  --no-internet-enable
      disable automatic mount & copy
  --add-file <target_name> <file>|<folder> <x> <y>
      add additional file or folder (can be used multiple times)
  -h, --help
      display this help screen

The most important thing to notice is that the command requires a <source folder> and all contents of that folder will be copied to the Disk Image. So to build the image, we first need to put our app bundle in a folder by itself.

Rather than do this manually each time you want to build a Disk Image I recommend creating a shell script. This ensures the build is reproducible, and makes it easier to configure.

Below is a working script to create a Disk Image from our app. It creates a temporary folder dist/dmg where we'll put the things we want to go in the Disk Image -- in our case, this is just the app bundle, but you can add other files if you like. Then we make sure the folder is empty (in case it still contains files from a previous run). We copy our app bundle into the folder, and finally check to see if there is already a .dmg file in dist and if so, remove it too. Then we're ready to run the create-dmg tool.

#!/bin/sh

# Create a folder (named dmg) to prepare our DMG in (if it doesn't already exist).
mkdir -p dist/dmg

# Empty the dmg folder.
rm -r dist/dmg/*

# Copy the app bundle to the dmg folder.
cp -r "dist/Hello World.app" dist/dmg

# If the DMG already exists, delete it.
test -f "dist/Hello World.dmg" && rm "dist/Hello World.dmg"

create-dmg \
  --volname "Hello World" \
  --volicon "Hello World.icns" \
  --window-pos 200 120 \
  --window-size 600 300 \
  --icon-size 100 \
  --icon "Hello World.app" 175 120 \
  --hide-extension "Hello World.app" \
  --app-drop-link 425 120 \
  "dist/Hello World.dmg" \
  "dist/dmg/"

The options we pass to create-dmg set the dimensions of the Disk Image window when it is opened, and the positions of the icons in it.

Save this shell script in the root of your project, named e.g. builddmg.sh. To make it possible to run, you need to set the execute bit with:

chmod +x builddmg.sh

With that, you can now build a Disk Image for your Hello World app with the command:

./builddmg.sh

This will take a few seconds to run, producing quite a bit of output.

No such file or directory

Creating disk image...

...............................................................

created: /Users/martin/app/dist/rw.Hello World.dmg

Mounting disk image...

Mount directory: /Volumes/Hello World

Device name: /dev/disk2

Making link to Applications dir...

/Volumes/Hello World

Copying volume icon file 'Hello World.icns'...

Running AppleScript to make Finder stuff pretty: /usr/bin/osascript "/var/folders/yf/1qvxtg4d0vz6h2y4czd69tf40000gn/T/createdmg.tmp.XXXXXXXXXX.RvPoqdr0""Hello World"

waited 1 seconds for .DS_STORE to be created.

Done running the AppleScript...

Fixing permissions...

Done fixing permissions

Blessing started

Blessing finished

Deleting .fseventsd

Unmounting disk image...

hdiutil: couldn't unmount "disk2" - Resource busy

Wait a moment...

Unmounting disk image...

"disk2" ejected.

Compressing disk image...

Preparing imaging engine…

Reading Protective Master Boot Record (MBR : 0)…

(CRC32 $38FC6E30: Protective Master Boot Record (MBR : 0))

Reading GPT Header (Primary GPT Header : 1)…

(CRC32 $59C36109: GPT Header (Primary GPT Header : 1))

Reading GPT Partition Data (Primary GPT Table : 2)…

(CRC32 $528491DC: GPT Partition Data (Primary GPT Table : 2))

Reading (Apple_Free : 3)…

(CRC32 $00000000: (Apple_Free : 3))

Reading disk image (Apple_HFS : 4)…

...............................................................................

(CRC32 $FCDC1017: disk image (Apple_HFS : 4))

Reading (Apple_Free : 5)…

...............................................................................

(CRC32 $00000000: (Apple_Free : 5))

Reading GPT Partition Data (Backup GPT Table : 6)…

...............................................................................

(CRC32 $528491DC: GPT Partition Data (Backup GPT Table : 6))

Reading GPT Header (Backup GPT Header : 7)…

...............................................................................

(CRC32 $56306308: GPT Header (Backup GPT Header : 7))

Adding resources…

...............................................................................

Elapsed Time: 3.443s

File size: 23178950 bytes, Checksum: CRC32 $141F3DDC

Sectors processed: 184400, 131460 compressed

Speed: 18.6Mbytes/sec

Savings: 75.4%

created: /Users/martin/app/dist/Hello World.dmg

hdiutil does not support internet-enable. Note it was removed in macOS 10.15.

Disk image done

While it's building, the Disk Image will pop up. Don't get too excited yet, it's still building. Wait for the script to complete, and you will find the finished .dmg file in the dist/ folder.

The Disk Image created in the dist folder
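If you prefer to keep your build tooling in Python, the staging steps from the builddmg.sh script above can be sketched with shutil and pathlib. This is an illustrative equivalent rather than a replacement for the script: the function names are invented here, and it clears the staging folder by deleting and recreating it instead of using rm on its contents.

```python
import shutil
from pathlib import Path


def stage_bundle(dist, app_name):
    """Prepare dist/dmg so it contains only a fresh copy of the app bundle."""
    dist = Path(dist)
    staging = dist / "dmg"
    # Remove anything left over from a previous run, then recreate the folder.
    shutil.rmtree(staging, ignore_errors=True)
    staging.mkdir(parents=True)
    # symlinks=True keeps any symbolic links inside the bundle as links.
    shutil.copytree(dist / f"{app_name}.app", staging / f"{app_name}.app", symlinks=True)
    dmg = dist / f"{app_name}.dmg"
    if dmg.exists():
        dmg.unlink()  # delete a stale disk image
    return staging, dmg


def create_dmg_command(staging, dmg, app_name, volicon):
    """Build the same create-dmg invocation as the shell script, as a list."""
    return [
        "create-dmg",
        "--volname", app_name,
        "--volicon", volicon,
        "--window-pos", "200", "120",
        "--window-size", "600", "300",
        "--icon-size", "100",
        "--icon", f"{app_name}.app", "175", "120",
        "--hide-extension", f"{app_name}.app",
        "--app-drop-link", "425", "120",
        str(dmg),
        str(staging) + "/",
    ]
```

The resulting list can be handed to subprocess.run(command, check=True) to run create-dmg itself.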


Running the installer

Double-click the Disk Image to open it, and you'll see the usual macOS install view. Click and drag your app across to the Applications folder to install it.

The Disk Image contains the app bundle and a shortcut to the applications folder


If you open the Showcase view (press F4) you will see your app installed. If you have a lot of apps, you can search for it by typing "Hello".

The app installed on macOS


Repeating the build

Now you have everything set up, you can create a new app bundle & Disk Image of your application any time, by running the two commands from the command line.

pyinstaller "Hello World.spec"

./builddmg.sh

It's that simple!
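If you would rather trigger both steps from Python, the two commands above can be wrapped in a small helper. This is only a sketch, not part of the original tutorial: the names `BUILD_STEPS` and `rebuild` are made up for illustration, and it assumes `pyinstaller` is on your PATH and that `builddmg.sh` sits in the current directory and is executable.

```python
import subprocess

# The two commands from the tutorial, in the order they must run.
BUILD_STEPS = [
    ["pyinstaller", "Hello World.spec"],
    ["./builddmg.sh"],
]


def rebuild(run=subprocess.run):
    """Run each build step in order, stopping at the first failure."""
    for step in BUILD_STEPS:
        # check=True raises CalledProcessError if a step exits non-zero,
        # so the disk image is never built from a failed bundle.
        run(step, check=True)
```

Calling `rebuild()` from the project folder should then behave like typing the two commands by hand. The `run` parameter exists only so the helper can be exercised without actually starting a build.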

Wrapping up

In this tutorial we've covered how to build your PyQt5 applications into a macOS app bundle using PyInstaller, including adding data files along with your code. Then we walked through the process of creating a Disk Image to distribute your app to others. Following these steps you should be able to package up your own applications and make them available to other people.

For a complete view of all PyInstaller bundling options take a look at the PyInstaller usage documentation.

For more, see the complete PyQt5 tutorial.

Mike Driscoll: PyDev of the Week: Batuhan Taskaya

This week we welcome Batuhan Taskaya (@isidentical) as our PyDev of the Week! Batuhan is a core developer of the Python language. Batuhan is also a maintainer of multiple Python packages including parso and Black.

You can see what else Batuhan is up to by checking out his website or GitHub profile.

Let's take a few moments to get to know Batuhan better!

Can you tell us a little about yourself (hobbies, education, etc):

Hey there! My name is Batuhan, and I'm a software engineer who loves to work on developer tools to improve the overall productivity of the Python ecosystem.

I pretty much fill all my free time with open source maintenance and other programming related activities. If I am not programming at that time, I am probably reading a paper about PLT or watching some sci-fi show. I am a huge fan of the Stargate franchise.

Why did you start using Python?

I was always intrigued by computers but didn't do anything related to programming until I started using GNU/Linux on my personal computer (namely Ubuntu 12.04). Back then, I was searching for something to pass the time and found Python.

Initially, I was mind-blown by the responsiveness of the REPL. I typed `2 + 2`, it replied `4` back to me. Such a joy! For someone with literally zero programming experience, it was a very friendly environment. Later, I started following some tutorials, writing more code and repeating that process until I got a good grasp of the Python language and programming in general.

What other programming languages do you know and which is your favourite?

After being exposed to the elegance and simplicity of Python, I set the bar too high for adopting a new language. C is a great example where the language (on its own terms) is very straightforward, and currently, it is the only language I actively use apart from Python. I also think it goes really well when paired with Python, which might not be surprising considering that CPython itself and its extension modules are written in C.

If we let the mainstream languages go, I love building one-off compilers for weird/esoteric languages.

What projects are you working on now?

Most of my work revolves around CPython, which is the reference implementation of the Python language. In terms of the core, I specialize in the parser and the compiler. But outside of it, I maintain the ast module, and a few others.

One of the recent changes I've collaborated on in CPython (with Pablo Galindo Salgado and Ammar Askar) was the new fancy tracebacks, which I hope will really increase the productivity of Python developers:

Traceback (most recent call last):
  File "query.py", line 37, in <module>
    magic_arithmetic('foo')
    ^^^^^^^^^^^^^^^^^^^^^^^
  File "query.py", line 18, in magic_arithmetic
    return add_counts(x) / 25
           ^^^^^^^^^^^^^
  File "query.py", line 24, in add_counts
    return 25 + query_user(user1) + query_user(user2)
                ^^^^^^^^^^^^^^^^^
  File "query.py", line 32, in query_user
    return 1 + query_count(db, response['a']['b']['c']['user'], retry=True)
                               ~~~~~~~~~~~~~~~~~~^^^^^
TypeError: 'NoneType' object is not subscriptable
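To try this kind of traceback yourself, a small script along these lines should do. It is an illustrative sketch rather than code from the interview: `query_user` and `format_failure` are hypothetical names. Saved to a file and run on Python 3.11 or newer, the printed traceback should include marker lines under the failing expression; older versions print the same traceback without them.

```python
import traceback


def query_user(response):
    # response["a"] is None here, so the second subscript raises TypeError.
    return response["a"]["b"]


def format_failure():
    """Trigger the error and return the formatted traceback as a string."""
    try:
        query_user({"a": None})
    except TypeError:
        return traceback.format_exc()


report = format_failure()
print(report)
```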

Alongside that, I help maintain projects like

and I am a core member of the fsspec.

Which Python libraries are your favorite (core or 3rd party)?

It might be a bit obvious, but I love the ast module. Apart from that, I enjoy using dataclasses and pathlib.

I generally avoid using dependencies since nearly 99% of the time, I can simply use the stdlib. But there is one exception, rich. For the last three months, nearly every script I've written uses it. It is such a beauty (both in terms of the UI and the API). I also really love pytest and pre-commit.

Though it is not a library, one of my favorite projects from the Python ecosystem is PyPy. It brings an entirely new Python runtime, which depending on your work can be 1000X faster (or just 4X in general).

Is there anything else you’d like to say?

I've recently started a GitHub Sponsors Page, and if any of my work directly touches you (or your company) please consider sponsoring me!

Thanks for the interview Mike, and I hope people reading the article enjoyed it as much as I enjoyed answering these questions!

Thanks for doing the interview, Batuhan!

The post PyDev of the Week: Batuhan Taskaya appeared first on Mouse Vs Python.

Matt Layman: Episode 16 - Setting Your Sites

Real Python: Python News: What's New From January 2022?

In January 2022, the code formatter Black saw its first non-beta release and published a new stability policy. IPython, the powerful interactive Python shell, marked the release of version 8.0, its first major version release in three years. Additionally, PEP 665, aimed at making reproducible installs easier by specifying a format for lock files, was rejected. Last but not least, a fifteen-year-old memory leak bug in Python was fixed.

Let’s dive into the biggest Python news stories from the past month!


Black No Longer Beta

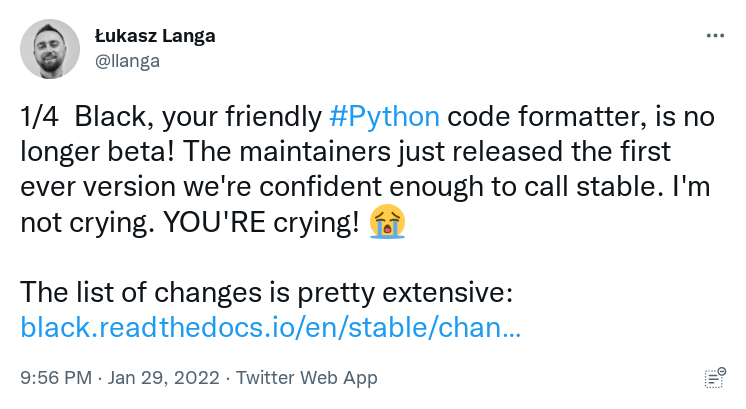

The developers of Black, an opinionated code formatter, are now confident enough to call the latest release stable. This announcement brings Black out of beta for the first time:

Code formatting can be the source of a surprising amount of conflict among developers. This is why code formatters, or linters, help enforce style conventions to maintain consistency across a whole codebase. Linters suggest changes, while code formatters rewrite your code.

This makes your codebase more consistent, helps catch errors early, and makes code easier to scan.

YAPF is an example of a formatter. It comes with the PEP 8 style guide as a default, but it’s not strongly opinionated, giving you a lot of control over its configuration.

Black goes further: it comes with a PEP 8 compliant style, but on the whole, it’s not configurable. The idea behind disallowing configuration is that you free up your brain to focus on the actual code by relinquishing control over style. Many believe this restriction gives them much more freedom to be creative coders. But of course, not everyone likes to give up this control!

One crucial feature of opinionated formatters like Black is that they make your diffs much more informative. If you’ve ever committed a cleanup or formatting commit to your version control system, you may have inadvertently polluted your diff.

Read the full article at https://realpython.com/python-news-january-2022/ »


death and gravity: Dealing with YAML with arbitrary tags in Python

... in which we use PyYAML to safely read and write YAML with any tags, in a way that's as straightforward as interacting with built-in types.

If you're in a hurry, you can find the code at the end.

Contents

- Why is this useful?

- A note on PyYAML extensibility

- Preserving tags

- Unhashable keys

- Conclusion

- Bonus: hashable wrapper

- Bonus: broken YAML

Why is this useful?

People mostly use YAML as a friendlier alternative to JSON[1], but it can do way more.

Among others, it can represent user-defined and native data structures.

Say you need to read (or write) an AWS CloudFormation template:

EC2Instance:
  Type: AWS::EC2::Instance
  Properties:
    ImageId: !FindInMap [
      AWSRegionArch2AMI,
      !Ref 'AWS::Region',
      !FindInMap [AWSInstanceType2Arch, !Ref InstanceType, Arch],
    ]
    InstanceType: !Ref InstanceType

>>> yaml.safe_load(text)
Traceback (most recent call last):
  ...
yaml.constructor.ConstructorError: could not determine a constructor for the tag '!FindInMap'
  in "<unicode string>", line 4, column 14:
        ImageId: !FindInMap [
                 ^

... or, you need to safely read untrusted YAML that represents Python objects:

!!python/object/new:module.Class { attribute: value }

>>> yaml.safe_load(text)
Traceback (most recent call last):
  ...
yaml.constructor.ConstructorError: could not determine a constructor for the tag 'tag:yaml.org,2002:python/object/new:module.Class'
  in "<unicode string>", line 1, column 1:
    !!python/object/new:module.Class ...
    ^

Warning

Historically, yaml.load(thing) was unsafe for untrusted data, because it allowed running arbitrary code. Consider using safe_load() instead.

For example, you could do this:

>>> yaml.load("!!python/object/new:os.system [echo WOOSH. YOU HAVE been compromised]")
WOOSH. YOU HAVE been compromised
0

There were a bunch of CVEs about it.

To address the issue, load() requires an explicit Loader since PyYAML 6.

Also, version 5 added two new functions and corresponding loaders:

- full_load() resolves all tags except those known to be unsafe (note that this was broken before 5.4, and thus vulnerable)

- unsafe_load() resolves all tags, even those known to be unsafe (the old load() behavior)

safe_load() resolves only basic tags, remaining the safest.
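As a rough illustration of the difference, the sketch below feeds a dangerous document to safe_load() and shows that it is refused rather than executed. It assumes PyYAML is installed (`pip install pyyaml`). The helper name `try_safe_load` and the sample strings are made up for this example.

```python
import yaml  # PyYAML, a third-party package

# A document that would run a shell command under the old load() behavior.
UNTRUSTED = "!!python/object/new:os.system [echo pwned]"


def try_safe_load(text):
    """Return the loaded data, or a short message if loading is refused."""
    try:
        return yaml.safe_load(text)
    except yaml.YAMLError as exc:
        # safe_load() has no constructor for python/* tags, so it raises
        # instead of building (or calling) anything.
        return f"rejected: {exc}"


result = try_safe_load(UNTRUSTED)

# Plain data loads fine; passing the Loader explicitly works on PyYAML 5 and 6.
plain = yaml.load("a: [1, 2]", Loader=yaml.SafeLoader)

print(result)
print(plain)
```

Nothing is executed in either call: the first is rejected before any object is constructed, and the second contains only basic tags.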

Can't I just get the data, without it being turned into objects?

You can! The YAML spec says:

In a given processing environment, there need not be an available native type corresponding to a given tag. If a node’s tag is unavailable, a YAML processor will not be able to construct a native data structure for it. In this case, a complete representation may still be composed and an application may wish to use this representation directly.

And PyYAML obliges:

>>> text = """\
... one: !myscalar string
... two: !mysequence [1, 2]
... """
>>> yaml.compose(text)
MappingNode(
    tag='tag:yaml.org,2002:map',
    value=[
        (
            ScalarNode(tag='tag:yaml.org,2002:str', value='one'),
            ScalarNode(tag='!myscalar', value='string'),
        ),
        (
            ScalarNode(tag='tag:yaml.org,2002:str', value='two'),
            SequenceNode(
                tag='!mysequence',
                value=[
                    ScalarNode(tag='tag:yaml.org,2002:int', value='1'),
                    ScalarNode(tag='tag:yaml.org,2002:int', value='2'),
                ],
            ),
        ),
    ],
)
>>> print(yaml.serialize(_))
one: !myscalar 'string'
two: !mysequence [1, 2]

... the spec didn't say the representation has to be concise. ¯\_(ツ)_/¯
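To get a feel for working with the representation directly, here is a minimal sketch that walks a composed node tree and collects the custom tags it finds. It is not the approach the article goes on to build, just an illustration: `custom_tags` is a hypothetical helper, and PyYAML is assumed to be installed.

```python
import yaml  # PyYAML, a third-party package

# Prefix shared by the standard YAML tags (str, int, map, seq, ...).
STANDARD_PREFIX = "tag:yaml.org,2002:"


def custom_tags(node):
    """Yield every tag in the node tree that is not a standard YAML tag."""
    if not node.tag.startswith(STANDARD_PREFIX):
        yield node.tag
    if isinstance(node, yaml.MappingNode):
        # A mapping's value is a list of (key_node, value_node) pairs.
        for key, value in node.value:
            yield from custom_tags(key)
            yield from custom_tags(value)
    elif isinstance(node, yaml.SequenceNode):
        # A sequence's value is a list of child nodes.
        for item in node.value:
            yield from custom_tags(item)


text = "one: !myscalar string\ntwo: !mysequence [1, 2]\n"
tags = list(custom_tags(yaml.compose(text)))
print(tags)
```

Because compose() stops at the representation graph, no constructor is ever looked up for `!myscalar` or `!mysequence`, so the unknown tags cause no error.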