Are there certain parts of Python that just seem magic? Like how are dictionaries so much faster than looping over a list to find an item. How does a generator remember the state of the variables each time it yields a value and why do you never have to allocate memory like other languages? It turns out, CPython, the most popular Python runtime is written in human-readable C and Python code. This tutorial will walk you through the CPython source code.

You’ll cover all the concepts behind the internals of CPython, how they work and visual explanations as you go.

You’ll learn how to:

- Read and navigate the source code

- Compile CPython from source code

- Navigate and comprehend the inner workings of concepts like lists, dictionaries, and generators

- Run the test suite

- Modify or upgrade components of the CPython library to contribute them to future versions

Yes, this is a very long article. If you just made yourself a fresh cup of tea, coffee or your favorite beverage, it’s going to be cold by the end of Part 1.

This tutorial is split into five parts. Take your time for each part and make sure you try out the demos and the interactive components. You can feel a sense of achievement that you grasp the core concepts of Python that can make you a better Python programmer.

Free Bonus:5 Thoughts On Python Mastery, a free course for Python developers that shows you the roadmap and the mindset you'll need to take your Python skills to the next level.

Part 1: Introduction to CPython

When you type python at the console or install a Python distribution from python.org, you are running CPython. CPython is one of the many Python runtimes, maintained and written by different teams of developers. Some other runtimes you may have heard are PyPy, Cython, and Jython.

The unique thing about CPython is that it contains both a runtime and the shared language specification that all Python runtimes use. CPython is the “official,” or reference implementation of Python.

The Python language specification is the document that the description of the Python language. For example, it says that assert is a reserved keyword, and that [] is used for indexing, slicing, and creating empty lists.

Think about what you expect to be inside the Python distribution on your computer:

- When you type

python without a file or module, it gives an interactive prompt. - You can import built-in modules from the standard library like

json. - You can install packages from the internet using

pip. - You can test your applications using the built-in

unittest library.

These are all part of the CPython distribution. There’s a lot more than just a compiler.

Note: This article is written against version 3.8.0b3 of the CPython source code.

What’s in the Source Code?

The CPython source distribution comes with a whole range of tools, libraries, and components. We’ll explore those in this article. First we are going to focus on the compiler.

To download a copy of the CPython source code, you can use git to pull the latest version to a working copy locally:

git clone https://github.com/python/cpython

Note: If you don’t have Git available, you can download the source in a ZIP file directly from the GitHub website.

Inside of the newly downloaded cpython directory, you will find the following subdirectories:

cpython/

│

├── Doc ← Source for the documentation

├── Grammar ← The a computer-readable language definition

├── Include ← The C header files

├── Lib ← Standard library modules written in Python

├── Mac ← macOS support files

├── Misc ← Miscellaneous files

├── Modules ← Standard Library Modules written in C

├── Objects ← Core types and the object model

├── Parser ← The Python parser source code

├── PC ← Windows build support files

├── PCbuild ← Windows build support files for older Windows versions

├── Programs ← Source code for the python executable and other binaries

├── Python ← The CPython interpreter source code

└── Tools ← Standalone tools useful for building or extending Python

Next, we’ll compile CPython from the source code. This step requires a C compiler, and some build tools, which depend on the operating system you’re using.

Compiling CPython (macOS)

Compiling CPython on macOS is straightforward. You will first need the essential C compiler toolkit. The Command Line Development Tools is an app that you can update in macOS through the App Store. You need to perform the initial installation on the terminal.

To open up a terminal in macOS, go to the Launchpad, then Other then choose the Terminal app. You will want to save this app to your Dock, so right-click the Icon and select Keep in Dock.

Now, within the terminal, install the C compiler and toolkit by running the following:

This command will pop up with a prompt to download and install a set of tools, including Git, Make, and the GNU C compiler.

You will also need a working copy of OpenSSL to use for fetching packages from the PyPi.org website. If you later plan on using this build to install additional packages, SSL validation is required.

The simplest way to install OpenSSL on macOS is by using HomeBrew. If you already have HomeBrew installed, you can install the dependencies for CPython with the brew install command:

$ brew install openssl xz zlib

Now that you have the dependencies, you can run the configure script, enabling SSL support by discovering the location that HomeBrew installed to and enabling the debug hooks --with-pydebug:

$CPPFLAGS="-I$(brew --prefix zlib)/include"\LDFLAGS="-L$(brew --prefix zlib)/lib"\

./configure --with-openssl=$(brew --prefix openssl) --with-pydebug

This will generate a Makefile in the root of the repository that you can use to automate the build process. The ./configure step only needs to be run once. You can build the CPython binary by running:

The -j2 flag allows make to run 2 jobs simultaneously. If you have 4 cores, you can change this to 4. The -s flag stops the Makefile from printing every command it runs to the console. You can remove this, but the output is very verbose.

During the build, you may receive some errors, and in the summary, it will notify you that not all packages could be built. For example, _dbm, _sqlite3, _uuid, nis, ossaudiodev, spwd, and _tkinter would fail to build with this set of instructions. That’s okay if you aren’t planning on developing against those packages. If you are, then check out the dev guide website for more information.

The build will take a few minutes and generate a binary called python.exe. Every time you make changes to the source code, you will need to re-run make with the same flags.

The python.exe binary is the debug binary of CPython. Execute python.exe to see a working REPL:

$ ./python.exe

Python 3.8.0b3 (tags/v3.8.0b3:4336222407, Aug 21 2019, 10:00:03) [Clang 10.0.1 (clang-1001.0.46.4)] on darwinType "help", "copyright", "credits" or "license" for more information.>>>

Note:

Yes, that’s right, the macOS build has a file extension for .exe. This is not because it’s a Windows binary. Because macOS has a case-insensitive filesystem and when working with the binary, the developers didn’t want people to accidentally refer to the directory Python/ so .exe was appended to avoid ambiguity.

If you later run make install or make altinstall, it will rename the file back to python.

Compiling CPython (Linux)

For Linux, the first step is to download and install make, gcc, configure, and pkgconfig.

For Fedora Core, RHEL, CentOS, or other yum-based systems:

$ sudo yum install yum-utils

For Debian, Ubuntu, or other apt-based systems:

$ sudo apt install build-essential

Then install the required packages, for Fedora Core, RHEL, CentOS or other yum-based systems:

$ sudo yum-builddep python3

For Debian, Ubuntu, or other apt-based systems:

$ sudo apt install libssl-dev zlib1g-dev libncurses5-dev \

libncursesw5-dev libreadline-dev libsqlite3-dev libgdbm-dev \

libdb5.3-dev libbz2-dev libexpat1-dev liblzma-dev libffi-dev

Now that you have the dependencies, you can run the configure script, enabling the debug hooks --with-pydebug:

$ ./configure --with-pydebug

Review the output to ensure that OpenSSL support was marked as YES. Otherwise, check with your distribution for instructions on installing the headers for OpenSSL.

Next, you can build the CPython binary by running the generated Makefile:

During the build, you may receive some errors, and in the summary, it will notify you that not all packages could be built. That’s okay if you aren’t planning on developing against those packages. If you are, then check out the dev guide website for more information.

The build will take a few minutes and generate a binary called python. This is the debug binary of CPython. Execute ./python to see a working REPL:

$ ./python

Python 3.8.0b3 (tags/v3.8.0b3:4336222407, Aug 21 2019, 10:00:03) [Clang 10.0.1 (clang-1001.0.46.4)] on darwinType "help", "copyright", "credits" or "license" for more information.>>>

Compiling CPython (Windows)

Inside the PC folder is a Visual Studio project file for building and exploring CPython. To use this, you need to have Visual Studio installed on your PC.

The newest version of Visual Studio, Visual Studio 2019, makes it easier to work with Python and the CPython source code, so it is recommended for use in this tutorial. If you already have Visual Studio 2017 installed, that would also work fine.

None of the paid features are required for compiling CPython or this tutorial. You can use the Community edition of Visual Studio, which is available for free from Microsoft’s Visual Studio website.

Once you’ve downloaded the installer, you’ll be asked to select which components you want to install. The bare minimum for this tutorial is:

- The Python Development workload

- The optional Python native development tools

- Python 3 64-bit (3.7.2) (can be deselected if you already have Python 3.7 installed)

Any other optional features can be deselected if you want to be more conscientious with disk space:

![Visual Studio Options Window]()

The installer will then download and install all of the required components. The installation could take an hour, so you may want to read on and come back to this section.

Once the installer has completed, click the Launch button to start Visual Studio. You will be prompted to sign in. If you have a Microsoft account you can log in, or skip that step.

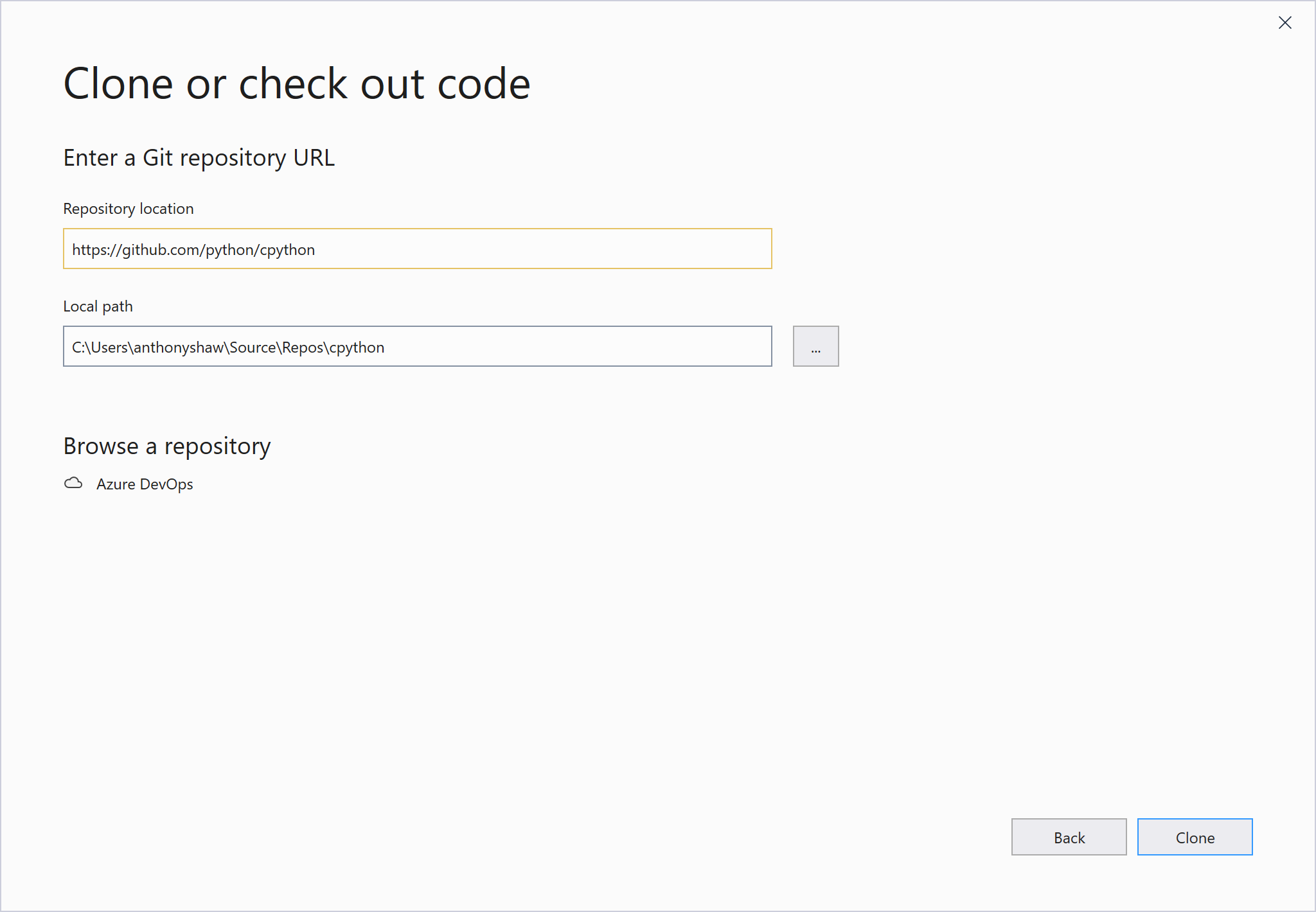

Once Visual Studio starts, you will be prompted to Open a Project. A shortcut to getting started with the Git configuration and cloning CPython is to choose the Clone or check out code option:

![Choosing a Project Type in Visual Studio]()

For the project URL, type https://github.com/python/cpython to clone:

![Cloning projects in Visual Studio]()

Visual Studio will then download a copy of CPython from GitHub using the version of Git bundled with Visual Studio. This step also saves you the hassle of having to install Git on Windows. The download may take 10 minutes.

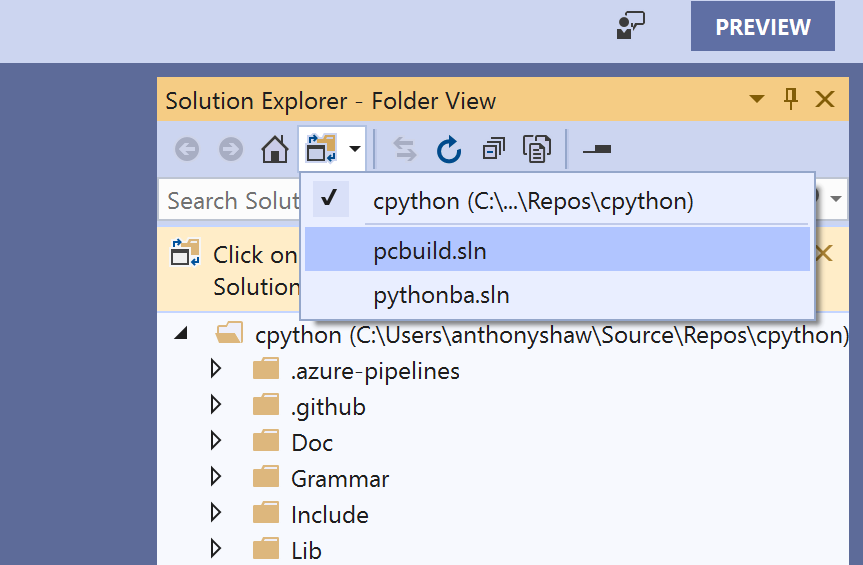

Once the project has downloaded, you need to point it to the pcbuild Solution file, by clicking on Solutions and Projects and selecting pcbuild.sln:

![Selecting a solution]()

When the solution is loaded, it will prompt you to retarget the project’s inside the solution to the version of the C/C++ compiler you have installed. Visual Studio will also target the version of the Windows SDK you have installed.

Ensure that you change the Windows SDK version to the newest installed version and the platform toolset to the latest version. If you missed this window, you can right-click on the Solution in the Solutions and Projects window and click Retarget Solution.

Once this is complete, you need to download some source files to be able to build the whole CPython package. Inside the PCBuild folder there is a .bat file that automates this for you. Open up a command-line prompt inside the downloaded PCBuild and run get_externals.bat:

> get_externals.batUsing py -3.7 (found 3.7 with py.exe)Fetching external libraries...Fetching bzip2-1.0.6...Fetching sqlite-3.21.0.0...Fetching xz-5.2.2...Fetching zlib-1.2.11...Fetching external binaries...Fetching openssl-bin-1.1.0j...Fetching tcltk-8.6.9.0...Finished.

Next, back within Visual Studio, build CPython by pressing Ctrl+Shift+B, or choosing Build Solution from the top menu. If you receive any errors about the Windows SDK being missing, make sure you set the right targeting settings in the Retarget Solution window. You should also see Windows Kits inside your Start Menu, and Windows Software Development Kit inside of that menu.

The build stage could take 10 minutes or more for the first time. Once the build is completed, you may see a few warnings that you can ignore and eventual completion.

To start the debug version of CPython, press F5 and CPython will start in Debug mode straight into the REPL:

![CPython debugging Windows]()

Once this is completed, you can run the Release build by changing the build configuration from Debug to Release on the top menu bar and rerunning Build Solution again.

You now have both Debug and Release versions of the CPython binary within PCBuild\win32\.

You can set up Visual Studio to be able to open a REPL with either the Release or Debug build by choosing Tools->Python->Python Environments from the top menu:

![Choosing Python environments]()

Then click Add Environment and then target the Debug or Release binary. The Debug binary will end in _d.exe, for example, python_d.exe and pythonw_d.exe. You will most likely want to use the debug binary as it comes with Debugging support in Visual Studio and will be useful for this tutorial.

In the Add Environment window, target the python_d.exe file as the interpreter inside the PCBuild/win32 and the pythonw_d.exe as the windowed interpreter:

![Adding an environment in VS2019]()

Now, you can start a REPL session by clicking Open Interactive Window in the Python Environments window and you will see the REPL for the compiled version of Python:

![Python Environment REPL]()

During this tutorial there will be REPL sessions with example commands. I encourage you to use the Debug binary to run these REPL sessions in case you want to put in any breakpoints within the code.

Lastly, to make it easier to navigate the code, in the Solution View, click on the toggle button next to the Home icon to switch to Folder view:

![Switching Environment Mode]()

Now you have a version of CPython compiled and ready to go, let’s find out how the CPython compiler works.

What Does a Compiler Do?

The purpose of a compiler is to convert one language into another. Think of a compiler like a translator. You would hire a translator to listen to you speaking in English and then speak in Japanese:

![Translating from English to Japanese]()

Some compilers will compile into a low-level machine code which can be executed directly on a system. Other compilers will compile into an intermediary language, to be executed by a virtual machine.

One important decision to make when choosing a compiler is the system portability requirements. Java and .NET CLR will compile into an Intermediary Language so that the compiled code is portable across multiple systems architectures. C, Go, C++, and Pascal will compile into a low-level executable that will only work on systems similar to the one it was compiled.

Because Python applications are typically distributed as source code, the role of the Python runtime is to convert the Python source code and execute it in one step. Internally, the CPython runtime does compile your code. A popular misconception is that Python is an interpreted language. It is actually compiled.

Python code is not compiled into machine-code. It is compiled into a special low-level intermediary language called bytecode that only CPython understands. This code is stored in .pyc files in a hidden directory and cached for execution. If you run the same Python application twice without changing the source code, it’ll always be much faster the second time. This is because it loads the compiled bytecode and executes it directly.

Why Is CPython Written in C and Not Python?

The C in CPython is a reference to the C programming language, implying that this Python distribution is written in the C language.

This statement is largely true: the compiler in CPython is written in pure C. However, many of the standard library modules are written in pure Python or a combination of C and Python.

So why is CPython written in C and not Python?

The answer is located in how compilers work. There are two types of compiler:

- Self-hosted compilers are compilers written in the language they compile, such as the Go compiler.

- Source-to-source compilers are compilers written in another language that already have a compiler.

If you’re writing a new programming language from scratch, you need an executable application to compile your compiler! You need a compiler to execute anything, so when new languages are developed, they’re often written first in an older, more established language.

A good example would be the Go programming language. The first Go compiler was written in C, then once Go could be compiled, the compiler was rewritten in Go.

CPython kept its C heritage: many of the standard library modules, like the ssl module or the sockets module, are written in C to access low-level operating system APIs.

The APIs in the Windows and Linux kernels for creating network sockets, working with the filesystem or interacting with the display are all written in C. It made sense for Python’s extensibility layer to be focused on the C language. Later in this article, we will cover the Python Standard Library and the C modules.

There is a Python compiler written in Python called PyPy. PyPy’s logo is an Ouroboros to represent the self-hosting nature of the compiler.

Another example of a cross-compiler for Python is Jython. Jython is written in Java and compiles from Python source code into Java bytecode. In the same way that CPython makes it easy to import C libraries and use them from Python, Jython makes it easy to import and reference Java modules and classes.

The Python Language Specification

Contained within the CPython source code is the definition of the Python language. This is the reference specification used by all the Python interpreters.

The specification is in both human-readable and machine-readable format. Inside the documentation is a detailed explanation of the Python language, what is allowed, and how each statement should behave.

Documentation

Located inside the Doc/reference directory are reStructuredText explanations of each of the features in the Python language. This forms the official Python reference guide on docs.python.org.

Inside the directory are the files you need to understand the whole language, structure, and keywords:

cpython/Doc/reference

|

├── compound_stmts.rst

├── datamodel.rst

├── executionmodel.rst

├── expressions.rst

├── grammar.rst

├── import.rst

├── index.rst

├── introduction.rst

├── lexical_analysis.rst

├── simple_stmts.rst

└── toplevel_components.rst

Inside compound_stmts.rst, the documentation for compound statements, you can see a simple example defining the with statement.

The with statement can be used in multiple ways in Python, the simplest being the instantiation of a context-manager and a nested block of code:

You can assign the result to a variable using the as keyword:

You can also chain context managers together with a comma:

Next, we’ll explore the computer-readable documentation of the Python language.

Grammar

The documentation contains the human-readable specification of the language, and the machine-readable specification is housed in a single file, Grammar/Grammar.

The Grammar file is written in a context-notation called Backus-Naur Form (BNF). BNF is not specific to Python and is often used as the notation for grammars in many other languages.

The concept of grammatical structure in a programming language is inspired by Noam Chomsky’s work on Syntactic Structures in the 1950s!

Python’s grammar file uses the Extended-BNF (EBNF) specification with regular-expression syntax. So, in the grammar file you can use:

* for repetition+ for at-least-once repetition[] for optional parts| for alternatives() for grouping

If you search for the with statement in the grammar file, at around line 80 you’ll see the definitions for the with statement:

with_stmt: 'with' with_item (',' with_item)* ':' suite

with_item: test ['as' expr]

Anything in quotes is a string literal, which is how keywords are defined. So the with_stmt is specified as:

- Starting with the word

with - Followed by a

with_item, which is a test and (optionally), the word as, and an expression - Following one or many items, each separated by a comma

- Ending with a

: - Followed by a

suite

There are references to some other definitions in these two lines:

suite refers to a block of code with one or multiple statementstest refers to a simple statement that is evaluatedexpr refers to a simple expression

If you want to explore those in detail, the whole of the Python grammar is defined in this single file.

If you want to see a recent example of how grammar is used, in PEP 572 the colon equals operator was added to the grammar file in this Git commit.

Using pgen

The grammar file itself is never used by the Python compiler. Instead, a parser table created by a tool called pgen is used. pgen reads the grammar file and converts it into a parser table. If you make changes to the grammar file, you must regenerate the parser table and recompile Python.

Note: The pgen application was rewritten in Python 3.8 from C to pure Python.

To see pgen in action, let’s change part of the Python grammar. Around line 51 you will see the definition of a pass statement:

Change that line to accept the keyword 'pass' or 'proceed' as keywords:

pass_stmt: 'pass' | 'proceed'

Now you need to rebuild the grammar files.

On macOS and Linux, run make regen-grammar to run pgen over the altered grammar file. For Windows, there is no officially supported way of running pgen. However, you can clone my fork and run build.bat --regen from within the PCBuild directory.

You should see an output similar to this, showing that the new Include/graminit.h and Python/graminit.c files have been generated:

# Regenerate Doc/library/token-list.inc from Grammar/Tokens

# using Tools/scripts/generate_token.py

...

python3 ./Tools/scripts/update_file.py ./Include/graminit.h ./Include/graminit.h.new

python3 ./Tools/scripts/update_file.py ./Python/graminit.c ./Python/graminit.c.new

With the regenerated parser tables, you need to recompile CPython to see the new syntax. Use the same compilation steps you used earlier for your operating system.

If the code compiled successfully, you can execute your new CPython binary and start a REPL.

In the REPL, you can now try defining a function and instead of using the pass statement, use the proceed keyword alternative that you compiled into the Python grammar:

Python 3.8.0b3 (tags/v3.8.0b3:4336222407, Aug 21 2019, 10:00:03)

[Clang 10.0.1 (clang-1001.0.46.4)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>> def example():

... proceed

...

>>> example()

Well done! You’ve changed the CPython syntax and compiled your own version of CPython. Ship it!

Next, we’ll explore tokens and their relationship to grammar.

Tokens

Alongside the grammar file in the Grammar folder is a Tokens file, which contains each of the unique types found as a leaf node in a parse tree. We will cover parser trees in depth later.

Each token also has a name and a generated unique ID. The names are used to make it simpler to refer to in the tokenizer.

Note: The Tokens file is a new feature in Python 3.8.

For example, the left parenthesis is called LPAR, and semicolons are called SEMI. You’ll see these tokens later in the article:

LPAR '('

RPAR ')'

LSQB '['

RSQB ']'

COLON ':'

COMMA ','

SEMI ';'

As with the Grammar file, if you change the Tokens file, you need to run pgen again.

To see tokens in action, you can use the tokenize module in CPython. Create a simple Python script called test_tokens.py:

# Hello world!defmy_function():proceed

For the rest of this tutorial, ./python.exe will refer to the compiled version of CPython. However, the actual command will depend on your system.

For Windows:

For Linux:

For macOS:

Then pass this file through a module built into the standard library called tokenize. You will see the list of tokens, by line and character. Use the -e flag to output the exact token name:

$ ./python.exe -m tokenize -e test_tokens.py

0,0-0,0: ENCODING 'utf-8' 1,0-1,14: COMMENT '# Hello world!'1,14-1,15: NL '\n' 2,0-2,3: NAME 'def' 2,4-2,15: NAME 'my_function' 2,15-2,16: LPAR '(' 2,16-2,17: RPAR ')' 2,17-2,18: COLON ':' 2,18-2,19: NEWLINE '\n' 3,0-3,3: INDENT ' ' 3,3-3,7: NAME 'proceed' 3,7-3,8: NEWLINE '\n' 4,0-4,0: DEDENT '' 4,0-4,0: ENDMARKER '' In the output, the first column is the range of the line/column coordinates, the second column is the name of the token, and the final column is the value of the token.

In the output, the tokenize module has implied some tokens that were not in the file. The ENCODING token for utf-8, and a blank line at the end, giving DEDENT to close the function declaration and an ENDMARKER to end the file.

It is best practice to have a blank line at the end of your Python source files. If you omit it, CPython adds it for you, with a tiny performance penalty.

The tokenize module is written in pure Python and is located in Lib/tokenize.py within the CPython source code.

Important: There are two tokenizers in the CPython source code: one written in Python, demonstrated here, and another written in C.

The tokenizer written in Python is meant as a utility, and the one written in C is used by the Python compiler. They have identical output and behavior. The version written in C is designed for performance and the module in Python is designed for debugging.

To see a verbose readout of the C tokenizer, you can run Python with the -d flag. Using the test_tokens.py script you created earlier, run it with the following:

$ ./python.exe -d test_tokens.py

Token NAME/'def' ... It's a keyword DFA 'file_input', state 0: Push 'stmt' DFA 'stmt', state 0: Push 'compound_stmt' DFA 'compound_stmt', state 0: Push 'funcdef' DFA 'funcdef', state 0: Shift.Token NAME/'my_function' ... It's a token we know DFA 'funcdef', state 1: Shift.Token LPAR/'(' ... It's a token we know DFA 'funcdef', state 2: Push 'parameters' DFA 'parameters', state 0: Shift.Token RPAR/')' ... It's a token we know DFA 'parameters', state 1: Shift. DFA 'parameters', state 2: Direct pop.Token COLON/':' ... It's a token we know DFA 'funcdef', state 3: Shift.Token NEWLINE/'' ... It's a token we know DFA 'funcdef', state 5: [switch func_body_suite to suite] Push 'suite' DFA 'suite', state 0: Shift.Token INDENT/'' ... It's a token we know DFA 'suite', state 1: Shift.Token NAME/'proceed' ... It's a keyword DFA 'suite', state 3: Push 'stmt'... ACCEPT.In the output, you can see that it highlighted proceed as a keyword. In the next chapter, we’ll see how executing the Python binary gets to the tokenizer and what happens from there to execute your code.

Now that you have an overview of the Python grammar and the relationship between tokens and statements, there is a way to convert the pgen output into an interactive graph.

Here is a screenshot of the Python 3.8a2 grammar:

![Python 3.8 DFA node graph]()

The Python package used to generate this graph, instaviz, will be covered in a later chapter.

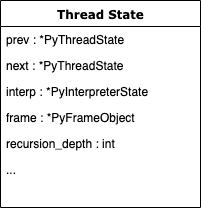

Memory Management in CPython

Throughout this article, you will see references to a PyArena object. The arena is one of CPython’s memory management structures. The code is within Python/pyarena.c and contains a wrapper around C’s memory allocation and deallocation functions.

In a traditionally written C program, the developer should allocate memory for data structures before writing into that data. This allocation marks the memory as belonging to the process with the operating system.

It is also up to the developer to deallocate, or “free,” the allocated memory when its no longer being used and return it to the operating system’s block table of free memory.

If a process allocates memory for a variable, say within a function or loop, when that function has completed, the memory is not automatically given back to the operating system in C. So if it hasn’t been explicitly deallocated in the C code, it causes a memory leak. The process will continue to take more memory each time that function runs until eventually, the system runs out of memory, and crashes!

Python takes that responsibility away from the programmer and uses two algorithms: a reference counter and a garbage collector.

Whenever an interpreter is instantiated, a PyArena is created and attached one of the fields in the interpreter. During the lifecycle of a CPython interpreter, many arenas could be allocated. They are connected with a linked list. The arena stores a list of pointers to Python Objects as a PyListObject. Whenever a new Python object is created, a pointer to it is added using PyArena_AddPyObject(). This function call stores a pointer in the arena’s list, a_objects.

The PyArena serves a second function, which is to allocate and reference a list of raw memory blocks. For example, a PyList would need extra memory if you added thousands of additional values. The PyList object’s C code does not allocate memory directly. The object gets raw blocks of memory from the PyArena by calling PyArena_Malloc() from the PyObject with the required memory size. This task is completed by another abstraction in Objects/oballoc.c. In the object allocation module, memory can be allocated, freed, and reallocated for a Python Object.

A linked list of allocated blocks is stored inside the arena, so that when an interpreter is stopped, all managed memory blocks can be deallocated in one go using PyArena_Free().

Take the PyListObject example. If you were to .append() an object to the end of a Python list, you don’t need to reallocate the memory used in the existing list beforehand. The .append() method calls list_resize() which handles memory allocation for lists. Each list object keeps a list of the amount of memory allocated. If the item you’re appending will fit inside the existing free memory, it is simply added. If the list needs more memory space, it is expanded. Lists are expanded in length as 0, 4, 8, 16, 25, 35, 46, 58, 72, 88.

PyMem_Realloc() is called to expand the memory allocated in a list. PyMem_Realloc() is an API wrapper for pymalloc_realloc().

Python also has a special wrapper for the C call malloc(), which sets the max size of the memory allocation to help prevent buffer overflow errors (See PyMem_RawMalloc()).

In summary:

- Allocation of raw memory blocks is done via

PyMem_RawAlloc(). - The pointers to Python objects are stored within the

PyArena. PyArena also stores a linked-list of allocated memory blocks.

More information on the API is detailed on the CPython documentation.

Reference Counting

To create a variable in Python, you have to assign a value to a uniquely named variable:

Whenever a value is assigned to a variable in Python, the name of the variable is checked within the locals and globals scope to see if it already exists.

Because my_variable is not already within the locals() or globals() dictionary, this new object is created, and the value is assigned as being the numeric constant 180392.

There is now one reference to my_variable, so the reference counter for my_variable is incremented by 1.

You will see function calls Py_INCREF() and Py_DECREF() throughout the C source code for CPython. These functions increment and decrement the count of references to that object.

References to an object are decremented when a variable falls outside of the scope in which it was declared. Scope in Python can refer to a function or method, a comprehension, or a lambda function. These are some of the more literal scopes, but there are many other implicit scopes, like passing variables to a function call.

The handling of incrementing and decrementing references based on the language is built into the CPython compiler and the core execution loop, ceval.c, which we will cover in detail later in this article.

Whenever Py_DECREF() is called, and the counter becomes 0, the PyObject_Free() function is called. For that object PyArena_Free() is called for all of the memory that was allocated.

Garbage Collection

How often does your garbage get collected? Weekly, or fortnightly?

When you’re finished with something, you discard it and throw it in the trash. But that trash won’t get collected straight away. You need to wait for the garbage trucks to come and pick it up.

CPython has the same principle, using a garbage collection algorithm. CPython’s garbage collector is enabled by default, happens in the background and works to deallocate memory that’s been used for objects which are no longer in use.

Because the garbage collection algorithm is a lot more complex than the reference counter, it doesn’t happen all the time, otherwise, it would consume a huge amount of CPU resources. It happens periodically, after a set number of operations.

CPython’s standard library comes with a Python module to interface with the arena and the garbage collector, the gc module. Here’s how to use the gc module in debug mode:

>>>>>> importgc>>> gc.set_debug(gc.DEBUG_STATS)

This will print the statistics whenever the garbage collector is run.

You can get the threshold after which the garbage collector is run by calling get_threshold():

>>>>>> gc.get_threshold()(700, 10, 10)

You can also get the current threshold counts:

>>>>>> gc.get_count()(688, 1, 1)

Lastly, you can run the collection algorithm manually:

This will call collect() inside the Modules/gcmodule.c file which contains the implementation of the garbage collector algorithm.

Conclusion

In Part 1, you covered the structure of the source code repository, how to compile from source, and the Python language specification. These core concepts will be critical in Part 2 as you dive deeper into the Python interpreter process.

Part 2: The Python Interpreter Process

Now that you’ve seen the Python grammar and memory management, you can follow the process from typing python to the part where your code is executed.

There are five ways the python binary can be called:

- To run a single command with

-c and a Python command - To start a module with

-m and the name of a module - To run a file with the filename

- To run the

stdin input using a shell pipe - To start the REPL and execute commands one at a time

The three source files you need to inspect to see this process are:

Programs/python.c is a simple entry point.Modules/main.c contains the code to bring together the whole process, loading configuration, executing code and clearing up memory.Python/initconfig.c loads the configuration from the system environment and merges it with any command-line flags.

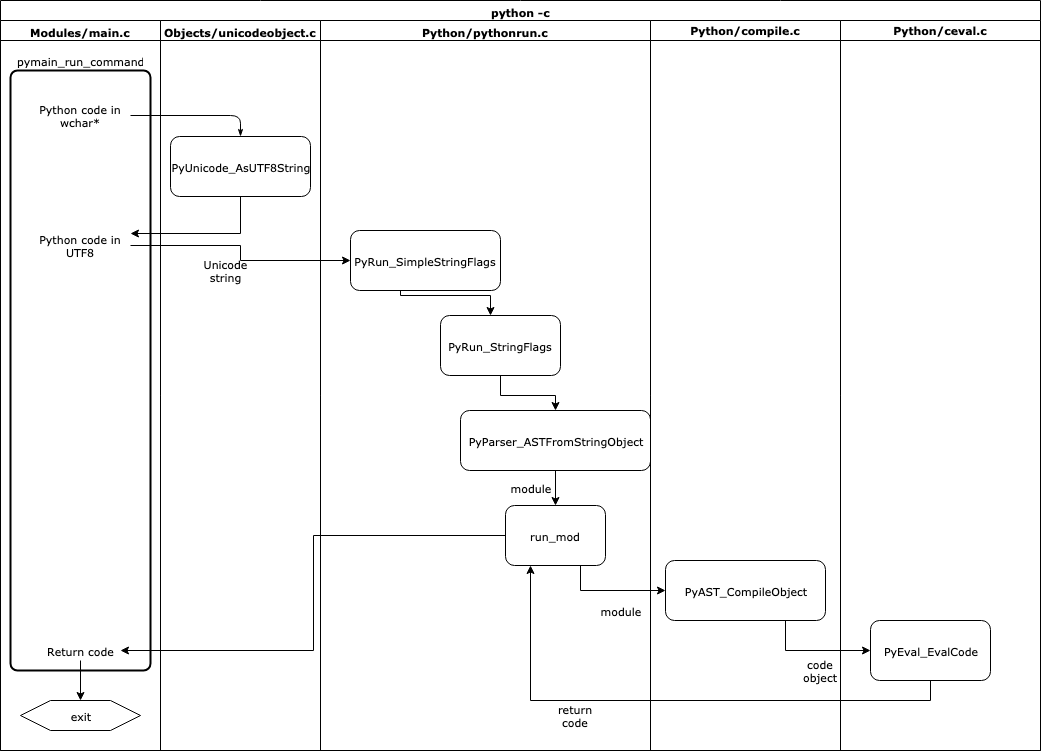

This diagram shows how each of those functions is called:

![Python run swim lane diagram]()

The execution mode is determined from the configuration.

The CPython source code style:

There is an official style guide for the CPython C code, designed originally in 2001 and updated for modern versions.

There are some naming standards which help when navigating the source code:

Use a Py prefix for public functions, never for static functions. The Py_ prefix is reserved for global service routines like Py_FatalError. Specific groups of routines (like specific object type APIs) use a longer prefix, such as PyString_ for string functions.

Public functions and variables use MixedCase with underscores, like this: PyObject_GetAttr, Py_BuildValue, PyExc_TypeError.

Occasionally an “internal” function has to be visible to the loader. We use the _Py prefix for this, for example, _PyObject_Dump.

Macros should have a MixedCase prefix and then use upper case, for example PyString_AS_STRING, Py_PRINT_RAW.

Establishing Runtime Configuration

![Python run swim lane diagram]()

In the swimlanes, you can see that before any Python code is executed, the runtime first establishes the configuration.

The configuration of the runtime is a data structure defined in Include/cpython/initconfig.h named PyConfig.

The configuration data structure includes things like:

- Runtime flags for various modes like debug and optimized mode

- The execution mode, such as whether a filename was passed,

stdin was provided or a module name - Extended option, specified by

-X <option> - Environment variables for runtime settings

The configuration data is primarily used by the CPython runtime to enable and disable various features.

Python also comes with several Command Line Interface Options. In Python you can enable verbose mode with the -v flag. In verbose mode, Python will print messages to the screen when modules are loaded:

$ ./python.exe -v -c "print('hello world')"# installing zipimport hook

import zipimport # builtin# installed zipimport hook

...You will see a hundred lines or more with all the imports of your user site-packages and anything else in the system environment.

You can see the definition of this flag within Include/cpython/initconfig.h inside the struct for PyConfig:

/* --- PyConfig ---------------------------------------------- */typedefstruct{int_config_version;/* Internal configuration version, used for ABI compatibility */int_config_init;/* _PyConfigInitEnum value */.../* If greater than 0, enable the verbose mode: print a message each time a module is initialized, showing the place (filename or built-in module) from which it is loaded. If greater or equal to 2, print a message for each file that is checked for when searching for a module. Also provides information on module cleanup at exit. Incremented by the -v option. Set by the PYTHONVERBOSE environment variable. If set to -1 (default), inherit Py_VerboseFlag value. */intverbose;In Python/coreconfig.c, the logic for reading settings from environment variables and runtime command-line flags is established.

In the config_read_env_vars function, the environment variables are read and used to assign the values for the configuration settings:

staticPyStatusconfig_read_env_vars(PyConfig*config){PyStatusstatus;intuse_env=config->use_environment;/* Get environment variables */_Py_get_env_flag(use_env,&config->parser_debug,"PYTHONDEBUG");_Py_get_env_flag(use_env,&config->verbose,"PYTHONVERBOSE");_Py_get_env_flag(use_env,&config->optimization_level,"PYTHONOPTIMIZE");_Py_get_env_flag(use_env,&config->inspect,"PYTHONINSPECT");For the verbose setting, you can see that the value of PYTHONVERBOSE is used to set the value of &config->verbose, if PYTHONVERBOSE is found. If the environment variable does not exist, then the default value of -1 will remain.

Then in config_parse_cmdline within coreconfig.c again, the command-line flag is used to set the value, if provided:

staticPyStatusconfig_parse_cmdline(PyConfig*config,PyWideStringList*warnoptions,Py_ssize_t*opt_index){...switch(c){...case'v':config->verbose++;break;.../* This space reserved for other options */default:/* unknown argument: parsing failed */config_usage(1,program);return_PyStatus_EXIT(2);}}while(1);This value is later copied to a global variable Py_VerboseFlag by the _Py_GetGlobalVariablesAsDict function.

Within a Python session, you can access the runtime flags, like verbose mode, quiet mode, using the sys.flags named tuple.

The -X flags are all available inside the sys._xconfig dictionary:

>>>$ ./python.exe -X dev -q >>> importsys>>> sys.flagssys.flags(debug=0, inspect=0, interactive=0, optimize=0, dont_write_bytecode=0, no_user_site=0, no_site=0, ignore_environment=0, verbose=0, bytes_warning=0, quiet=1, hash_randomization=1, isolated=0, dev_mode=True, utf8_mode=0)>>> sys._xoptions{'dev': True} As well as the runtime configuration in coreconfig.h, there is also the build configuration, which is located inside pyconfig.h in the root folder. This file is created dynamically in the configure step in the build process, or by Visual Studio for Windows systems.

You can see the build configuration by running:

$ ./python.exe -m sysconfig

Once CPython has the runtime configuration and the command-line arguments, it can establish what it needs to execute.

This task is handled by the pymain_main function inside Modules/main.c. Depending on the newly created config instance, CPython will now execute code provided via several options.

The simplest is providing CPython a command with the -c option and a Python program inside quotes.

For example:

$ ./python.exe -c "print('hi')"hiHere is the full flowchart of how this happens:

![Flow chart of pymain_run_command]()

First, the pymain_run_command() function is executed inside Modules/main.c taking the command passed in -c as an argument in the C type wchar_t*. The wchar_t* type is often used as a low-level storage type for Unicode data across CPython as the size of the type can store UTF8 characters.

When converting the wchar_t* to a Python string, the Objects/unicodetype.c file has a helper function PyUnicode_FromWideChar() that returns a PyObject, of type str. The encoding to UTF8 is then done by PyUnicode_AsUTF8String() on the Python str object to convert it to a Python bytes object.

Once this is complete, pymain_run_command() will then pass the Python bytes object to PyRun_SimpleStringFlags() for execution, but first converting the bytes to a str type again:

staticintpymain_run_command(wchar_t*command,PyCompilerFlags*cf){PyObject*unicode,*bytes;intret;unicode=PyUnicode_FromWideChar(command,-1);if(unicode==NULL){gotoerror;}if(PySys_Audit("cpython.run_command","O",unicode)<0){returnpymain_exit_err_print();}bytes=PyUnicode_AsUTF8String(unicode);Py_DECREF(unicode);if(bytes==NULL){gotoerror;}ret=PyRun_SimpleStringFlags(PyBytes_AsString(bytes),cf);Py_DECREF(bytes);return(ret!=0);error:PySys_WriteStderr("Unable to decode the command from the command line:\n");returnpymain_exit_err_print();}The conversion of wchar_t* to Unicode, bytes, and then a string is roughly equivalent to the following:

unicode=str(command)bytes_=bytes(unicode.encode('utf8'))# call PyRun_SimpleStringFlags with bytes_The PyRun_SimpleStringFlags() function is part of Python/pythonrun.c. It’s purpose is to turn this simple command into a Python module and then send it on to be executed.

Since a Python module needs to have __main__ to be executed as a standalone module, it creates that automatically:

intPyRun_SimpleStringFlags(constchar*command,PyCompilerFlags*flags){PyObject*m,*d,*v;m=PyImport_AddModule("__main__");if(m==NULL)return-1;d=PyModule_GetDict(m);v=PyRun_StringFlags(command,Py_file_input,d,d,flags);if(v==NULL){PyErr_Print();return-1;}Py_DECREF(v);return0;}Once PyRun_SimpleStringFlags() has created a module and a dictionary, it calls PyRun_StringFlags(), which creates a fake filename and then calls the Python parser to create an AST from the string and return a module, mod:

PyObject*PyRun_StringFlags(constchar*str,intstart,PyObject*globals,PyObject*locals,PyCompilerFlags*flags){...mod=PyParser_ASTFromStringObject(str,filename,start,flags,arena);if(mod!=NULL)ret=run_mod(mod,filename,globals,locals,flags,arena);PyArena_Free(arena);returnret;You’ll dive into the AST and Parser code in the next section.

Another way to execute Python commands is by using the -m option with the name of a module.

A typical example is python -m unittest to run the unittest module in the standard library.

Being able to execute modules as scripts were initially proposed in PEP 338 and then the standard for explicit relative imports defined in PEP366.

The use of the -m flag implies that within the module package, you want to execute whatever is inside __main__. It also implies that you want to search sys.path for the named module.

This search mechanism is why you don’t need to remember where the unittest module is stored on your filesystem.

Inside Modules/main.c there is a function called when the command-line is run with the -m flag. The name of the module is passed as the modname argument.

CPython will then import a standard library module, runpy and execute it using PyObject_Call(). The import is done using the C API function PyImport_ImportModule(), found within the Python/import.c file:

staticintpymain_run_module(constwchar_t*modname,intset_argv0){PyObject*module,*runpy,*runmodule,*runargs,*result;runpy=PyImport_ImportModule("runpy");...runmodule=PyObject_GetAttrString(runpy,"_run_module_as_main");...module=PyUnicode_FromWideChar(modname,wcslen(modname));...runargs=Py_BuildValue("(Oi)",module,set_argv0);...result=PyObject_Call(runmodule,runargs,NULL);...if(result==NULL){returnpymain_exit_err_print();}Py_DECREF(result);return0;}In this function you’ll also see 2 other C API functions: PyObject_Call() and PyObject_GetAttrString(). Because PyImport_ImportModule() returns a PyObject*, the core object type, you need to call special functions to get attributes and to call it.

In Python, if you had an object and wanted to get an attribute, then you could call getattr(). In the C API, this call is PyObject_GetAttrString(), which is found in Objects/object.c. If you wanted to run a callable, you would give it parentheses, or you can run the __call__() property on any Python object. The __call__() method is implemented inside Objects/object.c:

hi="hi!"hi.upper()==hi.upper.__call__()# this is the same

The runpy module is written in pure Python and located in Lib/runpy.py.

Executing python -m <module> is equivalent to running python -m runpy <module>. The runpy module was created to abstract the process of locating and executing modules on an operating system.

runpy does a few things to run the target module:

- Calls

__import__() for the module name you provided - Sets

__name__ (the module name) to a namespace called __main__ - Executes the module within the

__main__ namespace

The runpy module also supports executing directories and zip files.

If the first argument to python was a filename, such as python test.py, then CPython will open a file handle, similar to using open() in Python and pass the handle to PyRun_SimpleFileExFlags() inside Python/pythonrun.c.

There are 3 paths this function can take:

- If the file path is a

.pyc file, it will call run_pyc_file(). - If the file path is a script file (

.py) it will run PyRun_FileExFlags(). - If the filepath is

stdin because the user ran command | python then treat stdin as a file handle and run PyRun_FileExFlags().

intPyRun_SimpleFileExFlags(FILE*fp,constchar*filename,intcloseit,PyCompilerFlags*flags){...m=PyImport_AddModule("__main__");...if(maybe_pyc_file(fp,filename,ext,closeit)){...v=run_pyc_file(pyc_fp,filename,d,d,flags);}else{/* When running from stdin, leave __main__.__loader__ alone */if(strcmp(filename,"<stdin>")!=0&&set_main_loader(d,filename,"SourceFileLoader")<0){fprintf(stderr,"python: failed to set __main__.__loader__\n");ret=-1;gotodone;}v=PyRun_FileExFlags(fp,filename,Py_file_input,d,d,closeit,flags);}...returnret;}For stdin and basic script files, CPython will pass the file handle to PyRun_FileExFlags() located in the pythonrun.c file.

The purpose of PyRun_FileExFlags() is similar to PyRun_SimpleStringFlags() used for the -c input. CPython will load the file handle into PyParser_ASTFromFileObject(). We’ll cover the Parser and AST modules in the next section.

Because this is a full script, it doesn’t need the PyImport_AddModule("__main__"); step used by -c:

PyObject*PyRun_FileExFlags(FILE*fp,constchar*filename_str,intstart,PyObject*globals,PyObject*locals,intcloseit,PyCompilerFlags*flags){...mod=PyParser_ASTFromFileObject(fp,filename,NULL,start,0,0,...ret=run_mod(mod,filename,globals,locals,flags,arena);}Identical to PyRun_SimpleStringFlags(), once PyRun_FileExFlags() has created a Python module from the file, it sent it to run_mod() to be executed.

run_mod() is found within Python/pythonrun.c, and sends the module to the AST to be compiled into a code object. Code objects are a format used to store the bytecode operations and the format kept in .pyc files:

staticPyObject*run_mod(mod_tymod,PyObject*filename,PyObject*globals,PyObject*locals,PyCompilerFlags*flags,PyArena*arena){PyCodeObject*co;PyObject*v;co=PyAST_CompileObject(mod,filename,flags,-1,arena);if(co==NULL)returnNULL;if(PySys_Audit("exec","O",co)<0){Py_DECREF(co);returnNULL;}v=run_eval_code_obj(co,globals,locals);Py_DECREF(co);returnv;}We will cover the CPython compiler and bytecodes in the next section. The call to run_eval_code_obj() is a simple wrapper function that calls PyEval_EvalCode() in the Python/eval.c file. The PyEval_EvalCode() function is the main evaluation loop for CPython, it iterates over each bytecode statement and executes it on your local machine.

In the PyRun_SimpleFileExFlags() there was a clause for the user providing a file path to a .pyc file. If the file path ended in .pyc then instead of loading the file as a plain text file and parsing it, it will assume that the .pyc file contains a code object written to disk.

The run_pyc_file() function inside Python/pythonrun.c then marshals the code object from the .pyc file by using the file handle. Marshaling is a technical term for copying the contents of a file into memory and converting them to a specific data structure. The code object data structure on the disk is the CPython compiler’s way to caching compiled code so that it doesn’t need to parse it every time the script is called:

staticPyObject*run_pyc_file(FILE*fp,constchar*filename,PyObject*globals,PyObject*locals,PyCompilerFlags*flags){PyCodeObject*co;PyObject*v;...v=PyMarshal_ReadLastObjectFromFile(fp);...if(v==NULL||!PyCode_Check(v)){Py_XDECREF(v);PyErr_SetString(PyExc_RuntimeError,"Bad code object in .pyc file");gotoerror;}fclose(fp);co=(PyCodeObject*)v;v=run_eval_code_obj(co,globals,locals);if(v&&flags)flags->cf_flags|=(co->co_flags&PyCF_MASK);Py_DECREF(co);returnv;}Once the code object has been marshaled to memory, it is sent to run_eval_code_obj(), which calls Python/ceval.c to execute the code.

Lexing and Parsing

In the exploration of reading and executing Python files, we dived as deep as the parser and AST modules, with function calls to PyParser_ASTFromFileObject().

Sticking within Python/pythonrun.c, the PyParser_ASTFromFileObject() function will take a file handle, compiler flags and a PyArena instance and convert the file object into a node object using PyParser_ParseFileObject().

With the node object, it will then convert that into a module using the AST function PyAST_FromNodeObject():

mod_tyPyParser_ASTFromFileObject(FILE*fp,PyObject*filename,constchar*enc,intstart,constchar*ps1,constchar*ps2,PyCompilerFlags*flags,int*errcode,PyArena*arena){...node*n=PyParser_ParseFileObject(fp,filename,enc,&_PyParser_Grammar,start,ps1,ps2,&err,&iflags);...if(n){flags->cf_flags|=iflags&PyCF_MASK;mod=PyAST_FromNodeObject(n,flags,filename,arena);PyNode_Free(n);...returnmod;}For PyParser_ParseFileObject() we switch to Parser/parsetok.c and the parser-tokenizer stage of the CPython interpreter. This function has two important tasks:

- Instantiate a tokenizer state

tok_state using PyTokenizer_FromFile() in Parser/tokenizer.c - Convert the tokens into a concrete parse tree (a list of

node) using parsetok() in Parser/parsetok.c

node*PyParser_ParseFileObject(FILE*fp,PyObject*filename,constchar*enc,grammar*g,intstart,constchar*ps1,constchar*ps2,perrdetail*err_ret,int*flags){structtok_state*tok;...if((tok=PyTokenizer_FromFile(fp,enc,ps1,ps2))==NULL){err_ret->error=E_NOMEM;returnNULL;}...returnparsetok(tok,g,start,err_ret,flags);}tok_state (defined in Parser/tokenizer.h) is the data structure to store all temporary data generated by the tokenizer. It is returned to the parser-tokenizer as the data structure is required by parsetok() to develop the concrete syntax tree.

Inside parsetok(), it will use the tok_state structure and make calls to tok_get() in a loop until the file is exhausted and no more tokens can be found.

tok_get(), defined in Parser/tokenizer.c behaves like an iterator. It will keep returning the next token in the parse tree.

tok_get() is one of the most complex functions in the whole CPython codebase. It has over 640 lines and includes decades of heritage with edge cases, new language features, and syntax.

One of the simpler examples would be the part that converts a newline break into a NEWLINE token:

staticinttok_get(structtok_state*tok,char**p_start,char**p_end){.../* Newline */if(c=='\n'){tok->atbol=1;if(blankline||tok->level>0){gotonextline;}*p_start=tok->start;*p_end=tok->cur-1;/* Leave '\n' out of the string */tok->cont_line=0;if(tok->async_def){/* We're somewhere inside an 'async def' function, and we've encountered a NEWLINE after its signature. */tok->async_def_nl=1;}returnNEWLINE;}...}In this case, NEWLINE is a token, with a value defined in Include/token.h. All tokens are constant int values, and the Include/token.h file was generated earlier when we ran make regen-grammar.

The node type returned by PyParser_ParseFileObject() is going to be essential for the next stage, converting a parse tree into an Abstract-Syntax-Tree (AST):

typedefstruct_node{shortn_type;char*n_str;intn_lineno;intn_col_offset;intn_nchildren;struct_node*n_child;intn_end_lineno;intn_end_col_offset;}node;Since the CST is a tree of syntax, token IDs, and symbols, it would be difficult for the compiler to make quick decisions based on the Python language.

That is why the next stage is to convert the CST into an AST, a much higher-level structure. This task is performed by the Python/ast.c module, which has both a C and Python API.

Before you jump into the AST, there is a way to access the output from the parser stage. CPython has a standard library module parser, which exposes the C functions with a Python API.

The module is documented as an implementation detail of CPython so that you won’t see it in other Python interpreters. Also the output from the functions is not that easy to read.

The output will be in the numeric form, using the token and symbol numbers generated by the make regen-grammar stage, stored in Include/token.h and Include/symbol.h:

>>>>>> frompprintimportpprint>>> importparser>>> st=parser.expr('a + 1')>>> pprint(parser.st2list(st))[258, [332, [306, [310, [311, [312, [313, [316, [317, [318, [319, [320, [321, [322, [323, [324, [325, [1, 'a']]]]]], [14, '+'], [321, [322, [323, [324, [325, [2, '1']]]]]]]]]]]]]]]]], [4, ''], [0, '']] To make it easier to understand, you can take all the numbers in the symbol and token modules, put them into a dictionary and recursively replace the values in the output of parser.st2list() with the names:

importsymbolimporttokenimportparserdeflex(expression):symbols={v:kfork,vinsymbol.__dict__.items()ifisinstance(v,int)}tokens={v:kfork,vintoken.__dict__.items()ifisinstance(v,int)}lexicon={**symbols,**tokens}st=parser.expr(expression)st_list=parser.st2list(st)defreplace(l:list):r=[]foriinl:ifisinstance(i,list):r.append(replace(i))else:ifiinlexicon:r.append(lexicon[i])else:r.append(i)returnrreturnreplace(st_list)You can run lex() with a simple expression, like a + 1 to see how this is represented as a parser-tree:

>>>>>> frompprintimportpprint>>> pprint(lex('a + 1'))['eval_input', ['testlist', ['test', ['or_test', ['and_test', ['not_test', ['comparison', ['expr', ['xor_expr', ['and_expr', ['shift_expr', ['arith_expr', ['term', ['factor', ['power', ['atom_expr', ['atom', ['NAME', 'a']]]]]], ['PLUS', '+'], ['term', ['factor', ['power', ['atom_expr', ['atom', ['NUMBER', '1']]]]]]]]]]]]]]]]], ['NEWLINE', ''], ['ENDMARKER', '']] In the output, you can see the symbols in lowercase, such as 'test' and the tokens in uppercase, such as 'NUMBER'.

Abstract Syntax Trees

The next stage in the CPython interpreter is to convert the CST generated by the parser into something more logical that can be executed. The structure is a higher-level representation of the code, called an Abstract Syntax Tree (AST).

ASTs are produced inline with the CPython interpreter process, but you can also generate them in both Python using the ast module in the Standard Library as well as through the C API.

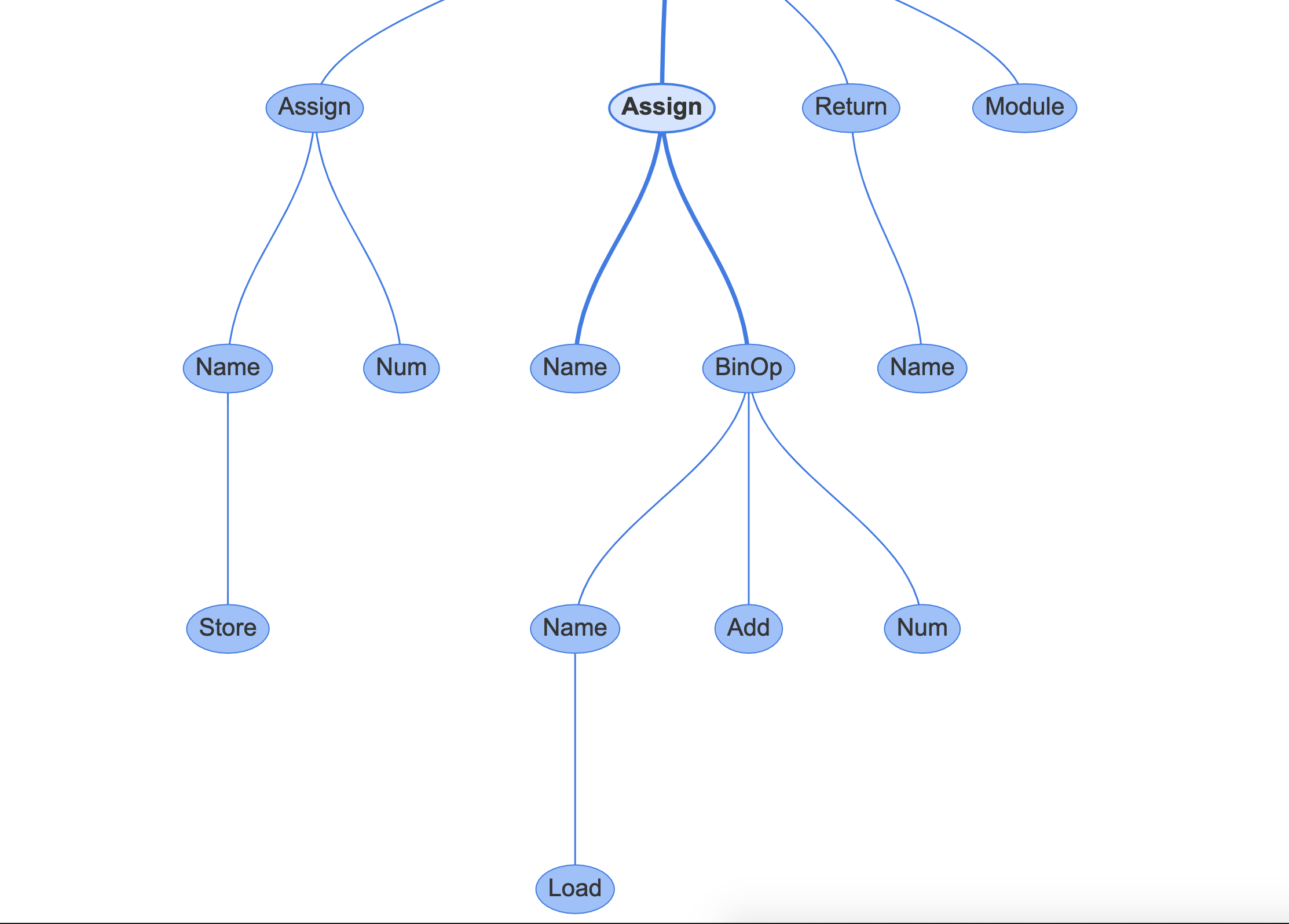

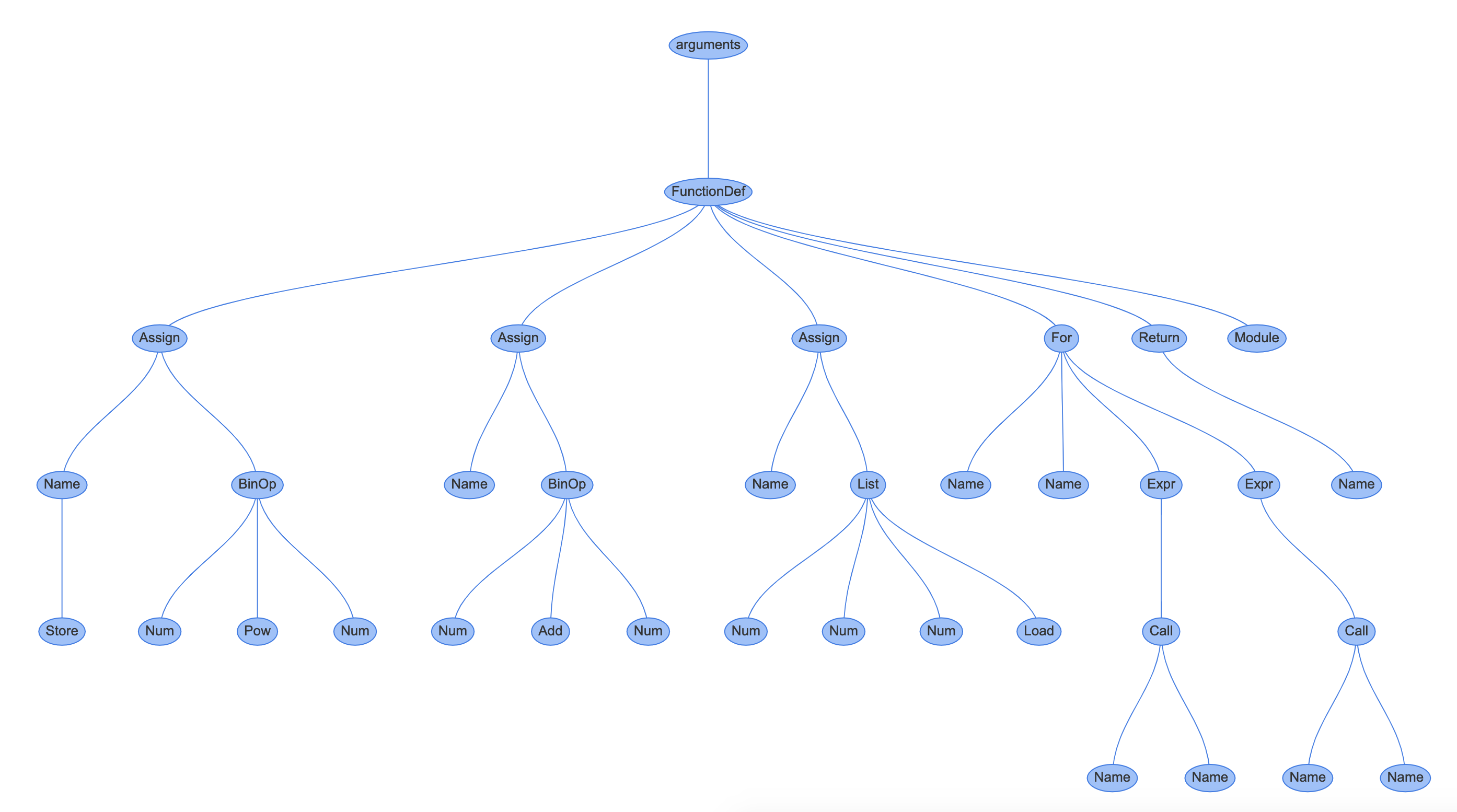

Before diving into the C implementation of the AST, it would be useful to understand what an AST looks like for a simple piece of Python code.

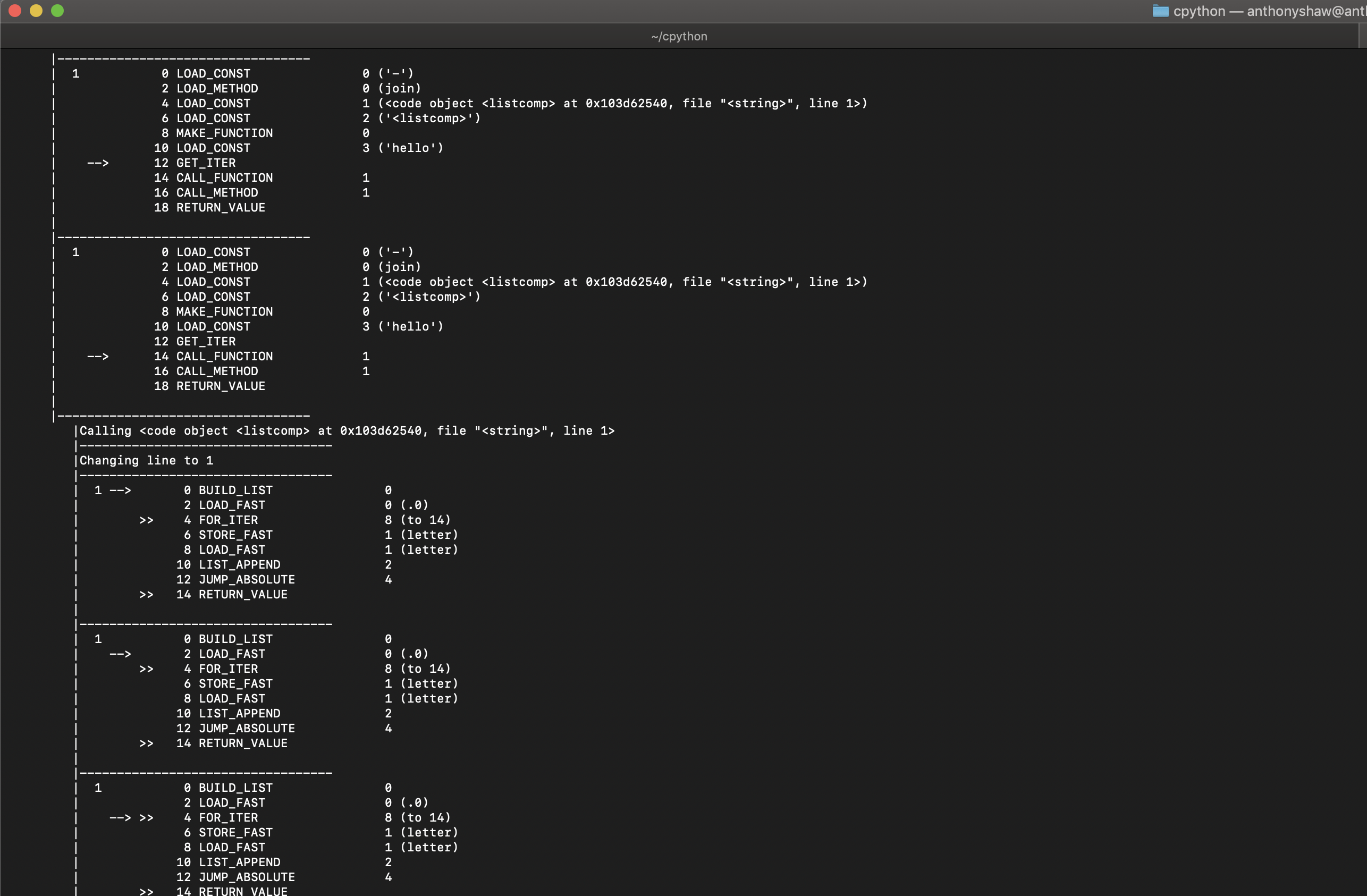

To do this, here’s a simple app called instaviz for this tutorial. It displays the AST and bytecode instructions (which we’ll cover later) in a Web UI.

To install instaviz:

Then, open up a REPL by running python at the command line with no arguments:

>>>>>> importinstaviz>>> defexample(): a = 1 b = a + 1 return b>>> instaviz.show(example)

You’ll see a notification on the command-line that a web server has started on port 8080. If you were using that port for something else, you can change it by calling instaviz.show(example, port=9090) or another port number.

In the web browser, you can see the detailed breakdown of your function:

![Instaviz screenshot]()

The bottom left graph is the function you declared in REPL, represented as an Abstract Syntax Tree. Each node in the tree is an AST type. They are found in the ast module, and all inherit from _ast.AST.

Some of the nodes have properties which link them to child nodes, unlike the CST, which has a generic child node property.

For example, if you click on the Assign node in the center, this links to the line b = a + 1:

![Instaviz screenshot 2]()

It has two properties:

targets is a list of names to assign. It is a list because you can assign to multiple variables with a single expression using unpackingvalue is the value to assign, which in this case is a BinOp statement, a + 1.

If you click on the BinOp statement, it shows the properties of relevance:

left: the node to the left of the operatorop: the operator, in this case, an Add node (+) for additionright: the node to the right of the operator

![Instaviz screenshot 3]()

Compiling an AST in C is not a straightforward task, so the Python/ast.c module is over 5000 lines of code.

There are a few entry points, forming part of the AST’s public API. In the last section on the lexer and parser, you stopped when you’d reached the call to PyAST_FromNodeObject(). By this stage, the Python interpreter process had created a CST in the format of node * tree.

Jumping then into PyAST_FromNodeObject() inside Python/ast.c, you can see it receives the node * tree, the filename, compiler flags, and the PyArena.

The return type from this function is mod_ty, defined in Include/Python-ast.h. mod_ty is a container structure for one of the 5 module types in Python:

ModuleInteractiveExpressionFunctionTypeSuite

In Include/Python-ast.h you can see that an Expression type requires a field body, which is an expr_ty type. The expr_ty type is also defined in Include/Python-ast.h:

enum_mod_kind{Module_kind=1,Interactive_kind=2,Expression_kind=3,FunctionType_kind=4,Suite_kind=5};struct_mod{enum_mod_kindkind;union{struct{asdl_seq*body;asdl_seq*type_ignores;}Module;struct{asdl_seq*body;}Interactive;struct{expr_tybody;}Expression;struct{asdl_seq*argtypes;expr_tyreturns;}FunctionType;struct{asdl_seq*body;}Suite;}v;};The AST types are all listed in Parser/Python.asdl. You will see the module types, statement types, expression types, operators, and comprehensions all listed. The names of the types in this document relate to the classes generated by the AST and the same classes named in the ast standard module library.

The parameters and names in Include/Python-ast.h correlate directly to those specified in Parser/Python.asdl:

-- ASDL's 5 builtin types are:

-- identifier, int, string, object, constant

module Python

{

mod = Module(stmt* body, type_ignore *type_ignores)

| Interactive(stmt* body)

| Expression(expr body)

| FunctionType(expr* argtypes, expr returns)

The C header file and structures are there so that the Python/ast.c program can quickly generate the structures with pointers to the relevant data.

Looking at PyAST_FromNodeObject() you can see that it is essentially a switch statement around the result from TYPE(n). TYPE() is one of the core functions used by the AST to determine what type a node in the concrete syntax tree is. In the case of PyAST_FromNodeObject() it’s just looking at the first node, so it can only be one of the module types defined as Module, Interactive, Expression, FunctionType.

The result of TYPE() will be either a symbol or token type, which we’re very familiar with by this stage.

For file_input, the results should be a Module. Modules are a series of statements, of which there are a few types. The logic to traverse the children of n and create statement nodes is within ast_for_stmt(). This function is called either once, if there is only 1 statement in the module, or in a loop if there are many. The resulting Module is then returned with the PyArena.

For eval_input, the result should be an Expression. The result from CHILD(n ,0), which is the first child of n is passed to ast_for_testlist() which returns an expr_ty type. This expr_ty is sent to Expression() with the PyArena to create an expression node, and then passed back as a result:

mod_tyPyAST_FromNodeObject(constnode*n,PyCompilerFlags*flags,PyObject*filename,PyArena*arena){...switch(TYPE(n)){casefile_input:stmts=_Py_asdl_seq_new(num_stmts(n),arena);if(!stmts)gotoout;for(i=0;i<NCH(n)-1;i++){ch=CHILD(n,i);if(TYPE(ch)==NEWLINE)continue;REQ(ch,stmt);num=num_stmts(ch);if(num==1){s=ast_for_stmt(&c,ch);if(!s)gotoout;asdl_seq_SET(stmts,k++,s);}else{ch=CHILD(ch,0);REQ(ch,simple_stmt);for(j=0;j<num;j++){s=ast_for_stmt(&c,CHILD(ch,j*2));if(!s)gotoout;asdl_seq_SET(stmts,k++,s);}}}/* Type ignores are stored under the ENDMARKER in file_input. */...res=Module(stmts,type_ignores,arena);break;caseeval_input:{expr_tytestlist_ast;/* XXX Why not comp_for here? */testlist_ast=ast_for_testlist(&c,CHILD(n,0));if(!testlist_ast)gotoout;res=Expression(testlist_ast,arena);break;}casesingle_input:...break;casefunc_type_input:......returnres;}Inside the ast_for_stmt() function, there is another switch statement for each possible statement type (simple_stmt, compound_stmt, and so on) and the code to determine the arguments to the node class.

One of the simpler functions is for the power expression, i.e., 2**4 is 2 to the power of 4. This function starts by getting the ast_for_atom_expr(), which is the number 2 in our example, then if that has one child, it returns the atomic expression. If it has more than one child, it will get the right-hand (the number 4) and return a BinOp (binary operation) with the operator as Pow (power), the left hand of e (2), and the right hand of f (4):

staticexpr_tyast_for_power(structcompiling*c,constnode*n){/* power: atom trailer* ('**' factor)* */expr_tye;REQ(n,power);e=ast_for_atom_expr(c,CHILD(n,0));if(!e)returnNULL;if(NCH(n)==1)returne;if(TYPE(CHILD(n,NCH(n)-1))==factor){expr_tyf=ast_for_expr(c,CHILD(n,NCH(n)-1));if(!f)returnNULL;e=BinOp(e,Pow,f,LINENO(n),n->n_col_offset,n->n_end_lineno,n->n_end_col_offset,c->c_arena);}returne;}You can see the result of this if you send a short function to the instaviz module:

>>>>>> deffoo(): 2**4>>> importinstaviz>>> instaviz.show(foo)

![Instaviz screenshot 4]()

In the UI you can also see the corresponding properties:

![Instaviz screenshot 5]()

In summary, each statement type and expression has a corresponding ast_for_*() function to create it. The arguments are defined in Parser/Python.asdl and exposed via the ast module in the standard library. If an expression or statement has children, then it will call the corresponding ast_for_* child function in a depth-first traversal.

Conclusion

CPython’s versatility and low-level execution API make it the ideal candidate for an embedded scripting engine. You will see CPython used in many UI applications, such as Game Design, 3D graphics and system automation.

The interpreter process is flexible and efficient, and now you have an understanding of how it works you’re ready to understand the compiler.

Part 3: The CPython Compiler and Execution Loop

In Part 2, you saw how the CPython interpreter takes an input, such as a file or string, and converts it into a logical Abstract Syntax Tree. We’re still not at the stage where this code can be executed. Next, we have to go deeper to convert the Abstract Syntax Tree into a set of sequential commands that the CPU can understand.

Compiling

Now the interpreter has an AST with the properties required for each of the operations, functions, classes, and namespaces. It is the job of the compiler to turn the AST into something the CPU can understand.

This compilation task is split into 2 parts:

- Traverse the tree and create a control-flow-graph, which represents the logical sequence for execution

- Convert the nodes in the CFG to smaller, executable statements, known as byte-code

Earlier, we were looking at how files are executed, and the PyRun_FileExFlags() function in Python/pythonrun.c. Inside this function, we converted the FILE handle into a mod, of type mod_ty. This task was completed by PyParser_ASTFromFileObject(), which in turns calls the tokenizer, parser-tokenizer and then the AST:

PyObject*PyRun_FileExFlags(FILE*fp,constchar*filename_str,intstart,PyObject*globals,PyObject*locals,intcloseit,PyCompilerFlags*flags){...mod=PyParser_ASTFromFileObject(fp,filename,NULL,start,0,0,...ret=run_mod(mod,filename,globals,locals,flags,arena);}The resulting module from the call to is sent to run_mod() still in Python/pythonrun.c. This is a small function that gets a PyCodeObject from PyAST_CompileObject() and sends it on to run_eval_code_obj(). You will tackle run_eval_code_obj() in the next section:

staticPyObject*run_mod(mod_tymod,PyObject*filename,PyObject*globals,PyObject*locals,PyCompilerFlags*flags,PyArena*arena){PyCodeObject*co;PyObject*v;co=PyAST_CompileObject(mod,filename,flags,-1,arena);if(co==NULL)returnNULL;if(PySys_Audit("exec","O",co)<0){Py_DECREF(co);returnNULL;}v=run_eval_code_obj(co,globals,locals);Py_DECREF(co);returnv;}The PyAST_CompileObject() function is the main entry point to the CPython compiler. It takes a Python module as its primary argument, along with the name of the file, the globals, locals, and the PyArena all created earlier in the interpreter process.

We’re starting to get into the guts of the CPython compiler now, with decades of development and Computer Science theory behind it. Don’t be put off by the language. Once we break down the compiler into logical steps, it’ll make sense.

Before the compiler starts, a global compiler state is created. This type, compiler is defined in Python/compile.c and contains properties used by the compiler to remember the compiler flags, the stack, and the PyArena:

structcompiler{PyObject*c_filename;structsymtable*c_st;PyFutureFeatures*c_future;/* pointer to module's __future__ */PyCompilerFlags*c_flags;intc_optimize;/* optimization level */intc_interactive;/* true if in interactive mode */intc_nestlevel;PyObject*c_const_cache;/* Python dict holding all constants, including names tuple */structcompiler_unit*u;/* compiler state for current block */PyObject*c_stack;/* Python list holding compiler_unit ptrs */PyArena*c_arena;/* pointer to memory allocation arena */};Inside PyAST_CompileObject(), there are 11 main steps happening:

- Create an empty

__doc__ property to the module if it doesn’t exist. - Create an empty

__annotations__ property to the module if it doesn’t exist. - Set the filename of the global compiler state to the filename argument.

- Set the memory allocation arena for the compiler to the one used by the interpreter.

- Copy any

__future__ flags in the module to the future flags in the compiler. - Merge runtime flags provided by the command-line or environment variables.

- Enable any

__future__ features in the compiler. - Set the optimization level to the provided argument, or default.

- Build a symbol table from the module object.

- Run the compiler with the compiler state and return the code object.

- Free any allocated memory by the compiler.

PyCodeObject*PyAST_CompileObject(mod_tymod,PyObject*filename,PyCompilerFlags*flags,intoptimize,PyArena*arena){structcompilerc;PyCodeObject*co=NULL;PyCompilerFlagslocal_flags;intmerged;if(!__doc__){// 1.__doc__=PyUnicode_InternFromString("__doc__");if(!__doc__)returnNULL;}if(!__annotations__){__annotations__=PyUnicode_InternFromString("__annotations__");// 2.if(!__annotations__)returnNULL;}if(!compiler_init(&c))returnNULL;Py_INCREF(filename);c.c_filename=filename;// 3.c.c_arena=arena;// 4.c.c_future=PyFuture_FromASTObject(mod,filename);// 5.if(c.c_future==NULL)gotofinally;if(!flags){local_flags.cf_flags=0;local_flags.cf_feature_version=PY_MINOR_VERSION;flags=&local_flags;}merged=c.c_future->ff_features|flags->cf_flags;// 6.c.c_future->ff_features=merged;// 7.flags->cf_flags=merged;c.c_flags=flags;c.c_optimize=(optimize==-1)?Py_OptimizeFlag:optimize;// 8.c.c_nestlevel=0;if(!_PyAST_Optimize(mod,arena,c.c_optimize)){gotofinally;}c.c_st=PySymtable_BuildObject(mod,filename,c.c_future);// 9.if(c.c_st==NULL){if(!PyErr_Occurred())PyErr_SetString(PyExc_SystemError,"no symtable");gotofinally;}co=compiler_mod(&c,mod);// 10.finally:compiler_free(&c);// 11.assert(co||PyErr_Occurred());returnco;}Future Flags and Compiler Flags

Before the compiler runs, there are two types of flags to toggle the features inside the compiler. These come from two places:

- The interpreter state, which may have been command-line options, set in

pyconfig.h or via environment variables - The use of

__future__ statements inside the actual source code of the module

To distinguish the two types of flags, think that the __future__ flags are required because of the syntax or features in that specific module. For example, Python 3.7 introduced delayed evaluation of type hints through the annotations future flag:

from__future__importannotations

The code after this statement might use unresolved type hints, so the __future__ statement is required. Otherwise, the module wouldn’t import. It would be unmaintainable to manually request that the person importing the module enable this specific compiler flag.

The other compiler flags are specific to the environment, so they might change the way the code executes or the way the compiler runs, but they shouldn’t link to the source in the same way that __future__ statements do.

One example of a compiler flag would be the -O flag for optimizing the use of assert statements. This flag disables any assert statements, which may have been put in the code for debugging purposes.

It can also be enabled with the PYTHONOPTIMIZE=1 environment variable setting.

Symbol Tables

In PyAST_CompileObject() there was a reference to a symtable and a call to PySymtable_BuildObject() with the module to be executed.

The purpose of the symbol table is to provide a list of namespaces, globals, and locals for the compiler to use for referencing and resolving scopes.

The symtable structure in Include/symtable.h is well documented, so it’s clear what each of the fields is for. There should be one symtable instance for the compiler, so namespacing becomes essential.

If you create a function called resolve_names() in one module and declare another function with the same name in another module, you want to be sure which one is called. The symtable serves this purpose, as well as ensuring that variables declared within a narrow scope don’t automatically become globals (after all, this isn’t JavaScript):

structsymtable{PyObject*st_filename;/* name of file being compiled, decoded from the filesystem encoding */struct_symtable_entry*st_cur;/* current symbol table entry */struct_symtable_entry*st_top;/* symbol table entry for module */PyObject*st_blocks;/* dict: map AST node addresses * to symbol table entries */PyObject*st_stack;/* list: stack of namespace info */PyObject*st_global;/* borrowed ref to st_top->ste_symbols */intst_nblocks;/* number of blocks used. kept for consistency with the corresponding compiler structure */PyObject*st_private;/* name of current class or NULL */PyFutureFeatures*st_future;/* module's future features that affect the symbol table */intrecursion_depth;/* current recursion depth */intrecursion_limit;/* recursion limit */};Some of the symbol table API is exposed via the symtable module in the standard library. You can provide an expression or a module an receive a symtable.SymbolTable instance.

You can provide a string with a Python expression and the compile_type of "eval", or a module, function or class, and the compile_mode of "exec" to get a symbol table.

Looping over the elements in the table we can see some of the public and private fields and their types:

>>>>>> importsymtable>>> s=symtable.symtable('b + 1',filename='test.py',compile_type='eval')>>> [symbol.__dict__forsymbolins.get_symbols()][{'_Symbol__name': 'b', '_Symbol__flags': 6160, '_Symbol__scope': 3, '_Symbol__namespaces': ()}] The C code behind this is all within Python/symtable.c and the primary interface is the PySymtable_BuildObject() function.

Similar to the top-level AST function we covered earlier, the PySymtable_BuildObject() function switches between the mod_ty possible types (Module, Expression, Interactive, Suite, FunctionType), and visits each of the statements inside them.

Remember, mod_ty is an AST instance, so the will now recursively explore the nodes and branches of the tree and add entries to the symtable:

structsymtable*PySymtable_BuildObject(mod_tymod,PyObject*filename,PyFutureFeatures*future){structsymtable*st=symtable_new();asdl_seq*seq;inti;PyThreadState*tstate;intrecursion_limit=Py_GetRecursionLimit();...st->st_top=st->st_cur;switch(mod->kind){caseModule_kind:seq=mod->v.Module.body;for(i=0;i<asdl_seq_LEN(seq);i++)if(!symtable_visit_stmt(st,(stmt_ty)asdl_seq_GET(seq,i)))gotoerror;break;caseExpression_kind:...caseInteractive_kind:...caseSuite_kind:...caseFunctionType_kind:...}...}So for a module, PySymtable_BuildObject() will loop through each statement in the module and call symtable_visit_stmt(). The symtable_visit_stmt() is a huge switch statement with a case for each statement type (defined in Parser/Python.asdl).

For each statement type, there is specific logic to that statement type. For example, a function definition has particular logic for:

- If the recursion depth is beyond the limit, raise a recursion depth error

- The name of the function to be added as a local variable

- The default values for sequential arguments to be resolved

- The default values for keyword arguments to be resolved

- Any annotations for the arguments or the return type are resolved

- Any function decorators are resolved

- The code block with the contents of the function is visited in

symtable_enter_block() - The arguments are visited

- The body of the function is visited

Note: If you’ve ever wondered why Python’s default arguments are mutable, the reason is in this function. You can see they are a pointer to the variable in the symtable. No extra work is done to copy any values to an immutable type.

staticintsymtable_visit_stmt(structsymtable*st,stmt_tys){if(++st->recursion_depth>st->recursion_limit){// 1.PyErr_SetString(PyExc_RecursionError,"maximum recursion depth exceeded during compilation");VISIT_QUIT(st,0);}switch(s->kind){caseFunctionDef_kind:if(!symtable_add_def(st,s->v.FunctionDef.name,DEF_LOCAL))// 2.VISIT_QUIT(st,0);if(s->v.FunctionDef.args->defaults)// 3.VISIT_SEQ(st,expr,s->v.FunctionDef.args->defaults);if(s->v.FunctionDef.args->kw_defaults)// 4.VISIT_SEQ_WITH_NULL(st,expr,s->v.FunctionDef.args->kw_defaults);if(!symtable_visit_annotations(st,s,s->v.FunctionDef.args,// 5.s->v.FunctionDef.returns))VISIT_QUIT(st,0);if(s->v.FunctionDef.decorator_list)// 6.VISIT_SEQ(st,expr,s->v.FunctionDef.decorator_list);if(!symtable_enter_block(st,s->v.FunctionDef.name,// 7.FunctionBlock,(void*)s,s->lineno,s->col_offset))VISIT_QUIT(st,0);VISIT(st,arguments,s->v.FunctionDef.args);// 8.VISIT_SEQ(st,stmt,s->v.FunctionDef.body);// 9.if(!symtable_exit_block(st,s))VISIT_QUIT(st,0);break;caseClassDef_kind:{...}caseReturn_kind:...caseDelete_kind:...caseAssign_kind:...caseAnnAssign_kind:...Once the resulting symtable has been created, it is sent back to be used for the compiler.

Core Compilation Process

Now that the PyAST_CompileObject() has a compiler state, a symtable, and a module in the form of the AST, the actual compilation can begin.

The purpose of the core compiler is to:

- Convert the state, symtable, and AST into a Control-Flow-Graph (CFG)

- Protect the execution stage from runtime exceptions by catching any logic and code errors and raising them here

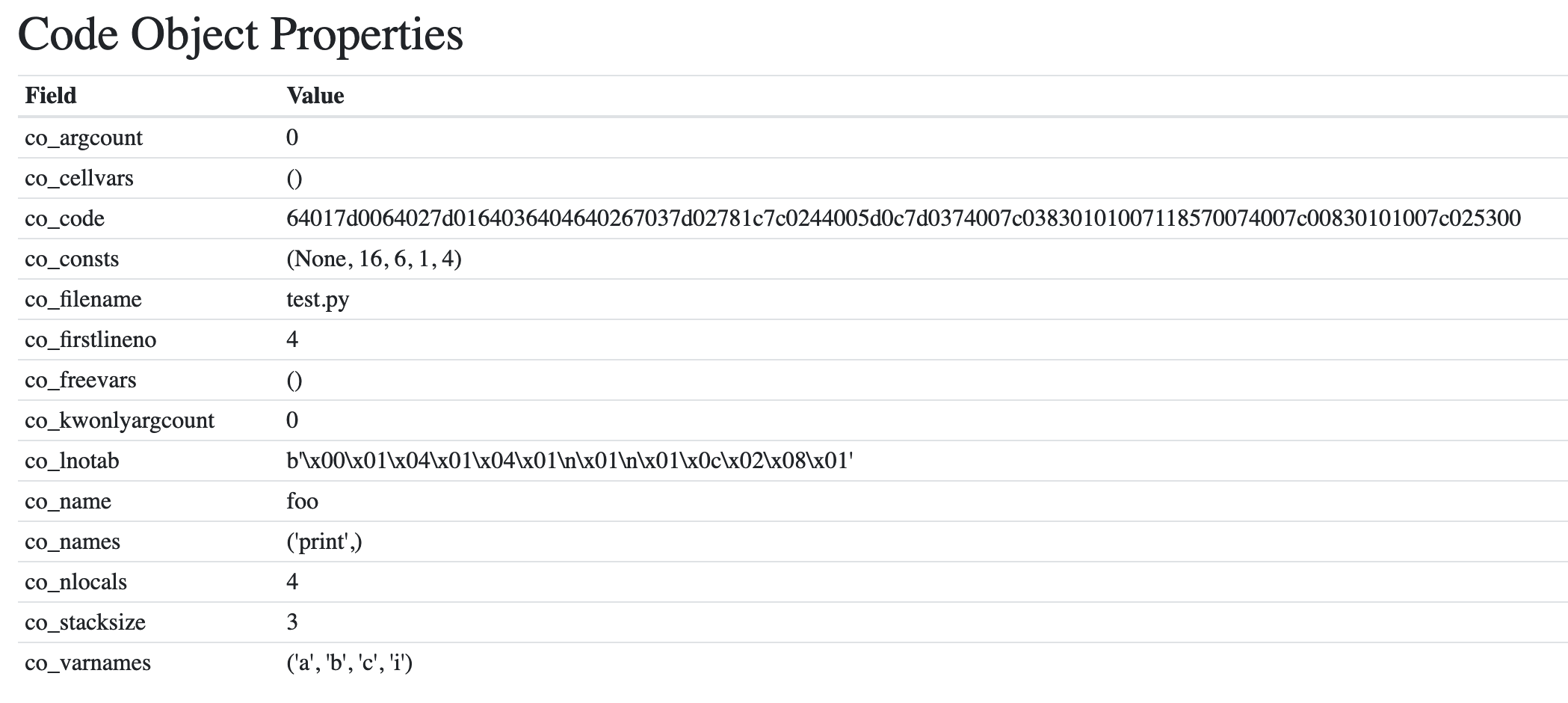

You can call the CPython compiler in Python code by calling the built-in function compile(). It returns a code object instance: