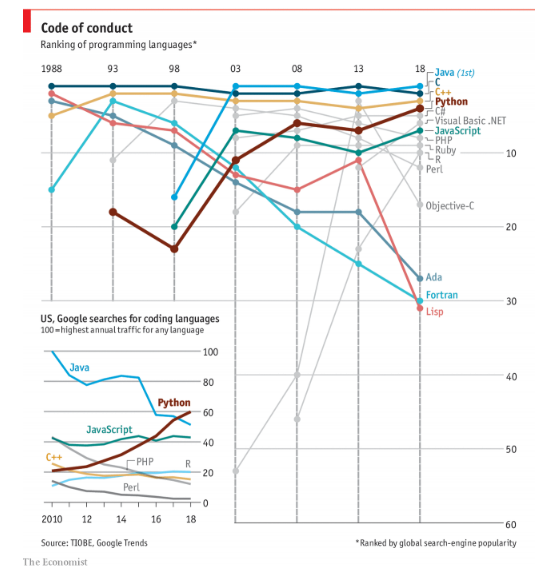

It’s the era of big data, and every day more and more business are trying to leverage their data to make informed decisions. Many businesses are turning to Python’s powerful data science ecosystem to analyze their data, as evidenced by Python’s rising popularity in the data science realm.

One thing every data science practitioner must keep in mind is how a dataset may be biased. Drawing conclusions from biased data can lead to costly mistakes.

There are many ways bias can creep into a dataset. If you’ve studied some statistics, you’re probably familiar with terms like reporting bias, selection bias and sampling bias. There is another type of bias that plays an important role when you are dealing with numeric data: rounding bias.

In this article, you will learn:

- Why the way you round numbers is important

- How to round a number according to various rounding strategies, and how to implement each method in pure Python

- How rounding affects data, and which rounding strategy minimizes this effect

- How to round numbers in NumPy arrays and Pandas DataFrames

- When to apply different rounding strategies

Take the Quiz: Test your knowledge with our interactive “Rounding Numbers in Python” quiz. Upon completion you will receive a score so you can track your learning progress over time.

Click here to start the quiz »

This article is not a treatise on numeric precision in computing, although we will touch briefly on the subject. Only a familiarity with the fundamentals of Python is necessary, and the math involved here should feel comfortable to anyone familiar with the equivalent of high school algebra.

Let’s start by looking at Python’s built-in rounding mechanism.

Python’s Built-in round() Function

Python has a built-in round() function that takes two numeric arguments, n and ndigits, and returns the number n rounded to ndigits. The ndigits argument defaults to zero, so leaving it out results in a number rounded to an integer. As you’ll see, round() may not work quite as you expect.

The way most people are taught to round a number goes something like this:

Round the number n to p decimal places by first shifting the decimal point in n by p places by multiplying n by 10ᵖ (10 raised to the pth power) to get a new number m.

Then look at the digit d in the first decimal place of m. If d is less than 5, round m down to the nearest integer. Otherwise, round m up.

Finally, shift the decimal point back p places by dividing m by 10ᵖ.

It’s a straightforward algorithm! For example, the number 2.5 rounded to the nearest whole number is 3. The number 1.64 rounded to one decimal place is 1.6.

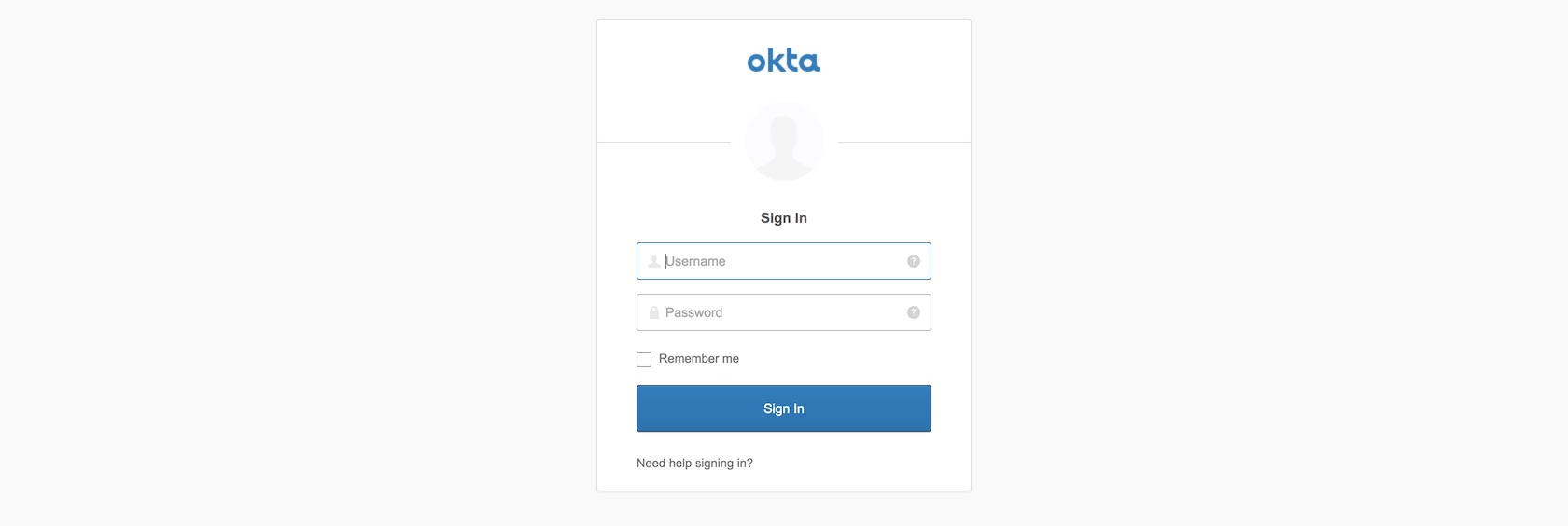

Now open up an interpreter session and round 2.5 to the nearest whole number using Python’s built-in round() function:

Gasp!

How does round() handle the number 1.5?

So, round() rounds 1.5 up to 2, and 2.5 down to 2!

Before you go raising an issue on the Python bug tracker, let me assure you that round(2.5) is supposed to return 2. There is a good reason why round() behaves the way it does.

In this article, you’ll learn that there are more ways to round a number than you might expect, each with unique advantages and disadvantages. round() behaves according to a particular rounding strategy—which may or may not be the one you need for a given situation.

You might be wondering, “Can the way I round numbers really have that much of an impact?” Let’s take a look at just how extreme the effects of rounding can be.

How Much Impact Can Rounding Have?

Suppose you have an incredibly lucky day and find $100 on the ground. Rather than spending all your money at once, you decide to play it smart and invest your money by buying some shares of different stocks.

The value of a stock depends on supply and demand. The more people there are who want to buy a stock, the more value that stock has, and vice versa. In high volume stock markets, the value of a particular stock can fluctuate on a second-by-second basis.

Let’s run a little experiment. We’ll pretend the overall value of the stocks you purchased fluctuates by some small random number each second, say between $0.05 and -$0.05. This fluctuation may not necessarily be a nice value with only two decimal places. For example, the overall value may increase by $0.031286 one second and decrease the next second by $0.028476.

You don’t want to keep track of your value to the fifth or sixth decimal place, so you decide to chop everything off after the third decimal place. In rounding jargon, this is called truncating the number to the third decimal place. There’s some error to be expected here, but by keeping three decimal places, this error couldn’t be substantial. Right?

To run our experiment using Python, let’s start by writing a truncate() function that truncates a number to three decimal places:

>>>>>> deftruncate(n):... returnint(n*1000)/1000

The truncate() function works by first shifting the decimal point in the number n three places to the right by multiplying n by 1000. The integer part of this new number is taken with int(). Finally, the decimal point is shifted three places back to the left by dividing n by 1000.

Next, let’s define the initial parameters of the simulation. You’ll need two variables: one to keep track of the actual value of your stocks after the simulation is complete and one for the value of your stocks after you’ve been truncating to three decimal places at each step.

Start by initializing these variables to 100:

>>>>>> actual_value,truncated_value=100,100

Now let’s run the simulation for 1,000,000 seconds (approximately 11.5 days). For each second, generate a random value between -0.05 and 0.05 with the uniform() function in the random module, and then update actual and truncated:

>>>>>> importrandom>>> random.seed(100)>>> for_inrange(1000000):... randn=random.uniform(-0.05,0.05)... actual_value=actual_value+randn... truncated_value=truncate(truncated_value+randn)...>>> actual_value96.45273913513529>>> truncated_value0.239

The meat of the simulation takes place in the for loop, which loops over the range(1000000) of numbers between 0 and 999,999. The value taken from range() at each step is stored in the variable _, which we use here because we don’t actually need this value inside of the loop.

At each step of the loop, a new random number between -0.05 and 0.05 is generated using random.randn() and assigned to the variable randn. The new value of your investment is calculated by adding randn to actual_value, and the truncated total is calculated by adding randn to truncated_value and then truncating this value with truncate().

As you can see by inspecting the actual_value variable after running the loop, you only lost about $3.55. However, if you’d been looking at truncated_value, you’d have thought that you’d lost almost all of your money!

Note: In the above example, the random.seed() function is used to seed the pseudo-random number generator so that you can reproduce the output shown here.

To learn more about randomness in Python, check out Real Python’s Generating Random Data in Python (Guide).

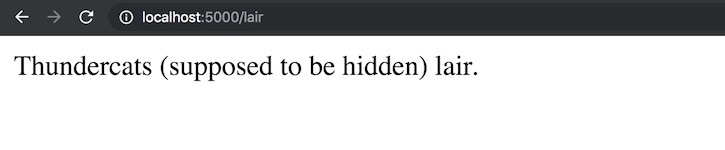

Ignoring for the moment that round() doesn’t behave quite as you expect, let’s try re-running the simulation. We’ll use round() this time to round to three decimal places at each step, and seed() the simulation again to get the same results as before:

>>>>>> random.seed(100)>>> actual_value,rounded_value=100,100>>> for_inrange(1000000):... randn=random.uniform(-0.05,0.05)... actual_value=actual_value+randn... rounded_value=round(rounded_value+randn,3)...>>> actual_value96.45273913513529>>> rounded_value96.258

What a difference!

Shocking as it may seem, this exact error caused quite a stir in the early 1980s when the system designed for recording the value of the Vancouver Stock Exchange truncated the overall index value to three decimal places instead of rounding. Rounding errors have swayed elections and even resulted in the loss of life.

How you round numbers is important, and as a responsible developer and software designer, you need to know what the common issues are and how to deal with them. Let’s dive in and investigate what the different rounding methods are and how you can implement each one in pure Python.

A Menagerie of Methods

There are a plethora of rounding strategies, each with advantages and disadvantages. In this section, you’ll learn about some of the most common techniques, and how they can influence your data.

Truncation

The simplest, albeit crudest, method for rounding a number is to truncate the number to a given number of digits. When you truncate a number, you replace each digit after a given position with 0. Here are some examples:

| Value | Truncated To | Result |

|---|

| 12.345 | Tens place | 10 |

| 12.345 | Ones place | 12 |

| 12.345 | Tenths place | 12.3 |

| 12.345 | Hundredths place | 12.34 |

You’ve already seen one way to implement this in the truncate() function from the How Much Impact Can Rounding Have? section. In that function, the input number was truncated to three decimal places by:

- Multiplying the number by

1000 to shift the decimal point three places to the right - Taking the integer part of that new number with

int() - Shifting the decimal place three places back to the left by dividing by

1000

You can generalize this process by replacing 1000 with the number 10ᵖ (10 raised to the pth power), where p is the number of decimal places to truncate to:

deftruncate(n,decimals=0):multiplier=10**decimalsreturnint(n*multiplier)/multiplier

In this version of truncate(), the second argument defaults to 0 so that if no second argument is passed to the function, then truncate() returns the integer part of whatever number is passed to it.

The truncate() function works well for both positive and negative numbers:

>>>>>> truncate(12.5)12.0>>> truncate(-5.963,1)-5.9>>> truncate(1.625,2)1.62

You can even pass a negative number to decimals to truncate to digits to the left of the decimal point:

>>>>>> truncate(125.6,-1)120.0>>> truncate(-1374.25,-3)-1000.0

When you truncate a positive number, you are rounding it down. Likewise, truncating a negative number rounds that number up. In a sense, truncation is a combination of rounding methods depending on the sign of the number you are rounding.

Let’s take a look at each of these rounding methods individually, starting with rounding up.

Rounding Up

The second rounding strategy we’ll look at is called “rounding up.” This strategy always rounds a number up to a specified number of digits. The following table summarizes this strategy:

| Value | Round Up To | Result |

|---|

| 12.345 | Tens place | 20 |

| 12.345 | Ones place | 13 |

| 12.345 | Tenths place | 12.4 |

| 12.345 | Hundredths place | 12.35 |

To implement the “rounding up” strategy in Python, we’ll use the ceil() function from the math module.

The ceil() function gets its name from the term “ceiling,” which is used in mathematics to describe the nearest integer that is greater than or equal to a given number.

Every number that is not an integer lies between two consecutive integers. For example, the number 1.2 lies in the interval between 1 and 2. The “ceiling” is the greater of the two endpoints of the interval. The lesser of the two endpoints in called the “floor.” Thus, the ceiling of 1.2 is 2, and the floor of 1.2 is 1.

In mathematics, a special function called the ceiling function maps every number to its ceiling. To allow the ceiling function to accept integers, the ceiling of an integer is defined to be the integer itself. So the ceiling of the number 2 is 2.

In Python, math.ceil() implements the ceiling function and always returns the nearest integer that is greater than or equal to its input:

>>>>>> importmath>>> math.ceil(1.2)2>>> math.ceil(2)2>>> math.ceil(-0.5)0

Notice that the ceiling of -0.5 is 0, not -1. This makes sense because 0 is the nearest integer to -0.5 that is greater than or equal to -0.5.

Let’s write a function called round_up() that implements the “rounding up” strategy:

defround_up(n,decimals=0):multiplier=10**decimalsreturnmath.ceil(n*multiplier)/multiplier

You may notice that round_up() looks a lot like truncate(). First, the decimal point in n is shifted the correct number of places to the right by multiplying n by 10 ** decimals. This new value is rounded up to the nearest integer using math.ceil(), and then the decimal point is shifted back to the left by dividing by 10 ** decimals.

This pattern of shifting the decimal point, applying some rounding method to round to an integer, and then shifting the decimal point back will come up over and over again as we investigate more rounding methods. This is, after all, the mental algorithm we humans use to round numbers by hand.

Let’s look at how well round_up() works for different inputs:

>>>>>> round_up(1.1)2.0>>> round_up(1.23,1)1.3>>> round_up(1.543,2)1.55

Just like truncate(), you can pass a negative value to decimals:

>>>>>> round_up(22.45,-1)30.0>>> round_up(1352,-2)1400

When you pass a negative number to decimals, the number in the first argument of round_up() is rounded to the correct number of digits to the left of the decimal point.

Take a guess at what round_up(-1.5) returns:

>>>>>> round_up(-1.5)-1.0

Is -1.0 what you expected?

If you examine the logic used in defining round_up()—in particular, the way the math.ceil() function works—then it makes sense that round_up(-1.5) returns -1.0. However, some people naturally expect symmetry around zero when rounding numbers, so that if 1.5 gets rounded up to 2, then -1.5 should get rounded up to -2.

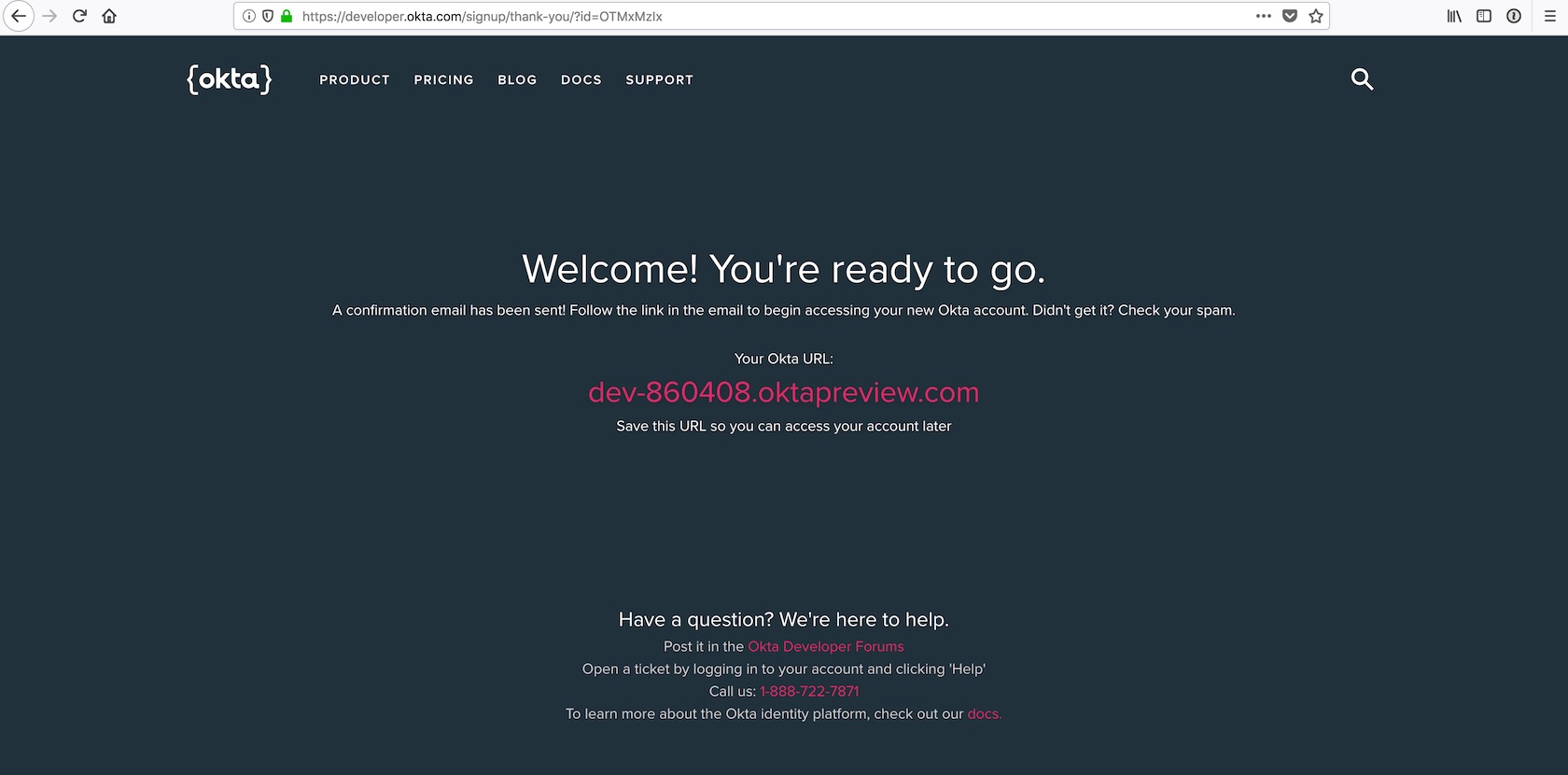

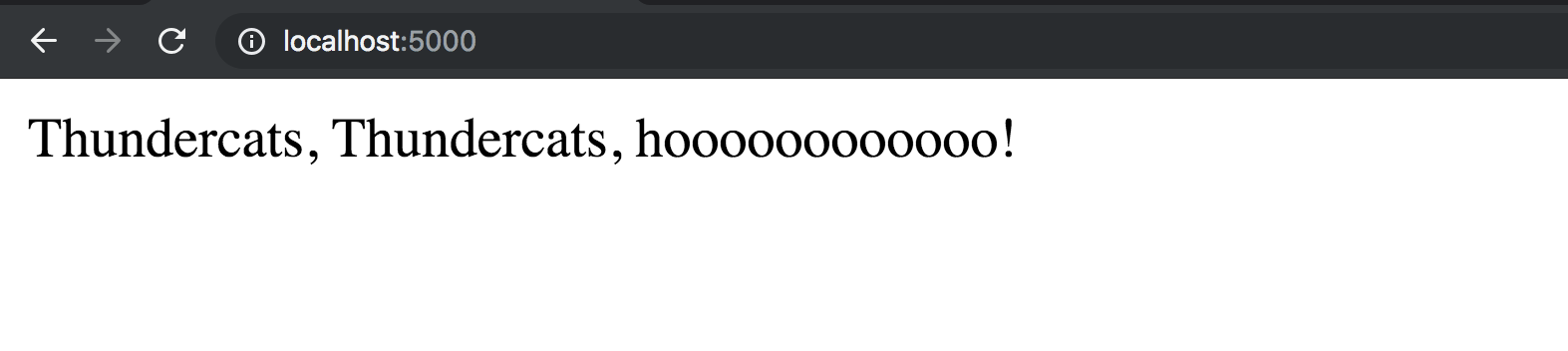

Let’s establish some terminology. For our purposes, we’ll use the terms “round up” and “round down” according to the following diagram:

![Round up to the right and down to the left]()

Round up to the right and down to the left. (Image: David Amos)

Rounding up always rounds a number to the right on the number line, and rounding down always rounds a number to the left on the number line.

Rounding Down

The counterpart to “rounding up” is the “rounding down” strategy, which always rounds a number down to a specified number of digits. Here are some examples illustrating this strategy:

| Value | Rounded Down To | Result |

|---|

| 12.345 | Tens place | 10 |

| 12.345 | Ones place | 12 |

| 12.345 | Tenths place | 12.3 |

| 12.345 | Hundredths place | 12.34 |

To implement the “rounding down” strategy in Python, we can follow the same algorithm we used for both trunctate() and round_up(). First shift the decimal point, then round to an integer, and finally shift the decimal point back.

In round_up(), we used math.ceil() to round up to the ceiling of the number after shifting the decimal point. For the “rounding down” strategy, though, we need to round to the floor of the number after shifting the decimal point.

Lucky for us, the math module has a floor() function that returns the floor of its input:

>>>>>> math.floor(1.2)1>>> math.floor(-0.5)-1

Here’s the definition of round_down():

defround_down(n,decimals=0):multiplier=10**decimalsreturnmath.floor(n*multiplier)/multiplier

That looks just like round_up(), except math.ceil() has been replaced with math.floor().

You can test round_down() on a few different values:

>>>>>> round_down(1.5)1>>> round_down(1.37,1)1.3>>> round_down(-0.5)-1

The effects of round_up() and round_down() can be pretty extreme. By rounding the numbers in a large dataset up or down, you could potentially remove a ton of precision and drastically alter computations made from the data.

Before we discuss any more rounding strategies, let’s stop and take a moment to talk about how rounding can make your data biased.

Interlude: Rounding Bias

You’ve now seen three rounding methods: truncate(), round_up(), and round_down(). All three of these techniques are rather crude when it comes to preserving a reasonable amount of precision for a given number.

There is one important difference between truncate() and round_up() and round_down() that highlights an important aspect of rounding: symmetry around zero.

Recall that round_up() isn’t symmetric around zero. In mathematical terms, a function f(x) is symmetric around zero if, for any value of x, f(x) + f(-x) = 0. For example, round_up(1.5) returns 2, but round_up(-1.5) returns -1. The round_down() function isn’t symmetric around 0, either.

On the other hand, the truncate() function is symmetric around zero. This is because, after shifting the decimal point to the right, truncate() chops off the remaining digits. When the initial value is positive, this amounts to rounding the number down. Negative numbers are rounded up. So, truncate(1.5) returns 1, and truncate(-1.5) returns -1.

The concept of symmetry introduces the notion of rounding bias, which describes how rounding affects numeric data in a dataset.

The “rounding up” strategy has a round towards positive infinity bias, because the value is always rounded up in the direction of positive infinity. Likewise, the “rounding down” strategy has a round towards negative infinity bias.

The “truncation” strategy exhibits a round towards negative infinity bias on positive values and a round towards positive infinity for negative values. Rounding functions with this behavior are said to have a round towards zero bias, in general.

Let’s see how this works in practice. Consider the following list of floats:

>>>>>> data=[1.25,-2.67,0.43,-1.79,4.32,-8.19]

Let’s compute the mean value of the values in data using the statistics.mean() function:

>>>>>> importstatistics>>> statistics.mean(data)-1.1083333333333332

Now apply each of round_up(), round_down(), and truncate() in a list comprehension to round each number in data to one decimal place and calculate the new mean:

>>>>>> ru_data=[round_up(n,1)fornindata]>>> ru_data[1.3, -2.6, 0.5, -1.7, 4.4, -8.1]>>> statistics.mean(ru_data)-1.0333333333333332>>> rd_data=[round_down(n,1)fornindata]>>> statistics.mean(rd_data)-1.1333333333333333>>> tr_data=[truncate(n,1)fornindata]>>> statistics.mean(tr_data)-1.0833333333333333

After every number in data is rounded up, the new mean is about -1.033, which is greater than the actual mean of about 1.108. Rounding down shifts the mean downwards to about -1.133. The mean of the truncated values is about -1.08 and is the closest to the actual mean.

This example does not imply that you should always truncate when you need to round individual values while preserving a mean value as closely as possible. The data list contains an equal number of positive and negative values. The truncate() function would behave just like round_up() on a list of all positive values, and just like round_down() on a list of all negative values.

What this example does illustrate is the effect rounding bias has on values computed from data that has been rounded. You will need to keep these effects in mind when drawing conclusions from data that has been rounded.

Typically, when rounding, you are interested in rounding to the nearest number with some specified precision, instead of just rounding everything up or down.

For example, if someone asks you to round the numbers 1.23 and 1.28 to one decimal place, you would probably respond quickly with 1.2 and 1.3. The truncate(), round_up(), and round_down() functions don’t do anything like this.

What about the number 1.25? You probably immediately think to round this to 1.3, but in reality, 1.25 is equidistant from 1.2 and 1.3. In a sense, 1.2 and 1.3 are both the nearest numbers to 1.25 with single decimal place precision. The number 1.25 is called a tie with respect to 1.2 and 1.3. In cases like this, you must assign a tiebreaker.

The way that most people are taught break ties is by rounding to the greater of the two possible numbers.

Rounding Half Up

The “rounding half up” strategy rounds every number to the nearest number with the specified precision, and breaks ties by rounding up. Here are some examples:

| Value | Round Half Up To | Result |

|---|

| 13.825 | Tens place | 10 |

| 13.825 | Ones place | 14 |

| 13.825 | Tenths place | 13.8 |

| 13.825 | Hundredths place | 13.83 |

To implement the “rounding half up” strategy in Python, you start as usual by shifting the decimal point to the right by the desired number of places. At this point, though, you need a way to determine if the digit just after the shifted decimal point is less than or greater than or equal to 5.

One way to do this is to add 0.5 to the shifted value and then round down with math.floor(). This works because:

If the digit in the first decimal place of the shifted value is less than five, then adding 0.5 won’t change the integer part of the shifted value, so the floor is equal to the integer part.

If the first digit after the decimal place is greater than or equal to 5, then adding 0.5 will increase the integer part of the shifted value by 1, so the floor is equal to this larger integer.

Here’s what this looks like in Python:

defround_half_up(n,decimals=0):multiplier=10**decimalsreturnmath.floor(n*multiplier+0.5)/multiplier

Notice that round_half_up() looks a lot like round_down(). This might be somewhat counter-intuitive, but internally round_half_up() only rounds down. The trick is to add the 0.5 after shifting the decimal point so that the result of rounding down matches the expected value.

Let’s test round_half_up() on a couple of values to see that it works:

>>>>>> round_half_up(1.23,1)1.2>>> round_half_up(1.28,1)1.3>>> round_half_up(1.25,1)1.3

Since round_half_up() always breaks ties by rounding to the greater of the two possible values, negative values like -1.5 round to -1, not to -2:

>>>>>> round_half_up(-1.5)-1.0>>> round_half_up(-1.25,1)-1.2

Great! You can now finally get that result that the built-in round() function denied to you:

>>>>>> round_half_up(2.5)3.0

Before you get too excited though, let’s see what happens when you try and round -1.225 to 2 decimal places:

>>>>>> round_half_up(-1.225,2)-1.23

Wait. We just discussed how ties get rounded to the greater of the two possible values. -1.225 is smack in the middle of -1.22 and -1.23. Since -1.22 is the greater of these two, round_half_up(-1.225, 2) should return -1.22. But instead, we got -1.23.

Is there a bug in the round_half_up() function?

When round_half_up() rounds -1.225 to two decimal places, the first thing it does is multiply -1.225 by 100. Let’s make sure this works as expected:

>>>>>> -1.225*100-122.50000000000001

Well… that’s wrong! But it does explain why round_half_up(-1.225, 2) returns -1.23. Let’s continue the round_half_up() algorithm step-by-step, utilizing _ in the REPL to recall the last value output at each step:

>>>>>> _+0.5-122.00000000000001>>> math.floor(_)-123>>> _/100-1.23

Even though -122.00000000000001 is really close to -122, the nearest integer that is less than or equal to it is -123. When the decimal point is shifted back to the left, the final value is -1.23.

Well, now you know how round_half_up(-1.225, 2) returns -1.23 even though there is no logical error, but why does Python say that -1.225 * 100 is -122.50000000000001? Is there a bug in Python?

Aside: In a Python interpreter session, type the following:

>>>>>> 0.1+0.1+0.10.30000000000000004

Seeing this for the first time can be pretty shocking, but this is a classic example of floating-point representation error. It has nothing to do with Python. The error has to do with how machines store floating-point numbers in memory.

Most modern computers store floating-point numbers as binary decimals with 53-bit precision. Only numbers that have finite binary decimal representations that can be expressed in 53 bits are stored as an exact value. Not every number has a finite binary decimal representation.

For example, the decimal number 0.1 has a finite decimal representation, but infinite binary representation. Just like the fraction 1/3 can only be represented in decimal as the infinitely repeating decimal 0.333..., the fraction 1/10 can only be expressed in binary as the infinitely repeating decimal 0.0001100110011....

A value with an infinite binary representation is rounded to an approximate value to be stored in memory. The method that most machines use to round is determined according to the IEEE-754 standard, which specifies rounding to the nearest representable binary fraction.

The Python docs have a section called Floating Point Arithmetic: Issues and Limitations which has this to say about the number 0.1:

On most machines, if Python were to print the true decimal value of the binary approximation stored for 0.1, it would have to display

>>>>>> 0.10.1000000000000000055511151231257827021181583404541015625

That is more digits than most people find useful, so Python keeps the number of digits manageable by displaying a rounded value instead

Just remember, even though the printed result looks like the exact value of 1/10, the actual stored value is the nearest representable binary fraction. (Source)

For a more in-depth treatise on floating-point arithmetic, check out David Goldberg’s article What Every Computer Scientist Should Know About Floating-Point Arithmetic, originally published in the journal ACM Computing Surveys, Vol. 23, No. 1, March 1991.

The fact that Python says that -1.225 * 100 is -122.50000000000001 is an artifact of floating-point representation error. You might be asking yourself, “Okay, but is there a way to fix this?” A better question to ask yourself is “Do I need to fix this?”

Floating-point numbers do not have exact precision, and therefore should not be used in situations where precision is paramount. For applications where the exact precision is necessary, you can use the Decimal class from Python’s decimal module. You’ll learn more about the Decimal class below.

If you have determined that Python’s standard float class is sufficient for your application, some occasional errors in round_half_up() due to floating-point representation error shouldn’t be a concern.

Now that you’ve gotten a taste of how machines round numbers in memory, let’s continue our discussion on rounding strategies by looking at another way to break a tie.

Rounding Half Down

The “rounding half down” strategy rounds to the nearest number with the desired precision, just like the “rounding half up” method, except that it breaks ties by rounding to the lesser of the two numbers. Here are some examples:

| Value | Round Half Down To | Result |

|---|

| 13.825 | Tens place | 10 |

| 13.825 | Ones place | 14 |

| 13.825 | Tenths place | 13.8 |

| 13.825 | Hundredths place | 13.82 |

You can implement the “rounding half down” strategy in Python by replacing math.floor() in the round_half_up() function with math.ceil() and subtracting 0.5 instead of adding:

defround_half_down(n,decimals=0):multiplier=10**decimalsreturnmath.ceil(n*multiplier-0.5)/multiplier

Let’s check round_half_down() against a few test cases:

>>>>>> round_half_down(1.5)1.0>>> round_half_down(-1.5)-2.0>>> round_half_down(2.25,1)2.2

Both round_half_up() and round_half_down() have no bias in general. However, rounding data with lots of ties does introduce a bias. For an extreme example, consider the following list of numbers:

>>>>>> data=[-2.15,1.45,4.35,-12.75]

Let’s compute the mean of these numbers:

>>>>>> statistics.mean(data)-2.275

Next, compute the mean on the data after rounding to one decimal place with round_half_up() and round_half_down():

>>>>>> rhu_data=[round_half_up(n,1)fornindata]>>> statistics.mean(rhu_data)-2.2249999999999996>>> rhd_data=[round_half_down(n,1)fornindata]>>> statistics.mean(rhd_data)-2.325

Every number in data is a tie with respect to rounding to one decimal place. The round_half_up() function introduces a round towards positive infinity bias, and round_half_down() introduces a round towards negative infinity bias.

The remaining rounding strategies we’ll discuss all attempt to mitigate these biases in different ways.

Rounding Half Away From Zero

If you examine round_half_up() and round_half_down() closely, you’ll notice that neither of these functions is symmetric around zero:

>>>>>> round_half_up(1.5)2.0>>> round_half_up(-1.5)-1.0>>> round_half_down(1.5)1.0>>> round_half_down(-1.5)-2.0

One way to introduce symmetry is to always round a tie away from zero. The following table illustrates how this works:

| Value | Round Half Away From Zero To | Result |

|---|

| 15.25 | Tens place | 20 |

| 15.25 | Ones place | 15 |

| 15.25 | Tenths place | 15.3 |

| -15.25 | Tens place | -20 |

| -15.25 | Ones place | -15 |

| -15.25 | Tenths place | -15.3 |

To implement the “rounding half away from zero” strategy on a number n, you start as usual by shifting the decimal point to the right a given number of places. Then you look at the digit d immediately to the right of the decimal place in this new number. At this point, there are four cases to consider:

- If

n is positive and d >= 5, round up - If

n is positive and d < 5, round down - If

n is negative and d >= 5, round down - If

n is negative and d < 5, round up

After rounding according to one of the above four rules, you then shift the decimal place back to the left.

Given a number n and a value for decimals, you could implement this in Python by using round_half_up() and round_half_down():

ifn>=0:rounded=round_half_up(n,decimals)else:rounded=round_half_down(n,decimals)

That’s easy enough, but there’s actually a simpler way!

If you first take the absolute value of n using Python’s built-in abs() function, you can just use round_half_up() to round the number. Then all you need to do is give the rounded number the same sign as n. One way to do this is using the math.copysign() function.

math.copysign() takes two numbers a and b and returns a with the sign of b:

>>>>>> math.copysign(1,-2)-1.0

Notice that math.copysign() returns a float, even though both of its arguments were integers.

Using abs(), round_half_up() and math.copysign(), you can implement the “rounding half away from zero” strategy in just two lines of Python:

defround_half_away_from_zero(n,decimals=0):rounded_abs=round_half_up(abs(n),decimals)returnmath.copysign(rounded_abs,n)

In round_half_away_from_zero(), the absolute value of n is rounded to decimals decimal places using round_half_up() and this result is assigned to the variable rounded_abs. Then the original sign of n is applied to rounded_abs using math.copysign(), and this final value with the correct sign is returned by the function.

Checking round_half_away_from_zero() on a few different values shows that the function behaves as expected:

>>>>>> round_half_away_from_zero(1.5)2.0>>> round_half_away_from_zero(-1.5)-2.0>>> round_half_away_from_zero(-12.75,1)-12.8

The round_half_away_from_zero() function rounds numbers the way most people tend to round numbers in everyday life. Besides being the most familiar rounding function you’ve seen so far, round_half_away_from_zero() also eliminates rounding bias well in datasets that have an equal number of positive and negative ties.

Let’s check how well round_half_away_from_zero() mitigates rounding bias in the example from the previous section:

>>>>>> data=[-2.15,1.45,4.35,-12.75]>>> statistics.mean(data)-2.275>>> rhaz_data=[round_half_away_from_zero(n,1)fornindata]>>> statistics.mean(rhaz_data)-2.2750000000000004

The mean value of the numbers in data is preserved almost exactly when you round each number in data to one decimal place with round_half_away_from_zero()!

However, round_half_away_from_zero() will exhibit a rounding bias when you round every number in datasets with only positive ties, only negative ties, or more ties of one sign than the other. Bias is only mitigated well if there are a similar number of positive and negative ties in the dataset.

How do you handle situations where the number of positive and negative ties are drastically different? The answer to this question brings us full circle to the function that deceived us at the beginning of this article: Python’s built-in round() function.

Rounding Half To Even

One way to mitigate rounding bias when rounding values in a dataset is to round ties to the nearest even number at the desired precision. Here are some examples of how to do that:

| Value | Round Half To Even To | Result |

|---|

| 15.255 | Tens place | 20 |

| 15.255 | Ones place | 15 |

| 15.255 | Tenths place | 15.2 |

| 15.255 | Hundredths place | 15.26 |

The “rounding half to even strategy” is the strategy used by Python’s built-in round() function and is the default rounding rule in the IEEE-754 standard. This strategy works under the assumption that the probabilities of a tie in a dataset being rounded down or rounded up are equal. In practice, this is usually the case.

Now you know why round(2.5) returns 2. It’s not a mistake. It is a conscious design decision based on solid recommendations.

To prove to yourself that round() really does round to even, try it on a few different values:

>>>>>> round(4.5)4>>> round(3.5)4>>> round(1.75,1)1.8>>> round(1.65,1)1.6

The round() function is nearly free from bias, but it isn’t perfect. For example, rounding bias can still be introduced if the majority of the ties in your dataset round up to even instead of rounding down. Strategies that mitigate bias even better than “rounding half to even” do exist, but they are somewhat obscure and only necessary in extreme circumstances.

Finally, round() suffers from the same hiccups that you saw in round_half_up() thanks to floating-point representation error:

>>>>>> # Expected value: 2.68>>> round(2.675,2)2.67

You shouldn’t be concerned with these occasional errors if floating-point precision is sufficient for your application.

When precision is paramount, you should use Python’s Decimal class.

The Decimal Class

Python’s decimal module is one of those “batteries-included” features of the language that you might not be aware of if you’re new to Python. The guiding principle of the decimal module can be found in the documentation:

Decimal “is based on a floating-point model which was designed with people in mind, and necessarily has a paramount guiding principle – computers must provide an arithmetic that works in the same way as the arithmetic that people learn at school.” – excerpt from the decimal arithmetic specification. (Source)

The benefits of the decimal module include:

- Exact decimal representation:

0.1 is actually0.1, and 0.1 + 0.1 + 0.1 - 0.3 returns 0, as you’d expect. - Preservation of significant digits: When you add

1.20 and 2.50, the result is 3.70 with the trailing zero maintained to indicate significance. - User-alterable precision: The default precision of the

decimal module is twenty-eight digits, but this value can be altered by the user to match the problem at hand.

Let’s explore how rounding works in the decimal module. Start by typing the following into a Python REPL:

>>>>>> importdecimal>>> decimal.getcontext()Context( prec=28, rounding=ROUND_HALF_EVEN, Emin=-999999, Emax=999999, capitals=1, clamp=0, flags=[], traps=[ InvalidOperation, DivisionByZero, Overflow ])

decimal.getcontext() returns a Context object representing the default context of the decimal module. The context includes the default precision and the default rounding strategy, among other things.

As you can see in the example above, the default rounding strategy for the decimal module is ROUND_HALF_EVEN. This aligns with the built-in round() function and should be the preferred rounding strategy for most purposes.

Let’s declare a number using the decimal module’s Decimal class. To do so, create a new Decimal instance by passing a string containing the desired value:

>>>>>> fromdecimalimportDecimal>>> Decimal("0.1")Decimal('0.1') Note: It is possible to create a Decimal instance from a floating-point number, but doing so introduces floating-point representation error right off the bat. For example, check out what happens when you create a Decimal instance from the floating-point number 0.1:

>>>>>> Decimal(0.1)Decimal('0.1000000000000000055511151231257827021181583404541015625') In order to maintain exact precision, you must create Decimal instances from strings containing the decimal numbers you need.

Just for fun, let’s test the assertion that Decimal maintains exact decimal representation:

>>>>>> Decimal('0.1')+Decimal('0.1')+Decimal('0.1')Decimal('0.3') Ahhh. That’s satisfying, isn’t it?

Rounding a Decimal is done with the .quantize() method:

>>>>>> Decimal("1.65").quantize(Decimal("1.0"))Decimal('1.6') Okay, that probably looks a little funky, so let’s break that down. The Decimal("1.0") argument in .quantize() determines the number of decimal places to round the number. Since 1.0 has one decimal place, the number 1.65 rounds to a single decimal place. The default rounding strategy is “rounding half to even,” so the result is 1.6.

Recall that the round() function, which also uses the “rounding half to even strategy,” failed to round 2.675 to two decimal places correctly. Instead of 2.68, round(2.675, 2) returns 2.67. Thanks to the decimal modules exact decimal representation, you won’t have this issue with the Decimal class:

>>>>>> Decimal("2.675").quantize(Decimal("1.00"))Decimal('2.68') Another benefit of the decimal module is that rounding after performing arithmetic is taken care of automatically, and significant digits are preserved. To see this in action, let’s change the default precision from twenty-eight digits to two, and then add the numbers 1.23 and 2.32:

>>>>>> decimal.getcontext().prec=2>>> Decimal("1.23")+Decimal("2.32")Decimal('3.6') To change the precision, you call decimal.getcontext() and set the .prec attribute. If setting the attribute on a function call looks odd to you, you can do this because .getcontext() returns a special Context object that represents the current internal context containing the default parameters used by the decimal module.

The exact value of 1.23 plus 2.32 is 3.55. Since the precision is now two digits, and the rounding strategy is set to the default of “rounding half to even,” the value 3.55 is automatically rounded to 3.6.

To change the default rounding strategy, you can set the decimal.getcontect().rounding property to any one of several flags. The following table summarizes these flags and which rounding strategy they implement:

| Flag | Rounding Strategy |

|---|

decimal.ROUND_CEILING | Rounding up |

decimal.ROUND_FLOOR | Rounding down |

decimal.ROUND_DOWN | Truncation |

decimal.ROUND_UP | Rounding away from zero |

decimal.ROUND_HALF_UP | Rounding half away from zero |

decimal.ROUND_HALF_DOWN | Rounding half towards zero |

decimal.ROUND_HALF_EVEN | Rounding half to even |

decimal.ROUND_05UP | Rounding up and rounding towards zero |

The first thing to notice is that the naming scheme used by the decimal module differs from what we agreed to earlier in the article. For example, decimal.ROUND_UP implements the “rounding away from zero” strategy, which actually rounds negative numbers down.

Secondly, some of the rounding strategies mentioned in the table may look unfamiliar since we haven’t discussed them. You’ve already seen how decimal.ROUND_HALF_EVEN works, so let’s take a look at each of the others in action.

The decimal.ROUND_CEILING strategy works just like the round_up() function we defined earlier:

>>>>>> decimal.getcontext().rounding=decimal.ROUND_CEILING>>> Decimal("1.32").quantize(Decimal("1.0"))Decimal('1.4')>>> Decimal("-1.32").quantize(Decimal("1.0"))Decimal('-1.3') Notice that the results of decimal.ROUND_CEILING are not symmetric around zero.

The decimal.ROUND_FLOOR strategy works just like our round_down() function:

>>>>>> decimal.getcontext().rounding=decimal.ROUND_FLOOR>>> Decimal("1.32").quantize(Decimal("1.0"))Decimal('1.3')>>> Decimal("-1.32").quantize(Decimal("1.0"))Decimal('-1.4') Like decimal.ROUND_CEILING, the decimal.ROUND_FLOOR strategy is not symmetric around zero.

The decimal.ROUND_DOWN and decimal.ROUND_UP strategies have somewhat deceptive names. Both ROUND_DOWN and ROUND_UP are symmetric around zero:

>>>>>> decimal.getcontext().rounding=decimal.ROUND_DOWN>>> Decimal("1.32").quantize(Decimal("1.0"))Decimal('1.3')>>> Decimal("-1.32").quantize(Decimal("1.0"))Decimal('-1.3')>>> decimal.getcontext().rounding=decimal.ROUND_UP>>> Decimal("1.32").quantize(Decimal("1.0"))Decimal('1.4')>>> Decimal("-1.32").quantize(Decimal("1.0"))Decimal('-1.4') The decimal.ROUND_DOWN strategy rounds numbers towards zero, just like the truncate() function. On the other hand, decimal.ROUND_UP rounds everything away from zero. This is a clear break from the terminology we agreed to earlier in the article, so keep that in mind when you are working with the decimal module.

There are three strategies in the decimal module that allow for more nuanced rounding. The decimal.ROUND_HALF_UP method rounds everything to the nearest number and breaks ties by rounding away from zero:

>>>>>> decimal.getcontext().rounding=decimal.ROUND_HALF_UP>>> Decimal("1.35").quantize(Decimal("1.0"))Decimal('1.4')>>> Decimal("-1.35").quantize(Decimal("1.0"))Decimal('-1.4') Notice that decimal.ROUND_HALF_UP works just like our round_half_away_from_zero() and not like round_half_up().

There is also a decimal.ROUND_HALF_DOWN strategy that breaks ties by rounding towards zero:

>>>>>> decimal.getcontext().rounding=decimal.ROUND_HALF_DOWN>>> Decimal("1.35").quantize(Decimal("1.0"))Decimal('1.3')>>> Decimal("-1.35").quantize(Decimal("1.0"))Decimal('-1.3') The final rounding strategy available in the decimal module is very different from anything we have seen so far:

>>>>>> decimal.getcontext().rounding=decimal.ROUND_05UP>>> Decimal("1.38").quantize(Decimal("1.0"))Decimal('1.3')>>> Decimal("1.35").quantize(Decimal("1.0"))Decimal('1.3')>>> Decimal("-1.35").quantize(Decimal("1.0"))Decimal('-1.3') In the above examples, it looks as if decimal.ROUND_05UP rounds everything towards zero. In fact, this is exactly how decimal.ROUND_05UP works, unless the result of rounding ends in a 0 or 5. In that case, the number gets rounded away from zero:

>>>>>> Decimal("1.49").quantize(Decimal("1.0"))Decimal('1.4')>>> Decimal("1.51").quantize(Decimal("1.0"))Decimal('1.6') In the first example, the number 1.49 is first rounded towards zero in the second decimal place, producing 1.4. Since 1.4 does not end in a 0 or a 5, it is left as is. On the other hand, 1.51 is rounded towards zero in the second decimal place, resulting in the number 1.5. This ends in a 5, so the first decimal place is then rounded away from zero to 1.6.

In this section, we have only focused on the rounding aspects of the decimal module. There are a large number of other features that make decimal an excellent choice for applications where the standard floating-point precision is inadequate, such as banking and some problems in scientific computing.

For more information on Decimal, check out the Quick-start Tutorial in the Python docs.

Next, let’s turn our attention to two staples of Python’s scientific computing and data science stacks: NumPy and Pandas.

Rounding NumPy Arrays

In the domains of data science and scientific computing, you often store your data as a NumPy array. One of NumPy’s most powerful features is its use of vectorization and broadcasting to apply operations to an entire array at once instead of one element at a time.

Let’s generate some data by creating a 3×4 NumPy array of pseudo-random numbers:

>>>>>> importnumpyasnp>>> np.random.seed(444)>>> data=np.random.randn(3,4)>>> dataarray([[ 0.35743992, 0.3775384 , 1.38233789, 1.17554883], [-0.9392757 , -1.14315015, -0.54243951, -0.54870808], [ 0.20851975, 0.21268956, 1.26802054, -0.80730293]])

First, we seed the np.random module so that you can easily reproduce the output. Then a 3×4 NumPy array of floating-point numbers is created with np.random.randn().

To round all of the values in the data array, you can pass data as the argument to the np.around() function. The desired number of decimal places is set with the decimals keyword argument. The round half to even strategy is used, just like Python’s built-in round() function.

For example, the following rounds all of the values in data to three decimal places:

>>>>>> np.around(data,decimals=3)array([[ 0.357, 0.378, 1.382, 1.176], [-0.939, -1.143, -0.542, -0.549], [ 0.209, 0.213, 1.268, -0.807]])

np.around() is at the mercy of floating-point representation error, just like round() is.

For example, the value in the third row of the first column in the data array is 0.20851975. When you round this to three decimal places using the “rounding half to even” strategy, you expect the value to be 0.208. But you can see in the output from np.around() that the value is rounded to 0.209. However, the value 0.3775384 in the first row of the second column rounds correctly to 0.378.

If you need to round the data in your array to integers, NumPy offers several options:

The np.ceil() function rounds every value in the array to the nearest integer greater than or equal to the original value:

>>>>>> np.ceil(data)array([[ 1., 1., 2., 2.], [-0., -1., -0., -0.], [ 1., 1., 2., -0.]])

Hey, we discovered a new number! Negative zero!

Actually, the IEEE-754 standard requires the implementation of both a positive and negative zero. What possible use is there for something like this? Wikipedia knows the answer:

Informally, one may use the notation “−0” for a negative value that was rounded to zero. This notation may be useful when a negative sign is significant; for example, when tabulating Celsius temperatures, where a negative sign means below freezing. (Source)

To round every value down to the nearest integer, use np.floor():

>>>>>> np.floor(data)array([[ 0., 0., 1., 1.], [-1., -2., -1., -1.], [ 0., 0., 1., -1.]])

You can also truncate each value to its integer component with np.trunc():

>>>>>> np.trunc(data)array([[ 0., 0., 1., 1.], [-0., -1., -0., -0.], [ 0., 0., 1., -0.]])

Finally, to round to the nearest integer using the “rounding half to even” strategy, use np.rint():

>>>>>> np.rint(data)array([[ 0., 0., 1., 1.], [-1., -1., -1., -1.], [ 0., 0., 1., -1.]])

You might have noticed that a lot of the rounding strategies we discussed earlier are missing here. For the vast majority of situations, the around() function is all you need. If you need to implement another strategy, such as round_half_up(), you can do so with a simple modification:

defround_half_up(n,decimals=0):multiplier=10**decimals# Replace math.floor with np.floorreturnnp.floor(n*multiplier+0.5)/multiplier

Thanks to NumPy’s vectorized operations, this works just as you expect:

>>>>>> round_half_up(data,decimals=2)array([[ 0.36, 0.38, 1.38, 1.18], [-0.94, -1.14, -0.54, -0.55], [ 0.21, 0.21, 1.27, -0.81]])

Now that you’re a NumPy rounding master, let’s take a look at Python’s other data science heavy-weight: the Pandas library.

Rounding Pandas Series and DataFrame

The Pandas library has become a staple for data scientists and data analysts who work in Python. In the words of Real Python’s own Joe Wyndham:

Pandas is a game-changer for data science and analytics, particularly if you came to Python because you were searching for something more powerful than Excel and VBA. (Source)

Note: Before you continue, you’ll need to pip3 install pandas if you don’t already have it in your environment. As was the case for NumPy, if you installed Python with Anaconda, you should be ready to go!

The two main Pandas data structures are the DataFrame, which in very loose terms works sort of like an Excel spreadsheet, and the Series, which you can think of as a column in a spreadsheet. Both Series and DataFrame objects can also be rounded efficiently using the Series.round() and DataFrame.round() methods:

>>>>>> importpandasaspd>>> # Re-seed np.random if you closed your REPL since the last example>>> np.random.seed(444)>>> series=pd.Series(np.random.randn(4))>>> series0 0.3574401 0.3775382 1.3823383 1.175549dtype: float64>>> series.round(2)0 0.361 0.382 1.383 1.18dtype: float64>>> df=pd.DataFrame(np.random.randn(3,3),columns=["A","B","C"])>>> df A B C0 -0.939276 -1.143150 -0.5424401 -0.548708 0.208520 0.2126902 1.268021 -0.807303 -3.303072>>> df.round(3) A B C0 -0.939 -1.143 -0.5421 -0.549 0.209 0.2132 1.268 -0.807 -3.303

The DataFrame.round() method can also accept a dictionary or a Series, to specify a different precision for each column. For instance, the following examples show how to round the first column of df to one decimal place, the second to two, and the third to three decimal places:

>>>>>> # Specify column-by-column precision with a dictionary>>> df.round({"A":1,"B":2,"C":3}) A B C0 -0.9 -1.14 -0.5421 -0.5 0.21 0.2132 1.3 -0.81 -3.303>>> # Specify column-by-column precision with a Series>>> decimals=pd.Series([1,2,3],index=["A","B","C"])>>> df.round(decimals) A B C0 -0.9 -1.14 -0.5421 -0.5 0.21 0.2132 1.3 -0.81 -3.303 If you need more rounding flexibility, you can apply NumPy’s floor(), ceil(), and rint() functions to Pandas Series and DataFrame objects:

>>>>>> np.floor(df) A B C0 -1.0 -2.0 -1.01 -1.0 0.0 0.02 1.0 -1.0 -4.0>>> np.ceil(df) A B C0 -0.0 -1.0 -0.01 -0.0 1.0 1.02 2.0 -0.0 -3.0>>> np.rint(df) A B C0 -1.0 -1.0 -1.01 -1.0 0.0 0.02 1.0 -1.0 -3.0

The modified round_half_up() function from the previous section will also work here:

>>>>>> round_half_up(df,decimals=2) A B C0 -0.94 -1.14 -0.541 -0.55 0.21 0.212 1.27 -0.81 -3.30

Congratulations, you’re well on your way to rounding mastery! You now know that there are more ways to round a number than there are taco combinations. (Well… maybe not!) You can implement numerous rounding strategies in pure Python, and you have sharpened your skills on rounding NumPy arrays and Pandas Series and DataFrame objects.

There’s just one more step: knowing when to apply the right strategy.

Applications and Best Practices

The last stretch on your road to rounding virtuosity is understanding when to apply your newfound knowledge. In this section, you’ll learn some best practices to make sure you round your numbers the right way.

Store More and Round Late

When you deal with large sets of data, storage can be an issue. In most relational databases, each column in a table is designed to store a specific data type, and numeric data types are often assigned precision to help conserve memory.

For example, a temperature sensor may report the temperature in a long-running industrial oven every ten seconds accurate to eight decimal places. The readings from this are used to detect abnormal fluctuations in temperature that could indicate the failure of a heating element or some other component. So, there might be a Python script running that compares each incoming reading to the last to check for large fluctuations.

The readings from this sensor are also stored in a SQL database so that the daily average temperature inside the oven can be computed each day at midnight. The manufacturer of the heating element inside the oven recommends replacing the component whenever the daily average temperature drops .05 degrees below normal.

For this calculation, you only need three decimal places of precision. But you know from the incident at the Vancouver Stock Exchange that removing too much precision can drastically affect your calculation.

If you have the space available, you should store the data at full precision. If storage is an issue, a good rule of thumb is to store at least two or three more decimal places of precision than you need for your calculation.

Finally, when you compute the daily average temperature, you should calculate it to the full precision available and round the final answer.

Obey Local Currency Regulations

When you order a cup of coffee for $2.40 at the coffee shop, the merchant typically adds a required tax. The amount of that tax depends a lot on where you are geographically, but for the sake of argument, let’s say it’s 6%. The tax to be added comes out to $0.144. Should you round this up to $0.15 or down to $0.14? The answer probably depends on the regulations set forth by the local government!

Situations like this can also arise when you are converting one currency to another. In 1999, the European Commission on Economical and Financial Affairs codified the use of the “rounding half away from zero” strategy when converting currencies to the Euro, but other currencies may have adopted different regulations.

Another scenario, “Swedish rounding”, occurs when the minimum unit of currency at the accounting level in a country is smaller than the lowest unit of physical currency. For example, if a cup of coffee costs $2.54 after tax, but there are no 1-cent coins in circulation, what do you do? The buyer won’t have the exact amount, and the merchant can’t make exact change.

How situations like this are handled is typically determined by a country’s government. You can find a list of rounding methods used by various countries on Wikipedia.

If you are designing software for calculating currencies, you should always check the local laws and regulations in your users’ locations.

When In Doubt, Round Ties To Even

When you are rounding numbers in large datasets that are used in complex computations, the primary concern is limiting the growth of the error due to rounding.

Of all the methods we’ve discussed in this article, the “rounding half to even” strategy minimizes rounding bias the best. Fortunately, Python, NumPy, and Pandas all default to this strategy, so by using the built-in rounding functions you’re already well protected!

Summary

Whew! What a journey this has been!

In this article, you learned that:

There are various rounding strategies, which you now know how to implement in pure Python.

Every rounding strategy inherently introduces a rounding bias, and the “rounding half to even” strategy mitigates this bias well, most of the time.

The way in which computers store floating-point numbers in memory naturally introduces a subtle rounding error, but you learned how to work around this with the decimal module in Python’s standard library.

You can round NumPy arrays and Pandas Series and DataFrame objects.

There are best practices for rounding with real-world data.

Take the Quiz: Test your knowledge with our interactive “Rounding Numbers in Python” quiz. Upon completion you will receive a score so you can track your learning progress over time.

Click here to start the quiz »

If you are interested in learning more and digging into the nitty-gritty details of everything we’ve covered, the links below should keep you busy for quite a while.

At the very least, if you’ve enjoyed this article and learned something new from it, pass it on to a friend or team member! Be sure to share your thoughts with us in the comments. We’d love to hear some of your own rounding-related battle stories!

Happy Pythoning!

Additional Resources

Rounding strategies and bias:

Floating-point and decimal specifications:

Interesting Reads:

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]