Codementor: A simple timer using Asyncio.

Codementor: How to scrape data from a website using Python

Catalin George Festila: Windows - test Django version 2.1.1.

Add Python to the PATH environment variable on Windows.

Create your working folder:

C:\Python364>mkdir mywebsite

C:\Python364>cd mywebsite

C:\Python364\mywebsite>python -m venv myvenv

C:\Python364\mywebsite>myvenv\Scripts\activate

(myvenv) C:\Python364\mywebsite>python -m pip install --upgrade pip

(myvenv) C:\Python364\mywebsite>pip3.6 install django

Collecting django

...

(myvenv) C:\Python364\mywebsite>pip3.6 install django

Requirement already satisfied: django in c:\python364\mywebsite\myvenv\lib\site-packages (2.1.1)

Requirement already satisfied: pytz in c:\python364\mywebsite\myvenv\lib\site-packages (from django) (2018.5)

(myvenv) C:\Python364\mywebsite>cd myvenv

(myvenv) C:\Python364\mywebsite\myvenv>cd Scripts

(myvenv) C:\Python364\mywebsite\myvenv\Scripts>django-admin.exe startproject mysite

(myvenv) C:\Python364\mywebsite\myvenv\Scripts>dir my*

(myvenv) C:\Python364\mywebsite\myvenv\Scripts>cd mysite

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>cd mysite

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\mysite>notepad settings.py

TIME_ZONE = 'Europe/Paris'

ALLOWED_HOSTS = ['192.168.0.185','mysite.com']

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\mysite>cd ..

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py migrate

Operations to perform:

Apply all migrations: admin, auth, contenttypes, sessions

Running migrations:

Applying contenttypes.0001_initial... OK

Applying auth.0001_initial... OK

Applying admin.0001_initial... OK

Applying admin.0002_logentry_remove_auto_add... OK

Applying admin.0003_logentry_add_action_flag_choices... OK

Applying contenttypes.0002_remove_content_type_name... OK

Applying auth.0002_alter_permission_name_max_length... OK

Applying auth.0003_alter_user_email_max_length... OK

Applying auth.0004_alter_user_username_opts... OK

Applying auth.0005_alter_user_last_login_null... OK

Applying auth.0006_require_contenttypes_0002... OK

Applying auth.0007_alter_validators_add_error_messages... OK

Applying auth.0008_alter_user_username_max_length... OK

Applying auth.0009_alter_user_last_name_max_length... OK

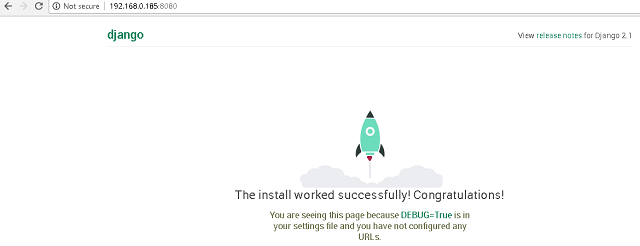

Applying sessions.0001_initial... OK

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py runserver 192.168.0.185:8080

Performing system checks...

System check identified no issues (0 silenced).

September 07, 2018 - 16:30:13

Django version 2.1.1, using settings 'mysite.settings'

Starting development server at http://192.168.0.185:8080/

Quit the server with CTRL-BREAK.

[07/Sep/2018 16:30:16] "GET / HTTP/1.1" 200 16348

[07/Sep/2018 16:30:21] "GET / HTTP/1.1" 200 16348

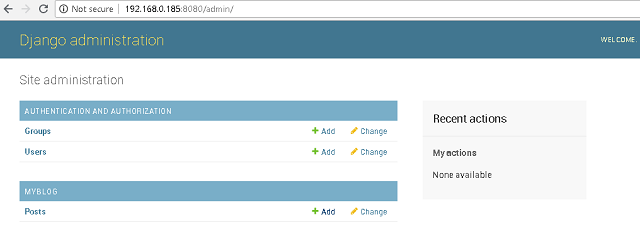

Let's start a Django application named myblog and add it to settings.py:

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py startapp myblog

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>dir

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>cd mysite

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\mysite>notepad settings.py

    # Application definition
    INSTALLED_APPS = [
        'django.contrib.admin',
        'django.contrib.auth',
        'django.contrib.contenttypes',
        'django.contrib.sessions',
        'django.contrib.messages',
        'django.contrib.staticfiles',
        'myblog',
    ]

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\mysite>cd ..

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>cd myblog

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\myblog>notepad models.py

    from django.db import models
    from django.utils import timezone
    from django.contrib.auth.models import User

    # Create your models here.
    class Post(models.Model):
        author = models.ForeignKey(User, on_delete=models.PROTECT)
        title = models.CharField(max_length=200)
        text = models.TextField()
        create_date = models.DateTimeField(default=timezone.now)
        published_date = models.DateTimeField(blank=True, null=True)

        def publish(self):
            # Stamp the post with the current time and persist it.
            self.published_date = timezone.now()
            self.save()

        def __str__(self):
            return self.title

Create and apply the migrations for myblog:

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\myblog>cd ..

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py makemigrations myblog

Migrations for 'myblog':

myblog\migrations\0001_initial.py

- Create model Post

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py migrate myblog

Operations to perform:

Apply all migrations: myblog

Running migrations:

Applying myblog.0001_initial... OK

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>cd myblog

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\myblog>notepad admin.py

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite\myblog>cd ..

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py runserver 192.168.0.185:8080

Performing system checks...

System check identified no issues (0 silenced).

September 07, 2018 - 17:19:00

Django version 2.1.1, using settings 'mysite.settings'

Starting development server at http://192.168.0.185:8080/

Quit the server with CTRL-BREAK.

If you see some errors, they will be fixed later.

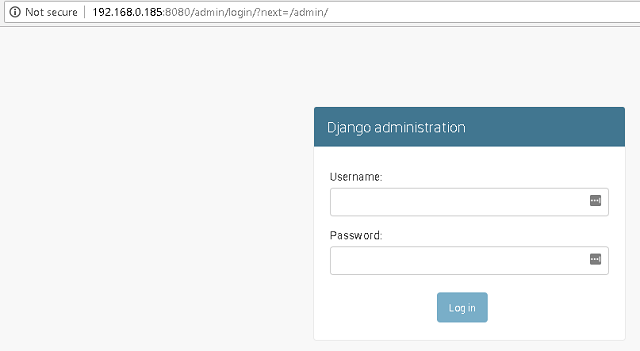

Let's create a superuser with this command:

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py createsuperuser

Username (leave blank to use 'catafest'): catafest

Email address: catafest@yahoo.com

Password:

Password (again):

This password is too short. It must contain at least 8 characters.

Bypass password validation and create user anyway? [y/N]: y

Superuser created successfully.

(myvenv) C:\Python364\mywebsite\myvenv\Scripts\mysite>python manage.py runserver 192.168.0.185:8080

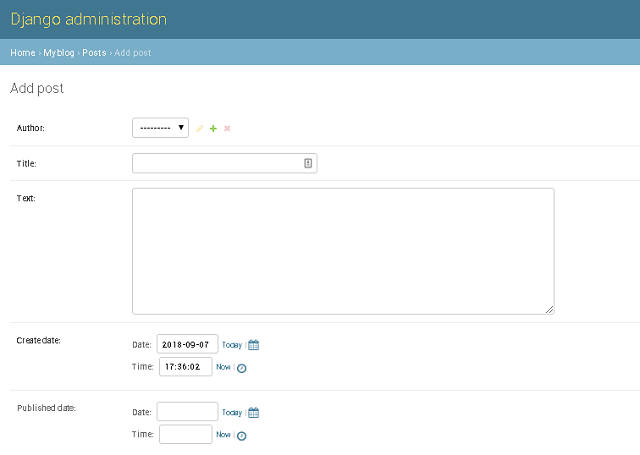

Click the Add button to add your post.

The result is this:

I did not configure any URLs or views here. That part is left to the reader.

Corey Goldberg: Python - Using Chrome Extensions With Selenium WebDriver

Continuum Analytics Blog: TensorFlow in Anaconda

By Jonathan Helmus

TensorFlow is a Python library for high-performance numerical calculations that allows users to create sophisticated deep learning and machine learning applications. Released as open source software in 2015, TensorFlow has seen tremendous growth and popularity in the data science community. There are a number of methods that can be used to install …

The post TensorFlow in Anaconda appeared first on Anaconda.

NumFOCUS: Public Institutions and Open Source Software (Matthew Rocklin guest blog)

The post Public Institutions and Open Source Software (Matthew Rocklin guest blog) appeared first on NumFOCUS.

Codementor: 2 Ways to make Celery 4 run on Windows

Yasoob Khalid: Sending & Sniffing WLAN Beacon Frames using Scapy

Hi everyone! Over the summer I did research on Wi-Fi, and part of my research involved sending and receiving different kinds of IEEE 802.11 packets. I did most of this using Scapy, so I thought: why not create a tutorial about it? When I started my research I had to look through multiple online articles and tutorials to get started with Scapy. To save you time, I am going to bring you up to speed with a short tutorial.

Goal: Our end goal is to create two scripts. The first script will send WLAN beacon frames and the second script will intercept those beacon frames. Both of these scripts will be running on two different systems. We want to be able to send beacon frames from one system and intercept them from the second one.

For those of you who don’t know what a beacon frame is (Wikipedia):

Beacon frame is one of the management frames in IEEE 802.11 based WLANs. It contains all the information about the network. Beacon frames are transmitted periodically, they serve to announce the presence of a wireless LAN and to synchronise the members of the service set. Beacon frames are transmitted by the access point(AP) in an infrastructure basic service set (BSS). In IBSS network beacon generation is distributed among the stations.

Sending Beacon frames:

First of all we import all of the required modules and then define some variables. Modify the sender address to the MAC address of your WiFi card and change the iface to your wireless interface name (common variants are wlan0 and mon0). Scapy works with “layers” so we then define the Dot11 layer with some parameters. The type parameter = 0 simply means that this is a management frame and the subtype = 8 means that this is a beacon frame. Addr1 is the receiver address. In our case this is going to be a broadcast (‘ff:ff:ff:ff:ff:ff’ is the broadcast MAC address) because we aren’t sending the beacon to a specific device. Then we create a Dot11Beacon layer. Most of the time we don’t need to pass any parameters to a layer because Scapy has sane defaults which work most of the time. After that we create a Dot11Elt layer which takes the SSID of the fake access point as an input along with some other SSID related inputs. SSID is the name of the network which you see while scanning for WiFi on other devices. Lastly we stack the layers in order and add a RadioTap layer at the bottom. Now that the layers are all set-up we just need to send the frame and the sendp function does exactly that.
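To make the layering concrete without depending on Scapy, here is a rough, standard-library-only sketch of the bytes that the Dot11, Dot11Beacon and Dot11Elt layers described above stand for. It is not the script from this tutorial: the function names, the sample SSID and MAC address, the 100 TU beacon interval and the choice of reusing the sender address as addr3 are all assumptions made for illustration, and it only builds the frame rather than transmitting it (for that you would still need a RadioTap header and something like Scapy's sendp).

```python
import struct

BROADCAST = "ff:ff:ff:ff:ff:ff"


def mac_to_bytes(mac):
    """Turn 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def build_beacon(ssid, sender, receiver=BROADCAST):
    """Pack an 802.11 beacon frame (no RadioTap header) as raw bytes."""
    frame_type, subtype = 0, 8  # management frame, beacon
    # First frame-control byte: protocol version 0, then type and subtype bits.
    frame_control = (subtype << 4) | (frame_type << 2)
    header = struct.pack(
        "<BBH6s6s6sH",
        frame_control,
        0,                       # frame-control flags
        0,                       # duration
        mac_to_bytes(receiver),  # addr1: receiver (broadcast by default)
        mac_to_bytes(sender),    # addr2: sender
        mac_to_bytes(sender),    # addr3: assumed equal to the sender here
        0,                       # sequence control
    )
    # Fixed beacon fields: timestamp, beacon interval (assumed 100 TU),
    # capability info with only the ESS bit set.
    fixed = struct.pack("<QHH", 0, 100, 0x0001)
    name = ssid.encode("utf-8")
    # Tagged element: ID 0 means SSID, followed by its length and the name.
    ssid_element = struct.pack("<BB", 0, len(name)) + name
    return header + fixed + ssid_element


frame = build_beacon("testSSID", "22:22:22:22:22:22")
print(len(frame), frame[:2].hex())
```

The first byte comes out as 0x80, which is exactly the type = 0, subtype = 8 combination mentioned above.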

Sniffing Beacon Frames

The sniffing part is pretty similar to the sending part. The code is as follows:

Firstly, we import the Dot11 layer and the sniff function. We create a packet filter function which takes a packet as an input. Then it checks whether the packet has a Dot11 layer or not. Then it checks if it is a beacon or not (type & subtype). Lastly, it adds it to the ap_list list and prints out the MAC address and the SSID on screen.
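The filter logic described above can be sketched in plain Python on raw frame bytes. This is an illustration only, not the tutorial's Scapy code: handle_frame, format_mac and the hand-built sample frame are hypothetical, and the sketch assumes the frame arrives without its RadioTap header.

```python
ap_list = []  # sender MAC addresses that were already reported


def format_mac(raw):
    """Render 6 raw bytes as 'aa:bb:cc:dd:ee:ff'."""
    return ":".join(f"{byte:02x}" for byte in raw)


def handle_frame(frame):
    """Report a beacon's sender MAC and SSID the first time it is seen.

    Returns (mac, ssid) for a new beacon, otherwise None.
    """
    if len(frame) < 36:  # 24-byte header plus 12 bytes of fixed beacon fields
        return None
    frame_type = (frame[0] >> 2) & 0x3
    subtype = (frame[0] >> 4) & 0xF
    if frame_type != 0 or subtype != 8:  # not a management beacon
        return None
    mac = format_mac(frame[10:16])  # addr2, the sender
    if mac in ap_list:
        return None
    # Walk the tagged elements until the SSID element (ID 0) is found.
    ssid = ""
    pos = 36
    while pos + 2 <= len(frame):
        element_id, length = frame[pos], frame[pos + 1]
        if element_id == 0:
            ssid = frame[pos + 2:pos + 2 + length].decode("utf-8", errors="replace")
            break
        pos += 2 + length
    ap_list.append(mac)
    print(f"AP MAC: {mac} with SSID: {ssid}")
    return mac, ssid


# Hand-built sample beacon (assumed values) to exercise the handler.
sample = (
    bytes([0x80, 0x00, 0x00, 0x00])         # frame control + duration
    + b"\xff" * 6                           # addr1: broadcast
    + b"\x22" * 6                           # addr2: sender
    + b"\x22" * 6                           # addr3
    + b"\x00\x00"                           # sequence control
    + bytes(8) + b"\x64\x00" + b"\x01\x00"  # timestamp, interval, capabilities
    + b"\x00\x08testSSID"                   # SSID element
)
first = handle_frame(sample)
second = handle_frame(sample)  # same sender again, so it is ignored
```

Keeping the seen addresses in ap_list is what stops the same access point from being printed on every beacon it sends.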

I hope you all found this short walk-through helpful. I love how powerful Scapy is. We have only scratched the surface in this post. You can do all sorts of packet manipulation stuff in Scapy. The official docs are really good and the WLAN BY GERMAN ENGINEERING blog is also super helpful.

See you next time!

Nikola: Nikola v8.0.0rc1 is out!

On behalf of the Nikola team, I am pleased to announce the immediate availability of Nikola v8.0.0rc1. This is the end of the road to Nikola version 8. Within 48 hours, assuming there are no grave bugs reported in that timeframe, we intend to release v8 final.

What is Nikola?

Nikola is a static site and blog generator, written in Python. It can use Mako and Jinja2 templates, and input in many popular markup formats, such as reStructuredText and Markdown — and can even turn Jupyter Notebooks into blog posts! It also supports image galleries, and is multilingual. Nikola is flexible, and page builds are extremely fast, courtesy of doit (which is rebuilding only what has been changed).

Find out more at the website: https://getnikola.com/

Downloads

Install using pip install Nikola==8.0.0rc1.

If you want to upgrade to Nikola v8, make sure to read the Upgrading blog post.

Changes

Features

- Add Vietnamese translation by Hoai-Thu Vuong

- Don’t generate gallery index if the destination directory is site root and it would conflict with blog index (Issue #3133)

- All built-in themes now support updated timestamp fields in posts. The update time, if it is specified and different from the posting time, will be displayed as "{postDate} (${messages("updated")} {updateDate})". If no update time is specified, the posting time will be displayed alone.

- All built-in themes now support the DATE_FANCINESS option.

- Theme bundles are now parsed using the configparser module and can support newlines inside entries as well as comments

- Make bootstrap4 navbar color configurable with THEME_CONFIG['navbar_light'] (Issue #2863)

Bugfixes

- Use UTF-8 instead of system encoding for gallery metadata.yml file

- Do not remove first heading in document (reST document title) if USE_REST_DOCINFO_METADATA is disabled (Issue #3124)

- Remove NO_DOCUTILS_TITLE_TRANSFORM setting, this is now default behavior if USE_REST_DOCINFO_METADATA is disabled (Issue #2382, #3124)

- Enforce trailing slash for directories in nikola auto (Issue #3140)

- Galleries with baguetteBox don’t glitch out on the first image anymore (Issue #3141)

Removed features

- Moved tag_cloud_data.json generation to a separate tagcloud plugin (Issue #1696)

- The webassets library is no longer required, we now manually bundle files (Issue #3074)

Weekly Python StackOverflow Report: (cxlii) stackoverflow python report

Between brackets: [question score / answers count]

Build date: 2018-09-08 19:57:14 GMT

- Converting list of lists into a dictionary of dictionaries in Python - [13/5]

- Back and forth loop Python - [10/8]

- When and how should I use super? - [9/1]

- Why are properties class attributes in Python? - [6/5]

- Manipulate pandas dataframe to display desired output - [6/5]

- Why is python dict creation from list of tuples 3x slower than from kwargs - [6/3]

- Get all possible str partitions of any length - [6/3]

- Insert element into numpy array and get all rolled permutations - [6/3]

- Unable to click on signs on a map - [6/2]

- Generate a list a(n) is not of the form prime + a(k), k < n - [6/2]

Reuven Lerner: Announcing: Weekly Python Exercise, Autumn 2018 cohort

Just about every day for the last decade, I’ve taught Python to developers at companies around the world. And if there’s anything that those developers want, it’s to improve their Python fluency.

Being a more fluent Python developer doesn’t only mean being able to solve problems faster and better — although these are nice benefits, for sure!

Being a more fluent Python developer means that you can solve bigger, more complex problems. That’s not only worth something to you, but to your employer, as well.

Developers know this, and are always asking me how they can improve their skills once my courses are over.

My solution is Weekly Python Exercise, a year-long course in which you get to improve your existing Python skills, and learn new ones, as you solve a new exercise each week. Because learning is always more effective with other people, WPE students can use our private forum to discuss solutions, collaborate on the best strategies, and even (much as I hate to admit it) tell me when my solution could have been better.

Oh, and there are live, monthly office hours as well, when you can ask me questions about the exercises. Or Python in general. Or anything, really. It’s your chance to pick my brain, in real time.

If you are tired of searching Stack Overflow every time you start a new Python project, and want to become a more fluent developer, then Weekly Python Exercise is for you.

Note that it’s not a course for beginners! WPE is meant for people who have already learned Python, and are using it, but want to gain fluency. We’ll be dealing with all sorts of advanced topics, too — from inner functions to generators to decorators to object-oriented techniques.

Sound good? Learn more at https://WeeklyPythonExercise.com/. And if you have any questions, then don’t hesitate to e-mail me at reuven@lerner.co.il. I’ll be delighted to answer your question!

The post Announcing: Weekly Python Exercise, Autumn 2018 cohort appeared first on Lerner Consulting Blog.

Filipe Saraiva: Akademy 2018

Look for your favorite KDE contributor at Akademy 2018 Group Photo

This year I was in Vienna to attend Akademy 2018, the annual KDE world summit. It was my fourth Akademy, after Berlin’2012 (in fact, Desktop Summit), Brno’2014, and Berlin’2016 (together with QtCon). Interestingly, I go to Akademy every 2 years; let’s try to improve on that next year.

After a really long trip I met gearheads from all parts of the world, including Brazil: old and new friends working together to improve the free software computing experience, each one contributing a small (and some, a giant) part to make this dream a reality. Whenever I meet these people I feel recharged to continue my work on this dream.

I loved Volker's talk about KDE Itinerary, a new application to manage passbook files related to travel. I think an app to manage all kinds of passbook files (like tickets for concerts, theaters, and more) could be an interesting addition to the KDE software family, and a step behind KDE Itinerary. Anyway, I am waiting for news about this software.

The talk about creating transitions in Kdenlive made me think about how interesting a plugin to run bash (or maybe Python) could be, in order to automate several steps of the work editors perform in this software. Maybe use the KDE Store to share it… well, ideas.

I met Camilo during the event. His talk about vvave gave me hope for a new and great audio player application for our family, as we had in the past.

The last talk that caught my attention was Nate's, about some ideas to improve our ecosystem. Nate is doing great work on the usability side, polishing our software in several ways. I recommend his blog to follow this work. Although I agree in general (for instance, we need to improve our PIM suite), I disagree with some points, like the idea of KDE developers contributing to LibreOffice. LibreOffice is a completely different codebase with different technologies and practices, there are several (and, for most of us, unmapped) influences from different organizations inside the LibreOffice Foundation directing the development of the suite, and finally we have Calligra Suite; the idea sounded like “let’s drop Calligra”. But it was just a disagreement with a small suggestion, nothing more.

After the talk sessions, Akademy had plenty of BoF sessions for all kinds of preferences. I attended KDE and Qt (it is always interesting to keep an eye on this topic), KDE Phabricator (Phabricator is an amazing set of tools but suffers because its competitors are much better known, and its usability must be improved), and MyCroft (I like the idea of integration between MyCroft and Plasma, especially for specific use cases like an assistant for disabled people; I have been thinking about it for some months).

This year Aracele, Sandro and I hosted a BoF titled “KDE in Americas”. The idea was to present some of our achievements for KDE in South America and to discuss with fellows from Central and North America a “continental” event, bringing back the old CampKDE in a new edition together with LaKademy (the secret name is LaKamp :D). This idea still needs to mature.

This year I tried to host a BoF about KDE in Science and Cantor, but unfortunately I got no feedback from potential attendees. Let’s see if in the future we can host some of them.

Akademy is an amazing event where you can meet KDE contributors from different tasks and cultures, talk with them, get feedback on current projects and build new ones. I would like to thank KDE e.V. for the sponsorship, and I expect to see you again next year (let’s try to insert a disturbance in this biennial sequence) at Akademy or, in a few weeks, at LaKademy!

Brazilians at Akademy 2018: KDHelio, Caio, Sandro, Filipe (me), Tomaz (below), Eliakin, Aracele, and Lays

PyPy Development: The First 15 Years of PyPy — a Personal Retrospective

A few weeks ago I (=Carl Friedrich Bolz-Tereick) gave a keynote at ICOOOLPS in Amsterdam with the above title. I was very happy to have been given that opportunity, since a number of our papers have been published at ICOOOLPS, including the very first one I published when I'd just started my PhD. I decided to turn the talk manuscript into a (longish) blog post, to make it available to a wider audience. Note that this blog post describes my personal recollections and research, it is thus necessarily incomplete and coloured by my own experiences.

PyPy has turned 15 years old this year, so I decided that that's a good reason to dig into and talk about the history of the project so far. I'm going to do that using the lens of how performance developed over time, which is from something like 2000x slower than CPython, to roughly 7x faster. In this post I am going to present the history of the project, and also talk about some lessons that we learned.

The post does not make too many assumptions about any prior knowledge of what PyPy is, so if this is your first interaction with it, welcome! I have tried to sprinkle links to earlier blog posts and papers into the writing, in case you want to dive deeper into some of the topics.

As a disclaimer, in this post I am going to mostly focus on ideas, and not explain who had or implemented them. A huge number of people contributed to the design, the implementation, the funding and the organization of PyPy over the years, and it would be impossible to do them all justice.

2003: Starting the Project

On the technical level PyPy is a Python interpreter written in Python, which is where the name comes from. It also has an automatically generated JIT compiler, but I'm going to introduce that gradually over the rest of the blog post, so let's not worry about it too much yet. On the social level PyPy is an interesting mixture of an open source project that sometimes had research done in it.

The project got started in late 2002 and early 2003. To set the stage, at that point Python was a significantly less popular language than it is today. Python 2.2 was the current version at the time; Python didn't even have a bool type yet.

In fall 2002 the PyPy project was started by a number of Python programmers on a mailing list who said something like (I am exaggerating somewhat) "Python is the greatest most wonderful most perfect language ever, we should use it for absolutely everything. Well, what aren't we using it for? The Python virtual machine itself is written in C, that's bad. Let's start a project to fix that."

Originally that project was called "minimal python", or "ptn", later gradually renamed to PyPy. Here's the mailing list post to announce the project more formally:

    Minimal Python Discussion, Coding and Sprint
    --------------------------------------------

    We announce a mailinglist dedicated to developing a "Minimal Python" version. Minimal means that we want to have a very small C-core and as much as possible (re)implemented in python itself. This includes (parts of) the VM-Code.

Why would that kind of project be useful? Originally it wasn't necessarily meant to be useful as a real implementation at all, it was more meant as a kind of executable explanation of how Python works, free of the low level details of CPython. But pretty soon there were then also plans for how the virtual machine (VM) could be bootstrapped to be runnable without an existing Python implementation, but I'll get to that further down.

2003: Implementing the Interpreter

In early 2003 a group of Python people met in Hildesheim (Germany) for the first of many week long development sprints, organized by Holger Krekel. During that week a group of people showed up and started working on the core interpreter. In May 2003 a second sprint was organized by Laura Creighton and Jacob Halén in Gothenburg (Sweden). And already at that sprint enough of the Python bytecodes and data structures were implemented to make it possible to run a program that computed how much money everybody had to pay for the food bills of the week. And everybody who's tried that for a large group of people knows that that’s an amazingly complex mathematical problem.

In the next two years, the project continued as an open source project with various contributors working on it in their free time, and meeting for the occasional sprint. In that time, the rest of the core interpreter and the core data types were implemented.

There's not going to be any other code in this post, but to give a bit of a flavor of what the Python interpreter at that time looked like, here's the implementation of the DUP_TOP bytecode after these first sprints. As you can see, it's in Python, obviously, and it has high level constructs such as method calls to do the stack manipulations:

    def DUP_TOP(f):
        w_1 = f.valuestack.top()
        f.valuestack.push(w_1)

Here's the early code for integer addition:

    def int_int_add(space, w_int1, w_int2):
        x = w_int1.intval
        y = w_int2.intval
        try:
            z = x + y
        except OverflowError:
            raise FailedToImplement(space.w_OverflowError,
                                    space.wrap("integer addition"))
        return W_IntObject(space, z)

(the current implementations look slightly but not fundamentally different.)

Early organizational ideas

Some of the early organizational ideas of the project were as follows. Since the project was started on a sprint and people really liked that style of working PyPy continued to be developed on various subsequent sprints.

From early on there was a very heavy emphasis on testing. All the parts of the interpreter that were implemented had a very careful set of unit tests to make sure that they worked correctly. From early on, there was a continuous integration infrastructure, which grew over time (nowadays it is very natural for people to have automated tests, and the concept of green/red builds: but embracing this workflow in the early 2000s was not really mainstream yet, and it is probably one of the reasons behind PyPy's success).

At the sprints there was also an emphasis on doing pair programming to make sure that everybody understood the codebase equally. There was also a heavy emphasis on writing good code and on regularly doing refactorings to make sure that the codebase remained nice, clean and understandable. Those ideas followed from the early thoughts that PyPy would be a sort of readable explanation of the language.

There was also a pretty fundamental design decision made at the time. That was that the project should stay out of language design completely. Instead it would follow CPython's lead and behave exactly like that implementation in all cases. The project therefore committed to being almost quirk-to-quirk compatible and to implement even the more obscure (and partially unnecessary) corner cases of CPython.

All of these principles continue pretty much still today (There are a few places where we had to deviate from being completely compatible, they are documented here).

2004-2007: EU-Funding

While all this coding was going on it became clear pretty soon that the goals that various participants had for the project would be very hard to achieve with just open source volunteers working on the project in their spare time. Particularly also the sprints became expensive given that those were just volunteers doing this as a kind of weird hobby. Therefore a couple of people of the project got together to apply for an EU grant in the framework programme 6 to solve these money problems. In mid-2004 that application proved to be successful. And so the project got a grant of 1.3 million euros for two years to be able to employ some of the core developers and to make it possible for them to work on the project full time. The EU grant went to seven small-to-medium companies and Uni Düsseldorf. The budget also contained money to fund sprints, both for the employed core devs as well as other open source contributors.

The EU project started in December 2004 and that was a fairly heavy change in pace for the project. Suddenly a lot of people were working full time on it, and the pace and the pressure picked up quite a lot. Originally it had been a leisurely project people worked on for fun. But afterwards people discovered that doing this kind of work full time becomes slightly less fun, particularly also if you have to fulfill the ambitious technical goals that the EU proposal contained. And the proposal indeed contained a bit of everything to increase its chance of acceptance, such as aspect oriented programming, semantic web, logic programming, constraint programming, and so on. Unfortunately it turned out that those things then have to be implemented, which can be called the first thing we learned: if you promise something to the EU, you'll have to actually go do it (After the funding ended, a lot of these features were actually removed from the project again, at a cleanup sprint).

2005: Bootstrapping PyPy

So what were the actually useful things done as part of the EU project?

One of the most important goals that the EU project was meant to solve was the question of how to turn PyPy into an actually useful VM for Python. The bootstrapping plans were taken quite directly from Squeak, which is a Smalltalk VM written in a subset of Smalltalk called Slang, which can then be bootstrapped to C code. The plan for PyPy was to do something similar, to define a restricted subset of Python called RPython, restricted in such a way that it should be possible to statically compile RPython programs to C code. Then the Python interpreter should only use that subset, of course.

The main difference from the Squeak approach is that Slang, the subset of Squeak used there, is actually quite a low level language. In a way, you could almost describe it as C with Smalltalk syntax. RPython was really meant to be a much higher level language, much closer to Python, with full support for single inheritance classes, and most of Python's built-in data structures.

(BTW, you don’t have to understand any of the illustrations in this blog post, they are taken from talks and project reports we did over the years so they are of archaeological interest only and I don’t understand most of them myself.)

From 2005 on, work on the RPython type inference engine and C backend started in earnest, which was sort of co-developed with the RPython language definition and the PyPy Python interpreter. This is also roughly the time that I joined the project as a volunteer.

And at the second sprint I went to, in July 2005, two and a half years after the project got started, we managed to bootstrap the PyPy interpreter to C for the first time. When we ran the compiled program, it of course immediately segfaulted. The reason for that was that the C backend had turned characters into signed chars in C, while the rest of the infrastructure assumed that they were unsigned chars. After we fixed that, the second attempt worked and we managed to run an incredibly complex program, something like 6 * 7. That first bootstrapped version was really really slow, a couple of hundred times slower than CPython.
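The post does not say where exactly the signedness mismatch bit, so the following is only an illustration of the general problem, written in Python with the struct module standing in for the two C views of the same byte. The sample character and the in_char_range helper are made up for the example.

```python
import struct

raw = "é".encode("latin-1")  # a single byte, 0xE9

# The same byte read the way C would read an `unsigned char` and a `signed char`.
as_unsigned = struct.unpack("B", raw)[0]
as_signed = struct.unpack("b", raw)[0]


def in_char_range(code):
    """Hypothetical check made by code that assumes unsigned characters."""
    return 0 <= code <= 255


print(as_unsigned, in_char_range(as_unsigned))  # 233 True
print(as_signed, in_char_range(as_signed))      # -23 False
```

Any byte with the high bit set turns negative under the signed view, so code that uses character codes as indices or compares them against unsigned ranges misbehaves.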

The bootstrapping process of RPython has a number of nice benefits, a big one being that a number of the properties of the generated virtual machine don't have to be expressed in the interpreter. The biggest example of this is garbage collection. RPython is a garbage collected language, and the interpreter does not have to care much about GC in most cases. When the C source code is generated, a GC is automatically inserted. This is a source of great flexibility. Over time we experimented with a number of different GC approaches, from reference counting to Boehm to our current incremental generational collector. As an aside, for a long time we were also working on other backends to the RPython language and hoped to be able to target Java and .NET as well. Eventually we abandoned this strand of work, however.

RPython's Modularity Problems

Now we come to the first thing I would say we learned in the project, which is that the quality of tools we thought of as internal things still matters a lot. One of the biggest technical mistakes we've made in the project was that we designed RPython without any kind of story for modularity. There is no concept of modules in the language or any other way to break up programs into smaller components. We always thought that it would be ok for RPython to be a little bit crappy. It was meant to be this sort of internal language with not too many external users. And of course that turned out to be completely wrong later.

That lack of modularity led to various problems that persist until today. The biggest one is that there is no separate compilation for RPython programs at all! You always need to compile all the parts of your VM together, which leads to infamously bad compilation times.

Also by not considering the modularity question we were never forced to fix some internal structuring issues of the RPython compiler itself. Various layers of the compiler keep very badly defined and porous interfaces between them. This was made possible by being able to work with all the program information in one heap, making the compiler less approachable and maintainable than it maybe could be.

Of course this mistake just got more and more costly to fix over time, and so it means that so far nobody has actually done it. Not thinking more carefully about RPython's design, particularly its modularity story, is in my opinion the biggest technical mistake the project made.

2006: The Meta-JIT

After successfully bootstrapping the VM we did some fairly straightforward optimizations on the interpreter and the C backend and managed to reduce the slowdown versus CPython to something like 2-5 times slower. That's great! But of course not actually useful in practice. So where do we go from here?

One of the not so secret goals of Armin Rigo, one of the PyPy founders, was to use PyPy together with some advanced partial evaluation magic sauce to somehow automatically generate a JIT compiler from the interpreter. The goal was something like, "you write your interpreter in RPython, add a few annotations and then we give you a JIT for free for the language that that interpreter implements."

Where did the wish for that approach come from, why not just write a JIT for Python manually in the first place? Armin had actually done just that before he co-founded PyPy, in a project called Psyco. Psyco was an extension module for CPython that contained a method-based JIT compiler for Python code. And Psyco proved to be an amazingly frustrating compiler to write. There were two main reasons for that. The first reason was that Python is actually quite a complex language underneath its apparent simplicity. The second reason for the frustration was that Python was and is very much an alive language, that gains new features in the language core in every version. So every time a new Python version came out, Armin had to do fundamental changes and rewrites to Psyco, and he was getting pretty frustrated with it. So he hoped that that effort could be diminished by not writing the JIT for PyPy by hand at all. Instead, the goal was to generate a method-based JIT from the interpreter automatically. By taking the interpreter, and applying a kind of advanced transformation to it, that would turn it into a method-based JIT. And all that would still be translated into a C-based VM, of course.

Slide from Psyco presentation at EuroPython 2002

The First JIT Generator

From early 2006 on until the end of the EU project a lot of work went into writing such a JIT generator. The idea was to base it on runtime partial evaluation. Partial evaluation is an old idea in computer science. It's supposed to be a way to automatically turn interpreters for a language into a compiler for that same language. Since PyPy was trying to generate a JIT compiler, which is in any case necessary to get good performance for a dynamic language like Python, the partial evaluation was going to happen at runtime.

There are various ways to look at partial evaluation, but if you've never heard of it before, a simple way to view it is that it will compile a Python function by gluing together the implementations of the bytecodes of that function and optimizing the result.
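As a rough illustration of the "gluing together the implementations of the bytecodes" view, here is a toy sketch. It is not PyPy's actual machinery: the opcode names and the `interpret` and `specialize` functions are invented for this example, and the specializer emits Python source instead of machine code.

```python
# Toy stack-machine bytecode; the opcode names are invented for illustration.
PROGRAM = [("LOAD_ARG", 0), ("LOAD_CONST", 7), ("MUL", None), ("RETURN", None)]


def interpret(program, args):
    """Ordinary interpreter: dispatches on every opcode at run time."""
    stack = []
    for op, operand in program:
        if op == "LOAD_ARG":
            stack.append(args[operand])
        elif op == "LOAD_CONST":
            stack.append(operand)
        elif op == "MUL":
            right = stack.pop()
            left = stack.pop()
            stack.append(left * right)
        elif op == "RETURN":
            return stack.pop()
    raise ValueError("program ended without RETURN")


def specialize(program):
    """Glue the per-opcode implementations together for one fixed program.

    The stack is simulated at specialization time and holds expression
    strings, so the dispatch loop and the stack vanish from the output.
    """
    stack = []
    for op, operand in program:
        if op == "LOAD_ARG":
            stack.append("args[%d]" % operand)
        elif op == "LOAD_CONST":
            stack.append(repr(operand))
        elif op == "MUL":
            right = stack.pop()
            left = stack.pop()
            stack.append("(%s * %s)" % (left, right))
        elif op == "RETURN":
            source = "def compiled(args):\n    return %s\n" % stack.pop()
            namespace = {}
            exec(source, namespace)
            return namespace["compiled"], source
    raise ValueError("program ended without RETURN")


compiled, source = specialize(PROGRAM)
```

Calling `compiled([6])` gives the same answer as `interpret(PROGRAM, [6])`, but the generated function contains only the residual expression. A real partial evaluator derives this specializer from the interpreter automatically; here it is written by hand to keep the sketch short.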

The main new ideas of PyPy's partial-evaluation based JIT generator as opposed to earlier partial-evaluation approaches are the ideas of "promote" and the idea of "virtuals". Both of these techniques had already been present (in a slightly less general form) in Psyco, and the goal was to keep using them in PyPy. Both of these techniques also still remain in use today in PyPy. I'm going on a slight technical diversion now, to give a high level explanation of what those ideas are for.

Promote

One important ingredient of any JIT compiler is the ability to do runtime feedback. Runtime feedback is most commonly used to know something about which concrete types are used by a program in practice. Promote is basically a way to easily introduce runtime feedback into the JIT produced by the JIT generator. It's an annotation the implementer of a language can use to express their wish that specialization should happen at this point. This mechanism can be used to express all kinds of runtime feedback, moving values from the interpreter into the compiler, whether they be types or other things.
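The idea can be modelled in plain Python, under heavy simplification. In the sketch below, `PromotingCache`, `make_add` and `add` are hypothetical names made up for illustration, and a dictionary lookup stands in for the guard that a real JIT would emit as machine code.

```python
class PromotingCache:
    """Toy model of promotion: specialize code on a value seen at run time.

    `make_specialized` is a hypothetical callback standing in for the
    compiler; it receives the observed value as a constant and returns
    code for that case.
    """

    def __init__(self, make_specialized):
        self.make_specialized = make_specialized
        self.versions = {}      # observed value -> specialized function
        self.compilations = 0

    def run(self, promoted_value, *args):
        # Guard: is there already code specialized for this exact value?
        fast_path = self.versions.get(promoted_value)
        if fast_path is None:
            # Guard failure: feed the run-time value back to the "compiler".
            fast_path = self.make_specialized(promoted_value)
            self.versions[promoted_value] = fast_path
            self.compilations += 1
        return fast_path(*args)


def make_add(operand_type):
    # With the type known as a constant, dispatch is resolved only once.
    if operand_type is str:
        return lambda a, b: "".join((a, b))
    return lambda a, b: a + b


def add(cache, a, b):
    # Promote the type of `a`: this is where specialization should happen.
    return cache.run(type(a), a, b)


cache = PromotingCache(make_add)
results = [add(cache, 1, 2), add(cache, 3, 4), add(cache, "x", "y")]
```

The second integer addition reuses the version compiled for the first one, so only two specializations are created for the three calls.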

Virtuals

Virtuals are a very aggressive form of partial escape analysis. A dynamic language often puts a lot of pressure on the garbage collector, since most primitive types (like integers, floats and strings) are boxed in the heap, and new boxes are allocated all the time.

With the help of virtuals a very significant portion of all allocations in the generated machine code can be completely removed. Even if they can't be removed, often the allocation can be delayed or moved into an error path, or even into a deoptimization path, and thus disappear from the generated machine code completely.

This optimization really is the super-power of PyPy's optimizer, since it doesn't work only for primitive boxes but for any kind of object allocated on the heap with a predictable lifetime.
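To make the effect concrete, here is a small sketch of allocation removal on a straight-line list of operations. The operation names (`new`, `setfield`, `getfield`, `int_add`, `escape`) and the function `remove_virtuals` are assumptions chosen for this example and are much simpler than what PyPy's optimizer works on.

```python
def remove_virtuals(trace):
    """Drop allocations of objects that never escape the trace.

    Operations are tuples: ("new", var), ("setfield", obj, field, value),
    ("getfield", result, obj, field), ("int_add", result, a, b) and
    ("escape", obj). An object stays "virtual" until it escapes.
    """
    virtuals = {}    # variable -> {field: value} for delayed allocations
    known = {}       # variable -> variable it is known to be equal to
    optimized = []

    def resolve(var):
        return known.get(var, var)

    def force(var):
        # The object is needed for real: emit the delayed allocation.
        fields = virtuals.pop(var)
        optimized.append(("new", var))
        for field, value in fields.items():
            if value in virtuals:
                force(value)
            optimized.append(("setfield", var, field, value))

    for op in trace:
        kind = op[0]
        if kind == "new":
            virtuals[op[1]] = {}
        elif kind == "setfield":
            obj, field, value = resolve(op[1]), op[2], resolve(op[3])
            if obj in virtuals:
                virtuals[obj][field] = value
            else:
                if value in virtuals:
                    force(value)
                optimized.append(("setfield", obj, field, value))
        elif kind == "getfield":
            result, obj, field = op[1], resolve(op[2]), op[3]
            if obj in virtuals:
                known[result] = virtuals[obj][field]
            else:
                optimized.append(("getfield", result, obj, field))
        elif kind == "int_add":
            optimized.append(("int_add", op[1], resolve(op[2]), resolve(op[3])))
        elif kind == "escape":
            obj = resolve(op[1])
            if obj in virtuals:
                force(obj)
            optimized.append(("escape", obj))
        else:
            raise ValueError("unknown operation %r" % (op,))
    return optimized


# Boxed integer addition: box1 is only a temporary, box2 is the result.
TRACE = [
    ("new", "box1"),
    ("setfield", "box1", "intval", "i0"),
    ("getfield", "i1", "box1", "intval"),
    ("int_add", "i2", "i1", "i1"),
    ("new", "box2"),
    ("setfield", "box2", "intval", "i2"),
    ("escape", "box2"),
]
OPTIMIZED = remove_virtuals(TRACE)
```

In `OPTIMIZED` the temporary `box1` is gone entirely and the addition works on `i0` directly, while `box2` is still allocated because it escapes.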

As an aside, while this kind of partial escape analysis is sort of new for object-oriented languages, it has actually existed in Prolog-based partial evaluation systems since the 80s, because it's just extremely natural there.

JIT Status 2007

So, back to our history. We're now in 2007, at the end of the EU project (you can find the EU-reports we wrote during the projects here). The EU project successfully finished, we survived the final review with the EU. So, what's the 2007 status of the JIT generator? It works kind of, it can be applied to PyPy. It produces a VM with a JIT that will turn Python code into machine code at runtime and run it. However, that machine code is not particularly fast. Also, it tends to generate many megabytes of machine code even for small Python programs. While it's always faster than PyPy without JIT, it's only sometimes faster than CPython, and most of the time Psyco still beats it. On the one hand, this is still an amazing achievement! It's arguably the biggest application of partial evaluation at this point in time! On the other hand, it was still quite disappointing in practice, particularly since some of us had believed at the time that it should have been possible to reach and then surpass the speed of Psyco with this approach.

2007: RSqueak and other languages

After the EU project ended we did all kinds of things. Like sleep for a month for example, and have the cleanup sprint that I already mentioned. We also had a slightly unusual sprint in Bern, with members of the Software Composition Group of Oscar Nierstrasz. As I wrote above, PyPy had been heavily influenced by Squeak Smalltalk, and that group is a heavy user of Squeak, so we wanted to see how to collaborate with them. At the beginning of the sprint, we decided together that the goal of that week should be to try to write a Squeak virtual machine in RPython, and at the end of the week we'd gotten surprisingly far with that goal. Basically most of the bytecodes and the Smalltalk object system worked, we had written an image loader and could run some benchmarks (during the sprint we also regularly updated a blog, the success of which led us to start the PyPy blog).

The development of the Squeak interpreter was very interesting for the project, because it was the first real step that moved RPython from being an implementation detail of PyPy to be a more interesting project in its own right. Basically a language to write interpreters in, with the eventual promise to get a JIT for that language almost for free. That Squeak implementation is now called RSqueak ("Research Squeak").

I'll not go into more details about any of the other language implementations in RPython in this post, but over the years we've had a large variety of them done by various people and groups, most of them as research vehicles, but also some as real language implementations. Some very cool research results came out of these efforts, here's a slightly outdated list of some of them.

The use of RPython for other languages complicated the PyPy narrative a lot, and in a way we never managed to recover the simplicity of the original project description "PyPy is Python in Python". Because now it's something like "we have this somewhat strange language, a subset of Python, that's called RPython, and it's good to write interpreters in. And if you do that, we'll give you a JIT for almost free. And also, we used that language to write a Python implementation, called PyPy.". It just doesn't roll off the tongue as nicely.

2008-2009: Four More JIT Generators

Back to the JIT. After writing the first JIT generator as part of the EU project, with somewhat mixed results, we actually wrote several more JIT generator prototypes with different architectures to try to solve some of the problems of the first approach. To give an impression of these prototypes, here’s a list of them.

The second JIT generator we started working on in 2008 behaved exactly like the first one, but had a meta-interpreter based architecture, to make it more flexible and easier to experiment with. The meta-interpreter was called the "rainbow interpreter", and in general the JIT is an area where we went somewhat overboard with borderline silly terminology, with notable occurrences of "timeshifter", "blackhole interpreter" etc.

The third JIT generator was an experiment based on the second one which changed compilation strategy. While the previous two had compiled many control flow paths of the currently compiled function eagerly, that third JIT was sort of maximally lazy and stopped compilation at every control flow split to avoid guessing which path would actually be useful later when executing the code. This was an attempt to reduce the problem of the first JIT generating way too much machine code. Only later, when execution went down one of the not yet compiled paths would it continue compiling more code. This gives an effect similar to that of lazy basic block versioning.

The fourth JIT generator was a pretty strange prototype, a runtime partial evaluator for Prolog, to experiment with various specialization trade-offs. It had an approach that we gave a not at all humble name, called "perfect specialization".

The fifth JIT generator is the one that we are still using today. Instead of generating a method-based JIT compiler from our interpreter we switched to generating a tracing JIT compiler. Tracing JIT compilers were sort of the latest fashion at the time, at least for a little while.

2009: Meta-Tracing

So, how did that tracing JIT generator work? A tracing JIT generates code by observing and logging the execution of the running program. This yields a straight-line trace of operations, which are then optimized and compiled into machine code. Of course most tracing systems mostly focus on tracing loops.
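A minimal sketch of the logging step might look as follows. The register-style toy language and the `interpret` function are made up for this example, hot-loop detection is left out, and the recorded trace is only collected, not optimized or compiled.

```python
def interpret(program, env, trace=None):
    """Run a toy register program; if `trace` is a list, log what executes.

    Branches are logged as guards that record which way control went.
    Recording stops once a backward jump closes the loop, so the trace
    holds exactly one iteration as a straight-line sequence.
    """
    recording = trace is not None
    pc = 0
    while pc < len(program):
        op = program[pc]
        if op[0] == "add":
            _, dst, a, b = op
            env[dst] = env[a] + env[b]
            if recording:
                trace.append(("add", dst, a, b))
            pc += 1
        elif op[0] == "jump_if_lt":
            _, a, b, target = op
            taken = env[a] < env[b]
            if recording:
                trace.append(("guard_lt" if taken else "guard_ge", a, b))
                if taken and target <= pc:
                    recording = False   # loop closed, trace is complete
            pc = target if taken else pc + 1
        else:
            raise ValueError("unknown operation %r" % (op,))
    return env


LOOP = [
    ("add", "total", "total", "i"),   # total += i
    ("add", "i", "i", "one"),         # i += 1
    ("jump_if_lt", "i", "n", 0),      # repeat while i < n
]
trace = []
result = interpret(LOOP, {"total": 0, "i": 0, "one": 1, "n": 5}, trace)
```

The guard in the trace is what lets compiled code leave the fast path when a later iteration takes the other branch.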

As we discovered, it's actually quite simple to apply a tracing JIT to a generic interpreter, by not tracing the execution of the user program directly, but by instead tracing the execution of the interpreter while it is running the user program (here's the paper we wrote about this approach).

So that's what we implemented. Of course we kept the two successful parts of the first JIT, promote and virtuals (both links go to the papers about these features in the meta-tracing context).

Why did we Abandon Partial Evaluation?

So one question I get sometimes asked when telling this story is, why did we think that tracing would work better than partial evaluation (PE)? One of the hardest parts of compilers in general and partial evaluation based systems in particular is the decision when and how much to inline, how much to specialize, as well as the decision when to split control flow paths. In the PE based JIT generator we never managed to control that question. Either the JIT would inline too much, leading to useless compilation of all kinds of unlikely error cases. Or it wouldn't inline enough, preventing necessary optimizations.

Meta-tracing solves this problem with a hammer: it doesn't make particularly complex inlining decisions at all. It instead decides what to inline by precisely following what a real execution through the program is doing. Its inlining decisions are therefore very understandable and predictable, and it basically only has one heuristic, based on whether the called function contains a loop or not: if the called function contains a loop, we'll never inline it; if it doesn't, we always try to inline it. That predictability is actually what was the most helpful, since it makes it possible for interpreter authors to understand why the JIT did what it did and to actually influence its inlining decisions by changing the annotations in the interpreter source. It turns out that simple is better than complex.

2009-2011: The PyJIT Eurostars Project

While we were writing all these JIT prototypes, PyPy had sort of reverted back to being a volunteer-driven open source project (although some of us, like Antonio Cuni and I, had started working for universities and other project members had other sources of funding). But again, while we did the work it became clear that to get an actually working fast PyPy with generated JIT we would need actual funding again for the project. So we applied to the EU again, this time for a much smaller project with less money, in the Eurostars framework. We got a grant for three participants, merlinux, OpenEnd and Uni Düsseldorf, on the order of a bit more than half a million euro. That money was specifically for JIT development and JIT testing infrastructure.

Tracing JIT improvements

When writing the grant we had sat together at a sprint and discussed extensively and decided that we would not switch JIT generation approaches any more. We all liked the tracing approach well enough and thought it was promising. So instead we agreed to try in earnest to make the tracing JIT really practical. So in the Eurostars project we started with implementing sort of fairly standard JIT compiler optimizations for the meta-tracing JIT, such as:

constant folding

dead code elimination

better heap optimizations

faster deoptimization (which is actually a bit of a mess in the meta-approach)

and dealing more efficiently with Python frame objects and the features of Python's debugging facilities

2010: speed.pypy.org

In 2010, to make sure that we wouldn't accidentally introduce speed regressions while working on the JIT, we implemented infrastructure to build PyPy and run our benchmarks nightly. Then, the http://speed.pypy.org website was implemented by Miquel Torres, a volunteer. The website shows the changes in benchmark performance compared to the previous n days. It didn't sound too important at first, but this was (and is) a fantastic tool, and an amazing motivator over the next years, to keep continually improving performance.

Continuous Integration

This actually leads me to something else that I'd say we learned, which is that continuous integration is really awesome, and completely transformative to have for a project. This is not a particularly surprising insight nowadays in the open source community; it's easy to set up continuous integration on GitHub using Travis or some other CI service. But I still see a lot of research projects that don't have tests, that don't use CI, so I wanted to mention it anyway. As I mentioned earlier in the post, PyPy has a quite serious testing culture, with unit tests written for new code, regression tests for all bugs, and integration tests using the CPython test suite. Those tests are run nightly on a number of architectures and operating systems.

Having all this kind of careful testing is of course necessary, since PyPy is really trying to be a Python implementation that people actually use, not just write papers about. But having all this infrastructure also had other benefits, for example it allows us to trust newcomers to the project very quickly. Basically after your first patch gets accepted, you immediately get commit rights to the PyPy repository. If you screw up, the tests (or the code reviews) are probably going to catch it, and that reduction to the barrier to contributing is just super great.

This concludes my advertisement for testing in this post.

2010: Implementing Python Objects with Maps

So, what else did we do in the Eurostars project, apart from adding traditional compiler optimizations to the tracing JIT and setting up CI infrastructure? Another strand of work, which went on sort of concurrently to the JIT generator improvements, was a series of deep rewrites in the Python runtime and the Python data structures. I am going to write about two exemplary ones here.

The first such rewrite is fairly standard. Python instances are similar to JavaScript objects, in that you can add arbitrary attributes to them at runtime. Originally Python instances were backed by a dictionary in PyPy, but of course in practice most instances of the same class have the same set of attribute names. Therefore we went and implemented Self-style maps, which are often called hidden classes in the JS world, to represent instances instead. This has two big benefits: it allows you to generate much better machine code for instance attribute access, and it makes instances use a lot less memory.
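A minimal sketch of the idea, written in plain Python: the class names `Map` and `Instance` and their methods are chosen for this example and are not PyPy's actual implementation, which has more cases (attribute deletion, class changes and so on).

```python
class Map:
    """Shared layout: attribute name -> index into an instance's storage."""

    def __init__(self):
        self.indexes = {}
        self.transitions = {}   # attribute name -> Map with it added

    def with_attribute(self, name):
        # Instances that add the same attributes in the same order end up
        # sharing the same Map object.
        if name not in self.transitions:
            new_map = Map()
            new_map.indexes = dict(self.indexes)
            new_map.indexes[name] = len(self.indexes)
            self.transitions[name] = new_map
        return self.transitions[name]


EMPTY_MAP = Map()


class Instance:
    """An object that stores only its values; the names live in the map."""

    def __init__(self):
        self.map = EMPTY_MAP
        self.storage = []

    def write(self, name, value):
        index = self.map.indexes.get(name)
        if index is None:
            self.map = self.map.with_attribute(name)
            self.storage.append(value)
        else:
            self.storage[index] = value

    def read(self, name):
        index = self.map.indexes.get(name)
        if index is None:
            raise AttributeError(name)
        return self.storage[index]


p = Instance()
p.write("x", 1)
p.write("y", 2)
q = Instance()
q.write("x", 10)
q.write("y", 20)
```

Here `p` and `q` share one map, so each instance only pays for a flat list of values. A JIT that has checked the map once can turn `read("y")` into a load from a fixed index.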

2011: Container Storage Strategies

Another important change in the PyPy runtime was rewriting the Python container data structures, such as lists, dictionaries and sets. A fairly straightforward observation about how those are used is that in a significant percentage of cases they contain type-homogeneous data. As an example it's quite common to have lists of only integers, or lists of only strings. So we changed the list, dict and set implementations to use something we called storage strategies. With storage strategies these data structures use more efficient representations if they contain only primitives of the same type, such as ints, floats, strings. This makes it possible to store the values without boxing them in the underlying data structure. Therefore read and write access are much faster for such type-homogeneous containers. Of course when later another data type gets added to such a list, the existing elements need to all be boxed at that point, which is expensive. But we did a study and found out that that happens quite rarely in practice. A lot of that work was done by Lukas Diekmann.
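The switch between representations can be sketched like this. `StrategyList` is a made-up class for illustration, with only an integer strategy and a generic fallback, and it uses the standard library `array` module to stand in for unboxed storage.

```python
from array import array


class StrategyList:
    """Toy list with two storage strategies: unboxed ints or generic objects."""

    def __init__(self):
        self.strategy = "int"
        self.storage = array("q")   # unboxed signed 64-bit integers

    def append(self, value):
        if self.strategy == "int":
            if type(value) is int and -2**63 <= value < 2**63:
                self.storage.append(value)
                return
            # A value of another type arrives: box every existing element
            # once and switch to the generic strategy for good.
            self.storage = list(self.storage)
            self.strategy = "object"
        self.storage.append(value)

    def __getitem__(self, index):
        return self.storage[index]

    def __len__(self):
        return len(self.storage)


numbers = StrategyList()
for n in (1, 2, 3):
    numbers.append(n)
strategy_before = numbers.strategy
numbers.append("four")          # forces the expensive generalization
strategy_after = numbers.strategy
```

The generalization step is the expensive case mentioned above; as long as the list stays homogeneous, appends and reads never touch boxed objects in the storage.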

Deep Changes in the Runtime are Necessary

These two are just two examples for a number of fairly fundamental changes in the PyPy runtime and PyPy data structures, probably the two most important ones, but we did many others. That leads me to another thing we learned. If you want to generate good code for a complex dynamic language such as Python, it's actually not enough at all to have a good code generator and good compiler optimizations. That's not going to help you, if your runtime data-structures aren't in a shape where it's possible to generate efficient machine code to access them.

Maybe this is well known in the VM and research community. However it's the main mistake that in my opinion every other Python JIT effort has made in the last 10 years, where most projects said something along the lines of "we're not changing the existing CPython data structures at all, we'll just let LLVM inline enough C code of the runtime and then it will optimize all the overhead away". That never works very well.

JIT Status 2011

So, here we are at the end of the Eurostars project, what's the status of the JIT? Well, it seems this meta-tracing stuff really works! We finally started actually believing in it, when we reached the point in 2010 where self-hosting PyPy was actually faster than bootstrapping the VM on CPython. Speeding up the bootstrapping process is something that Psyco never managed at all, so we considered this a quite important achievement. At the end of Eurostars, we were about 4x faster than CPython on our set of benchmarks.

2012-2017: Engineering and Incremental Progress

In 2012 the Eurostars project was finished and PyPy reverted yet another time back to being an open source project. From then on, we've had a more diverse set of sources of funding: we received some crowd funding via the Software Freedom Conservancy and contracts of various sizes from companies to implement various specific features, often handled by Baroque Software. Over the next couple of years we revamped various parts of the VM. We improved the GC in major ways. We optimized the implementation of the JIT compiler to improve warm-up times. We implemented backends for various CPU architectures (including PowerPC and s390x). We tried to reduce the number of performance cliffs and make the JIT useful in a broader set of cases.

Another strand of work was to push quite significantly to be more compatible with CPython, particularly the Python 3 line as well as extension module support. Other compatibility improvements we made were making sure that virtualenv works with PyPy, better support for distutils and setuptools, and similar improvements. The continually improving performance as well as better compatibility with the ecosystem tools led to the first few users of PyPy in industry.

CPyExt

Another very important strand of work that took a lot of effort in recent years was CPyExt. One of the main blockers of PyPy adoption had always been the fact that a lot of people need specific C-extension modules at least in some parts of their program, and telling them to reimplement everything in Python is just not a practical solution. Therefore we worked on CPyExt, an emulation layer to make it possible to run CPython C-extension modules in PyPy. Doing that was a very painful process, since the CPython extension API leaks a lot of CPython implementation details, so we had to painstakingly emulate all of these details to make it possible to run extensions. That this works at all remains completely amazing to me! But nowadays CPyExt is even getting quite good, a lot of the big numerical libraries such as Numpy and Pandas are now supported (for a while we had worked hard on a reimplementation of Numpy called NumPyPy, but eventually realized that it would never be complete and useful enough). However, calling CPyExt modules from PyPy can still be very slow, which makes it impractical for some applications. That's why we are working on it.

Not thinking about C-extension module emulation earlier in the project history was a pretty bad strategic mistake. It had been clear for a long time that getting people to just stop using all their C-extension modules was never going to work, despite our efforts to give them alternatives, such as cffi. So we should have thought of a story for all the existing C-extension modules earlier in the project. Not starting CPyExt earlier was mostly a failure of our imagination (and maybe a too high pain threshold): We didn't believe this kind of emulation was going to be practical, until somebody went and tried it.

Python 3

Another main focus of the last couple of years has been to catch up with the CPython 3 line. Originally we had ignored Python 3 for a little bit too long, and were trailing several versions behind. In 2016 and 2017 we had a grant from the Mozilla open source support program of $200'000 to be able to catch up with Python 3.5. This work is now basically done, and we are starting to target CPython 3.6 and will have to look into 3.7 in the near future.

Incentives of OSS compared to Academia

So, what can be learned from those more recent years? One thing we can observe is that a lot of the engineering work we did in that time is not really science as such. A lot of the VM techniques we implemented are kind of well known, and catching up with new Python features is also not particularly deep researchy work. Of course this kind of work is obviously super necessary if you want people to use your VM, but it would be very hard to try to get research funding for it. PyPy managed quite well over its history to balance phases of more research oriented work, and more product oriented ones. But getting this balance somewhat right is not easy, and definitely also involves a lot of luck. And, as has been discussed a lot, it's actually very hard to find funding for open source work, both within and outside of academia.

Meta-Tracing really works!

Let me end with what, in my opinion, is the main positive technical result of PyPy the project, which is that the whole idea of using a meta-tracing JIT can really work! Currently PyPy is about 7 times faster than CPython on a broad set of benchmarks. Also, one of the very early motivations for using a meta-jitting approach in PyPy, which was to not have to adapt the JIT to new versions of CPython, proved to work: indeed we didn't have to change anything in the JIT infrastructure to support Python 3.

RPython has also worked and improved performance for a number of other languages. Some of these interpreters had wildly different architectures. AST-based interpreters, bytecode based, CPU emulators, really inefficient high-level ones that allocate continuation objects all the time, and so on. This shows that RPython also gives you a lot of freedom in deciding how you want to structure the interpreter and that it can be applied to languages of quite different paradigms.

I'll end with a list of the people that have contributed code to PyPy over its history, more than 350 of them. I'd like to thank all of them and the various roles they played. To the next 15 years!

Acknowledgements

A lot of people helped me with this blog post. Tim Felgentreff made me give the keynote, which led me to start collecting the material. Samuele Pedroni gave essential early input when I just started planning the talk, and also gave feedback on the blog post. Maciej Fijałkowski gave me feedback on the post, in particular important insight about the more recent years of the project. Armin Rigo discussed the talk slides with me, and provided details about the early expectations about the first JIT's hoped-for performance. Antonio Cuni gave substantial feedback and many very helpful suggestions for the blog post. Michael Hudson-Doyle also fixed a number of mistakes in the post and rightfully complained about the lack of mention of the GC. Christian Tismer provided access to his copy of early Python-de mailing list posts. Matti Picus pointed out a number of things I had forgotten and fixed a huge number of typos and awkward English, including my absolute inability to put commas correctly. All remaining errors are of course my own.

The No Title® Tech Blog: Count Files v1.4 – new features and finally available on PyPI!

The latest version of this file counting command-line utility has just been released. It is a platform-independent (pure Python) package that makes it easy to count files by extension. This version has some useful new features, it was tested in a wide range of environments and, for the first time, it is now available on PyPI, which means you can just pip install it like any other Python package.

Stefan Behnel: What CPython could use Cython for

There has been a recent discussion about using Cython for CPython development. I think this is a great opportunity for the CPython project to make more efficient use of its scarcest resource: developer time of its spare time contributors and maintainers.

The entry level for new contributors to the CPython project is often perceived to be quite high. While many tasks are actually beginner friendly, such as helping with the documentation or adding features to the Python modules in the stdlib, such important tasks as fixing bugs in the core interpreter, working on data structures, optimising language constructs, or improving the test coverage of the C-API require a solid understanding of C and the CPython C-API.

Since a large part of CPython is implemented in C, and since it exposes a large C-API to extensions and applications, C level testing is key to providing a correct and reliable native API. There were a couple of cases in the past years where new CPython releases actually broke certain parts of the C-API, and it was not noticed until people complained that their applications broke when trying out the new release. This is because the test coverage of the C-API is much lower than the well tested Python level and standard library tests of the runtime. And the main reason for this is that it is much more difficult to write tests in C than in Python, so people have a high incentive to get around it if they can. Since the C-API is used internally inside of the runtime, it is often assumed to be implicitly tested by the Python tests anyway, which raises the bar for an explicit C test even further. But this implicit coverage is not always given, and it also does not reduce the need for regression tests. Cython could help here by making it easier to write C level tests that integrate nicely with the existing Python unit test framework that the CPython project uses.

Basically, writing a C level test in Cython means writing a Python unittest function and then doing an explicit C operation in it that represents the actual test code. Here is an example for testing the PyList_Append C-API function:

    from cpython.object cimport PyObject
    from cpython.list cimport PyList_Append

    def test_PyList_Append_on_empty_list():
        # setup code
        l = []
        assert len(l) == 0
        value = "abc"
        pyobj_value = <PyObject*> value
        refcount_before = pyobj_value.ob_refcnt

        # conservative test call, translates to the expected C code,
        # although with automatic exception propagation if it returns -1:
        errcode = PyList_Append(l, value)

        # validation
        assert errcode == 0
        assert len(l) == 1
        assert l[0] is value
        assert pyobj_value.ob_refcnt == refcount_before + 1

In the Cython project itself, what we actually do is to write doctests. The functions and classes in a test module are compiled with Cython, and the doctests are then executed in Python, and call the Cython implementations. This provides a very nice and easy way to compare the results of Cython operations with those of Python, and also trivially supports data driven tests, by calling a function multiple times from a doctest, for example:

    from cpython.number cimport PyNumber_Add

    def test_PyNumber_Add(a, b):
        """
        >>> test_PyNumber_Add('abc', 'def')
        'abcdef'
        >>> test_PyNumber_Add('abc', '')
        'abc'
        >>> test_PyNumber_Add(2, 5)
        7
        >>> -2 + 5
        3
        >>> test_PyNumber_Add(-2, 5)
        3
        """
        # The following is equivalent to writing "return a + b" in Python or Cython.
        return PyNumber_Add(a, b)

This could even trivially be combined with hypothesis and other data driven testing tools.

But Cython's use cases are not limited to testing. Maintenance and feature development would probably benefit even more from a reduced entry level.

Many language optimisations are applied in the AST optimiser these days, and that is implemented in C. However, these tree operations can be fairly complex and are thus non-trivial to implement. Doing that in Python rather than C would be much easier to write and maintain, but since this code is a part of the Python compilation process, there's a chicken-and-egg problem here in addition to the performance problem. Cython could solve both problems and allow for more far-reaching optimisations by keeping the necessary transformation code readable.

Performance is also an issue in other parts of CPython, namely the standard library. Several stdlib modules are compute intensive. Many of them have two implementations: one in Python and a faster one in C, a so-called accelerator module. This means that adding a feature to these modules requires duplicate effort, the proficiency in both Python and C, and a solid understanding of the C-API, reference counting, garbage collection, and what not. On the other hand, many modules that could certainly benefit from native performance lack such an accelerator, e.g. difflib, textwrap, fractions, statistics, argparse, email, urllib.parse and many, many more. The asyncio module is becoming more and more important these days, but its native accelerator only covers a very small part of its large functionality, and it also does not expose a native API that performance hungry async tools could hook into. And even though the native accelerator of the ElementTree module is an almost complete replacement, the somewhat complex serialisation code is still implemented completely in Python, which shows in comparison to the native serialisation in lxml.

Compiling these modules with Cython would speed them up, probably quite visibly. For this use case, it is possible to keep the code entirely in Python, and just add enough type declarations to make it fast when compiled. The typing syntax from PEP 484 and PEP 526, available as of Python 3.6, makes this really easy and straightforward. A manually written accelerator module could thus be avoided, and with it a lot of duplicated functionality and maintenance overhead.
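As a rough illustration of that style, the following function is ordinary Python 3.6+ code with PEP 484 and PEP 526 annotations. It runs unchanged in the interpreter, and the same file could be handed to a compiler. The function itself (a Fletcher-16 checksum) is only an example chosen for this sketch, and how much speed-up plain int annotations give depends on the Cython version and settings; Cython also offers its own type names for declaring C types explicitly.

```python
def fletcher16(data: bytes) -> int:
    """Fletcher-16 checksum, written as plain annotated Python."""
    sum1: int = 0
    sum2: int = 0
    byte: int  # PEP 526 annotation for the loop variable
    for byte in data:
        sum1 = (sum1 + byte) % 255
        sum2 = (sum2 + sum1) % 255
    return (sum2 << 8) | sum1
```

For example, fletcher16(b"abcde") evaluates to 0xC8F0 whether the module is interpreted or compiled.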

Feature development would also be substantially simplified, especially for new contributors. Since Cython compiles Python code, it would allow people to contribute a Python implementation of a new feature that compiles down to C. And we all know that developing new functionality is much easier in Python than in C. The remaining task is then only to optimise it and not to rewrite it in a different language.

My feeling is that replacing some parts of the CPython C development with Cython has the potential to bring a visible boost for the contributions to the CPython project.

Podcast.__init__: Keep Your Code Clean Using pre-commit with Anthony Sottile

Summary

Maintaining the health and well-being of your software is a never-ending responsibility. Automating away as much of it as possible makes that challenge more achievable. In this episode Anthony Sottile describes his work on the pre-commit framework to simplify the process of writing and distributing functions to make sure that you only commit code that meets your definition of clean. He explains how it supports tools and repositories written in multiple languages, enforces team standards, and how you can start using it today to ship better software.

Preface

- Hello and welcome to Podcast.__init__, the podcast about Python and the people who make it great.

- When you’re ready to launch your next app you’ll need somewhere to deploy it, so check out Linode. With private networking, shared block storage, node balancers, and a 40Gbit network, all controlled by a brand new API you’ve got everything you need to scale up. Go to podcastinit.com/linode to get a $20 credit and launch a new server in under a minute.

- Visit the site to subscribe to the show, sign up for the newsletter, and read the show notes. And if you have any questions, comments, or suggestions I would love to hear them. You can reach me on Twitter at @Podcast__init__ or email hosts@podcastinit.com.

- To help other people find the show please leave a review on iTunes or Google Play Music, tell your friends and co-workers, and share it on social media.

- Join the community in the new Zulip chat workspace at podcastinit.com/chat

- Your host as usual is Tobias Macey and today I’m interviewing Anthony Sottile about pre-commit, a framework for managing and maintaining hooks for multiple languages

Interview

- Introductions

- How did you get introduced to Python?

- Can you start by describing what a pre-commit hook is and some of the ways that they are useful for developers?

- What was your motivation for creating a framework to manage your pre-commit hooks?

- How does it differ from other projects built to manage these hooks?

- What are the steps for getting someone started with pre-commit in a new project?

- Which other event hooks would be most useful to implement for maintaining the health of a repository?

- What types of operations are most useful for ensuring the health of a project?

- What types of routines should be avoided as a pre-commit step?

- Installing the hooks into a user’s local environment is a manual step, so how do you ensure that all of your developers are using the configured hooks?

- What factors have you found that lead to developers skipping or disabling hooks?

- How is pre-commit implemented and how has that design evolved from when you first started?

- What have been the most difficult aspects of supporting multiple languages and package managers?

- What would you do differently if you started over today?

- Would you still use Python?

- For someone who wants to write a plugin for pre-commit, what are the steps involved?

- What are some of the strangest or most unusual uses of pre-commit hooks that you have seen?

- What are your plans for the future of pre-commit?

Keep In Touch

- asottile on GitHub

- @codewithanthony on Twitter

- anthonywritescode on twitch

- anthonywritescode on YouTube

Picks

- Tobias

- Anthony

Links

The intro and outro music is from Requiem for a Fish by The Freak Fandango Orchestra / CC BY-SA

Nigel Babu: Moving from pyrax to libcloud: A story in 3 parts

Softserve is a service that lets our community loan machines to debug test failures. They create cloud VMs based on the image that we use for our test machines. We originally used pyrax to create and delete VMs. I spent some time trying to re-do that with libcloud.

Part 1: Writing libcloud code

I started by writing a simple script that created a VM with libcloud. Then, I modified it to do an SSHKeyDeployment, and further re-wrote that code to work with MultiStepDeployment with two keys. Once I got that working, all I had left was to delete the server. All went well, I plugged in the code and pushed it. Have you seen the bug yet?

Part 2: Deepshikha tries to deploy it

Because I’m an idiot, I didn’t test my code with our application. Our tests don’t actually go and create a cloud server. We ran into bugs. We rolled back and I went about fixing them. The first one we ran into was installing dependencies. Turns out that the process of installing dependencies for libcloud was slightly more complicated for some reason (more on that later!). We needed to pull in a few new devel packages. I sat down and actually fixed all bugs I could trigger. Turns out, there were plenty.

Part 3: I find bugs

Now I ran into subtle installation bugs. Pip would throw up some weird error. The default Python on CentOS 7 is pretty old. I upgraded pip and setuptools inside my virtualenv to see if that would solve the pip errors and it did. I suspect some newer packages depend on newer setuptools and pip features, and installation fails quite badly when those tools are older.

After that, I ran into a bug that was incredibly satisfying to debug. The logs had a traceback that said I wasn’t passing a string as pubkey. I couldn’t reproduce that bug locally. On my local setup, the type was str, so I had to debug on that server with a few print statements. It turns out that the variable had type unicode. Well, that’s weird. I don’t know why that’s happening – unicode sounds right, something is broken on my local setup.

A check for “strings” in python2 should check for str and unicode. The code does the following check which returns False when pubkey is a unicode:

isinstance(pubkey, basestring)

At first glance, that looked right. On python2, str and unicode are subclasses of basestring. A bit of sleuthing later, I discovered that libcloud has their own overridden basestring. With it, a unicode object is not considered an instance of basestring. I found this definition of basestring for python2:

basestring = unicode = str

That doesn’t work as I expect on Python 2. How did this ever work? Is it that almost everyone passes strings and never runs into this bug? I have a bug filed. When I figure out how to fix this correctly, I’ll send a patch. My first instinct is to replace it with a line that just says

basestring

In python2, that should Just Work™. Link to code in case anyone is curious. One part of me is screaming to replace all of this with a library like six that will handle edge cases way better.
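Since the bug only shows up on Python 2, here is a small model of it that runs on Python 3. The classes Py2BaseString, Py2Str and Py2Unicode are stand-ins invented for this sketch, not the real builtins, and the string_types tuple at the end is only an assumption about what a six-style fix could look like, not libcloud's actual code.

```python
import sys


# Toy model of the Python 2 string hierarchy (stand-ins, not real builtins).
class Py2BaseString:
    """Models Python 2's abstract basestring."""


class Py2Str(Py2BaseString):
    """Models Python 2's byte-oriented str."""


class Py2Unicode(Py2BaseString):
    """Models Python 2's unicode."""


pubkey = Py2Unicode()  # what the server ended up passing in

# With the builtin hierarchy, a unicode value passes the check.
builtin_check = isinstance(pubkey, Py2BaseString)

# Model of "basestring = unicode = str": both names now point at str,
# so a real unicode object no longer matches.
shadowed_basestring = shadowed_unicode = Py2Str
shadowed_check = isinstance(pubkey, shadowed_basestring)

# A six-style alternative: pick the right tuple of types once, per version.
if sys.version_info[0] >= 3:
    string_types = (str,)
else:
    string_types = (basestring,)  # noqa: F821 -- builtin on Python 2 only


def is_string(value):
    """Return True for text-like values on either Python version."""
    return isinstance(value, string_types)
```

In this model builtin_check is True and shadowed_check is False, which matches what the traceback on the server was complaining about.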

Mike Driscoll: PyDev of the Week: Oliver Bestwalter

This week we welcome Oliver Bestwalter (@obestwalter) as our PyDev of the Week! He is one of the core developers of the tox automation project and the pytest package. He is also a speaker at several Python-related conferences. You can learn more about Oliver on his website or on Github. Let’s take a few moments to learn more about Oliver!

Can you tell us a little about yourself?

I was born in West Germany on Star Wars day the year the last man set foot on the moon.

I took on my first job as a Software Developer when I was 39, right after earning my B.Sc. in Computer Engineering (in German: Technische Informatik).

Although I fell in love with computering in my early teens and with the idea of free software in my early twenties, back then I was more into music, literature and sports. In school I was led to believe that I was “not good at maths”, so studying Computer Science or anything technical was not an option. Composing and playing music dominated my teens and twenties. I played several instruments (bass, guitar and keyboards – mainly self-taught) alone, and in different bands. A few recordings from that time are online on Soundcloud.

When I was 28 I had a nasty skateboarding accident and broke my hand and elbow. This rendered me incapable of playing any instrument for over a year. I didn’t cope with that very well and descended into a deep crisis that led me to drop music and my whole social life which had revolved around it. At that point I was pretty isolated without any kind of formal education and honestly didn’t know what to do with my life. I took on a soul-crushingly boring job in the backend (read: loud and dirty part) of a semiconductor fabrication plant. Life wasn’t that great, but in my spare time I picked up my other passion again (computering) and used it to co-found and nurture a web based, not-for-profit support board. My co-founder happened to be a wonderful woman who later became my wife. So you never know what something is good for, I guess.

I tend to turn my hobbies into my job as I did with music back then and with computering now. Good food & drink has occasionally passed my lips, which might be called a hobby, when it is not purely imbibed for sustenance. Enjoying modern art, mostly in the form of films, books, and (very seldom nowadays) computer games, is also part of my recreational activities. I am more an indoor enthusiast, but I also might go for a walk now and then. Being more on the introvert spectrum, I need a lot of alone time to recharge, but I also like to hang out with my family and my cat (it might be more appropriate to say that the cat sometimes likes to hang out with us, because she adopted us and definitely is the one calling the shots).

Why did you start using Python?

TL;DR: I don’t really know, but I certainly don’t regret it.

One fine day in 2006 as I travelled through the interwebs, I serendipitously stumbled over the website of a programming language named after my favourite comedy group. I pretty much inhaled the great Python tutorial in one go. I still remember that vividly – it was love at first sight. Those inbuilt data structures! The simplicity! The clarity of the syntax! No curly braces soup! Next I read a hard-copy of the Python 2 incarnation of Dive into Python by Mark Pilgrim and I was completely captivated by that beautiful language and its possibilities. Thank you Guido and Mark!

While still working in that menial job in the factory without any prospects there, learning that language well and figuring out what to do with it became my goal. I started dreaming of being a professional developer creating software using Python. The problem was that Python was far from having hit the mainstream back then and Python jobs weren’t something very common at all. I was also in my mid-thirties already and still pretty much convinced that not being “good at maths” would make this an impossible dream anyway. I somehow had made peace with the idea that I would toil away in one McJob after the other for the rest of my life. My wife though wouldn’t have any of this nonsense and said I wouldn’t know if I didn’t try. She was prepared to support us financially during my studies and I didn’t have any excuse anymore (I told you she is wonderful). I decided to get a proper formal education and we moved to the North, where life is cheap and I enrolled in a small university of applied sciences. The first exam I took was maths. I achieved the second best score in that year … “not good at maths” … Ha! Having been out of school for 15 years, I had to catch up a lot, but I put the work in and earned my degree as one of the best in my year. My first job in tech, which I still hold today at Avira, consists mainly of creating software in Python with a growing amount of time spent mentoring and teaching (especially around test and build automation). So don’t listen to the naysayers or the pessimistic voices in your head! Follow your dreams, kids!

What other programming languages do you know and which is your favorite?