Pandas is a foundational library for analytics, data processing, and data science. It’s a huge project with tons of optionality and depth.

This tutorial will cover some lesser-used but idiomatic Pandas capabilities that lend your code better readability, versatility, and speed, à la the Buzzfeed listicle.

If you feel comfortable with the core concepts of Python’s Pandas library, hopefully you’ll find a trick or two in this article that you haven’t stumbled across previously. (If you’re just starting out with the library, 10 Minutes to Pandas is a good place to start.)

Note: The examples in this article are tested with Pandas version 0.23.2 and Python 3.6.6. However, they should also be valid in older versions.

1. Configure Options and Settings at Interpreter Startup

You may have run across Pandas’ rich options and settings system before.

It’s a huge productivity saver to set customized Pandas options at interpreter startup, especially if you work in a scripting environment. You can use pd.set_option() to configure to your heart’s content with a Python or IPython startup file.

The options use a dot notation such as pd.set_option('display.max_colwidth', 25), which lends itself well to a nested dictionary of options:

    import pandas as pd

    def start():
        options = {
            'display': {
                'max_columns': None,
                'max_colwidth': 25,
                'expand_frame_repr': False,  # Don't wrap to multiple pages
                'max_rows': 14,
                'max_seq_items': 50,         # Max length of printed sequence
                'precision': 4,
                'show_dimensions': False
            },
            'mode': {
                'chained_assignment': None   # Controls SettingWithCopyWarning
            }
        }

        for category, option in options.items():
            for op, value in option.items():
                pd.set_option(f'{category}.{op}', value)  # Python 3.6+

    if __name__ == '__main__':
        start()
        del start  # Clean up namespace in the interpreter

If you launch an interpreter session, you’ll see that everything in the startup script has been executed, and Pandas is imported for you automatically with your suite of options:

    >>> pd.__name__
    'pandas'
    >>> pd.get_option('display.max_rows')
    14

Let’s use some data on abalone hosted by the UCI Machine Learning Repository to demonstrate the formatting that was set in the startup file. The data will truncate at 14 rows with 4 digits of precision for floats:

    >>> url = ('https://archive.ics.uci.edu/ml/'
    ...        'machine-learning-databases/abalone/abalone.data')
    >>> cols = ['sex', 'length', 'diam', 'height', 'weight', 'rings']
    >>> abalone = pd.read_csv(url, usecols=[0, 1, 2, 3, 4, 8], names=cols)

    >>> abalone
         sex  length   diam  height  weight  rings
    0      M   0.455  0.365   0.095  0.5140     15
    1      M   0.350  0.265   0.090  0.2255      7
    2      F   0.530  0.420   0.135  0.6770      9
    3      M   0.440  0.365   0.125  0.5160     10
    4      I   0.330  0.255   0.080  0.2050      7
    5      I   0.425  0.300   0.095  0.3515      8
    6      F   0.530  0.415   0.150  0.7775     20
    ...   ..     ...    ...     ...     ...    ...
    4170   M   0.550  0.430   0.130  0.8395     10
    4171   M   0.560  0.430   0.155  0.8675      8
    4172   F   0.565  0.450   0.165  0.8870     11
    4173   M   0.590  0.440   0.135  0.9660     10
    4174   M   0.600  0.475   0.205  1.1760      9
    4175   F   0.625  0.485   0.150  1.0945     10
    4176   M   0.710  0.555   0.195  1.9485     12

You’ll see this dataset pop up in other examples later as well.

2. Make Toy Data Structures With Pandas’ Testing Module

Hidden way down in Pandas’ testing module are a number of convenient functions for quickly building quasi-realistic Series and DataFrames:

    >>> import pandas.util.testing as tm
    >>> tm.N, tm.K = 15, 3  # Module-level default rows/columns

    >>> import numpy as np
    >>> np.random.seed(444)

    >>> tm.makeTimeDataFrame(freq='M').head()
                     A       B       C
    2000-01-31  0.3574 -0.8804  0.2669
    2000-02-29  0.3775  0.1526 -0.4803
    2000-03-31  1.3823  0.2503  0.3008
    2000-04-30  1.1755  0.0785 -0.1791
    2000-05-31 -0.9393 -0.9039  1.1837

    >>> tm.makeDataFrame().head()
                     A       B       C
    nTLGGTiRHF -0.6228  0.6459  0.1251
    WPBRn9jtsR -0.3187 -0.8091  1.1501
    7B3wWfvuDA -1.9872 -1.0795  0.2987
    yJ0BTjehH1  0.8802  0.7403 -1.2154
    0luaYUYvy1 -0.9320  1.2912 -0.2907

There are around 30 of these, and you can see the full list by calling dir() on the module object. Here are a few:

    >>> [i for i in dir(tm) if i.startswith('make')]
    ['makeBoolIndex',
     'makeCategoricalIndex',
     'makeCustomDataframe',
     'makeCustomIndex',
     # ...,
     'makeTimeSeries',
     'makeTimedeltaIndex',
     'makeUIntIndex',
     'makeUnicodeIndex']

These can be useful for benchmarking, testing assertions, and experimenting with Pandas methods that you are less familiar with.
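If you would rather not depend on a testing module, a similar toy frame can be assembled by hand. The sketch below is only an approximation: make_toy_time_frame() is a hypothetical helper, not a Pandas function, and it uses a daily frequency and a fixed seed as assumptions.

```python
import numpy as np
import pandas as pd


def make_toy_time_frame(nrows=15, ncols=3, seed=444):
    """Hypothetical stand-in for a toy time-indexed DataFrame (not a Pandas API)."""
    rng = np.random.RandomState(seed)  # Local RNG, so global state is untouched
    index = pd.date_range('2000-01-01', periods=nrows, freq='D')
    columns = list('ABCDEFGHIJ'[:ncols])  # Supports up to 10 columns
    return pd.DataFrame(rng.randn(nrows, ncols), index=index, columns=columns)


toy = make_toy_time_frame()
print(toy.head())
```

Because the random generator is local to the function, calling it twice with the same seed gives the same frame, which is handy for repeatable experiments.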

3. Take Advantage of Accessor Methods

Perhaps you’ve heard of the term accessor, which is somewhat like a getter (although getters and setters are used infrequently in Python). For our purposes here, you can think of a Pandas accessor as a property that serves as an interface to additional methods.

Pandas Series have three of them:

    >>> pd.Series._accessors
    {'cat', 'str', 'dt'}

Yes, that definition above is a mouthful, so let’s take a look at a few examples before discussing the internals.

.cat is for categorical data, .str is for string (object) data, and .dt is for datetime-like data. Let’s start off with .str: imagine that you have some raw city/state/ZIP data as a single field within a Pandas Series.

Pandas string methods are vectorized, meaning that they operate on the entire array without an explicit for-loop:

    >>> addr = pd.Series([
    ...     'Washington, D.C. 20003',
    ...     'Brooklyn, NY 11211-1755',
    ...     'Omaha, NE 68154',
    ...     'Pittsburgh, PA 15211'
    ... ])

    >>> addr.str.upper()
    0     WASHINGTON, D.C. 20003
    1    BROOKLYN, NY 11211-1755
    2            OMAHA, NE 68154
    3       PITTSBURGH, PA 15211
    dtype: object

    >>> addr.str.count(r'\d')  # 5 or 9-digit zip?
    0    5
    1    9
    2    5
    3    5
    dtype: int64

For a more involved example, let’s say that you want to separate out the three city/state/ZIP components neatly into DataFrame fields.

You can pass a regular expression to .str.extract() to “extract” parts of each cell in the Series. In .str.extract(), .str is the accessor, and .str.extract() is an accessor method:

    >>> regex = (r'(?P<city>[A-Za-z ]+), '      # One or more letters
    ...          r'(?P<state>[A-Z]{2}) '        # 2 capital letters
    ...          r'(?P<zip>\d{5}(?:-\d{4})?)')  # Optional 4-digit extension
    ...
    >>> addr.str.replace('.', '', regex=False).str.extract(regex)
             city state         zip
    0  Washington    DC       20003
    1    Brooklyn    NY  11211-1755
    2       Omaha    NE       68154
    3  Pittsburgh    PA       15211

This also illustrates what is known as method-chaining, where .str.extract(regex) is called on the result of addr.str.replace('.', '', regex=False), which strips the periods to get a nice 2-character state abbreviation. Passing regex=False makes it explicit that '.' is a literal period rather than the regular-expression wildcard.

It’s helpful to know a tiny bit about how these accessor methods work as a motivating reason for why you should use them in the first place, rather than something like addr.apply(re.findall, ...).
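To make the comparison concrete, here is a small sketch that pulls ZIP codes out of a few addresses both ways. The pattern and the sample Series are illustrative assumptions. The second half hints at one practical difference: the .str accessor passes missing values through, while a bare re.findall() inside .apply() chokes on them.

```python
import re

import pandas as pd

addr = pd.Series([
    'Washington, D.C. 20003',
    'Brooklyn, NY 11211-1755',
    'Omaha, NE 68154',
])
pattern = r'\d{5}(?:-\d{4})?'  # 5-digit ZIP with optional 4-digit extension

# Same result either way when every cell is a string
via_accessor = addr.str.findall(pattern)
via_apply = addr.apply(lambda cell: re.findall(pattern, cell))
print(via_accessor.tolist())

# With a missing value, the accessor yields a missing result...
with_gap = pd.Series(['Omaha, NE 68154', None])
gap_mask = with_gap.str.findall(pattern).isna().tolist()

# ...whereas re.findall() raises because it only accepts strings
try:
    with_gap.apply(lambda cell: re.findall(pattern, cell))
    apply_failed = False
except TypeError:
    apply_failed = True
print(gap_mask, apply_failed)
```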

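Each accessor is itself a bona fide Python class: in the Pandas version used here, .str maps to StringMethods, .dt maps to CombinedDatetimelikeProperties, and .cat routes to CategoricalAccessor.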

These standalone classes are then “attached” to the Series class using a CachedAccessor. It is when the classes are wrapped in CachedAccessor that a bit of magic happens.

CachedAccessor is inspired by a “cached property” design: a property is only computed once per instance and then replaced by an ordinary attribute. It does this by overloading the .__get__() method, which is part of Python’s descriptor protocol.
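The cached-property idea can be sketched in a few lines of plain Python. This is a simplified illustration of the design, not Pandas’ actual CachedAccessor code; the CachedProperty and Report names are hypothetical.

```python
class CachedProperty:
    """Simplified cached-property descriptor (illustrative, not Pandas' code)."""

    def __init__(self, func):
        self._func = func
        self._name = func.__name__

    def __get__(self, obj, cls=None):
        if obj is None:
            # Accessed on the class itself, e.g. Report.total
            return self
        value = self._func(obj)
        # Store the result on the instance. Because this descriptor defines no
        # __set__, the instance attribute shadows it on every later lookup.
        obj.__dict__[self._name] = value
        return value


class Report:
    def __init__(self, values):
        self.values = values
        self.calls = 0  # Counts how often the expensive part actually runs

    @CachedProperty
    def total(self):
        self.calls += 1
        return sum(self.values)


report = Report([1, 2, 3])
first = report.total   # Computed via __get__
second = report.total  # Served straight from report.__dict__
print(first, second, report.calls)  # 6 6 1
```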

The second accessor, .dt, is for datetime-like data. It technically belongs to Pandas’ DatetimeIndex, and if called on a Series, it is converted to a DatetimeIndex first:

    >>> daterng = pd.Series(pd.date_range('2017', periods=9, freq='Q'))
    >>> daterng
    0   2017-03-31
    1   2017-06-30
    2   2017-09-30
    3   2017-12-31
    4   2018-03-31
    5   2018-06-30
    6   2018-09-30
    7   2018-12-31
    8   2019-03-31
    dtype: datetime64[ns]

    >>> daterng.dt.day_name()
    0      Friday
    1      Friday
    2    Saturday
    3      Sunday
    4    Saturday
    5    Saturday
    6      Sunday
    7      Monday
    8      Sunday
    dtype: object

    >>> # Second-half of year only
    >>> daterng[daterng.dt.quarter > 2]
    2   2017-09-30
    3   2017-12-31
    6   2018-09-30
    7   2018-12-31
    dtype: datetime64[ns]

    >>> daterng[daterng.dt.is_year_end]
    3   2017-12-31
    7   2018-12-31
    dtype: datetime64[ns]

The third accessor, .cat, is for Categorical data only, which you’ll see shortly in its own section.

4. Create a DatetimeIndex From Component Columns

Speaking of datetime-like data, as in daterng above, it’s possible to create a Pandas DatetimeIndex from multiple component columns that together form a date or datetime:

    >>> from itertools import product
    >>> datecols = ['year', 'month', 'day']

    >>> df = pd.DataFrame(list(product([2017, 2016], [1, 2], [1, 2, 3])),
    ...                   columns=datecols)
    >>> df['data'] = np.random.randn(len(df))
    >>> df
        year  month  day    data
    0   2017      1    1 -0.0767
    1   2017      1    2 -1.2798
    2   2017      1    3  0.4032
    3   2017      2    1  1.2377
    4   2017      2    2 -0.2060
    5   2017      2    3  0.6187
    6   2016      1    1  2.3786
    7   2016      1    2 -0.4730
    8   2016      1    3 -2.1505
    9   2016      2    1 -0.6340
    10  2016      2    2  0.7964
    11  2016      2    3  0.0005

    >>> df.index = pd.to_datetime(df[datecols])
    >>> df.head()
                year  month  day    data
    2017-01-01  2017      1    1 -0.0767
    2017-01-02  2017      1    2 -1.2798
    2017-01-03  2017      1    3  0.4032
    2017-02-01  2017      2    1  1.2377
    2017-02-02  2017      2    2 -0.2060

Finally, you can drop the old individual columns and convert to a Series:

    >>> df = df.drop(datecols, axis=1).squeeze()
    >>> df.head()
    2017-01-01   -0.0767
    2017-01-02   -1.2798
    2017-01-03    0.4032
    2017-02-01    1.2377
    2017-02-02   -0.2060
    Name: data, dtype: float64

    >>> df.index.dtype_str
    'datetime64[ns]'

The intuition behind passing a DataFrame is that a DataFrame resembles a Python dictionary where the column names are keys, and the individual columns (Series) are the dictionary values. That’s why pd.to_datetime(df[datecols].to_dict(orient='list')) would also work in this case. This mirrors the construction of Python’s datetime.datetime, where you pass keyword arguments such as datetime.datetime(year=2000, month=1, day=15, hour=10).
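Here is a minimal sketch of that equivalence, using a small made-up dictionary of date parts. It checks that the dictionary route and the DataFrame route agree, and that the first result matches the keyword-style datetime.datetime() call.

```python
import datetime

import pandas as pd

# Illustrative date components; keys play the role of keyword arguments
parts = {'year': [2017, 2016], 'month': [1, 2], 'day': [3, 1]}

from_dict = pd.to_datetime(parts)                 # Plain dictionary of lists
from_frame = pd.to_datetime(pd.DataFrame(parts))  # Same data as a DataFrame

# The standard-library analogue for the first row
first_row = datetime.datetime(year=2017, month=1, day=3)

print(from_dict.tolist())
print(from_dict.equals(from_frame), from_dict.iloc[0] == first_row)
```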

5. Use Categorical Data to Save on Time and Space

One powerful Pandas feature is its Categorical dtype.

Even if you’re not always working with gigabytes of data in RAM, you’ve probably run into cases where straightforward operations on a large DataFrame seem to hang up for more than a few seconds.

Pandas object dtype is often a great candidate for conversion to category data. (object is a container for Python str, heterogeneous data types, or “other” types.) Strings occupy a significant amount of space in memory:

    >>> colors = pd.Series([
    ...     'periwinkle',
    ...     'mint green',
    ...     'burnt orange',
    ...     'periwinkle',
    ...     'burnt orange',
    ...     'rose',
    ...     'rose',
    ...     'mint green',
    ...     'rose',
    ...     'navy'
    ... ])
    ...
    >>> import sys
    >>> colors.apply(sys.getsizeof)
    0    59
    1    59
    2    61
    3    59
    4    61
    5    53
    6    53
    7    59
    8    53
    9    53
    dtype: int64

Note: I used sys.getsizeof() to show the memory occupied by each individual value in the Series. Keep in mind these are Python objects that have some overhead in the first place. (sys.getsizeof('') will return 49 bytes.)

There is also colors.memory_usage(), which sums up the memory usage and relies on the .nbytes attribute of the underlying NumPy array. Don’t get too bogged down in these details: what is important is relative memory usage that results from type conversion, as you’ll see next.

Now, what if we could take the unique colors above and map each to a less space-hogging integer? Here is a naive implementation of that:

    >>> mapper = {v: k for k, v in enumerate(colors.unique())}
    >>> mapper
    {'periwinkle': 0, 'mint green': 1, 'burnt orange': 2, 'rose': 3, 'navy': 4}

    >>> as_int = colors.map(mapper)
    >>> as_int
    0    0
    1    1
    2    2
    3    0
    4    2
    5    3
    6    3
    7    1
    8    3
    9    4
    dtype: int64

    >>> as_int.apply(sys.getsizeof)
    0    24
    1    28
    2    28
    3    24
    4    28
    5    28
    6    28
    7    28
    8    28
    9    28
    dtype: int64

Note: Another way to do this same thing is with Pandas’ pd.factorize(colors):

    >>> pd.factorize(colors)[0]
    array([0, 1, 2, 0, 2, 3, 3, 1, 3, 4])

Either way, you are encoding the object as an enumerated type (categorical variable).

You’ll notice immediately that memory usage is just about cut in half compared to when the full strings are used with object dtype.

Earlier in the section on accessors, I mentioned the .cat (categorical) accessor. The above with mapper is a rough illustration of what is happening internally with Pandas’ Categorical dtype:

“The memory usage of a Categorical is proportional to the number of categories plus the length of the data. In contrast, an object dtype is a constant times the length of the data.” (Source)

In colors above, you have a ratio of 2 values for every unique value (category):

    >>> len(colors) / colors.nunique()
    2.0

As a result, the memory savings from converting to Categorical is good, but not great:

    >>> # Not a huge space-saver to encode as Categorical
    >>> colors.memory_usage(index=False, deep=True)
    650
    >>> colors.astype('category').memory_usage(index=False, deep=True)
    495

However, if you blow out the proportion above, with a lot of data and few unique values (think about data on demographics or alphabetic test scores), the reduction in memory required is over 10 times:

    >>> manycolors = colors.repeat(10)
    >>> len(manycolors) / manycolors.nunique()  # Much greater than 2.0x
    20.0

    >>> manycolors.memory_usage(index=False, deep=True)
    6500
    >>> manycolors.astype('category').memory_usage(index=False, deep=True)
    585

A bonus is that computational efficiency gets a boost too: for categorical Series, the string operations are performed on the .cat.categories attribute rather than on each original element of the Series.

In other words, the operation is done once per unique category, and the results are mapped back to the values. Categorical data has a .cat accessor that is a window into attributes and methods for manipulating the categories:

    >>> ccolors = colors.astype('category')
    >>> ccolors.cat.categories
    Index(['burnt orange', 'mint green', 'navy', 'periwinkle', 'rose'], dtype='object')

In fact, you can reproduce something similar to the example above that you did manually:

    >>> ccolors.cat.codes
    0    3
    1    1
    2    0
    3    3
    4    0
    5    4
    6    4
    7    1
    8    4
    9    2
    dtype: int8

All that you need to do to exactly mimic the earlier manual output is to reorder the codes:

    >>> ccolors.cat.reorder_categories(mapper).cat.codes
    0    0
    1    1
    2    2
    3    0
    4    2
    5    3
    6    3
    7    1
    8    3
    9    4
    dtype: int8

Notice that the dtype is NumPy’s int8, an 8-bit signed integer that can take on values from -128 to 127. (Only a single byte is needed to represent a value in memory. 64-bit signed ints would be overkill in terms of memory usage.) Our rough-hewn example resulted in int64 data by default, whereas Pandas is smart enough to downcast categorical data to the smallest numerical dtype possible.
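You can check the limits of a signed 8-bit integer with NumPy, and watch the codes dtype widen once there are too many categories for one byte. The two toy Series below are illustrative assumptions.

```python
import numpy as np
import pandas as pd

# Limits of a signed 8-bit integer
info = np.iinfo(np.int8)
print(info.min, info.max)  # -128 127

# A handful of categories fits comfortably in int8 codes
few = pd.Series(list('abc')).astype('category')

# 200 distinct categories no longer fit, so a wider integer type is used
many = pd.Series([str(i) for i in range(200)]).astype('category')

print(few.cat.codes.dtype, many.cat.codes.dtype)
```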

Most of the attributes for .cat are related to viewing and manipulating the underlying categories themselves:

    >>> [i for i in dir(ccolors.cat) if not i.startswith('_')]
    ['add_categories',
     'as_ordered',
     'as_unordered',
     'categories',
     'codes',
     'ordered',
     'remove_categories',
     'remove_unused_categories',
     'rename_categories',
     'reorder_categories',
     'set_categories']

There are a few caveats, though. Categorical data is generally less flexible. For instance, if inserting previously unseen values, you need to add this value to a .categories container first:

    >>> ccolors.iloc[5] = 'a new color'
    # ...
    ValueError: Cannot setitem on a Categorical with a new category,
    set the categories first

    >>> ccolors = ccolors.cat.add_categories(['a new color'])
    >>> ccolors.iloc[5] = 'a new color'  # No more ValueError

If you plan to be setting values or reshaping data rather than deriving new computations, Categorical types may be less nimble.
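To round out this section, here is a rough sketch of the “once per unique category” idea mentioned earlier. It is an illustration of the principle rather than Pandas’ internal implementation: the string method runs on the handful of categories, and the integer codes fan the results back out to every row. The sample data is made up.

```python
import numpy as np
import pandas as pd

# 5,000 rows but only 3 distinct strings
colors = pd.Series(['periwinkle', 'mint green', 'rose', 'periwinkle', 'rose'] * 1000)
ccolors = colors.astype('category')

# Step 1: do the string work on the unique categories only
upper_categories = ccolors.cat.categories.str.upper()

# Step 2: use the integer codes to look up the result for each row
codes = ccolors.cat.codes.values
mapped_back = pd.Series(np.asarray(upper_categories)[codes], index=ccolors.index)

print(len(colors), len(upper_categories))
print(mapped_back.head().tolist())
```

The result matches colors.str.upper(), but the uppercase conversion itself only touched three strings.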

6. Introspect Groupby Objects via Iteration

When you call df.groupby('x'), the resulting Pandas groupby object can be a bit opaque. This object is lazily instantiated and doesn’t have any meaningful representation on its own.

You can demonstrate with the abalone dataset from example 1:

    >>> abalone['ring_quartile'] = pd.qcut(abalone.rings, q=4, labels=range(1, 5))
    >>> grouped = abalone.groupby('ring_quartile')

    >>> grouped
    <pandas.core.groupby.groupby.DataFrameGroupBy object at 0x11c1169b0>

Alright, now you have a groupby object, but what is this thing, and how do you see it?

Before you call something like grouped.apply(func), you can take advantage of the fact that groupby objects are iterable:

    >>> help(grouped.__iter__)

            Groupby iterator

            Returns
            -------
            Generator yielding sequence of (name, subsetted object)
            for each group

Each “thing” yielded by grouped.__iter__() is a tuple of (name, subsetted object), where name is the value of the column on which you’re grouping, and subsetted object is a DataFrame that is a subset of the original DataFrame based on whatever grouping condition you specify. That is, the data gets chunked by group:

    >>> for idx, frame in grouped:
    ...     print(f'Ring quartile: {idx}')
    ...     print('-' * 16)
    ...     print(frame.nlargest(3, 'weight'), end='\n\n')
    ...
    Ring quartile: 1
    ----------------
         sex  length   diam  height  weight  rings ring_quartile
    2619   M   0.690  0.540   0.185  1.7100      8             1
    1044   M   0.690  0.525   0.175  1.7005      8             1
    1026   M   0.645  0.520   0.175  1.5610      8             1

    Ring quartile: 2
    ----------------
         sex  length  diam  height  weight  rings ring_quartile
    2811   M   0.725  0.57   0.190  2.3305      9             2
    1426   F   0.745  0.57   0.215  2.2500      9             2
    1821   F   0.720  0.55   0.195  2.0730      9             2

    Ring quartile: 3
    ----------------
         sex  length  diam  height  weight  rings ring_quartile
    1209   F   0.780  0.63   0.215   2.657     11             3
    1051   F   0.735  0.60   0.220   2.555     11             3
    3715   M   0.780  0.60   0.210   2.548     11             3

    Ring quartile: 4
    ----------------
         sex  length   diam  height  weight  rings ring_quartile
    891    M   0.730  0.595    0.23  2.8255     17             4
    1763   M   0.775  0.630    0.25  2.7795     12             4
    165    M   0.725  0.570    0.19  2.5500     14             4

Relatedly, a groupby object also has .groups and a group-getter, .get_group():

    >>> grouped.groups.keys()
    dict_keys([1, 2, 3, 4])

    >>> grouped.get_group(2).head()
       sex  length   diam  height  weight  rings ring_quartile
    2    F   0.530  0.420   0.135  0.6770      9             2
    8    M   0.475  0.370   0.125  0.5095      9             2
    19   M   0.450  0.320   0.100  0.3810      9             2
    23   F   0.550  0.415   0.135  0.7635      9             2
    39   M   0.355  0.290   0.090  0.3275      9             2

This can help you be a little more confident that the operation you’re performing is the one you want:

    >>> grouped[['height', 'weight']].agg(['mean', 'median'])
                   height         weight
                     mean median    mean  median
    ring_quartile
    1              0.1066  0.105  0.4324  0.3685
    2              0.1427  0.145  0.8520  0.8440
    3              0.1572  0.155  1.0669  1.0645
    4              0.1648  0.165  1.1149  1.0655

No matter what calculation you perform on grouped, be it a single Pandas method or custom-built function, each of these “sub-frames” is passed one-by-one as an argument to that callable. This is where the term “split-apply-combine” comes from: break the data up by groups, perform a per-group calculation, and recombine in some aggregated fashion.

If you’re having trouble visualizing exactly what the groups will actually look like, simply iterating over them and printing a few can be tremendously useful.
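As a small sketch of split-apply-combine done by hand, the snippet below iterates over the groups of a made-up DataFrame, computes a per-group mean, and stitches the pieces back together. The result is then compared with the built-in one-liner.

```python
import pandas as pd

# Tiny illustrative dataset
df = pd.DataFrame({
    'group': ['a', 'b', 'a', 'b', 'c'],
    'weight': [1.0, 2.0, 3.0, 6.0, 5.0],
})

# Split: iterate over (name, sub-frame) pairs.
# Apply: compute the mean weight of each sub-frame.
pieces = {name: frame['weight'].mean() for name, frame in df.groupby('group')}

# Combine: assemble the per-group results into one Series
manual = pd.Series(pieces, name='weight')

# The same calculation, letting Pandas do all three steps
builtin = df.groupby('group')['weight'].mean()

print(manual.to_dict())  # {'a': 2.0, 'b': 4.0, 'c': 5.0}
```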

7. Use This Mapping Trick for Membership Binning

Let’s say that you have a Series and a corresponding “mapping table” where each value belongs to a multi-member group, or to no groups at all:

    >>> countries = pd.Series([
    ...     'United States',
    ...     'Canada',
    ...     'Mexico',
    ...     'Belgium',
    ...     'United Kingdom',
    ...     'Thailand'
    ... ])
    ...
    >>> groups = {
    ...     'North America': ('United States', 'Canada', 'Mexico', 'Greenland'),
    ...     'Europe': ('France', 'Germany', 'United Kingdom', 'Belgium')
    ... }

In other words, you need to map countries to the following result:

    0    North America
    1    North America
    2    North America
    3           Europe
    4           Europe
    5            other
    dtype: object

What you need here is a function similar to Pandas’ pd.cut(), but for binning based on categorical membership. You can use pd.Series.map(), which you already saw in example #5, to mimic this:

    from typing import Any

    def membership_map(s: pd.Series, groups: dict,
                       fillvalue: Any=-1) -> pd.Series:
        # Reverse & expand the dictionary key-value pairs
        groups = {x: k for k, v in groups.items() for x in v}
        return s.map(groups).fillna(fillvalue)

This should be significantly faster than a nested Python loop through groups for each country in countries.

Here’s a test drive:

    >>> membership_map(countries, groups, fillvalue='other')
    0    North America
    1    North America
    2    North America
    3           Europe
    4           Europe
    5            other
    dtype: object

Let’s break down what’s going on here. (Sidenote: this is a great place to step into a function’s scope with Python’s debugger, pdb, to inspect what variables are local to the function.)

The objective is to map each group in groups to an integer. However, Series.map() will not recognize 'ab'—it needs the broken-out version with each character from each group mapped to an integer. This is what the dictionary comprehension is doing:

    >>> groups = dict(enumerate(('ab', 'cd', 'xyz')))
    >>> {x: k for k, v in groups.items() for x in v}
    {'a': 0, 'b': 0, 'c': 1, 'd': 1, 'x': 2, 'y': 2, 'z': 2}

This dictionary can be passed to s.map() to map or “translate” its values to their corresponding group indices.
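The same inversion can be tried in plain Python with the country groups from this section. The sketch below also includes the nested-loop approach for contrast; slow_lookup() is a hypothetical helper written only for this comparison.

```python
groups = {
    'North America': ('United States', 'Canada', 'Mexico', 'Greenland'),
    'Europe': ('France', 'Germany', 'United Kingdom', 'Belgium'),
}
countries = ['United States', 'Belgium', 'Thailand']

# Invert once: each member points back to the name of its group
lookup = {member: name for name, members in groups.items() for member in members}

# One dictionary lookup per country, with a default for non-members
fast = [lookup.get(country, 'other') for country in countries]


def slow_lookup(country):
    """Nested-loop alternative: scan every group for every country."""
    for name, members in groups.items():
        if country in members:
            return name
    return 'other'


slow = [slow_lookup(country) for country in countries]
print(fast)  # ['North America', 'Europe', 'other']
```

Both approaches agree, but the inverted dictionary pays the cost of walking the groups only once.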

8. Understand How Pandas Uses Boolean Operators

You may be familiar with Python’s operator precedence, where and, not, and or have lower precedence than comparison operators such as <, <=, >, >=, !=, and ==. Consider the two statements below, where < and > have higher precedence than the and operator:

    >>> # Evaluates to "False and True"
    >>> 4 < 3 and 5 > 4
    False

    >>> # Evaluates to 4 < 5 > 4
    >>> 4 < (3 and 5) > 4
    True

Note: It’s not specifically Pandas-related, but 3 and 5 evaluates to 5 because of short-circuit evaluation:

“The return value of a short-circuit operator is the last evaluated argument.” (Source)
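A quick sketch of that rule with plain integers: and returns the first falsy operand or else the last one, while or returns the first truthy operand or else the last one.

```python
results = [
    3 and 5,  # 3 is truthy, so evaluation continues and 5 is returned
    0 and 5,  # 0 is falsy, so evaluation stops and 0 is returned
    3 or 5,   # 3 is truthy, so evaluation stops and 3 is returned
    0 or 5,   # 0 is falsy, so evaluation continues and 5 is returned
]
print(results)  # [5, 0, 3, 5]
```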

Pandas (and NumPy, on which Pandas is built) does not use and, or, or not. Instead, it uses &, |, and ~, respectively, which are normal, bona fide Python bitwise operators.

These operators are not “invented” by Pandas. Rather, &, |, and ~ are valid Python built-in operators that have higher (rather than lower) precedence than comparison operators. (Pandas overrides dunder methods like .__ror__() that map to the | operator.) To sacrifice some detail, you can think of “bitwise” as “elementwise” as it relates to Pandas and NumPy:

    >>> pd.Series([True, True, False]) & pd.Series([True, False, False])
    0     True
    1    False
    2    False
    dtype: bool

It pays to understand this concept in full. Let’s say that you have a range-like Series:

    >>> s = pd.Series(range(10))

I would guess that you may have seen this exception raised at some point:

    >>> s % 2 == 0 & s > 3
    ValueError: The truth value of a Series is ambiguous.
    Use a.empty, a.bool(), a.item(), a.any() or a.all().

What’s happening here? It’s helpful to incrementally bind the expression with parentheses, spelling out how Python expands this expression step by step:

    s % 2 == 0 & s > 3                      # Same as above, original expression
    (s % 2) == 0 & s > 3                    # Modulo is most tightly binding here
    (s % 2) == (0 & s) > 3                  # Bitwise-and is second-most-binding
    (s % 2) == (0 & s) and (0 & s) > 3      # Expand the statement
    ((s % 2) == (0 & s)) and ((0 & s) > 3)  # The `and` operator is least-binding

The expression s % 2 == 0 & s > 3 is equivalent to (or gets treated as) ((s % 2) == (0 & s)) and ((0 & s) > 3). This is called expansion: x < y <= z is equivalent to x < y and y <= z.
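You don’t have to take the grouping on faith. As a sketch, the standard library’s ast module can parse the expression without evaluating it, which shows that Python sees a single chained comparison whose middle operand is 0 & s.

```python
import ast

# Parse only; nothing is evaluated, so `s` does not need to exist
tree = ast.parse('s % 2 == 0 & s > 3', mode='eval')
compare = tree.body

node_type = type(compare).__name__                      # The top-level node
operators = [type(op).__name__ for op in compare.ops]   # The chained operators
left_op = type(compare.left.op).__name__                # Operator inside (s % 2)
middle_op = type(compare.comparators[0].op).__name__    # Operator inside (0 & s)

print(node_type, operators, left_op, middle_op)
# Compare ['Eq', 'Gt'] Mod BitAnd
```

A single Compare node with two operators is exactly the chained form that Python later expands with an implicit and.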

Okay, now stop there, and let’s bring this back to Pandas-speak. You have two Pandas Series that we’ll call left and right:

    >>> left = (s % 2) == (0 & s)
    >>> right = (0 & s) > 3
    >>> left and right  # This will raise the same ValueError

You know that a statement of the form left and right is truth-value testing both left and right, as in the following:

    >>> bool(left) and bool(right)

The problem is that Pandas developers intentionally don’t establish a truth-value (truthiness) for an entire Series. Is a Series True or False? Who knows? The result is ambiguous:

    >>> bool(s)
    ValueError: The truth value of a Series is ambiguous.
    Use a.empty, a.bool(), a.item(), a.any() or a.all().

The only comparison that makes sense is an elementwise comparison. That’s why, if a comparison operator is involved, you’ll need parentheses:

    >>> (s % 2 == 0) & (s > 3)
    0    False
    1    False
    2    False
    3    False
    4     True
    5    False
    6     True
    7    False
    8     True
    9    False
    dtype: bool

In short, if you see the ValueError above pop up with boolean indexing, the first thing you should probably look to do is sprinkle in some needed parentheses.

9. Load Data From the Clipboard

It’s a common situation to need to transfer data from a place like Excel or Sublime Text to a Pandas data structure. Ideally, you want to do this without going through the intermediate step of saving the data to a file and afterwards reading in the file to Pandas.

You can load in DataFrames from your computer’s clipboard data buffer with pd.read_clipboard(). Its keyword arguments are passed on to pd.read_table().

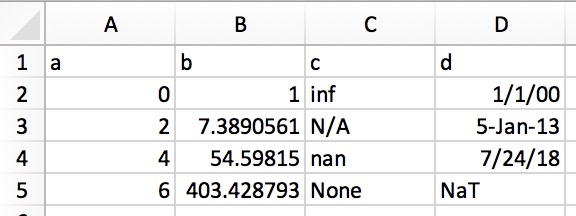

This allows you to copy structured text directly to a DataFrame or Series. In Excel, the data would look something like this:

![Excel Clipboard Data]()

Its plain-text representation (for example, in a text editor) would look like this:

    a   b            c     d
    0   1            inf   1/1/00
    2   7.389056099  N/A   5-Jan-13
    4   54.59815003  nan   7/24/18
    6   403.4287935  None  NaT

Simply highlight and copy the plain text above, and call pd.read_clipboard():

    >>> df = pd.read_clipboard(na_values=[None], parse_dates=['d'])
    >>> df
       a         b    c          d
    0  0    1.0000  inf 2000-01-01
    1  2    7.3891  NaN 2013-01-05
    2  4   54.5982  NaN 2018-07-24
    3  6  403.4288  NaN        NaT

    >>> df.dtypes
    a             int64
    b           float64
    c           float64
    d    datetime64[ns]
    dtype: object
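Clipboard contents are hard to reproduce in a script, so here is a rough stand-in that feeds the same text through io.StringIO instead. It assumes whitespace-separated columns, treats the string 'None' as missing explicitly, and leaves the date column unparsed to keep the sketch simple.

```python
import io

import numpy as np
import pandas as pd

# The same plain text you would otherwise copy to the clipboard
clipboard_text = """\
a b c d
0 1 inf 1/1/00
2 7.389056099 N/A 5-Jan-13
4 54.59815003 nan 7/24/18
6 403.4287935 None NaT
"""

# Split on runs of whitespace; treat 'None' as a missing value
df = pd.read_csv(io.StringIO(clipboard_text), sep=r'\s+', na_values=['None'])

print(df[['a', 'b', 'c']])
print(df['c'].isna().sum())  # Count of missing values in column c
```

This is handy for unit tests or bug reports, where you want the input to travel with the code.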

10. Write Pandas Objects Directly to Compressed Format

This one’s short and sweet to round out the list. As of Pandas version 0.21.0, you can write Pandas objects directly to gzip, bz2, zip, or xz compression, rather than stashing the uncompressed file in memory and converting it. Here’s an example using the abalone data from trick #1:

    abalone.to_json('df.json.gz', orient='records',
                    lines=True, compression='gzip')

In this case, the size difference is 11.6x:

    >>> import os.path
    >>> abalone.to_json('df.json', orient='records', lines=True)
    >>> os.path.getsize('df.json') / os.path.getsize('df.json.gz')
    11.603035760226396

Want to Add to This List? Let Us Know

Hopefully, you were able to pick up a couple of useful tricks from this list to lend your Pandas code better readability, versatility, and performance.

If you have something up your sleeve that’s not covered here, please leave a suggestion in the comments or as a GitHub Gist. We will gladly add to this list and give credit where it’s due.
