Techiediaries - Django: 10xDev Newsletter #1: Vibe Coding, Clone UIs with AI; Python for Mobile Dev; LynxJS — Tiktok New Framework; New Angular 19, React 19, Laravel 12 Features; AI Fakers in Recruitment; Local-First Apps…

Anarcat: Losing the war for the free internet

Warning: this is a long ramble I wrote after an outage of my home internet. You'll get your regularly scheduled programming shortly.

I didn't realize this until relatively recently, but we're at war.

Fascists and capitalists are trying to take over the world, and it's bringing utter chaos.

We're more numerous than them, of course: this is only a handful of people screwing everyone else over, but they've accumulated so much wealth and media control that it's getting really, really hard to move around.

Everything is surveilled: people are carrying tracking and recording devices in their pockets at all times, or they drive around in surveillance machines. Payments are all turning digital. There are cameras everywhere, including in cars. Personal data leaks are so common people kind of assume their personal address, email address, and other personal information has already been leaked.

The internet itself is collapsing: most people are using the network only as a channel to reach a "small" set of "hyperscalers": mind-bogglingly large datacenters that don't really operate like the old internet. Once you reach the local endpoint, you're not on the internet anymore. Netflix, Google, Facebook (Instagram, Whatsapp, Messenger), Apple, Amazon, Microsoft (Outlook, Hotmail, etc), all those things are not really the internet anymore.

Those companies operate over the "internet" (as in the TCP/IP network), but they are not an "interconnected network" as much as their own, gigantic silos so much bigger than everything else that they essentially dictate how the network operates, regardless of standards. You access it over "the web" (as in "HTTP") but the fabric is not made of interconnected links that cross sites: all those sites are trying really hard to keep you captive on their platforms.

Besides, you think you're writing an email to the state department, for example, but you're really writing to Microsoft Outlook. That app your university or border agency tells you to install, the backend is not hosted by those institutions, it's on Amazon. Heck, even Netflix is on Amazon.

Meanwhile I've been operating my own mail server first under my bed (yes, really) and then in a cupboard or the basement for almost three decades now. And what for?

So I can tell people I can? Maybe!

I guess the reason I'm doing this is the same reason people are suddenly asking me about the (dead) mesh again. People are worried and scared that the world has been taken over, and they're right: we have gotten seriously screwed.

It's the same reason I keep doing radio, minimally know how to grow food, ride a bike, build a shed, paddle a canoe, archive and document things, talk with people, host an assembly. Because, when push comes to shove, there's no one else who's going to do it for you, at least not the way that benefits the people.

The Internet is one of humanity's greatest accomplishments. Obviously, oligarchs and fascists are trying to destroy it. I just didn't expect the tech bros to be flipping to that side so easily. I thought we were friends, but I guess we are, after all, enemies.

That said, that old internet is still around. It's getting harder to host your own stuff at home, but it's not impossible. Mail is tricky because of reputation, but it's also tricky in the cloud (don't get fooled!), so it's not that much easier (or cheaper) there.

So there's things you can do, if you're into tech.

Share your wifi with your neighbours.

Build a LAN. Throw a wire over to your neighbour too, it works better than wireless.

Host a web server. Build a site with a static site generator and throw it in the wind.

Download and share torrents, and why not a tracker.

Run an IRC server (or Matrix, if you want to federate and lose high availability).

At least use Signal, not Whatsapp or Messenger.

And yes, why not, run a mail server.

Don't write new software, there's plenty of that around already.

(Just kidding, you can write code, cypherpunk.)

You can do many of those things just by setting up a FreedomBox.

That is, after all, the internet: people doing their own thing for their own people.

Otherwise, it's just like sitting in front of the television and watching the ads. Opium of the people, like the good old time.

Disobey. Revolt. Build.

Anarcat: Minor outage at Teksavvy business

This morning, internet was down at home. The last time I had such an issue was in February 2023, when my provider was Oricom. Now I'm with a business service at Teksavvy Internet (TSI), in which I pay 100$ per month for a 250/50 mbps business package, with a static IP address, on which I run, well, everything: email services, this website, etc.

Mitigation

The main problem when the service goes down like this for prolonged outages is email. Mail is pretty resilient to failures like this but after some delay (which varies according to the other end), mail starts to drop. I am actually not sure what the various settings are among different providers, but I would assume mail is typically kept for about 24h, so that's our mark.

Last time, I set up VMs at Linode and Digital Ocean to deal better with this. I have actually kept those VMs running as DNS servers until now, so that part is already done.

I had fantasized about Puppetizing the mail server configuration so that I could quickly spin up mail exchangers on those machines. But now I am realizing that my Puppet server is one of the services that's down, so this would not work, at least not unless the manifests can be applied without a Puppet server (say with puppet apply).

Thankfully, my colleague groente did amazing work to refactor our Postfix configuration in Puppet at Tor, and that gave me the motivation to reproduce the setup in the lab. So I have finally Puppetized part of my mail setup at home. That used to be hand-crafted experimental stuff documented in a couple of pages in this wiki, but is now being deployed by Puppet.

It's not complete yet: spam filtering (including DKIM checks and graylisting) is not implemented yet, but that's the next step, presumably to do during the next outage. The setup should be deployable with puppet apply, however, and I have refined that mechanism a little bit, with the run script.

Heck, it's not even deployed yet. But the hard part / grunt work is done.

Other

The outage was "short" enough (5 hours) that I didn't take time to deploy the other mitigations I had deployed in the previous incident.

But I'm starting to seriously consider deploying a web (and caching) reverse proxy so that I endure such problems more gracefully.

Side note on proper services

Typically, I tend to think of a properly functioning service as having four things:

- backups

- documentation

- monitoring

- automation

- high availability

Yes, I miscounted. This is why you have high availability.

Backups

Duh. If data is maliciously or accidentally destroyed, you need a copy somewhere. Preferably in a way that malicious joe can't get to.

This is harder than you think.

Documentation

I have an entire template for this. Essentially, it boils down to using https://diataxis.fr/ and this "audit" guide. For me, the most important parts are:

- disaster recovery (includes backups, probably)

- playbook

- install/upgrade procedures (see automation)

You probably know this is hard, and this is why you're not doing it. Do it anyways, you'll think it sucks, but you'll be really grateful for whatever scraps you wrote when you're in trouble.

Monitoring

If you don't have monitoring, you'll know it fails too late, and you won't know it recovers. Consider high availability, work hard to reduce noise, and don't have machines wake people up, that's literally torture and is against the Geneva convention.

Consider predictive algorithms to prevent failures, like "add storage within 2 weeks before this disk fills up".
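As a rough illustration of that kind of prediction, here is a minimal sketch that extrapolates disk usage linearly from two samples. The function name, the sample format and the 14-day threshold are assumptions made for this example, not part of any monitoring tool:

```python
from datetime import datetime, timedelta


def days_until_full(samples, capacity_bytes):
    """Estimate days until the disk fills, from (timestamp, used_bytes) samples.

    Draws a straight line through the first and last sample (an assumption:
    real usage is rarely this regular). Returns None when usage is flat or
    shrinking, or when the samples span no time.
    """
    (t0, used0), (t1, used1) = samples[0], samples[-1]
    elapsed_days = (t1 - t0).total_seconds() / 86400
    if elapsed_days <= 0:
        return None
    growth_per_day = (used1 - used0) / elapsed_days
    if growth_per_day <= 0:
        return None
    return (capacity_bytes - used1) / growth_per_day


# Hypothetical numbers: usage grew from 700 to 800 units over 10 days.
now = datetime(2025, 1, 15)
samples = [(now - timedelta(days=10), 700), (now, 800)]
remaining = days_until_full(samples, capacity_bytes=1000)

# Flag the disk when fewer than two weeks of headroom remain.
needs_storage_soon = remaining is not None and remaining < 14
```

A condition like this looks at a trend rather than a fixed threshold, so it leaves time to act before the failure.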

This is harder than you think.

Automation

Make it easy to redeploy the service elsewhere.

Yes, I know you have backups. That is not enough: that typically restores data and while it can also include configuration, you're going to need to change things when you restore, which is what automation (or call it "configuration management" if you will) will do for you anyways.

This also means you can do unit tests on your configuration, otherwise you're building legacy.

This is probably as hard as you think.

High availability

Make it not fail when one part goes down.

Eliminate single points of failure.

This is easier than you think, except for storage and DNS (which, I guess, means it's harder than you think too).

Assessment

In the above 5 items, I check two:

- backups

- documentation

And barely: I'm not happy about the offsite backups, and my documentation is much better at work than at home (and even there, I have a 15 year backlog to catch up on).

I barely have monitoring: Prometheus is scraping parts of the infra, but I don't have any sort of alerting -- by which I don't mean "electrocute myself when something goes wrong", I mean "there's a set of thresholds and conditions that define an outage and I can look at it".

Automation is wildly incomplete. My home server is a random collection of old experiments and technologies, ranging from Apache with Perl and CGI scripts to Docker containers running Golang applications. Most of it is not Puppetized (but the ratio is growing). Puppet itself introduces a huge attack vector with kind of catastrophic lateral movement if the Puppet server gets compromised.

And, fundamentally, I am not sure I can provide high availability in the lab. I'm just this one guy running my home network, and I'm growing older. I'm thinking more about winding things down than building things now, and that's just really sad, because I feel we're losing (well that escalated quickly).

Resolution

In the end, I didn't need any mitigation and the problem fixed itself. I did do quite a bit of cleanup so that feels somewhat good, although I despaired quite a bit at the amount of technical debt I've accumulated in the lab.

Timeline

Times are in UTC-4.

- 6:52: IRC bouncer goes offline

- 9:20: called TSI support, waited on the line 15 minutes then was told I'd get a call back

- 9:54: outage apparently detected by TSI

- 11:00: no response, tried calling back support again

- 11:10: confirmed bonding router outage, no official ETA but "today", source of the 9:54 timestamp above

- 12:08: TPA monitoring notices service restored

- 12:34: call back from TSI; service restored, problem was with the "bonder" configuration on their end, which was "fighting between Montréal and Toronto"

Eli Bendersky: Understanding Numpy's einsum

This is a brief explanation and a cookbook for using numpy.einsum, which lets us use Einstein notation to evaluate operations on multi-dimensional arrays. The focus here is mostly on einsum's explicit mode (with -> and output dimensions explicitly specified in the subscript string) and use cases common in ML papers, though I'll also briefly touch upon other patterns.

Basic use case - matrix multiplication

Let's start with a basic demonstration: matrix multiplication using einsum. Throughout this post, A and B will be these matrices:

    >>> A = np.arange(6).reshape(2,3)
    >>> A
    array([[0, 1, 2],
           [3, 4, 5]])
    >>> B = np.arange(12).reshape(3,4)+1
    >>> B
    array([[ 1,  2,  3,  4],
           [ 5,  6,  7,  8],
           [ 9, 10, 11, 12]])

The shapes of A and B let us multiply A @ B to get a (2,4) matrix. This can also be done with einsum, as follows:

    >>> np.einsum('ij,jk->ik', A, B)
    array([[ 23,  26,  29,  32],
           [ 68,  80,  92, 104]])

The first parameter to einsum is the subscript string, which describes the operation to perform on the following operands. Its format is a comma-separated list of inputs, followed by -> and an output. An arbitrary number of positional operands follows; they match the comma-separated inputs specified in the subscript. For each input, its shape is a sequence of dimension labels like i (any single letter).

In our example, ij refers to the matrix A - denoting its shape as (i,j), and jk refers to the matrix B - denoting its shape as (j,k). While in the subscript these dimension labels are symbolic, they become concrete when einsum is invoked with actual operands. This is because the shapes of the operands are known at that point.

The following is a simplified mental model of what einsum does (for a more complete description, read An instructional implementation of einsum):

- The output part of the subscript specifies the shape of the output array, expressed in terms of the input dimension labels.

- Whenever a dimension label is repeated in the input and absent in the output - it is contracted (summed). In our example, j is repeated (and doesn't appear in the output), so it's contracted: each output element [ik] is a dot product of the i'th row of the first input with the k'th column of the second input.
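To make the contraction rule concrete, the following sketch recomputes a single output element by hand and compares it with what einsum produces. The indices i = 1, k = 2 are picked arbitrarily for illustration:

```python
import numpy as np

A = np.arange(6).reshape(2, 3)
B = np.arange(12).reshape(3, 4) + 1

result = np.einsum('ij,jk->ik', A, B)

# j is contracted: output element [i, k] is the dot product of
# row i of A with column k of B.
i, k = 1, 2
manual = sum(A[i, j] * B[j, k] for j in range(A.shape[1]))

same_element = manual == result[i, k]
same_as_matmul = np.array_equal(result, A @ B)
```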

We can easily transpose the output, by flipping its shape:

    >>> np.einsum('ij,jk->ki', A, B)
    array([[ 23,  68],
           [ 26,  80],
           [ 29,  92],
           [ 32, 104]])

This is equivalent to (A @ B).T.

When reading ML papers, I find that even for such simple cases as basic matrix multiplication, the einsum notation is often preferred to the plain @ (or its function form like np.dot and np.matmul). This is likely because the einsum approach is self-documenting, helping the writer reason through the dimensions more explicitly.

Batched matrix multiplication

Using einsum instead of @ for matmuls as a documentation prop starts making even more sense when the ndim [1] of the inputs grows. For example, we may want to perform matrix multiplication on a whole batch of inputs within a single operation. Suppose we have these arrays:

    >>> Ab = np.arange(6*6).reshape(6,2,3)
    >>> Bb = np.arange(6*12).reshape(6,3,4)

Here 6 is the batch dimension. We're multiplying a batch of six (2,3) matrices by a batch of six (3,4) matrices; each matrix in Ab is multiplied by a corresponding matrix in Bb. The result is shaped (6,2,4).

We can perform batched matmul by doing Ab @ Bb - in Numpy this just works: the contraction happens between the last dimension of the first array and the penultimate dimension of the second array. This is repeated for all the dimensions preceding the last two. The shape of the output is (6,2,4), as expected.

With the einsum notation, we can do the same, but in a way that's more self-documenting:

    >>> np.einsum('bmd,bdn->bmn', Ab, Bb)

This is equivalent to Ab @ Bb, but the subscript string lets us name the dimensions with single letters and makes it easier to follow w.r.t. what's going on. For example, in this case b may stand for batch, m and n may stand for sequence lengths and d could be some sort of model dimension/depth.

Note: while b is repeated in the inputs of the subscript, it also appears in the output; therefore it's not contracted.
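One way to convince ourselves that b is kept rather than summed is to compare the einsum result against an explicit loop over the batch. This is only a sanity-check sketch; the name looped is made up for the example:

```python
import numpy as np

Ab = np.arange(6 * 6).reshape(6, 2, 3)
Bb = np.arange(6 * 12).reshape(6, 3, 4)

batched = np.einsum('bmd,bdn->bmn', Ab, Bb)

# Multiply each pair of matrices separately, then stack along the batch axis.
looped = np.stack([Ab[b] @ Bb[b] for b in range(Ab.shape[0])])

matches_loop = np.array_equal(batched, looped)
matches_matmul = np.array_equal(batched, Ab @ Bb)
```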

Ordering output dimensions

The order of output dimensions in the subscript of einsum allows us to do more than just matrix multiplications; we can also transpose arbitrary dimensions:

    >>> Bb.shape
    (6, 3, 4)
    >>> np.einsum('ijk->kij', Bb).shape
    (4, 6, 3)

This capability is commonly combined with matrix multiplication to specify exactly the order of dimensions in a multi-dimensional batched array multiplication. The following is an example taken directly from the Fast Transformer Decoding paper by Noam Shazeer.

In the section on batched multi-head attention, the paper defines the following arrays:

- M: a tensor with shape (b,m,d) (batch, sequence length, model depth)

- P_k: a tensor with shape (h,d,k) (number of heads, model depth, head size for keys)

Let's define some dimension size constants and random arrays:

    >>> m = 4; d = 3; k = 6; h = 5; b = 10
    >>> Pk = np.random.randn(h, d, k)
    >>> M = np.random.randn(b, m, d)

The paper performs an einsum to calculate all the keys in one operation:

    >>> np.einsum('bmd,hdk->bhmk', M, Pk).shape
    (10, 5, 4, 6)

Note that this involves both contraction (of the d dimension) and ordering of the outputs so that batch comes before heads. Theoretically, we could reverse this order by doing:

    >>> np.einsum('bmd,hdk->hbmk', M, Pk).shape
    (5, 10, 4, 6)

And indeed, we could have the output in any order. Obviously, bhmk is the one that makes sense for the specific operation at hand. It's important to highlight the readability of the einsum approach as opposed to a simple M @ Pk, where the dimensions involved are much less clear [2].
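For comparison, here is a sketch of the same computation written with @. With these shapes, M needs an extra axis before it broadcasts against Pk. The seeded random generator and the variable names are choices made for this example:

```python
import numpy as np

rng = np.random.default_rng(0)
b, m, d, k, h = 10, 4, 3, 6, 5
M = rng.standard_normal((b, m, d))
Pk = rng.standard_normal((h, d, k))

keys = np.einsum('bmd,hdk->bhmk', M, Pk)

# M[:, np.newaxis] has shape (b, 1, m, d); it broadcasts against (h, d, k)
# to give (b, h, m, k). Nothing in this expression says which axis is which.
keys_matmul = M[:, np.newaxis] @ Pk

same = np.allclose(keys, keys_matmul)
```

The two results agree, but the einsum subscript states the role of each axis, while the @ version relies on the reader knowing the broadcasting rules.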

Contraction over multiple dimensions

More than one dimension can be contracted in a single einsum, as demonstrated by another example from the same paper:

    >>> b = 10; n = 4; d = 3; v = 6; h = 5
    >>> O = np.random.randn(b, h, n, v)
    >>> Po = np.random.randn(h, d, v)
    >>> np.einsum('bhnv,hdv->bnd', O, Po).shape
    (10, 4, 3)

Both h and v appear in both inputs of the subscript but not in the output. Therefore, both these dimensions are contracted - each element of the output is a sum across both the h and v dimensions. This would be much more cumbersome to achieve without einsum!
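To give a sense of the alternative, this sketch performs the same double contraction with np.tensordot, where the contracted axes have to be given by position rather than by name. The seeded generator is an assumption made to keep the example reproducible:

```python
import numpy as np

rng = np.random.default_rng(0)
b, n, d, v, h = 10, 4, 3, 6, 5
O = rng.standard_normal((b, h, n, v))
Po = rng.standard_normal((h, d, v))

out_einsum = np.einsum('bhnv,hdv->bnd', O, Po)

# Contract axes 1 (h) and 3 (v) of O with axes 0 (h) and 2 (v) of Po.
# The remaining axes come out as (b, n) followed by (d,).
out_tensordot = np.tensordot(O, Po, axes=([1, 3], [0, 2]))

same = np.allclose(out_einsum, out_tensordot)
```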

Transposing inputs

When specifying the inputs to einsum, we can transpose them by reordering the dimensions. Recall our matrix A with shape (2,3); we can't multiply A by itself - the shapes don't match, but we can multiply it by its own transpose as in A @ A.T. With einsum, we can do this as follows:

    >>> np.einsum('ij,kj->ik', A, A)
    array([[ 5, 14],
           [14, 50]])

Note the order of dimensions in the second input of the subscript: kj instead of jk as before. Since j is still the label repeated in inputs but omitted in the output, it's the one being contracted.

More than two arguments

einsum supports an arbitrary number of inputs; suppose we want to chain-multiply our A and B with this array C:

    >>> C = np.arange(20).reshape(4,5)

We get:

    >>> A @ B @ C
    array([[ 900, 1010, 1120, 1230, 1340],
           [2880, 3224, 3568, 3912, 4256]])

With einsum, we do it like this:

    >>> np.einsum('ij,jk,kp->ip', A, B, C)
    array([[ 900, 1010, 1120, 1230, 1340],
           [2880, 3224, 3568, 3912, 4256]])

Here as well, I find the explicit dimension names a nice self-documentation feature.

An instructional implementation of einsum

The simplified mental model of how einsum works presented above is not entirely correct, though it's definitely sufficient to understand the most common use cases.

I read a lot of "how einsum works" documents online, and unfortunately they all suffer from similar issues; to put it generously, at the very least they're incomplete.

What I found is that implementing a basic version of einsum is easy; and that, moreover, this implementation serves as a much better explanation and mental model of how einsum works than other attempts [3]. So let's get to it.

We'll use the basic matrix multiplication as a guiding example: 'ij,jk->ik'.

This calculation has two inputs; so let's start by writing a function that takes two arguments [4]:

    def calc(__a, __b):

The labels in the subscript specify the dimensions of these inputs, so let's define the dimension sizes explicitly (and also assert that sizes are compatible when a label is repeated in multiple inputs):

        i_size = __a.shape[0]
        j_size = __a.shape[1]
        assert j_size == __b.shape[0]
        k_size = __b.shape[1]

The output shape is (i,k), so we can create an empty output array:

        out = np.zeros((i_size, k_size))

And generate a loop over its every element:

        for i in range(i_size):
            for k in range(k_size):
                ...
        return out

Now, what goes into this loop? It's time to look at the inputs in the subscript. Since there's a contraction on the j label, this means summation over this dimension:

        for i in range(i_size):
            for k in range(k_size):
                for j in range(j_size):
                    out[i, k] += __a[i, j] * __b[j, k]
        return out

Note how we access out, __a and __b in the loop body; this is derived directly from the subscript 'ij,jk->ik'. In fact, this is how the einsum came to be from Einstein notation - more on this later on.

As another example of how to reason about einsum using this approach, consider the subscript from Contraction over multiple dimensions:

'bhnv,hdv->bnd'

Straight away, we can write out the assignment to the output, following the subscript:

    out[b, n, d] += __a[b, h, n, v] * __b[h, d, v]

All that's left is to figure out the loops. As discussed earlier, the outer loops are over the output dimensions, with two additional inner loops for the contracted dimensions in the input (v and h in this case). Therefore, the full implementation (omitting the assignments of *_size variables and dimension checks) is:

    for b in range(b_size):
        for n in range(n_size):
            for d in range(d_size):
                for v in range(v_size):
                    for h in range(h_size):
                        out[b, n, d] += __a[b, h, n, v] * __b[h, d, v]

What happens when the einsum subscript doesn't have any contracted dimension? In this case, there's no summation loop; the outer loops (assigning each element of the output array) are simply assigning the product of the appropriate input elements. Here's an example: 'i,j->ij'. As before, we start by setting up dimension sizes and the output array, and then a loop over each output element:

    def calc(__a, __b):
        i_size = __a.shape[0]
        j_size = __b.shape[0]
        out = np.zeros((i_size, j_size))
        for i in range(i_size):
            for j in range(j_size):
                out[i, j] = __a[i] * __b[j]
        return out

Since there's no dimension in the input that doesn't appear in the output, there's no summation. The result of this computation is the outer product between two 1D input arrays.

I placed a well-documented implementation of this translation on GitHub. The function translate_einsum takes an einsum subscript and emits the text for a Python function that implements it.
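The same loop structure can also be interpreted directly instead of being emitted as source text. The sketch below is not the translate_einsum function mentioned above; naive_einsum is a hypothetical name, and it only handles explicit-mode subscripts without whitespace, ellipsis or repeated output labels:

```python
import itertools

import numpy as np


def naive_einsum(subscript, *operands):
    """Evaluate an explicit-mode einsum subscript with plain Python loops."""
    inputs_part, output = subscript.split('->')
    inputs = inputs_part.split(',')
    assert len(inputs) == len(operands)

    # Map each label to its size, checking that repeated labels agree.
    sizes = {}
    for labels, op in zip(inputs, operands):
        assert len(labels) == op.ndim
        for label, size in zip(labels, op.shape):
            assert sizes.setdefault(label, size) == size

    # Labels that are absent from the output are contracted (summed over).
    contracted = [label for label in sizes if label not in output]
    all_labels = list(output) + contracted

    out = np.zeros([sizes[label] for label in output])
    # One flat loop over every combination of index values replaces
    # the nested for-loops of the hand-written versions.
    for values in itertools.product(*(range(sizes[l]) for l in all_labels)):
        idx = dict(zip(all_labels, values))
        term = 1.0
        for labels, op in zip(inputs, operands):
            term *= op[tuple(idx[l] for l in labels)]
        out[tuple(idx[l] for l in output)] += term
    return out


A = np.arange(6).reshape(2, 3)
B = np.arange(12).reshape(3, 4) + 1
matmul_result = naive_einsum('ij,jk->ik', A, B)
outer_result = naive_einsum('i,j->ij', np.arange(3), np.arange(4))
```

This is far slower than np.einsum and is meant only as a reading aid: the output labels drive the outer iteration, and every other label is summed.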

Einstein notation

This notation is named after Albert Einstein because he introduced it to physics in his seminal 1916 paper on general relativity. Einstein was dealing with cumbersome nested sums to express operations on tensors and used this notation for brevity.

In physics, tensors typically have both subscripts and superscripts (for covariant and contravariant components), and it's common to encounter systems of equations like this:

\[\begin{align*}
B^1 &= a_{11}A^1+a_{12}A^2+a_{13}A^3=\sum_{j=1}^{3} a_{1j}A^j\\
B^2 &= a_{21}A^1+a_{22}A^2+a_{23}A^3=\sum_{j=1}^{3} a_{2j}A^j\\
B^3 &= a_{31}A^1+a_{32}A^2+a_{33}A^3=\sum_{j=1}^{3} a_{3j}A^j\\
\end{align*}\]

We can collapse this into a single sum, using a variable i:

\[B^{i}=\sum_{j=1}^{3} a_{ij}A^j\]

And observe that since j is duplicated inside the sum (once in a subscript and once in a superscript), we can write this as:

\[B^{i}=a_{ij}A^j\]

Where the sum is implied; this is the core of Einstein notation. An observant reader will notice that the original system of equations can easily be expressed as matrix-vector multiplication, but keep a couple of things in mind:

- Matrix notation only became popular in physics after Einstein's work on general relativity (in fact, it was Werner Heisenberg who first introduced it in 1925).

- Einstein notation extends to any number of dimensions. Matrix notation is useful for 2D, but much more difficult to visualize and work with in higher dimensions. In 2D, matrix notation is equivalent to Einstein's.

It should be easy to see the equivalence between this notation and the einsum subscripts discussed in this post. The implicit mode of einsum is even closer to Einstein notation conceptually.
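As a small illustration of that equivalence, the equation for B^i with an implied sum over j maps onto the subscript 'ij,j->i'. The concrete values and the names a, A_vec and B_vec are made up for this sketch:

```python
import numpy as np

a = np.arange(9).reshape(3, 3)   # the coefficients a_ij
A_vec = np.array([1, 2, 3])      # the components A^j

# B^i = a_ij A^j: j appears twice and is summed, i survives into the output.
B_vec = np.einsum('ij,j->i', a, A_vec)

same_as_matvec = np.array_equal(B_vec, a @ A_vec)
```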

Implicit mode einsum

In implicit mode einsum, the output specification (-> and the labels following it) doesn't exist. Instead, the output shape is inferred from the input labels. For example, here's 2D matrix multiplication:

    >>> np.einsum('ij,jk', A, B)
    array([[ 23,  26,  29,  32],
           [ 68,  80,  92, 104]])

In implicit mode, the lexicographic order of the labels matters, as it determines the order of dimensions in the output. For example, if we want to compute (A @ B).T, we can do:

    >>> np.einsum('ij,jh', A, B)
    array([[ 23,  68],
           [ 26,  80],
           [ 29,  92],
           [ 32, 104]])

Since h precedes i in lexicographic order, this is equivalent to the explicit subscript 'ij,jh->hi', whereas the original implicit matmul subscript is equivalent to 'ij,jk->ik'.

Implicit mode isn't used much in ML code and papers, as far as I can tell. From my POV, compared to explicit mode it loses a lot of readability and gains very little savings in typing out the output labels.
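A quick way to check how implicit mode orders its output is to compare each implicit subscript with its explicit counterpart. This sketch reuses the A and B matrices from earlier in the post:

```python
import numpy as np

A = np.arange(6).reshape(2, 3)
B = np.arange(12).reshape(3, 4) + 1

# Labels that appear only once (h and i) are kept, sorted alphabetically.
implicit_hi = np.einsum('ij,jh', A, B)
explicit_hi = np.einsum('ij,jh->hi', A, B)

# With i and k as the surviving labels, the output order is (i, k).
implicit_ik = np.einsum('ij,jk', A, B)
explicit_ik = np.einsum('ij,jk->ik', A, B)

transposed_matches = np.array_equal(implicit_hi, explicit_hi)
plain_matches = np.array_equal(implicit_ik, explicit_ik)
```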

| [1] | In the sense of numpy.ndim - the number of dimensions in the array. Alternatively this is sometimes called rank, but this is confusing because rank is already a name for something else in linear algebra. |

| [2] | I personally believe that one of the biggest downsides of Numpy and all derived libraries (like JAX, PyTorch and TensorFlow) is that there's no way to annotate and check the shapes of operations. This makes some code much less readable than it could be. einsum mitigates this to some extent. |

| [3] | First seen in this StackOverflow answer. |

| [4] | The reason we use underscores here is to avoid collisions with potential dimension labels named a and b. Since we're doing code generation here, variable shadowing is a common issue; see hygienic macros for additional fun. |

Go Deh: Incremental combinations without caching

(Image: Irie server room)

Someone had a problem where they received initial data d1, worked on all r combinations of the data initially received, but by the time they had finished that, they checked and found there was now extra data d2, and they need to, in total, process the r combinations of all data d1+d2.

They don't want to process combinations twice, and the solutions given seemed to generate and store the combinations of d1, then generate the combinations of d1+d2 but test and reject any combination that was found previously.

Seems like a straight-forward answer that is easy to follow, but I thought: Is there a way to create just the extra combinations but without storing all the combinations from before?

My methods

It's out with Vscode as my IDE. I'll be playing with a lot of code that will end up deleted, modified, rerun. I could use a Jupyter notebook, but I can't publish them to my blog satisfactorily. I'll develop a .py file but with cells: a line comment of # %% visually splits the file into cells in the IDE adding buttons and editor commands to execute cells and selected code, in any order, on a restartable kernel running in an interactive window that also runs Ipython.

When doodling like this, I often create long lines, spaced to highlight comparisons between other lines. I refactor names to be concise at the time, as what you can take in at a glance helps find patterns. Because of that, this blog post is not written to be read on the small screens of phones.

So, it's combinations; binomials, nCr.

A start

- First thing was to try and see patterns in the combinations of d1+d2 minus those of just d1.

- Dealing with sets of values; sets are unordered, so will at some time need a function to print them in order to aid pattern finding.

- Combinations can be large - use combinations of ints then later work with any type.

- Initial combinations of 0 to n-1 ints I later found to be more awkward to reason about, so I changed to work with combinations of 1 to n ints. Extending to n+x adds the ints n+1 to n+x to the combinations.

Diffs

In the following cell I create combinations for r = 3, and n in some range n_range in c and print successive combinations, and differences between successive combinations.

Cell output:

Patterns

Looking at diffs 4C3 - 3C3 each tuple is like they took 3C2 = {(1,2), (1,3), (2,3)} and tagged the extra 4 on to every inner tuple.

Let's call this modification extending.

5C3 - 4C3 seems to follow the same pattern.

Function extend
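A minimal sketch of what such an extend helper could look like, assuming combinations are held as a set of tuples of ints. The names n_c_r and extend and their signatures are illustrative assumptions, not the post's own code:

```python
from itertools import combinations


def n_c_r(n, r):
    """All r-combinations of the ints 1..n, as a set of tuples."""
    return set(combinations(range(1, n + 1), r))


def extend(combs, item):
    """Tag `item` onto the end of every combination in `combs`."""
    return {c + (item,) for c in combs}


# The observed pattern: 4C3 - 3C3 is 3C2 with 4 tagged onto every tuple.
diff = n_c_r(4, 3) - n_c_r(3, 3)
pattern_holds = diff == extend(n_c_r(3, 2), 4)
```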

Rename nCr to bino and check extend works

nCr was originally working with 0..n-1 and bino was 1..n. Now they both do.

Cell output:

Pascal

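Show that comb(n+1, r) = comb(n, r) + (n+1) * comb(n, r-1), where (n+1) * means extending every combination with the new item n+1, not numeric multiplication.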
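Read as a statement about sets, this rule can be checked by brute force. The sketch below is self-contained and uses hypothetical helper names (n_c_r, extend, pascal_rule_holds); it is one way to write such a check, not the post's own checker:

```python
from itertools import combinations


def n_c_r(n, r):
    """All r-combinations of the ints 1..n, as a set of tuples."""
    return set(combinations(range(1, n + 1), r))


def extend(combs, item):
    """Tag `item` onto the end of every combination in `combs`."""
    return {c + (item,) for c in combs}


def pascal_rule_holds(n, r):
    """Check comb(n+1, r) == comb(n, r) united with extended comb(n, r-1)."""
    return n_c_r(n + 1, r) == n_c_r(n, r) | extend(n_c_r(n, r - 1), n + 1)


# Try a small grid of n and r values, including r larger than n.
all_hold = all(pascal_rule_holds(n, r)
               for n in range(1, 8)
               for r in range(1, 10))
```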

Pascal's rule checker

Diff by 1 extra item "done", attempting diff by 2.

Diff by 2 pattern finding

It was incremental - find a pattern in 3C?, subtract it from 5C3, Find a pattern in 3C? that covers part of the remainder; repeat.

(Where ? <=3).

I also shortened function pf_set to pf so it would take less space when printing formatted expressions in f-strings

Cell output:

Behold Diff by 2

Cell output:

Pascal's rules for increasing diffs

I followed the same method for diffs of three and ended up with these three functions:

Generalised Pascal's rule

What can I say, I looked for patterns in the Pascal's rule functions for discrete diffs, and looked deeper into identities between the extend functions.

I finally found the following function that passed my tests (many not shown).

I don't like the if r-i > 0 else {()} bit as it doesn't seem elegant. There is probably some identity to be found that would make it disappear but, you know.

Back to the original problem

If comb(d1, r) is processed and then we find an extra d2 items, then we want to process extra_comb(d1, r, d2) where extra_comb does not include or save comb of d1.

We just need to exclude the nCr term in reduction of function pascal_rule_x.
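One way to generate only the extra combinations, without storing the earlier ones, is to pick i items from d2 and the remaining r-i items from d1, for every i from 1 upwards. This is a sketch under that assumption (it follows Vandermonde's identity) and reuses the extra_comb name from the text only for illustration; it is not the post's function:

```python
from itertools import combinations


def extra_comb(d1, r, d2):
    """Yield the r-combinations of d1 + d2 that use at least one item of d2."""
    for i in range(1, min(r, len(d2)) + 1):       # i items come from d2
        for new_part in combinations(d2, i):
            # When r - i is 0 this yields a single empty tuple; when
            # r - i exceeds len(d1) it yields nothing at all.
            for old_part in combinations(d1, r - i):
                yield old_part + new_part


d1, d2, r = [1, 2, 3], [4, 5], 3
extra = list(extra_comb(d1, r, d2))

# Brute-force reference: everything in comb(d1 + d2) that is not in comb(d1).
expected = set(combinations(d1 + d2, r)) - set(combinations(d1, r))
```

Because the i = 0 term (the combinations of d1 alone) is never generated, nothing has to be remembered from the first pass.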

Eventually I arrive at

Tests

END.

Real Python: Quiz: How to Strip Characters From a Python String

In this quiz, you’ll test your understanding of Python’s .strip() method.

You’ll also revisit the related methods .lstrip() and .rstrip(), as well as .removeprefix() and .removesuffix(). These methods are useful for tasks like cleaning user input, standardizing filenames, and preparing data for storage.


Armin Ronacher: Bridging the Efficiency Gap Between FromStr and String

Sometimes in Rust, you need to convert a string into a value of a specific type (for example, converting a string to an integer).

For this, the standard library provides the rather useful FromStr trait. In short, FromStr can convert from a &str into a value of any compatible type. If the conversion fails, an error value is returned. It's unfortunately not guaranteed that this value is an actual Error type, but overall, the trait is pretty useful.

It has, however, a drawback: it takes a &str and not a String, which makes it wasteful in situations where your input is a String. This means that you will end up with a useless clone if you do not actually need the conversion. Why would you do that? Well, consider this type of API:

    let arg1: i64 = parser.next_value()?;
    let arg2: String = parser.next_value()?;

In such cases, having a conversion that works directly with String values would be helpful. To solve this, we can introduce a new trait: FromString, which does the following:

- Converts from String to the target type.

- If converting from String to String, bypass the regular logic and make it a no-op.

- Implement this trait for all uses of FromStr that return an error that can be converted into Box<dyn Error> upon failure.

We start by defining a type alias for our error:

    pub type Error = Box<dyn std::error::Error + Send + Sync + 'static>;

You can be more creative here if you want. The benefit of using this directly is that a lot of types can be converted into that error, even if they are not errors themselves. For instance a FromStr that returns a bare String as error can leverage the standard library's blanket conversion implementation to Error.

Then we define the FromString trait:

    pub trait FromString: Sized {
        fn from_string(s: String) -> Result<Self, Error>;
    }

To implement it, we provide a blanket implementation for all types that implement FromStr, where the error can be converted into our boxed error. As mentioned before, this even works for FromStr where Err = String. We also add a special case for when the input and output types are both String, using transmute_copy to avoid a clone:

    use std::any::TypeId;
    use std::mem::{ManuallyDrop, transmute_copy};
    use std::str::FromStr;

    impl<T> FromString for T
    where
        T: FromStr<Err: Into<Error>> + 'static,
    {
        fn from_string(s: String) -> Result<Self, Error> {
            if TypeId::of::<T>() == TypeId::of::<String>() {
                Ok(unsafe { transmute_copy(&ManuallyDrop::new(s)) })
            } else {
                T::from_str(&s).map_err(Into::into)
            }
        }
    }

Why transmute_copy? We use it instead of the regular transmute because Rust requires both types to have a known size at compile time for transmute to work. Due to limitations a generic T has an unknown size which would cause a hypothetical transmute call to fail with a compile time error. There is nightly-only transmute_unchecked which does not have that issue, but sadly we cannot use it. Another, even nicer solution, would be to have specialization, but sadly that is not stable either. It would avoid the use of unsafe though.

We can also add a helper function to make calling this trait easier:

    pub fn from_string<T, S>(s: S) -> Result<T, Error>
    where
        T: FromString,
        S: Into<String>,
    {
        FromString::from_string(s.into())
    }

The Into might be a bit ridiculous here (isn't the whole point not to clone?), but it makes it easy to test this with static string literals.

Finally here is an example of how to use this:

    let s: String = from_string("Hello World").unwrap();
    let i: i64 = from_string("42").unwrap();

Hopefully, this utility is useful in your own codebase when wanting to abstract over string conversions.

If you need it exactly as implemented, I also published it as a simple crate.

Postscriptum:

A big thank-you goes to David Tolnay and a few others who pointed out that this can be done with transmute_copy.

Another note: the TypeId::of call requires T to be 'static. This is okay for this use, but there are some hypothetical cases where this is not helpful. In that case there is the excellent typeid crate which provides a ConstTypeId, which is like TypeId but is constructible in const in stable Rust.

PyBites: Case Study: Developing and Testing Python Packages with uv

Structuring Python projects properly, especially when developing packages, can often be confusing.

Many developers struggle with common questions:

- How exactly should project folders be organised?

- Should tests be inside or outside the package directory?

- Does the package itself belong at the root level or in a special src directory?

- And how do you properly import and test package functionality from scripts or external test files?

To help clarify these common challenges, I’ll show how I typically set up Python projects and organise package structures using the Python package and environment manager, uv.

The challenge

A typical and recurring problem in Python is how to import code that lives in a different place from where it is called. There are two natural ways to organise your code: modules and packages.

Things are fairly straightforward in the beginning, when you start to organise your code and put some functionality into different modules (aka Python files), but keep all the files in the same directory. This works because Python looks in several places when it resolves import statements, and one of those places is the current working directory, where all the modules are:

    $ tree
    .
    ├── main.py
    └── utils.py

    # main.py
    from utils import helper

    print(helper())

    # utils.py
    def helper():
        return "I am a helper function"

    $ python main.py
    I am a helper function

But things get a bit tricky once you have enough code and decide to organise your code into folders. Let’s say you’ve moved your helper code into a src directory and you still want to import it into main.py, which is outside the src folder. Will this work? Well, that depends… on your Python version!

With Python 3.3 or higher, you will not see any error:

    $ tree
    .
    ├── main.py
    └── src
        └── utils.py

    # main.py
    from src.utils import helper

    print(helper())

    # src/utils.py
    def helper():
        return "I am a helper function"

    $ python main.py
    I am a helper function

But if we run the same example using a Python version prior to 3.3, the import fails with an ImportError (its more specific subclass, the infamous ModuleNotFoundError, has only existed since Python 3.6). The error only occurs prior to 3.3 because Python 3.3 introduced implicit namespace packages. As a result, Python can treat directories without an __init__.py as packages when used in import statements, under certain conditions. I won’t go into further detail here, but you can learn more about namespace packages in PEP 420.

Since namespace packages behave slightly differently from standard packages, we should explicitly include an __init__.py file.
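To make the difference concrete, here is a minimal sketch that builds two throwaway packages in a temporary directory, one without and one with an __init__.py, and asks Python how it classifies them. The package names are hypothetical, and the sys.path tweak is only there to keep the demo self-contained; it is not a recommendation for real projects.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Hypothetical names, chosen to avoid clashing with real packages.
DEMO_PACKAGES = [("demo_namespace_pkg", False), ("demo_regular_pkg", True)]


def package_kinds() -> dict[str, str]:
    """Build two throwaway packages and report how Python classifies them."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name, with_init in DEMO_PACKAGES:
            (root / name).mkdir()
            (root / name / "utils.py").write_text("def helper():\n    return 'ok'\n")
            if with_init:
                (root / name / "__init__.py").write_text("")

        sys.path.insert(0, tmp)  # for this demo only
        try:
            importlib.invalidate_caches()
            kinds = {}
            for name, _ in DEMO_PACKAGES:
                module = importlib.import_module(name)
                # A namespace package has no __init__.py, so it has no __file__.
                is_namespace = getattr(module, "__file__", None) is None
                kinds[name] = "namespace" if is_namespace else "regular"
            return kinds
        finally:
            # Undo the demo's side effects on the import system.
            sys.path.remove(tmp)
            for name, _ in DEMO_PACKAGES:
                sys.modules.pop(name, None)


print(package_kinds())
```

The directory without __init__.py is imported as a namespace package, while the one with the file is a regular package.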

However, does this solve all our problems? For this one case, maybe. But, once we move away from the simple assumption that all caller modules reside in the root directory, we encounter the next issue:

    $ tree
    .
    ├── scripts
    │   └── main.py
    └── src
        ├── __init__.py
        └── utils.py

    # scripts/main.py
    from src.utils import helper

    print(helper())  # I am a helper function

    # src/utils.py
    def helper():
        return "I am a helper function"

    $ uv run python scripts/main.py
    Traceback (most recent call last):
      File "/Users/miay/uv_test/default/scripts/main.py", line 2, in <module>
        from src.utils import helper
    ModuleNotFoundError: No module named 'src'

You can solve this problem with some path manipulation, but this is fragile and also frowned upon. I will spend the rest of this article giving you a recipe and some best practices for solving this problem with uv in such a way that you will hopefully never have to experience this problem again…
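The reason for the error above is that, when a script is run by path, Python puts the script's own directory (not the directory you launched it from) at the front of sys.path. The following sketch, with a hypothetical throwaway project layout, runs a tiny script from a scripts folder and compares sys.path[0] with the project root:

```python
import os
import subprocess
import sys
import tempfile


def first_path_entry_for_script() -> tuple[str, str, str]:
    """Run scripts/main.py and return (sys.path[0], scripts dir, project root)."""
    with tempfile.TemporaryDirectory() as tmp:
        root = os.path.realpath(tmp)
        scripts = os.path.join(root, "scripts")
        os.mkdir(scripts)
        script = os.path.join(scripts, "main.py")
        with open(script, "w") as f:
            f.write("import sys\nprint(sys.path[0])\n")

        # Drop PYTHONSAFEPATH so the default sys.path behaviour is observed.
        env = {k: v for k, v in os.environ.items() if k != "PYTHONSAFEPATH"}
        result = subprocess.run(
            [sys.executable, script],
            cwd=root,  # launched from the project root, like in the article
            env=env,
            capture_output=True,
            text=True,
            check=True,
        )
        reported = os.path.realpath(result.stdout.strip())
        return reported, scripts, root


reported, scripts_dir, project_root = first_path_entry_for_script()
print("sys.path[0] is the scripts folder:", reported == scripts_dir)
print("sys.path[0] is the project root:  ", reported == project_root)
```

Since the project root is not on the import path, a sibling folder such as src cannot be found from a script inside scripts.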

What is uv?

uv is a powerful and fast Python package and project manager designed as a successor to traditional tools like pip and virtualenv. I’ve found it particularly helpful in providing fast package resolution and seamless environment management, improving my overall development efficiency. It is the closest thing we have to a unified and community accepted way of setting up and managing Python projects.

Astral is doing a great job of both respecting and serving the community, and I hope that we finally have a good chance of putting the old turf wars about the best Python tool behind us and concentrating on the things that really matter: Writing good code!

Setting Up Your Python Project Using uv

Step 1: Installation

The entry point to uv is quite simple. First, you follow the installation instructions provided by the official documentation for your operating system.

In my case, as I am on macOS and like to use Homebrew wherever possible, it comes down to a single command: brew install uv.

And the really great thing here about uv is: You don’t need to install Python first to install uv! You can install uv in an active Python environment using pip install uv, but you shouldn’t. The reason is simple: uv is written in Rust and is meant to be a self-contained tool. You do not want to be dependent on a specific Python version and you want to use uv without the overhead or potential conflicts introduced by pip’s Python ecosystem.

It is much better to have uv available as a global command line tool that lives outside your Python setup. And that is what you get by installing it via curl, wget, brew or any other way except using pip.

Step 2: Creating a Project

Instead of creating folders manually, I use the uv init command to efficiently set up my project. This command already has a lot of helpful parameters, so let’s try them out to understand what the different options mean for the actual project structure.

The basic command

    $ uv init

sets up your current folder as a Python project. What does this mean? Well, create an empty folder and try it out. After that you can always run the tree command (if that is available to you) to see a nice tree view of your project structure. In my case, I get the following output for an empty folder initialized with uv:

    $ tree -a -L 1
    .
    ├── .git
    ├── .gitignore
    ├── .python-version
    ├── README.md
    ├── hello.py
    ├── pyproject.toml
    └── uv.lock

As you can see, uv init has already taken care of a number of things and provided us with a good starting point. Specifically, it did the following:

- Initialising a git repository,

- Recording the current Python version with a .python-version file, which will be used by other developers (or our future selves) to initialise the project with the same Python version as we have used,

- Creating a README.md file,

- Providing a starting point for development with the hello.py module,

- Providing a way to manage your project’s metadata with the pyproject.toml file, and finally,

- Resolving the exact versions of the dependencies with the uv.lock file (currently mostly empty, but it does contain some information about the required Python version).

The pyproject.toml file is special in a number of ways. One important thing to know is that uv recognises a uv-managed project by detecting and inspecting the pyproject.toml file. As always, check the documentation for more information.

Let’s understand the uv init command a little better. As I said, uv init initialises a Python project in the current working directory as the root folder for the project. You can specify a project folder with uv init <project-name>, which will give you a new folder in your current working directory named after your project and with the same contents as we discussed earlier.

However, I want to develop a package, and uv supports this use case directly with the --package option:

    $ uv init --package my_package
    Initialized project `my-package` at `/Users/miay/uv_test/my_package`

Looking again at the project structure (I will not include hidden files and folders from now on),

    $ tree
    .
    ├── README.md
    ├── pyproject.toml
    └── src
        └── my_package
            └── __init__.py

there is an interesting change compared to the uv init command without the --package option: Instead of a hello.py module in the project’s root directory, we have a src folder containing a Python package named after our project (because of the __init__.py file, I guess you remember that about packages, don’t you?).

uv does one more thing so let’s have a look at the pyproject.toml file:

[project]

name = "my-package"

version = "0.1.0"

description = "Add your description here"

readme = "README.md"

authors = [

{ name = "Michael Aydinbas", email = "michael.aydinbas@gmail.com" }

]

requires-python = ">=3.13"

dependencies = []

[project.scripts]

my-package = "my_package:main"

[build-system]

requires = ["hatchling"]

build-backend = "hatchling.build"

As well as the “usual” things you would expect to see here, there is also a build-system section, which is only present when using the --package option. It instructs uv to install the code under my_package into the virtual environment managed by uv. In other words, uv will install your package and all its dependencies into the virtual environment so that it can be used by all other scripts and modules, wherever they are.

Let’s summarise the different options. However, for our purposes, we will always use the --package option when working with packages.

| Option | Description |
| --- | --- |
| --app | Create a project for an application. This is the default if nothing else is specified. |
| --bare | Only create a pyproject.toml and nothing else. |
| --lib | Create a project for a library. A library is a project that is intended to be built and distributed as a Python package. Works similarly to --package but creates an additional py.typed marker file, used to support typing in a package (based on PEP 561). |
| --package | Set up the project to be built as a Python package. Defines a [build-system] for the project. This is the default behavior when using --lib or --build-backend. Includes a [project.scripts] entrypoint and uses a src/ project structure. |
| --script | Create a script: a standalone file with embedded metadata listing its dependencies and Python version requirements (based on PEP 723). |

Step 3: Managing the Virtual Environment

This section is quite short.

You can create the virtual environment by running

    $ uv venv
    Using CPython 3.13.2 interpreter at: /opt/homebrew/Caskroom/miniforge/base/bin/python
    Creating virtual environment at: .venv
    Activate with: source .venv/bin/activate

Note that uv venv only creates the environment itself. To install the defined dependencies and the source code developed under src, run uv sync, which also creates the virtual environment if there is none and synchronises it with the latest dependencies from the pyproject.toml file.

Step 4: Testing Package Functionalities

Congratulations, you’ve basically reached the point from where it doesn’t really matter where you want to import the code you’re developing under my_package, because it’s installed in the virtual environment and thus known to all Python modules, no matter where they are in the file system. Your package is basically like any other third-party or system package.

To see this in action, let’s demonstrate the two main use cases: testing our package with pytest and importing our package into some scripts in the scripts folder. First, the project structure:

    $ tree
    .
    ├── scripts
    │   └── main.py
    ├── src
    │   └── my_package
    │       ├── __init__.py
    │       └── utils.py
    └── tests
        └── test_utils.py

    # scripts/main.py
    from my_package.utils import helper

    def main():
        print(helper())

    if __name__ == "__main__":
        main()

    # src/my_package/utils.py
    def helper():
        return "helper"

    # tests/test_utils.py
    from my_package.utils import helper

    def test_helper():
        assert helper() == "helper"

Note what happens when you create or update your venv:

    $ uv sync
    Resolved 1 package in 9ms
       Built my-package @ file:///Users/miay/uv_test/my_package
    Prepared 1 package in 514ms
    Installed 1 package in 1ms
     + my-package==0.1.0 (from file:///Users/miay/uv_test/my_package)

See that last line? uv installs the package my-package along with all other dependencies, so it can be used in any other Python script or module just like any other dependency or library.

As a first step, I am adding pytest as a dev dependency, meaning it will be added in a separate section in the pyproject.toml file as it is meant for development and not needed to run the actual package. You will notice that uv add is not only adding the dependency but also installing it at the same time. With pytest being available, we can run the tests:

    $ uv add --dev pytest
    $ uv run pytest
    ============================= test session starts ==============================
    platform darwin -- Python 3.13.2, pytest-8.3.5, pluggy-1.5.0
    rootdir: /Users/miay/uv_test/my_package
    configfile: pyproject.toml
    collected 1 item

    tests/test_utils.py .                                                    [100%]

    ============================== 1 passed in 0.01s ===============================

Running scripts/main.py:

    $ uv run scripts/main.py
    helper

Everything works the same and effortlessly.

What happens if I update my package code? Do I have to run uv sync again? Actually, no. It turns out that uv sync installs the package in the same way as uv pip install -e . would.

Editable install means that changes to the source directory will immediately affect the installed package, without the need to reinstall. Just change your source code and try it out again:

    # src/my_package/utils.py
    def helper():
        return "helper function"

    $ uv run scripts/main.py
    helper function

    $ uv run pytest
    ============================= test session starts ==============================
    platform darwin -- Python 3.13.2, pytest-8.3.5, pluggy-1.5.0
    rootdir: /Users/miay/uv_test/my_package
    configfile: pyproject.toml
    collected 1 item

    tests/test_utils.py F                                                    [100%]

    =================================== FAILURES ===================================
    _________________________________ test_helper __________________________________

        def test_helper():
    >       assert helper() == "helper"
    E       AssertionError: assert 'helper function' == 'helper'
    E
    E         - helper
    E         + helper function

    tests/test_utils.py:5: AssertionError

The main script now prints the new return value of the helper() function from the utils module of the my_package package, without the need to reinstall the package first. Likewise, the tests now fail, because they still expect the old return value.

On a final note: Can you use uv with a monorepo setup? This means that uv is used to manage several packages in the same repository. It seems possible, although I have not tried it out, and there are good resources on how to get started using the concept of workspaces.

I hope you have enjoyed following me on this little journey and, as always, I welcome your comments, discussions and questions.

Keep Calm and Code in Python!

Real Python: Quiz: Python Code Quality: Best Practices and Tools

In this quiz, you’ll test your understanding of Python Code Quality: Tools & Best Practices.

By working through this quiz, you’ll revisit the importance of producing high-quality Python code that’s functional, readable, maintainable, efficient, and secure. You’ll also review how to use tools such as linters, formatters, and profilers to help achieve these qualities.

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

Real Python: Python Code Quality: Best Practices and Tools

Producing high-quality Python code involves using appropriate tools and consistently applying best practices. High-quality code is functional, readable, maintainable, efficient, and secure. It adheres to established standards and has excellent documentation.

You can achieve these qualities by following best practices such as descriptive naming, consistent coding style, modular design, and robust error handling. To help you with all this, you can use tools such as linters, formatters, and profilers.

By the end of this tutorial, you’ll understand that:

- Checking the quality of Python code involves using tools like linters and static type checkers to ensure adherence to coding standards and detect potential errors.

- Writing quality code in Python requires following best practices, such as clear naming conventions, modular design, and comprehensive testing.

- Good Python code is characterized by readability, maintainability, efficiency, and adherence to standards like PEP 8.

- Making Python code look good involves using formatters to ensure consistent styling and readability, aligning with established coding styles.

- Making Python code readable means using descriptive names for variables, functions, classes, modules, and packages.

Read on to learn more about the strategies, tools, and best practices that will help you write high-quality Python code.


Defining Code Quality

Of course you want quality code. Who wouldn’t? But what is code quality? It turns out that the term can mean different things to different people.

One way to approach code quality is to look at the two ends of the quality spectrum:

- Low-quality code: It has the minimal required characteristics to be functional.

- High-quality code: It has all the necessary characteristics that make it work reliably, efficiently, and effectively, while also being straightforward to maintain.

In the following sections, you’ll learn about these two quality classifications and their defining characteristics in more detail.

Low-Quality Code

Low-quality code typically has only the minimal required characteristics to be functional. It may not be elegant, efficient, or easy to maintain, but at the very least, it meets the following basic criteria:

- It does what it’s supposed to do. If the code doesn’t meet its requirements, then it isn’t quality code. You build software to perform a task. If it fails to do so, then it can’t be considered quality code.

- It doesn’t contain critical errors. If the code has issues and errors or causes you problems, then you probably wouldn’t call it quality code. If it’s too low-quality and becomes unusable, then it falls below even basic quality standards and you may stop using it altogether.

While simplistic, these two characteristics are generally accepted as the baseline of functional but low-quality code. Low-quality code may work, but it often lacks readability, maintainability, and efficiency, making it difficult to scale or improve.

High-Quality Code

Now, here’s an extended list of the key characteristics that define high-quality code:

- Functionality: Works as expected and fulfills its intended purpose.

- Readability: Is easy for humans to understand.

- Documentation: Clearly explains its purpose and usage.

- Standards Compliance: Adheres to conventions and guidelines, such as PEP 8.

- Reusability: Can be used in different contexts without modification.

- Maintainability: Allows for modifications and extensions without introducing bugs.

- Robustness: Handles errors and unexpected inputs effectively.

- Testability: Can be easily verified for correctness.

- Efficiency: Optimizes time and resource usage.

- Scalability: Handles increased data loads or complexity without degradation.

- Security: Protects against vulnerabilities and malicious inputs.

In short, high-quality code is functional, readable, maintainable, and robust. It follows best practices, including clear naming, consistent coding style, modular design, proper error handling, and adherence to coding standards. It’s also well-documented and easy to test and scale. Finally, high-quality code is efficient and secure, ensuring reliability and safe use.

All the characteristics above allow developers to understand, modify, and extend a Python codebase with minimal effort.
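As a small illustration of the two ends of the spectrum, here is a hypothetical example (not taken from the tutorial) of the same function written twice. Both versions compute an average, but only the second one has descriptive names, type hints, documentation, and explicit handling of an edge case.

```python
# Low-quality version: works for the happy path, but the names say little
# and an empty list crashes with an unhelpful ZeroDivisionError.
def avg(l):
    return sum(l) / len(l)


# Higher-quality version: descriptive names, type hints, a docstring,
# and explicit handling of the empty-input edge case.
def calculate_average(values: list[float]) -> float:
    """Return the arithmetic mean of values.

    Raises:
        ValueError: If values is empty.
    """
    if not values:
        raise ValueError("values must contain at least one number")
    return sum(values) / len(values)


print(calculate_average([2.0, 4.0, 6.0]))  # 4.0
```

Both functions are functional, but the second is easier to read, test, and maintain.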

The Importance of Code Quality

To understand why code quality matters, you’ll revisit the characteristics of high-quality code from the previous section and examine their impact:

- Functional code: Ensures correct behavior and expected outcomes.

- Readable code: Makes understanding and maintaining code easier.

- Documented code: Clarifies the correct and recommended way for others to use it.

- Compliant code: Promotes consistency and allows collaboration.

- Reusable code: Saves time by allowing code reuse.

- Maintainable code: Supports updates, improvements, and extensions with ease.

- Robust code: Minimizes crashes and produces fewer edge-case issues.

- Testable code: Simplifies verification of correctness through code testing.

- Efficient code: Runs faster and conserves system resources.

- Scalable code: Supports growing projects and increasing data loads.

- Secure code: Provides safeguards against system loopholes and compromised inputs.

Code quality matters because high-quality code is easier to understand, modify, and extend over time. It leads to faster debugging, smoother feature development, reduced costs, and better user satisfaction while ensuring security and scalability.

Read the full article at https://realpython.com/python-code-quality/ »


Python Bytes: #425 If You Were a Klingon Programmer

Talk Python to Me: #498: Algorithms for high performance terminal apps

Wingware: Wing Python IDE Version 10.0.9 - March 24, 2025

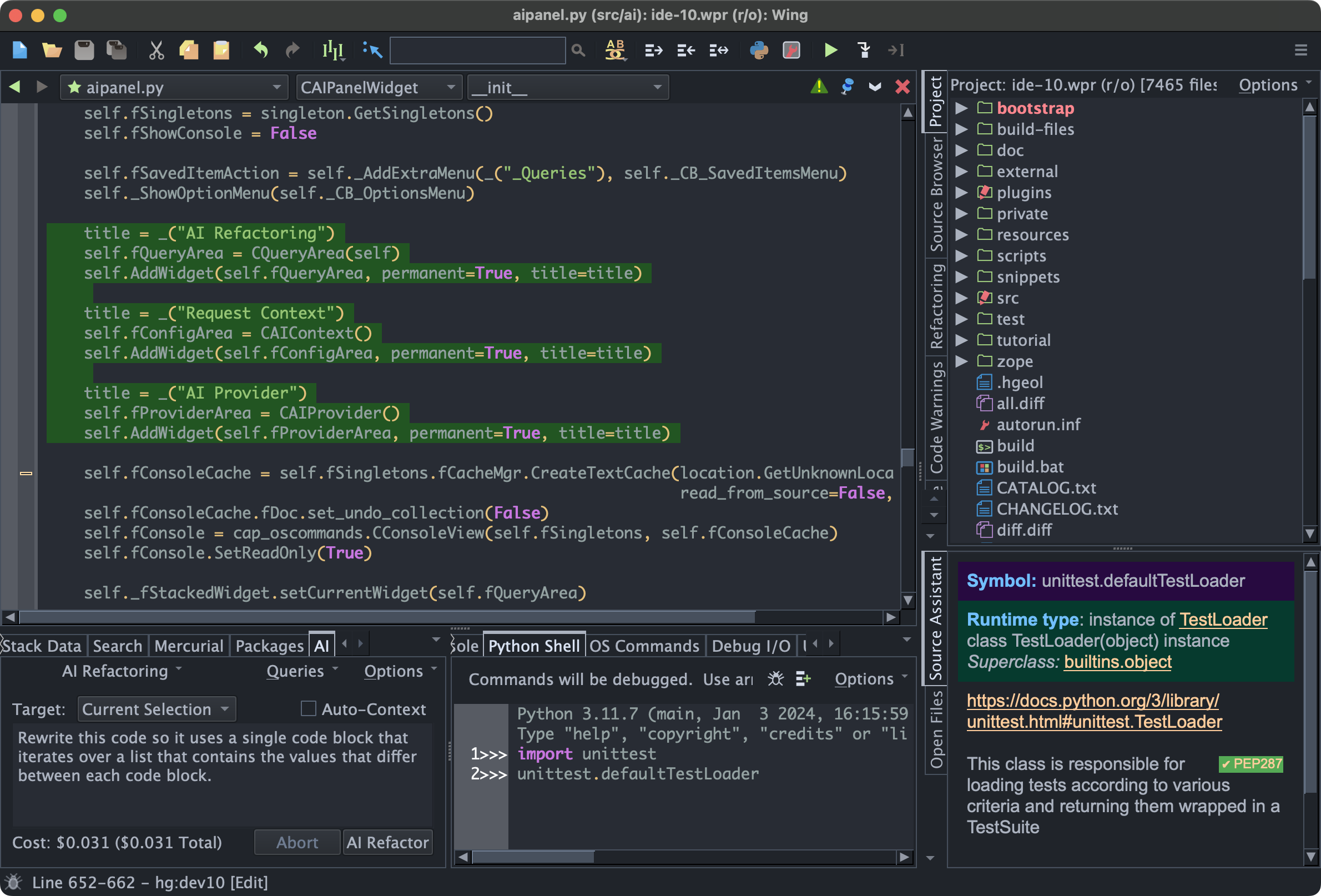

Wing 10.0.9 fixes usability issues with AI supported development, selects text and shows callouts when visiting Find Uses matches, adds User Interface > Fonts > Editor Line Spacing preference, avoids spurious syntax errors on type annotation comments, increases allowed length for Image ID in the Container dialog, and fixes other minor bugs and usability issues.

See the change log for details.

Download Wing 10 Now: Wing Pro | Wing Personal | Wing 101 | Compare Products

What's New in Wing 10

AI Assisted Development

Wing Pro 10 takes advantage of recent advances in the capabilities of generative AI to provide powerful AI assisted development, including AI code suggestion, AI driven code refactoring, description-driven development, and AI chat. You can ask Wing to use AI to (1) implement missing code at the current input position, (2) refactor, enhance, or extend existing code by describing the changes that you want to make, (3) write new code from a description of its functionality and design, or (4) chat in order to work through understanding and making changes to code.

Examples of requests you can make include:

- "Add a docstring to this method"

- "Create unit tests for class SearchEngine"

- "Add a phone number field to the Person class"

- "Clean up this code"

- "Convert this into a Python generator"

- "Create an RPC server that exposes all the public methods in class BuildingManager"

- "Change this method to wait asynchronously for data and return the result with a callback"

- "Rewrite this threaded code to instead run asynchronously"

Yes, really!

Your role changes to one of directing an intelligent assistant capable of completing a wide range of programming tasks in relatively short periods of time. Instead of typing out code by hand every step of the way, you are essentially directing someone else to work through the details of manageable steps in the software development process.

Support for Python 3.12, 3.13, and ARM64 Linux

Wing 10 adds support for Python 3.12 and 3.13, including (1) faster debugging with PEP 669 low impact monitoring API, (2) PEP 695 parameterized classes, functions and methods, (3) PEP 695 type statements, and (4) PEP 701 style f-strings.

Wing 10 also adds support for running Wing on ARM64 Linux systems.

Poetry Package Management

Wing Pro 10 adds support for Poetry package management in the New Project dialog and the Packages tool in the Tools menu. Poetry is an easy-to-use cross-platform dependency and package manager for Python, similar to pipenv.

Ruff Code Warnings & Reformatting

Wing Pro 10 adds support for Ruff as an external code checker in the CodeWarnings tool, accessed from the Tools menu. Ruff can also be used as a code reformatter in the Source>Reformatting menu group. Ruff is an incredibly fast Python code checker that can replace or supplement flake8, pylint, pep8, and mypy.

Try Wing 10 Now!

Wing 10 is a ground-breaking new release in Wingware's Python IDE product line. Find out how Wing 10 can turbocharge your Python development by trying it today.

Downloads: Wing Pro | Wing Personal | Wing 101 | Compare Products

See Upgrading for details on upgrading from Wing 9 and earlier, and Migrating from Older Versions for a list of compatibility notes.

Hugo van Kemenade: Free-threaded Python on GitHub Actions

GitHub Actions now supports experimental free-threaded CPython!

There are three ways to add it to your test matrix:

- actions/setup-python: t suffix

- astral-sh/setup-uv: t suffix

- actions/setup-python: freethreaded variable

actions/setup-python: t suffix

Using actions/setup-python, you can add the t suffix for Python versions 3.13 and higher: 3.13t and 3.14t.

This is my preferred method: we can clearly see which versions are free-threaded, and it’s straightforward to test both regular and free-threaded builds.

    on: [push, pull_request, workflow_dispatch]

    jobs:
      build:
        runs-on: ${{ matrix.os }}
        strategy:
          fail-fast: false
          matrix:
            python-version: [
                "3.13",
                "3.13t", # add this!
                "3.14",
                "3.14t", # add this!
              ]
            os: ["windows-latest", "macos-latest", "ubuntu-latest"]

        steps:
          - uses: actions/checkout@v4

          - name: Set up Python ${{ matrix.python-version }}
            uses: actions/setup-python@v5
            with:
              python-version: ${{ matrix.python-version }}
              allow-prereleases: true # needed for 3.14

          - run: |
              python --version --version
              python -c "import sys; print('sys._is_gil_enabled:', sys._is_gil_enabled())"
              python -c "import sysconfig; print('Py_GIL_DISABLED:', sysconfig.get_config_var('Py_GIL_DISABLED'))"

Regular builds will output something like:

Python 3.14.0a6 (main, Mar 17 2025, 02:44:29) [GCC 13.3.0]

sys._is_gil_enabled: True

Py_GIL_DISABLED: 0

And free-threaded builds will output something like:

Python 3.14.0a6 experimental free-threading build (main, Mar 17 2025, 02:44:30) [GCC 13.3.0]

sys._is_gil_enabled: False

Py_GIL_DISABLED: 1

For example: hugovk/test/actions/runs/14057185035
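The same two checks used in the workflow can be wrapped in a small helper for your own test suite or debugging output. This is only a sketch: the function name describe_gil is made up, and on Python versions before 3.13, where sys._is_gil_enabled() does not exist, it assumes the GIL is enabled.

```python
import sys
import sysconfig


def describe_gil() -> dict[str, bool]:
    """Report whether this is a free-threaded build and whether the GIL is on."""
    # Py_GIL_DISABLED is 1 on free-threaded builds, 0 or None otherwise.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() only exists on Python 3.13+; assume the GIL is
    # enabled on older versions.
    check = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = bool(check()) if check is not None else True
    return {"free_threaded_build": free_threaded_build, "gil_enabled": gil_enabled}


print(describe_gil())
```

Note that a free-threaded build can still report the GIL as enabled, for example after importing an extension module that does not support running without it.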

astral-sh/setup-uv: t suffix

Similarly, you can install uv with astral-sh/setup-uv and use that to set up free-threaded Python using the t suffix.

    on: [push, pull_request, workflow_dispatch]

    jobs:
      build:
        runs-on: ${{ matrix.os }}
        strategy:
          fail-fast: false
          matrix:
            python-version: [
                "3.13",
                "3.13t", # add this!
                "3.14",
                "3.14t", # add this!
              ]
            os: ["windows-latest", "macos-latest", "ubuntu-latest"]

        steps:
          - uses: actions/checkout@v4

          - name: Set up Python ${{ matrix.python-version }}
            uses: astral-sh/setup-uv@v5 # change this!
            with:
              python-version: ${{ matrix.python-version }}
              enable-cache: false # only needed for this example with no dependencies

          - run: |
              python --version --version
              python -c "import sys; print('sys._is_gil_enabled:', sys._is_gil_enabled())"
              python -c "import sysconfig; print('Py_GIL_DISABLED:', sysconfig.get_config_var('Py_GIL_DISABLED'))"

For example: hugovk/test/actions/runs/13967959519

actions/setup-python: freethreaded variable

Back to actions/setup-python, you can also set the freethreaded variable for 3.13 and higher.

    on: [push, pull_request, workflow_dispatch]

    jobs:
      build:
        runs-on: ${{ matrix.os }}
        strategy:
          fail-fast: false
          matrix:
            python-version: ["3.13", "3.14"]
            os: ["windows-latest", "macos-latest", "ubuntu-latest"]

        steps:
          - uses: actions/checkout@v4

          - name: Set up Python ${{ matrix.python-version }}
            uses: actions/setup-python@v5
            with:
              python-version: ${{ matrix.python-version }}
              allow-prereleases: true # needed for 3.14
              freethreaded: true # add this!

          - run: |
              python --version --version
              python -c "import sys; print('sys._is_gil_enabled:', sys._is_gil_enabled())"
              python -c "import sysconfig; print('Py_GIL_DISABLED:', sysconfig.get_config_var('Py_GIL_DISABLED'))"

For example: hugovk/test/actions/runs/39359291708

PYTHON_GIL=0

And you may want to set PYTHON_GIL=0 to force Python to keep the GIL disabled, even after importing a module that doesn’t support running without it.

See Running Python with the GIL Disabled for more info.

With the t suffix:

    - name: Set PYTHON_GIL
      if: endsWith(matrix.python-version, 't')
      run: |
        echo "PYTHON_GIL=0" >> $GITHUB_ENV

With the freethreaded variable:

    - name: Set PYTHON_GIL
      if: "${{ matrix.freethreaded }}"
      run: |
        echo "PYTHON_GIL=0" >> $GITHUB_ENV

Please test!

For free-threaded Python to succeed and become the default, it’s essential there is ecosystem and community support. Library maintainers: please test it and where needed, adapt your code, and publish free-threaded wheels so others can test their code that depends on yours. Everyone else: please test your code too!

See also

- Help us test free-threaded Python without the GIL for other ways to test and how to check your build

- Python free-threading guide

- actions/setup-python#973

- actions/setup-python@v5.5.0

Header photo: “Spinning Room, Winding Bobbins with Woolen Yarn for Weaving, Philadelphia, PA” by Library Company of Philadelphia, with no known copyright restrictions.

Seth Michael Larson: Don't bring slop to a slop fight

Whenever I talk about generative AI slop being sent into every conceivable communication platform I see a common suggestion on how to stop the slop from reaching human eyes:

“Just use AI to detect the AI”

We're already seeing companies offer this arrangement as a service. Just a few days ago Cloudflare announced they would use generative AI to create an infinite "labyrinth" for trapping AI crawlers in pages of content and links.

This suggestion is flawed because doing so props up the real problem: generative AI is heavily subsidized. In reality generative AI is so expensive we're talking about restarting nuclear and coal power plants and reopening copper mines, people. There is no universe in which this service should allow users to run queries without even a credit card on file.

Today this subsidization is mostly done by venture capital who want to see the technology integrated into as many verticals as possible. The same strategy was used for Uber and WeWork where venture capital allowed those companies to undercut competition to have wider adoption and put competitors out of business.

So using AI to detect and filter AI content just means that there'll be even more generative AI in use, not less. This isn't the signal we want to send to the venture capitalists who are deciding whether to offer these companies more investment money. We want that "monthly active user" (MAU) graph to be flattening or decreasing.

We got a sneak peek at the real price of generative AI from OpenAI where a future top-tier model (as of March 5th, 2025) is supposedly going to be $20,000 USD per month.

That sounds more like it. The sooner we get to unsubsidized generative AI pricing the better off we'll all be, including the planet. So let's hold out for that future and think asymmetrically, not symmetrically, on methods to make generative AI slop not viable until we get there.

Real Python: What Can You Do With Python?

You’ve finished a course or finally made it to the end of a book that teaches you the basics of programming with Python. You’ve learned about variables, lists, tuples, dictionaries, for and while loops, conditional statements, object-oriented concepts, and more. So, what’s next? What can you do with Python nowadays?

Python is a versatile programming language with many use cases in a variety of different fields. If you’ve grasped the basics of Python and are itching to build something with the language, then it’s time to figure out what your next step should be.

In this video course, you’ll see how you can use Python for:

- Doing general software development

- Diving into data science and math

- Speeding up and automating your workflow

- Building embedded systems and robots


PyCon: Call for Volunteers: PyCon US Code of Conduct Team

We are looking for volunteers to join the Code of Conduct Team for PyCon US 2025 in Pittsburgh, PA. The Code of Conduct Team supports the PyCon US community by taking reports should anyone violate the PyCon US Code of Conduct and, when appropriate, participating in deciding how PyCon US should respond.

Code of Conduct Team shifts are 3-4 hours long. We are looking for volunteers for the tutorials (May 14 - 15), the main conference (May 16 - 18), and the first 2 days of sprints (May 19 - 20), and ask that you be prepared to do a minimum of two shifts.

As a member of the Code of Conduct Team, you will:

- Take reports of incidents that occur at PyCon US (there’s a handy form for this)

- Keep track of your email and/or Slack while on shift

- Participate in discussions about how to respond to incidents (as needed)

As a member of the Code of Conduct Team, you will not:

- Have to make any tough decisions on your own

- Have to approach anyone you are uncomfortable approaching

- Have to address incidents that involve your friends

In addition to the minimum two shifts, you will need to attend a 90-minute training session, either virtually before PyCon US or in person on May 14th or 16th.

We are looking for people with a wide range of experiences in and outside of the Python community! We are especially interested in talking with you if you:

- Have experience with code of conduct response, moderation, etc.

- Speak multiple languages (especially Spanish)

- Are from outside the United States

If you are interested in joining us on the Code of Conduct Team, either fill out this form or email molly.deblanc@pyfound.org.

TechBeamers Python: 10 Viral Tips to Learn Python Instantly 🚀

PyCoder’s Weekly: Issue #674: LangGraph, Marimo, Django Template Components, and More (March 25, 2025)

#674 – MARCH 25, 2025


LangGraph: Build Stateful AI Agents in Python

LangGraph is a versatile Python library designed for stateful, cyclic, and multi-actor Large Language Model (LLM) applications. This tutorial will give you an overview of LangGraph fundamentals through hands-on examples, and the tools needed to build your own LLM workflows and agents in LangGraph.

REAL PYTHON

Reinventing Notebooks as Reusable Python Programs

Marimo is a Jupyter replacement that uses Python as its source instead of JSON, solving a lot of issues with notebooks. This article shows you why you might switch to marimo.

AKSHAY, MYLES, & MADISETTI

How to Build AI Agents With Python & Temporal

Join us on April 3 at 9am PST/12pm EST to learn how Temporal’s Python SDK powers agentic AI workflow creation. We’ll start by covering how Temporal lets you orchestrate agentic AI, then transition to a live demo →

TEMPORAL sponsor

Django Template Components Are Slowly Coming

Django 5.2 brings the Simple Block tag which is very similar to React children, allowing templated components. This post shows several examples from Andy’s own code.

ANDREW MILLER

Articles & Tutorials

A Decade of Automating the Boring Stuff With Python

What goes into updating one of the most popular books about working with Python? After a decade of changes in the Python landscape, what projects, libraries, and skills are relevant to an office worker? This week on the show, we speak with previous guest Al Sweigart about the third edition of “Automate the Boring Stuff With Python.”

REAL PYTHON podcast

PyCon US: Travel Grants & Refund Policy

PyCon US offers travel grants to visitors. This post explains how they are decided. With changing border requirements in the US, you may also be interested in the Refund Policy for International Attendees.

PYCON.BLOGSPOT.COM

Using Structural Pattern Matching in Python

In this video course, you’ll learn how to harness the power of structural pattern matching in Python. You’ll explore the new syntax, delve into various pattern types, and find appropriate applications for pattern matching, all while identifying common pitfalls.

REAL PYTHON course

Smoke Test Your Django Admin Site

When writing code that uses the Django Admin, sometimes you forget to match things up. Since it is the Admin, who tests that? That doesn’t mean it won’t fail. This post shows you a general pytest function for checking that empty Admin pages work correctly.

JUSTIN DUKE

Python’s Instance, Class, and Static Methods Demystified

In this tutorial, you’ll compare Python’s instance methods, class methods, and static methods. You’ll gain an understanding of when and how to use each method type to write clear and maintainable object-oriented code.

REAL PYTHON

I Fear for the Unauthenticated Web

A short opinion post by Seth on how companies scraping the web to build LLMs are causing real costs to users, with a suggestion that you implement billing limits on your services.

SETH M LARSON

Django Query Optimization: Defer, Only, and Exclude

Database queries are usually the bottlenecks of most web apps. To minimize the amount of data fetched, you can leverage Django’s defer(), only(), and exclude() methods.

TESTDRIVEN.IO • Shared by Michael Herman

How to Use Async Agnostic Decorators in Python

Using decorators in a codebase that has both synchronous and asynchronous functions poses many challenges. One solution is to use generators. This post shows you how.

PATREON • Shared by Patreon Engineering

PEP 779: Criteria for Supported Status for Free-Threaded Python

PEP 703 (Making the Global Interpreter Lock Optional in CPython) described three phases of development. This PEP outlines the criteria to move between phases.

PYTHON.ORG

uv overtakes Poetry

Wagtail, the Django-based CMS, tracks download statistics, including which installation tool was used. Recently, uv overtook Poetry. This post shows the stats.

THIBAUD COLAS

Using Pyinstrument to Profile FastHTML Apps

A quick post with instructions on how to add profiling to your FastHTML app with pyinstrument.

DANIEL ROY GREENFIELD

Projects & Code

Events

Weekly Real Python Office Hours Q&A (Virtual)

March 26, 2025

REALPYTHON.COM

SPb Python Drinkup

March 27, 2025

MEETUP.COM

Python Leiden User Group

March 27, 2025

PYTHONLEIDEN.NL

PyLadies Amsterdam: Introduction to BDD in Python

March 31, 2025

MEETUP.COM

Happy Pythoning!

This was PyCoder’s Weekly Issue #674.



Python GUIs: Multithreading PySide6 applications with QThreadPool — Run background tasks concurrently without impacting your UI

A common problem when building Python GUI applications is the interface "locking up" when attempting to perform long-running background tasks. In this tutorial, we'll cover quick ways to achieve concurrent execution in PySide6.

If you'd like to run external programs (such as command-line utilities) from your applications, check out the Using QProcess to run external programs tutorial.

Background: The frozen GUI issue

Applications based on Qt (like most GUI applications) are based on events. This means that execution is driven in response to user interaction, signals, and timers. In an event-driven application, clicking a button creates an event that your application subsequently handles to produce some expected output. Events are pushed onto and taken off an event queue and processed sequentially.
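As a rough illustration of what "processed sequentially" means, here is a minimal sketch of an event queue in plain Python. It is not how Qt implements its event loop; the names run_event_loop and handlers, and the event names, are hypothetical and exist only for this example.

```python
from collections import deque


def run_event_loop(events, handlers):
    """Process queued events one at a time, in arrival order."""
    queue = deque(events)
    log = []
    while queue:
        event = queue.popleft()  # take the oldest event off the queue
        handler = handlers.get(event)
        if handler is not None:
            # The next event is not looked at until this handler returns.
            log.append(handler())
    return log


# Hypothetical event names and handlers, for illustration only.
handlers = {
    "button_pressed": lambda: "handled button press",
    "timer_timeout": lambda: "handled timer tick",
}

log = run_event_loop(
    ["timer_timeout", "button_pressed", "timer_timeout"], handlers
)
print(log)
```

Because each handler has to return before the next event is taken off the queue, a slow handler delays everything queued behind it.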

In PySide6, we create an app with the following code:

app = QApplication([])
window = MainWindow()
app.exec()

The event loop starts when you call .exec() on the QApplication object and runs within the same thread as your Python code. The thread that runs this event loop, commonly referred to as the GUI thread, also handles all window communication with the host operating system.

By default, any execution triggered by the event loop will also run synchronously within this thread. In practice, this means that whenever your PySide6 application is busy doing something, communication with the window and interaction with the GUI are frozen.

If what you're doing is simple, and it returns control to the GUI loop quickly, the GUI freeze will be imperceptible to the user. However, if you need to perform longer-running tasks, for example, opening and writing a large file, downloading some data, or rendering a high-resolution image, there are going to be problems.

To your user, the application will appear to be unresponsive (because it is). Because your app is no longer communicating with the OS, on macOS, if you click on your app, you will see the spinning wheel of death. And, nobody wants that.

The solution is to move your long-running tasks out of the GUI thread into another thread. PySide6 provides a straightforward interface for this.
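The general idea can be sketched with nothing but Python's standard library. The example below is an analogy and does not use the PySide6 interface: it assumes concurrent.futures.ThreadPoolExecutor as a stand-in for a thread pool and a simple while loop as a stand-in for the GUI loop. The names long_running_task and run_loop_with_worker are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def long_running_task(duration):
    """Stand-in for slow work such as a download or a large file write."""
    time.sleep(duration)
    return "done"


def run_loop_with_worker(task_duration=0.3, tick=0.05):
    """Count how many loop iterations ("ticks") happen while the task runs."""
    ticks = 0
    with ThreadPoolExecutor(max_workers=1) as pool:
        # Hand the slow work to a worker thread instead of running it inline.
        future = pool.submit(long_running_task, task_duration)
        # The "main loop" keeps going while the worker is busy.
        while not future.done():
            ticks += 1
            time.sleep(tick)
        result = future.result()
    return ticks, result


ticks, result = run_loop_with_worker()
print(f"main loop ticked {ticks} times; task result: {result}")
```

If long_running_task were called directly inside the loop, no ticks would happen until it returned, which is the same effect as the frozen GUI described above.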

Preparation: A minimal stub app

To demonstrate multi-threaded execution, we need an application to work with. Below is a minimal stub application for PySide6 that will allow us to demonstrate multithreading and see the outcome in action. Simply copy and paste this into a new file and save it with an appropriate filename, like multithread.py. The remainder of the code will be added to this file. There is also a complete working example at the bottom if you're impatient:

import time

from PySide6.QtCore import (
    QTimer,
)
from PySide6.QtWidgets import (
    QApplication,
    QLabel,
    QMainWindow,
    QPushButton,
    QVBoxLayout,
    QWidget,
)


class MainWindow(QMainWindow):
    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)

        self.counter = 0

        layout = QVBoxLayout()

        self.label = QLabel("Start")
        button = QPushButton("DANGER!")
        button.pressed.connect(self.oh_no)

        layout.addWidget(self.label)
        layout.addWidget(button)

        w = QWidget()
        w.setLayout(layout)
        self.setCentralWidget(w)

        self.show()

        self.timer = QTimer()
        self.timer.setInterval(1000)
        self.timer.timeout.connect(self.recurring_timer)
        self.timer.start()

    def oh_no(self):
        time.sleep(5)

    def recurring_timer(self):
        self.counter += 1
        self.label.setText(f"Counter: {self.counter}")


app = QApplication([])
window = MainWindow()
app.exec()

Run the app as you would any other Python application:

$ python multithread.py